User Guide

AWS Schema Conversion Tool

Version 1.0.672

Copyright © 2024 Amazon Web Services, Inc. and/or its affiliates. All rights reserved.

AWS Schema Conversion Tool User Guide

AWS Schema Conversion Tool: User Guide

Copyright © 2024 Amazon Web Services, Inc. and/or its affiliates. All rights reserved.

Amazon's trademarks and trade dress may not be used in connection with any product or service

that is not Amazon's, in any manner that is likely to cause confusion among customers, or in any

manner that disparages or discredits Amazon. All other trademarks not owned by Amazon are

the property of their respective owners, who may or may not be affiliated with, connected to, or

sponsored by Amazon.

AWS Schema Conversion Tool User Guide

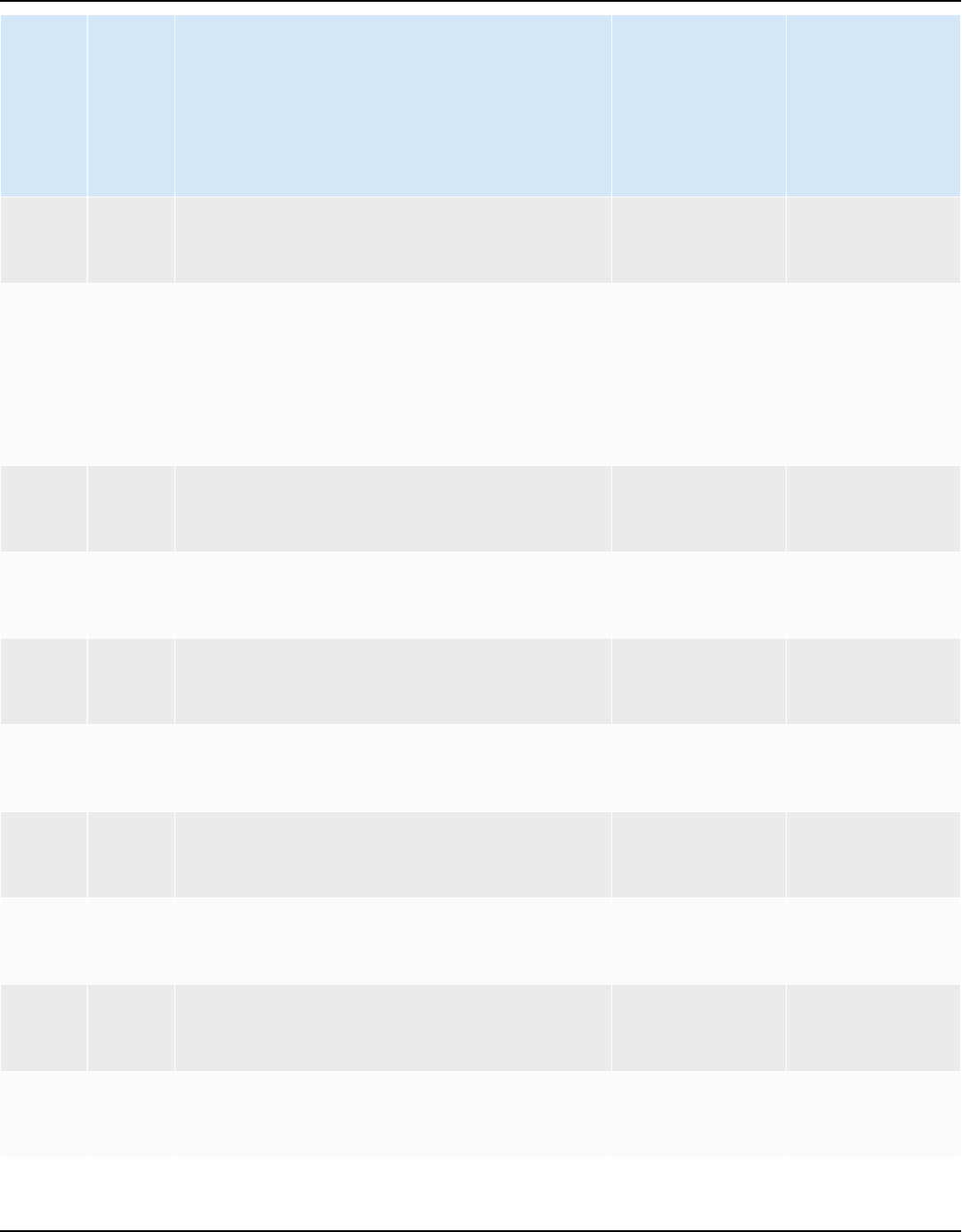

Table of Contents

What is AWS SCT ............................................................................................................................. 1

Schema conversion overview ..................................................................................................................... 5

Giving feedback ............................................................................................................................................ 6

Installing and Configuring AWS SCT .............................................................................................. 7

Installing AWS SCT ....................................................................................................................................... 7

Validating installation .................................................................................................................................. 9

Verifying the checksum of the AWS SCT file .................................................................................... 9

Verifying the AWS SCT RPM files on Fedora .................................................................................. 10

Verifying the AWS SCT DEB files on Ubuntu .................................................................................. 10

Verifying the AWS SCT MSI file on Microsoft Windows ................................................................ 11

Installing JDBC drivers .............................................................................................................................. 11

Installing JDBC drivers on Linux ........................................................................................................ 15

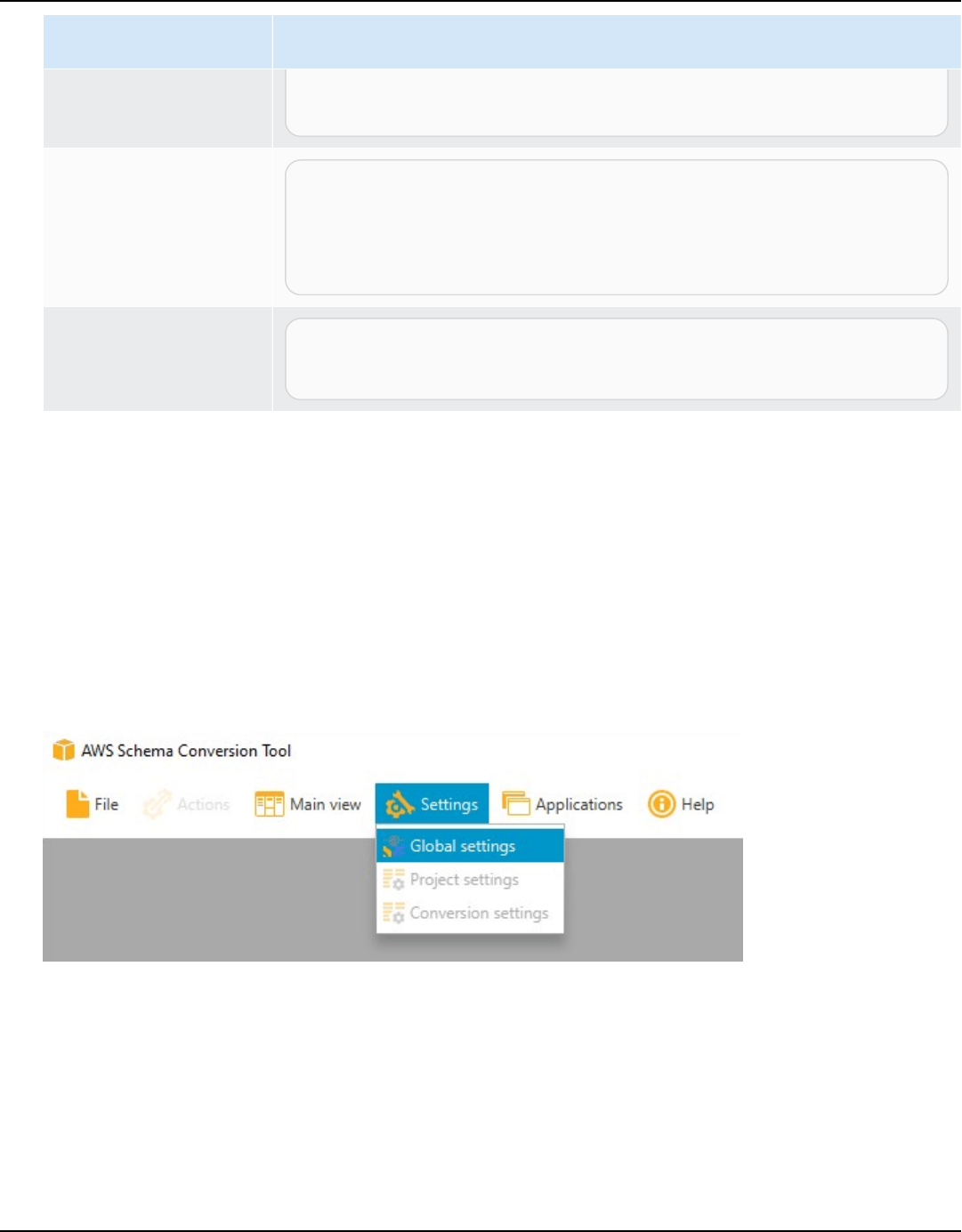

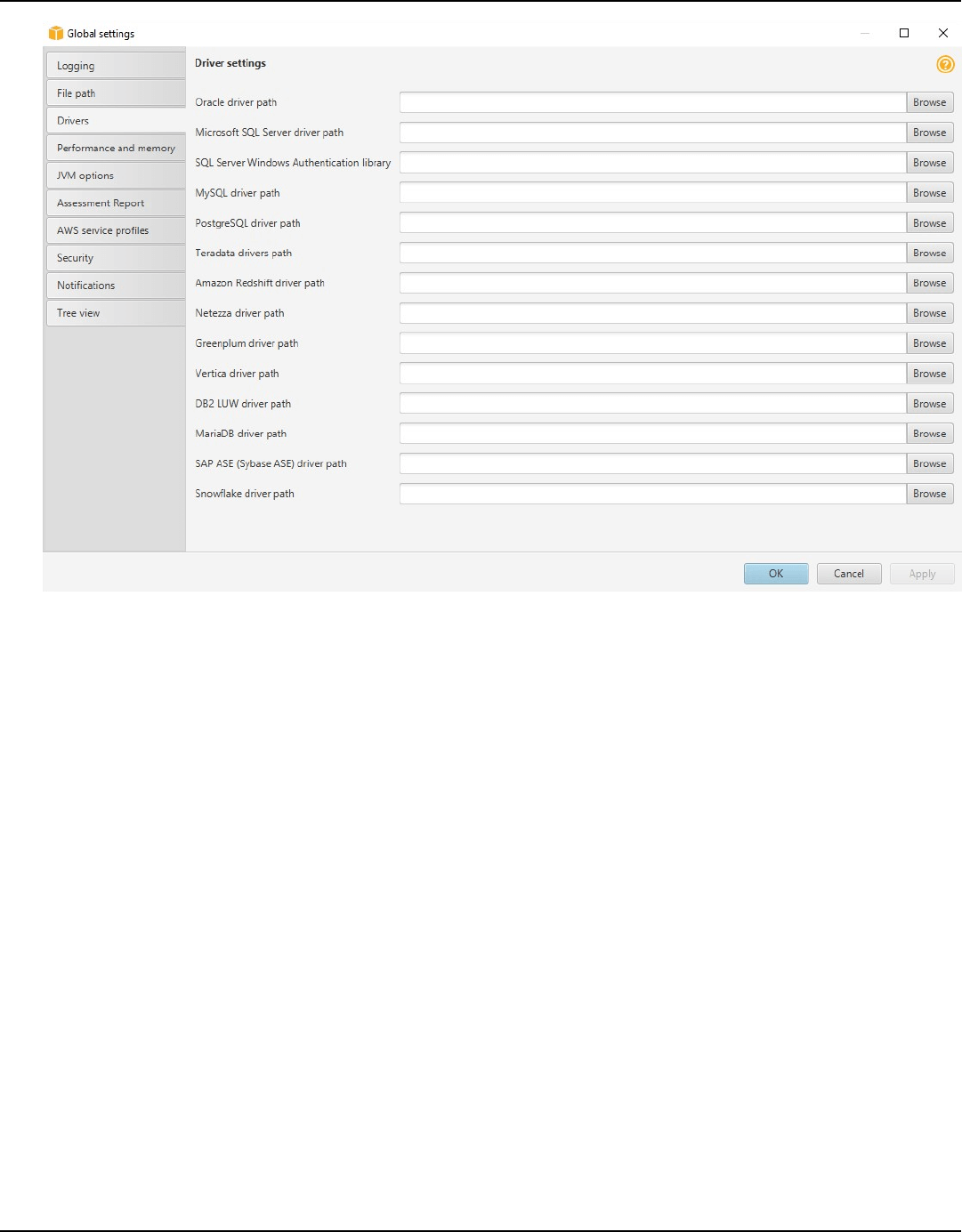

Storing driver paths in the global settings ..................................................................................... 16

Updating AWS SCT .................................................................................................................................... 17

AWS Schema Conversion Tool CLI .......................................................................................................... 18

AWS SCT user interface ................................................................................................................ 19

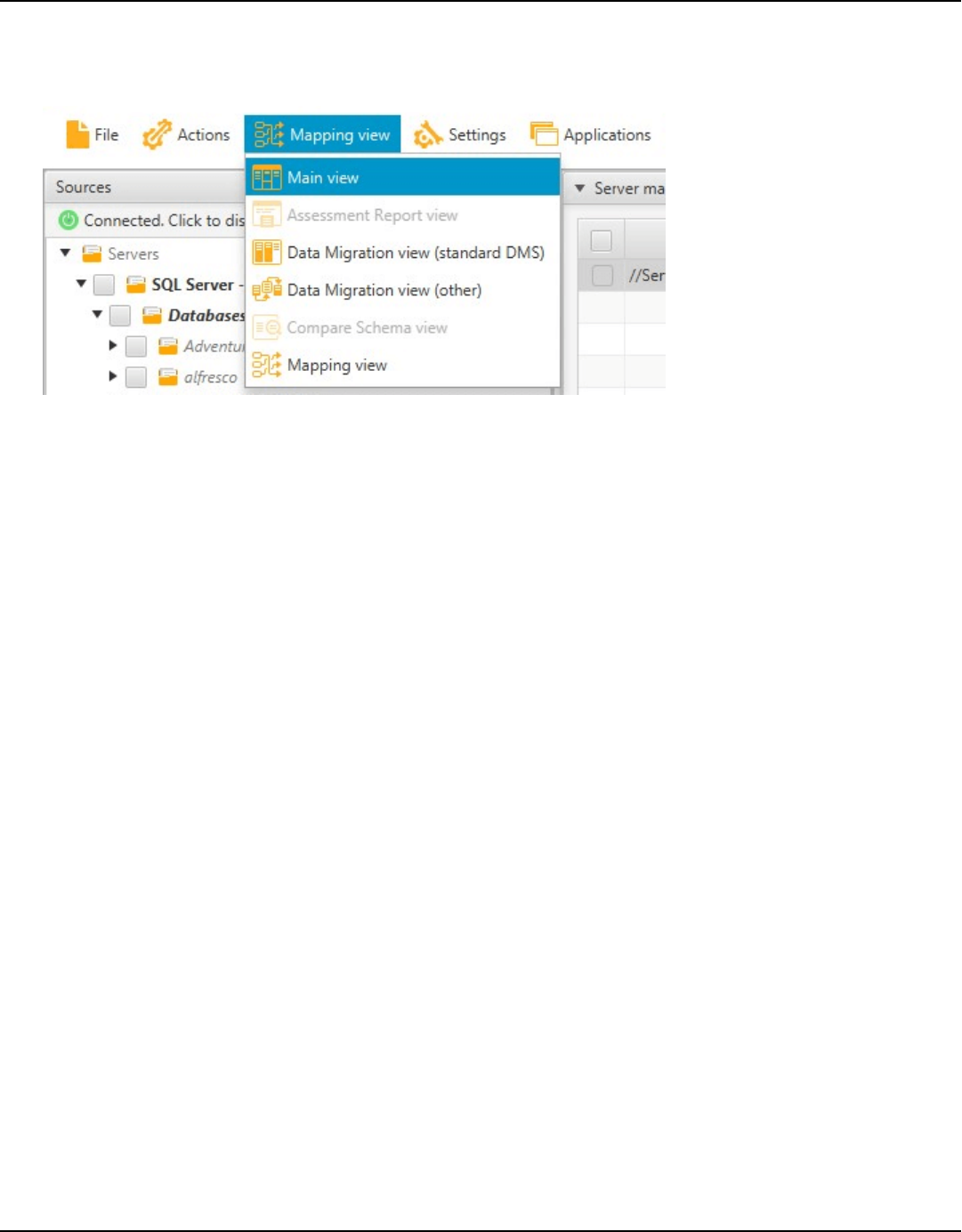

Project window ........................................................................................................................................... 19

Starting and Managing projects ............................................................................................................. 21

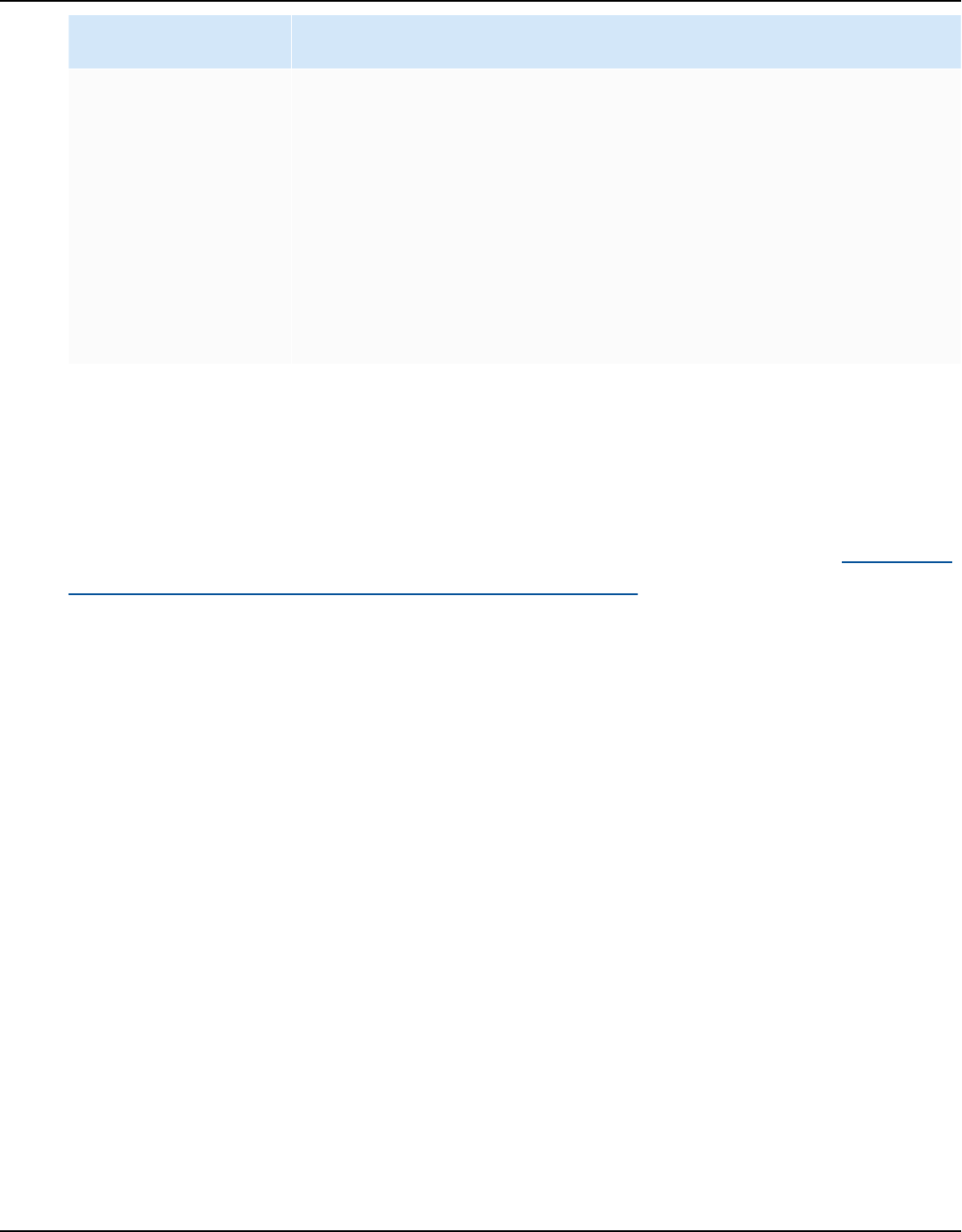

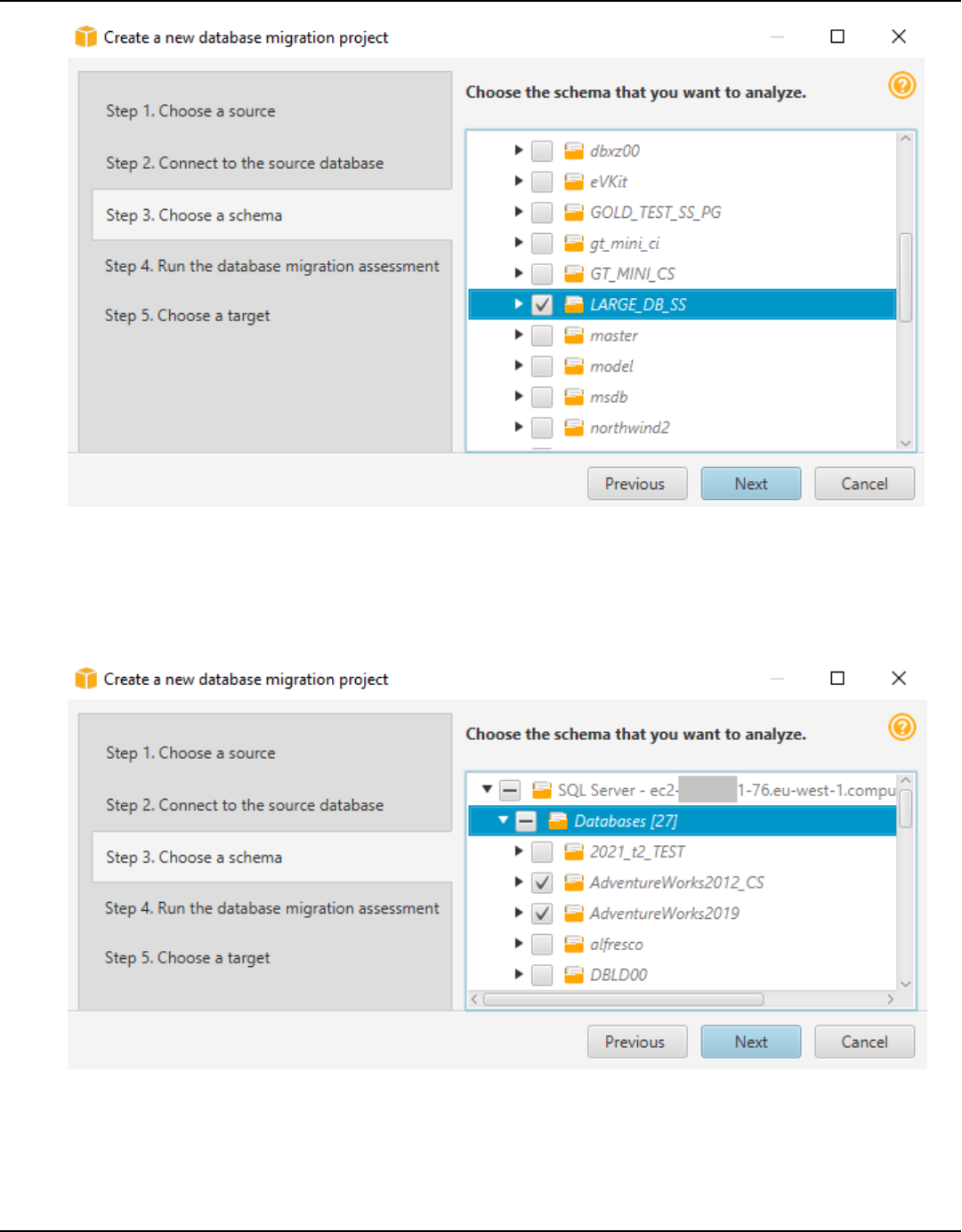

Using the Wizard ........................................................................................................................................ 22

Saving projects ............................................................................................................................................ 25

Adding database servers .......................................................................................................................... 26

Offline mode ............................................................................................................................................... 27

Tree filters .................................................................................................................................................... 28

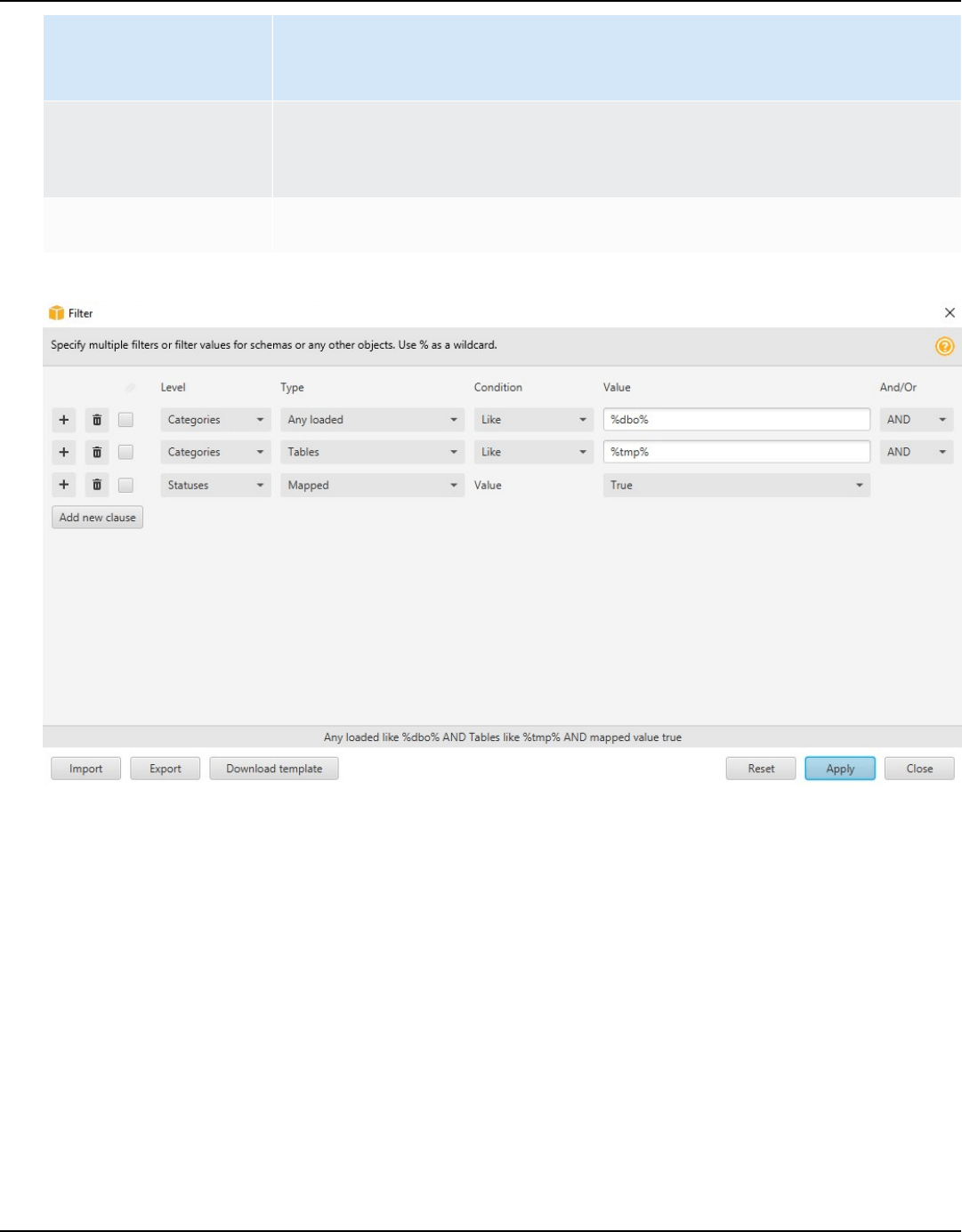

.................................................................................................................................................................. 29

Importing a file list for the tree filter .............................................................................................. 31

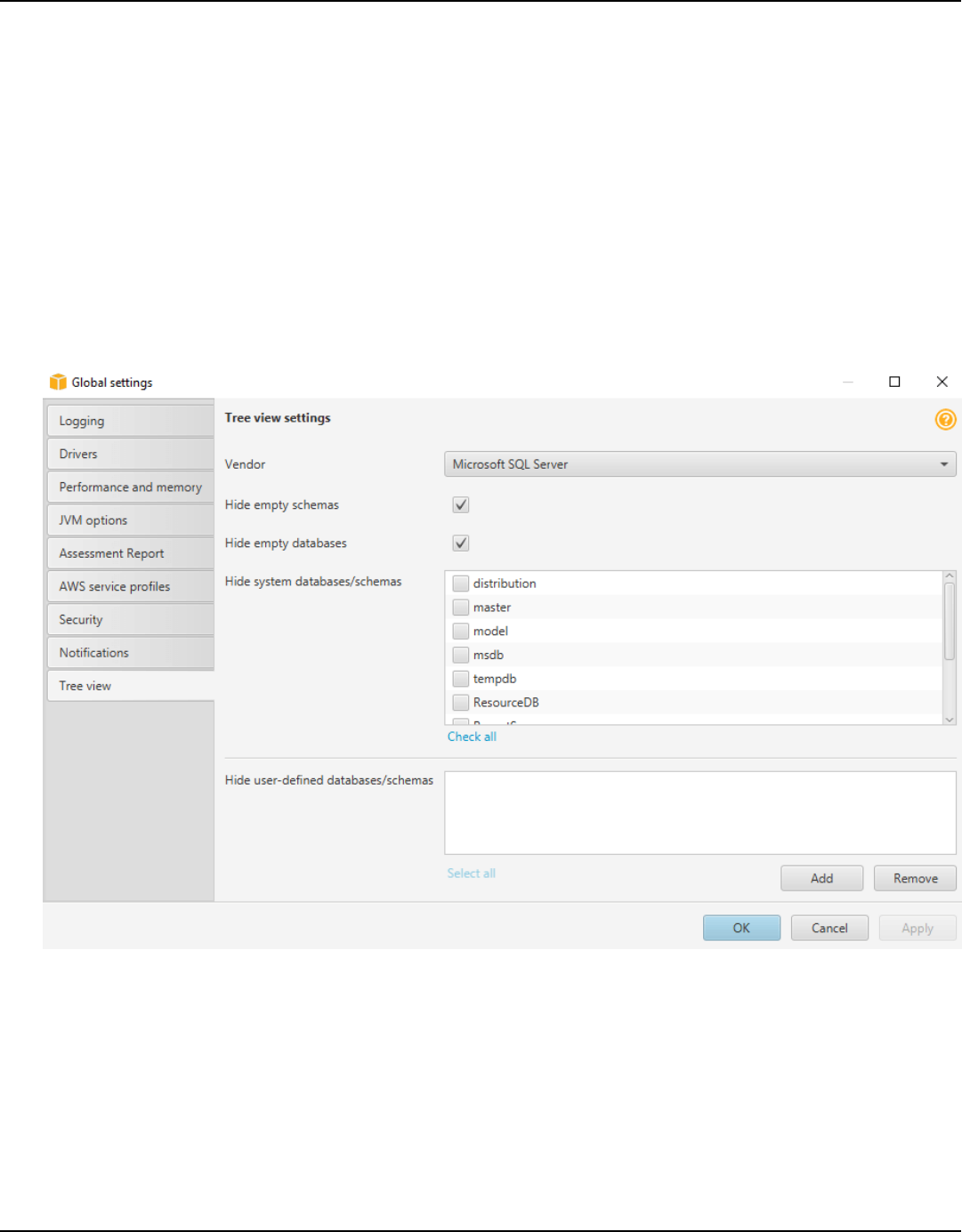

Hiding schemas ........................................................................................................................................... 32

Assessment report ..................................................................................................................................... 33

Converting schemas ................................................................................................................................... 37

Applying converted schemas ................................................................................................................... 40

Managing profiles ...................................................................................................................................... 41

Storing AWS credentials ...................................................................................................................... 41

Setting the default profile for a project .......................................................................................... 43

Permissions for using the AWS service profile ............................................................................... 44

Configuring Secrets Manager .................................................................................................................. 45

Version 1.0.672 iii

AWS Schema Conversion Tool User Guide

Storing database passwords .................................................................................................................... 46

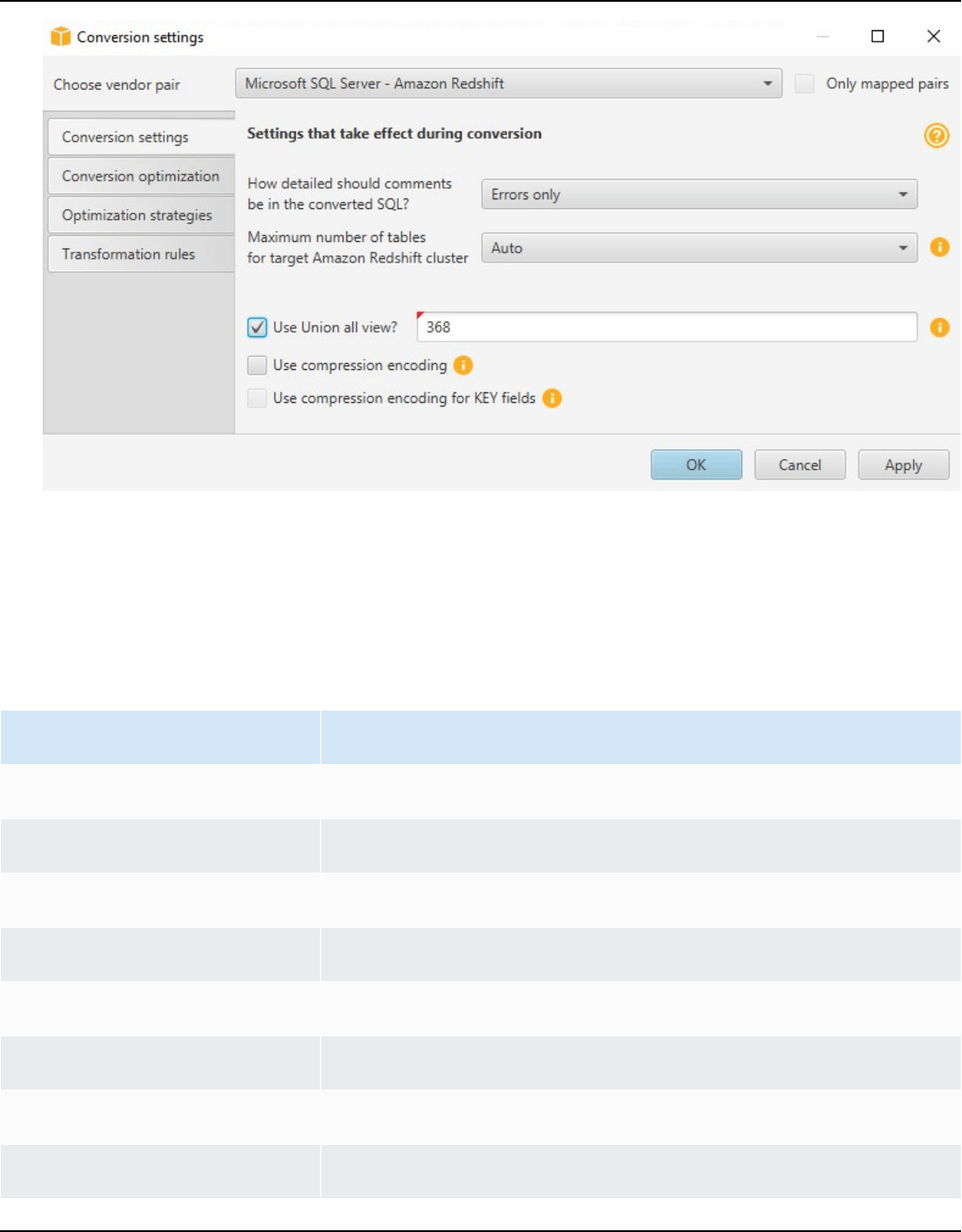

UNION ALL view ......................................................................................................................................... 46

Keyboard shortcuts .................................................................................................................................... 47

Getting started .............................................................................................................................. 49

Connecting to source databases ................................................................................................... 51

Connecting to encrypted Amazon RDS and and Aurora .................................................................... 52

Connecting to Apache Cassandra ........................................................................................................... 55

Connecting to Apache Cassandra as a source ............................................................................... 55

Connecting to Apache Hadoop ............................................................................................................... 57

Prerequisites for using Apache Hadoop as a source ...................................................................... 57

Permissions for Hive as a source ....................................................................................................... 58

Permissions for HDFS as a source ..................................................................................................... 59

Permissions for HDFS as a target ...................................................................................................... 59

Connecting to Apache Hadoop as a source .................................................................................... 59

Connecting to Hive and HDFS ........................................................................................................... 61

Connecting to Amazon EMR as a target .......................................................................................... 64

Connecting to Apache Oozie ................................................................................................................... 67

Prerequisites ........................................................................................................................................... 67

Connecting to Apache Oozie as a source ........................................................................................ 68

Permissions for AWS Lambda ............................................................................................................ 70

Connecting to AWS Step Functions as a target ............................................................................. 72

Connecting to Azure SQL ......................................................................................................................... 73

Privileges for Azure SQL Database ................................................................................................... 73

Connecting to Azure SQL Database as a source ........................................................................... 74

Connecting to IBM DB2 for z/OS ........................................................................................................... 75

Prerequisites for Db2 for z/OS .......................................................................................................... 76

Privileges for Db2 for z/OS ................................................................................................................ 76

Connecting to Db2 for z/OS as a source ......................................................................................... 77

Privileges for MySQL as a target ....................................................................................................... 79

Privileges for PostgreSQL as a target .............................................................................................. 81

Db2 for z/OS to PostgreSQL conversion settings .......................................................................... 81

IBM Db2 LUW databases .......................................................................................................................... 83

Privileges for Db2 LUW ....................................................................................................................... 83

Connecting to Db2 LUW as a source ................................................................................................ 86

Db2 LUW to PostgreSQL ..................................................................................................................... 88

Db2 LUW to MySQL ............................................................................................................................. 90

Version 1.0.672 iv

AWS Schema Conversion Tool User Guide

Using MySQL as a source ......................................................................................................................... 92

Privileges for MySQL ............................................................................................................................ 92

Connecting to MySQL as a source .................................................................................................... 92

Privileges for PostgreSQL as a target .............................................................................................. 95

Oracle databases ........................................................................................................................................ 96

Privileges for Oracle ........................................................................................................................... 96

Connecting to Oracle as a source ..................................................................................................... 97

Oracle to PostgreSQL ........................................................................................................................ 100

Oracle to MySQL ................................................................................................................................ 106

Oracle to Amazon RDS for Oracle .................................................................................................. 116

PostgreSQL databases ............................................................................................................................ 123

Privileges for PostgreSQL ................................................................................................................ 123

Connecting to PostgreSQL as a source ........................................................................................ 123

Privileges for MySQL as a target .................................................................................................... 126

SAP databases .......................................................................................................................................... 127

Privileges for SAP ASE ..................................................................................................................... 128

Connecting to SAP ASE as a source .............................................................................................. 128

Privileges for MySQL as a target .................................................................................................... 130

SAP ASE to MySQL conversion settings ........................................................................................ 132

Privileges for PostgreSQL as a target ............................................................................................ 132

SAP ASE to PostgreSQL conversion settings ................................................................................ 133

SQL Server databases ............................................................................................................................. 134

Privileges for Microsoft SQL Server ............................................................................................... 135

Using Windows Authentication with Microsoft SQL Server ....................................................... 136

Connecting to SQL Server as a source ........................................................................................... 138

SQL Server to MySQL ........................................................................................................................ 141

SQL Server to PostgreSQL ............................................................................................................... 145

SQL Server to Amazon RDS SQL Server ...................................................................................... 180

Data warehouses ...................................................................................................................................... 182

Amazon Redshift ................................................................................................................................ 182

Azure Synapse Analytics as a source .............................................................................................. 188

BigQuery as a source ......................................................................................................................... 193

Greenplum databases ........................................................................................................................ 198

Netezza databases .............................................................................................................................. 204

Oracle data warehouse ..................................................................................................................... 213

Snowflake ............................................................................................................................................. 221

Version 1.0.672 v

AWS Schema Conversion Tool User Guide

SQL Server Data Warehouses .......................................................................................................... 229

Teradata databases ............................................................................................................................ 235

Vertica databases ................................................................................................................................ 251

Data type mapping ..................................................................................................................... 258

New data type mapping ........................................................................................................................ 259

Editing data type mappings .................................................................................................................. 259

Virtual target mapping ........................................................................................................................... 260

Data type mapping limitations ............................................................................................................. 261

Reports ......................................................................................................................................... 263

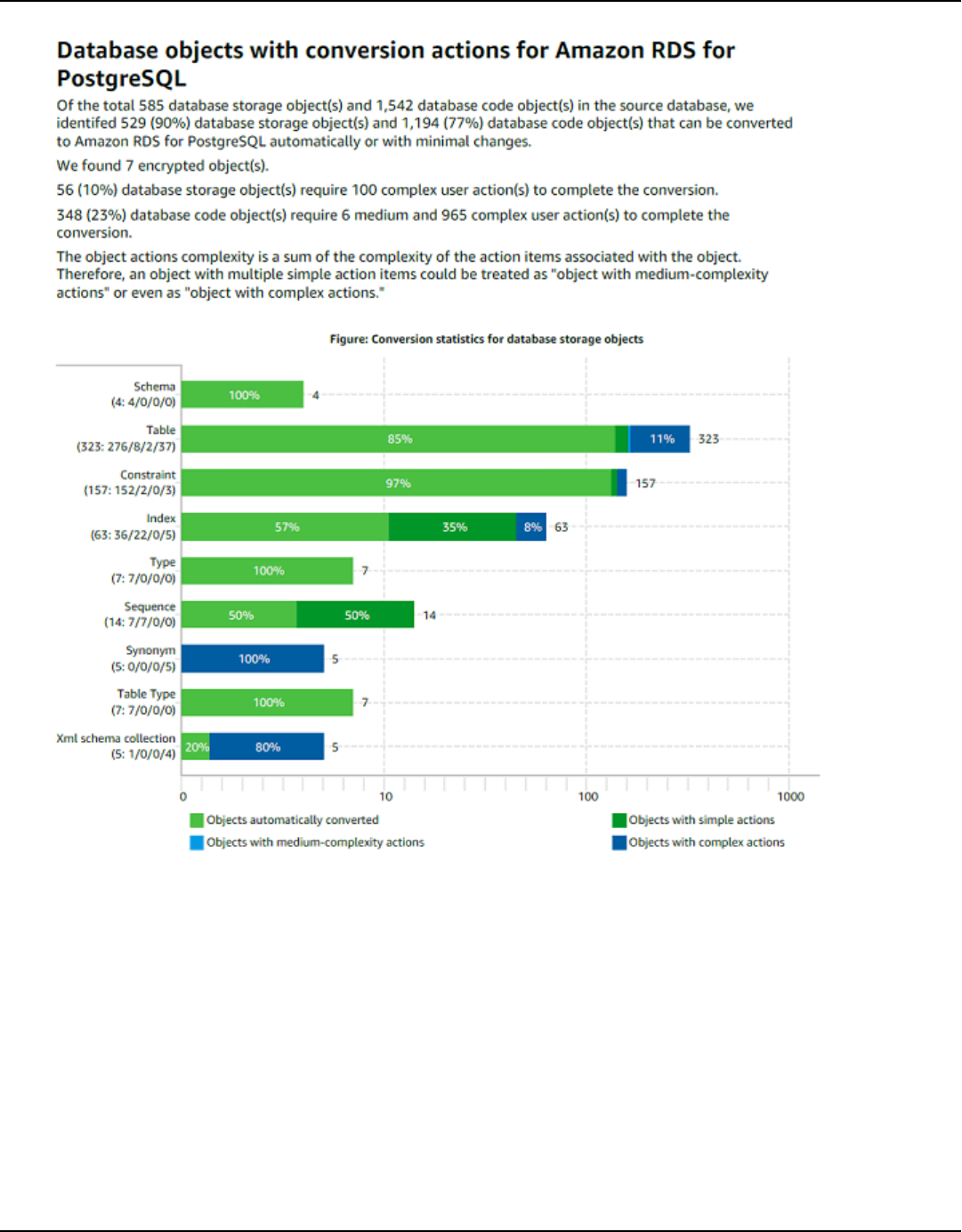

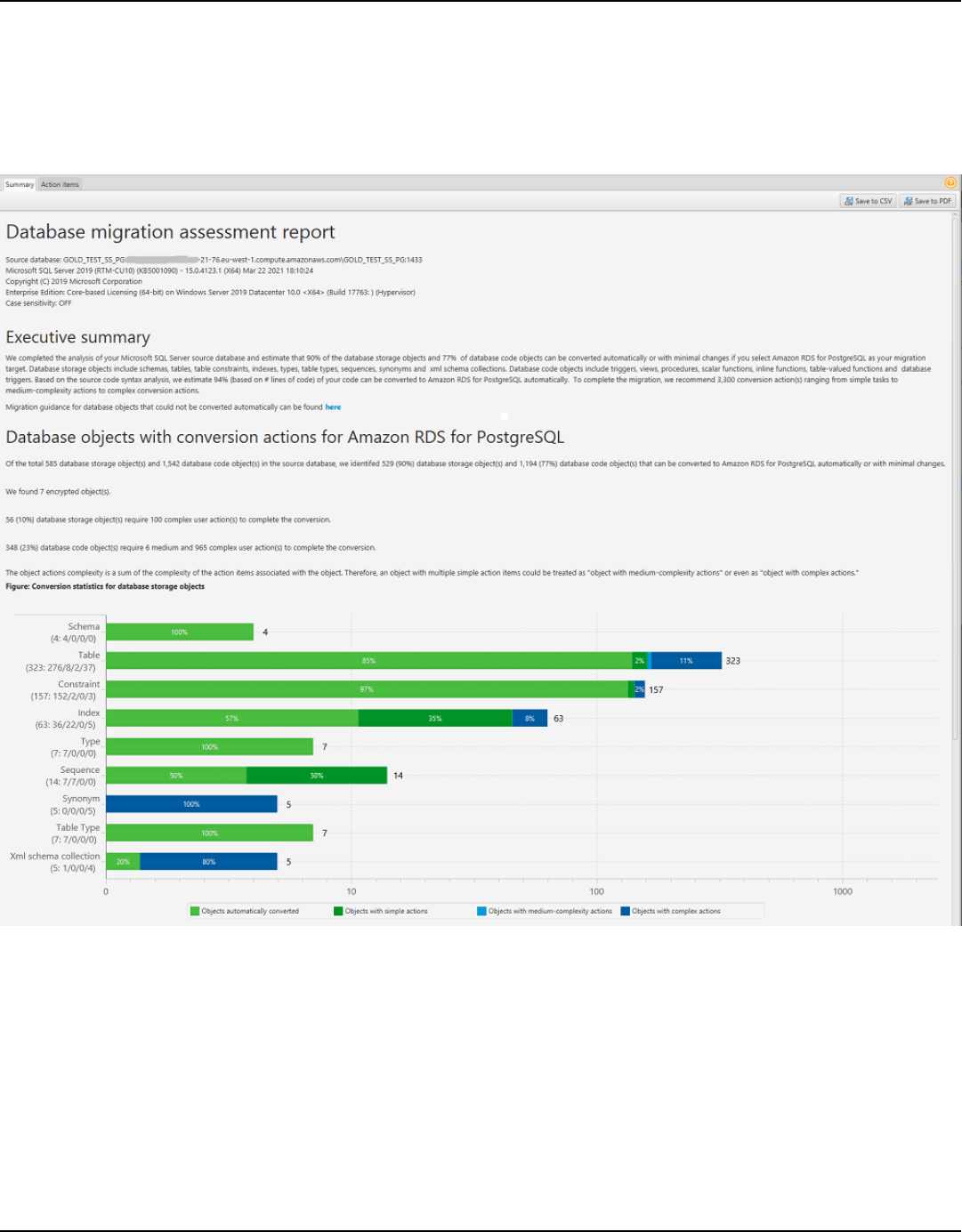

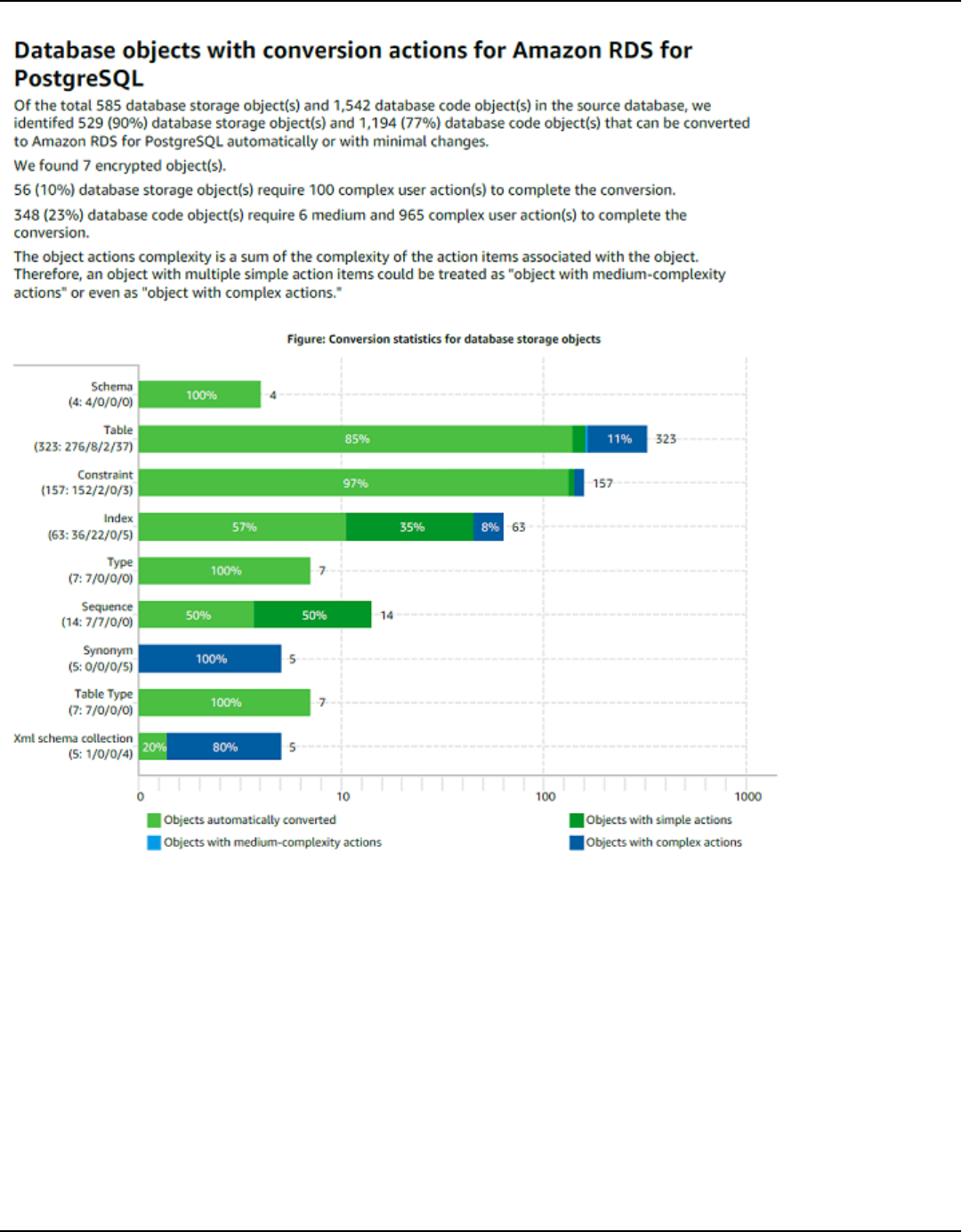

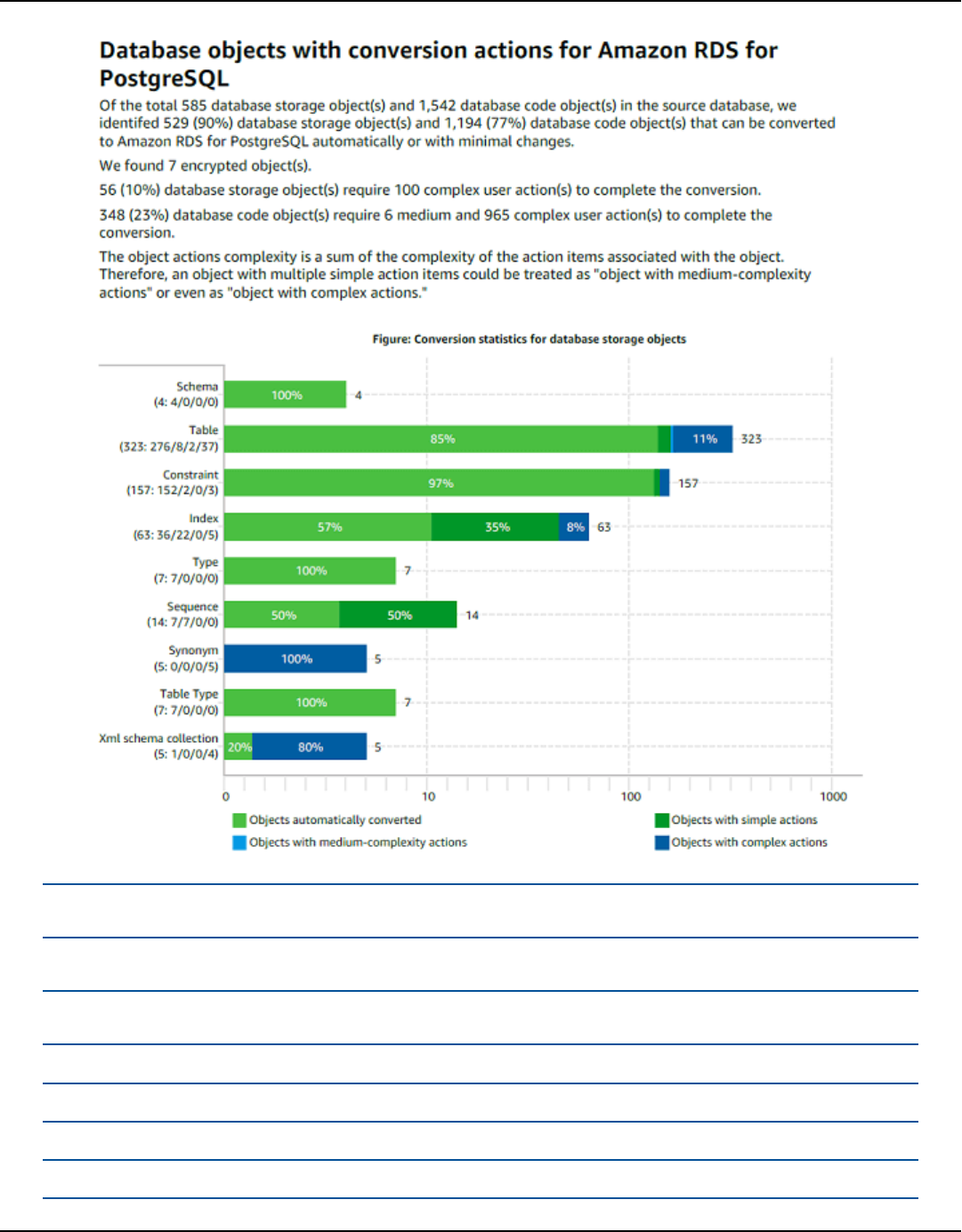

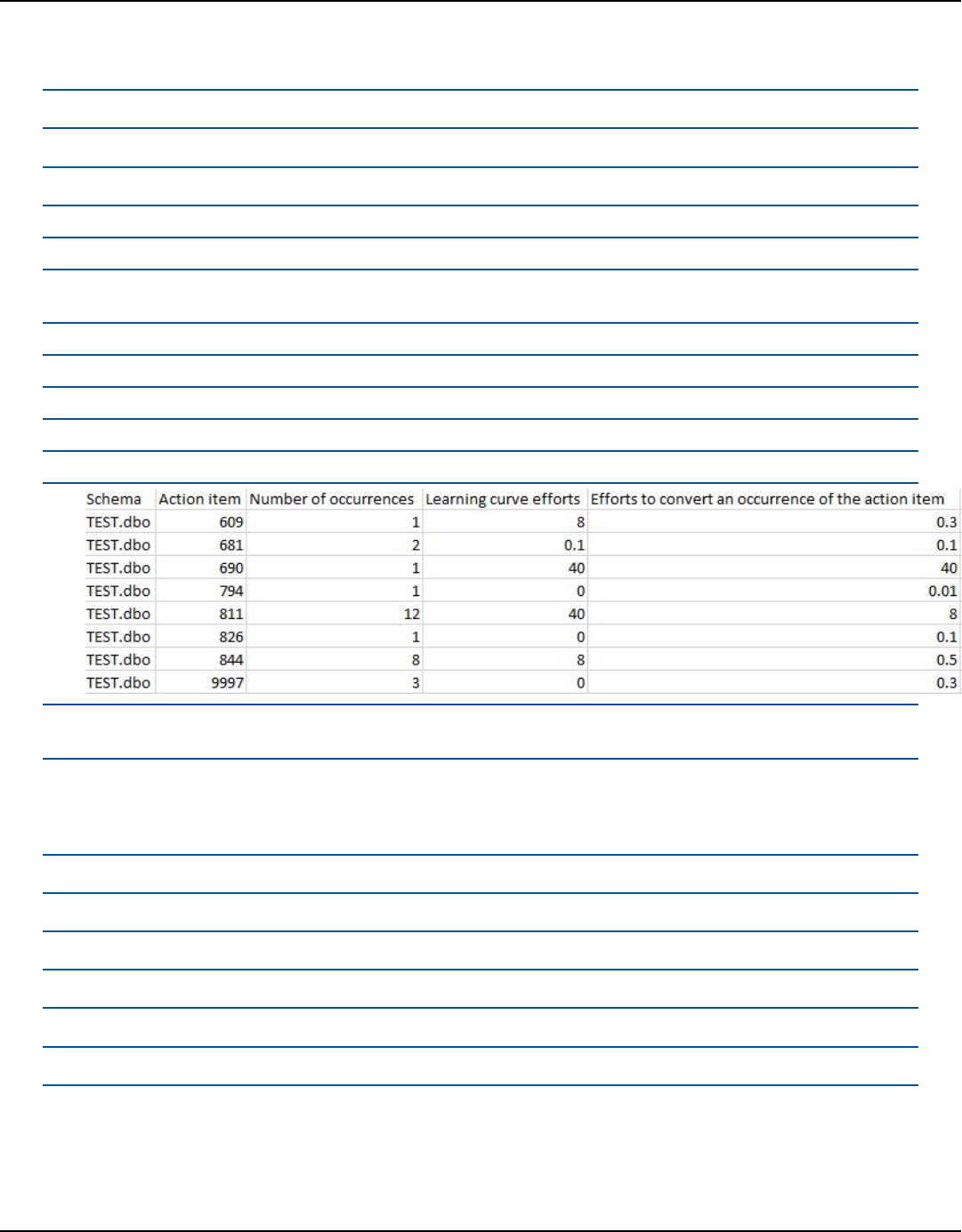

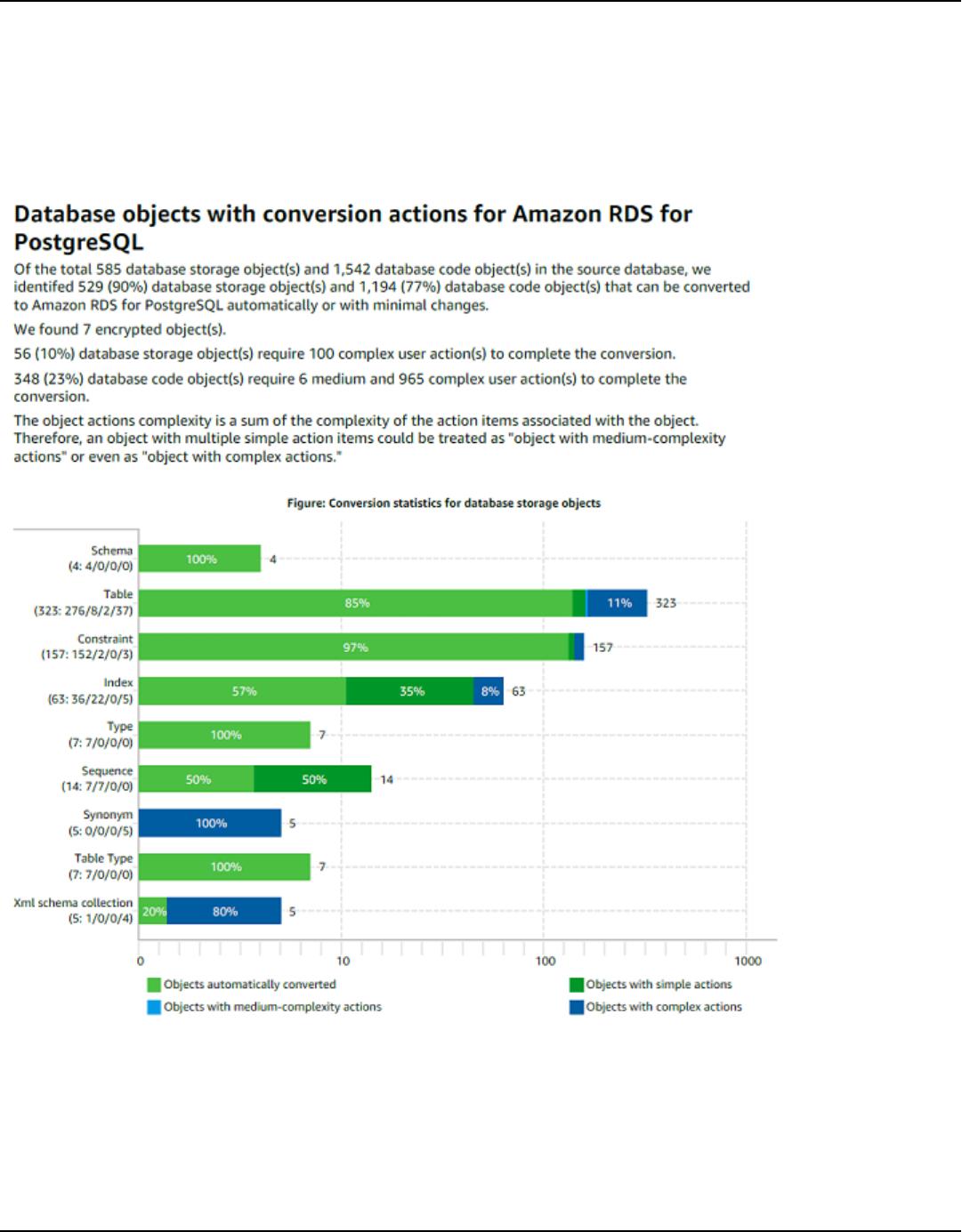

Assessment report ................................................................................................................................... 263

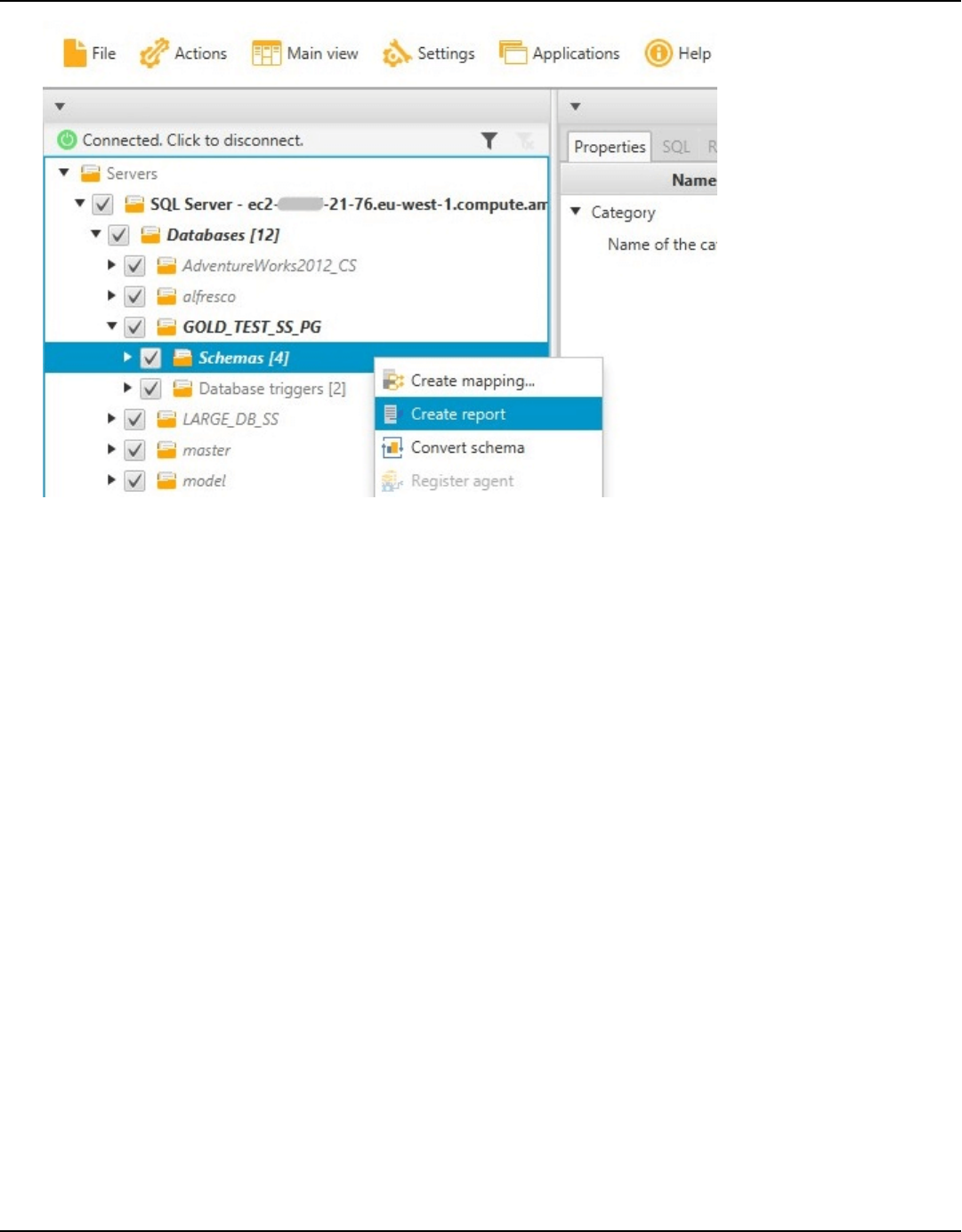

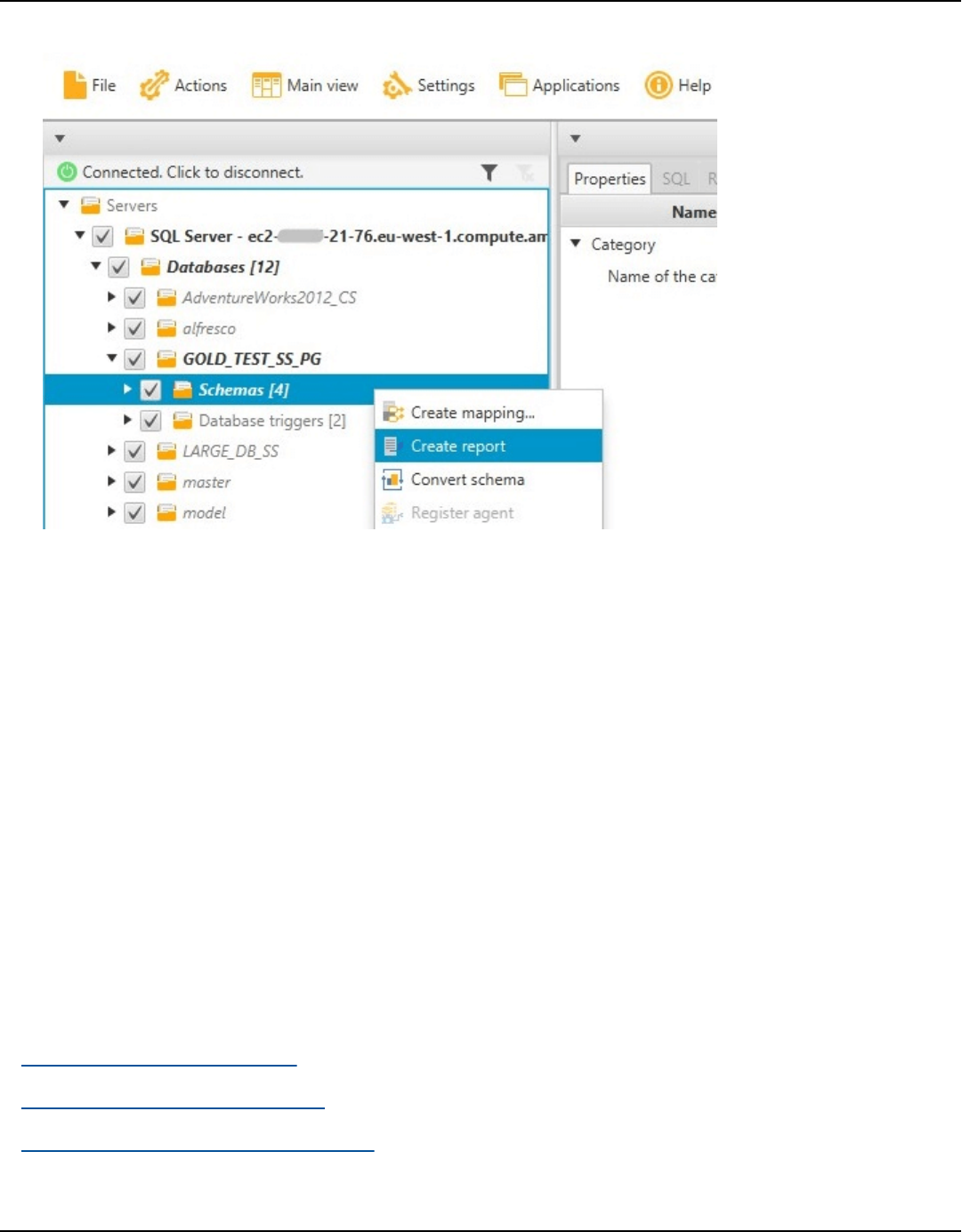

Create assessment report ................................................................................................................. 264

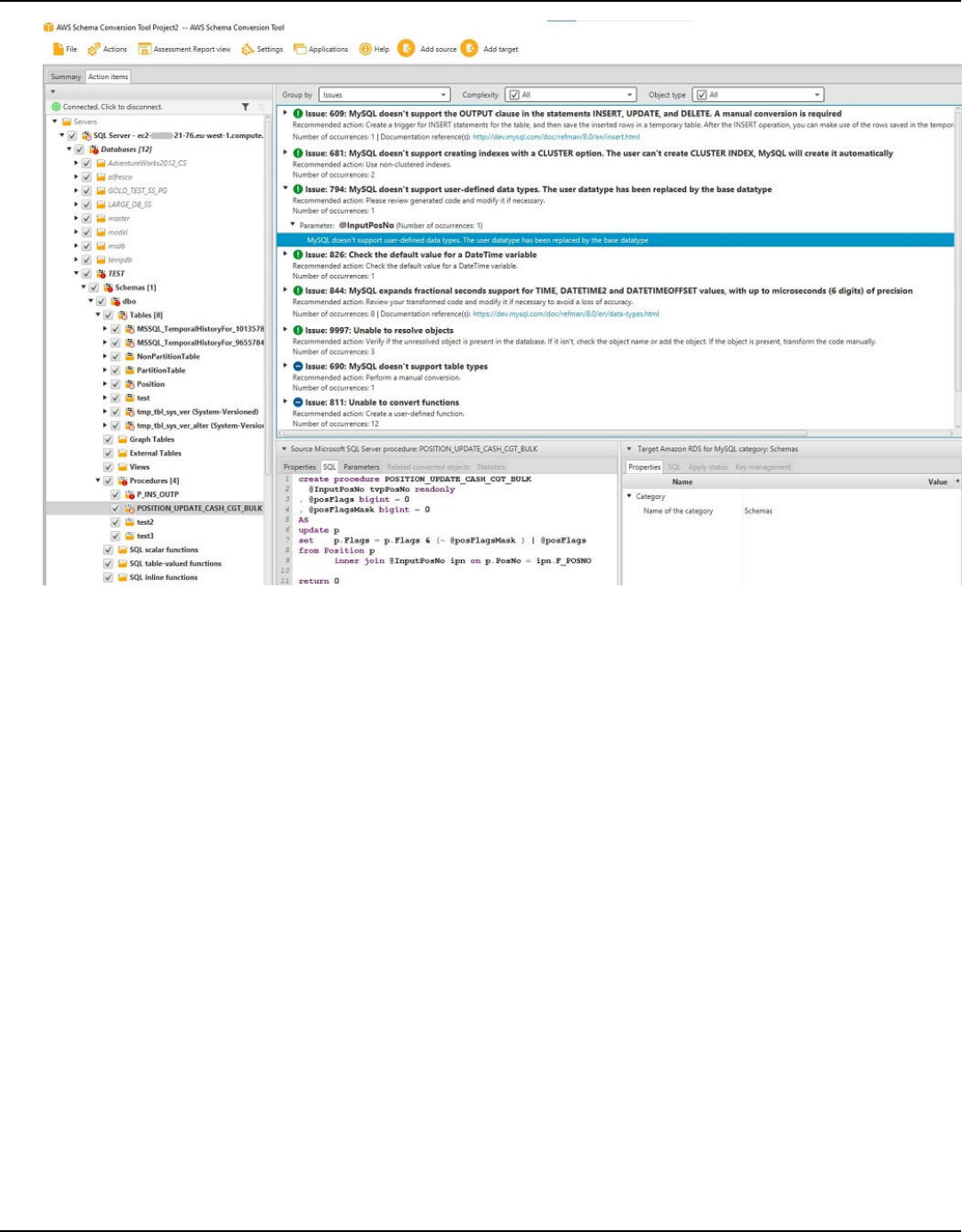

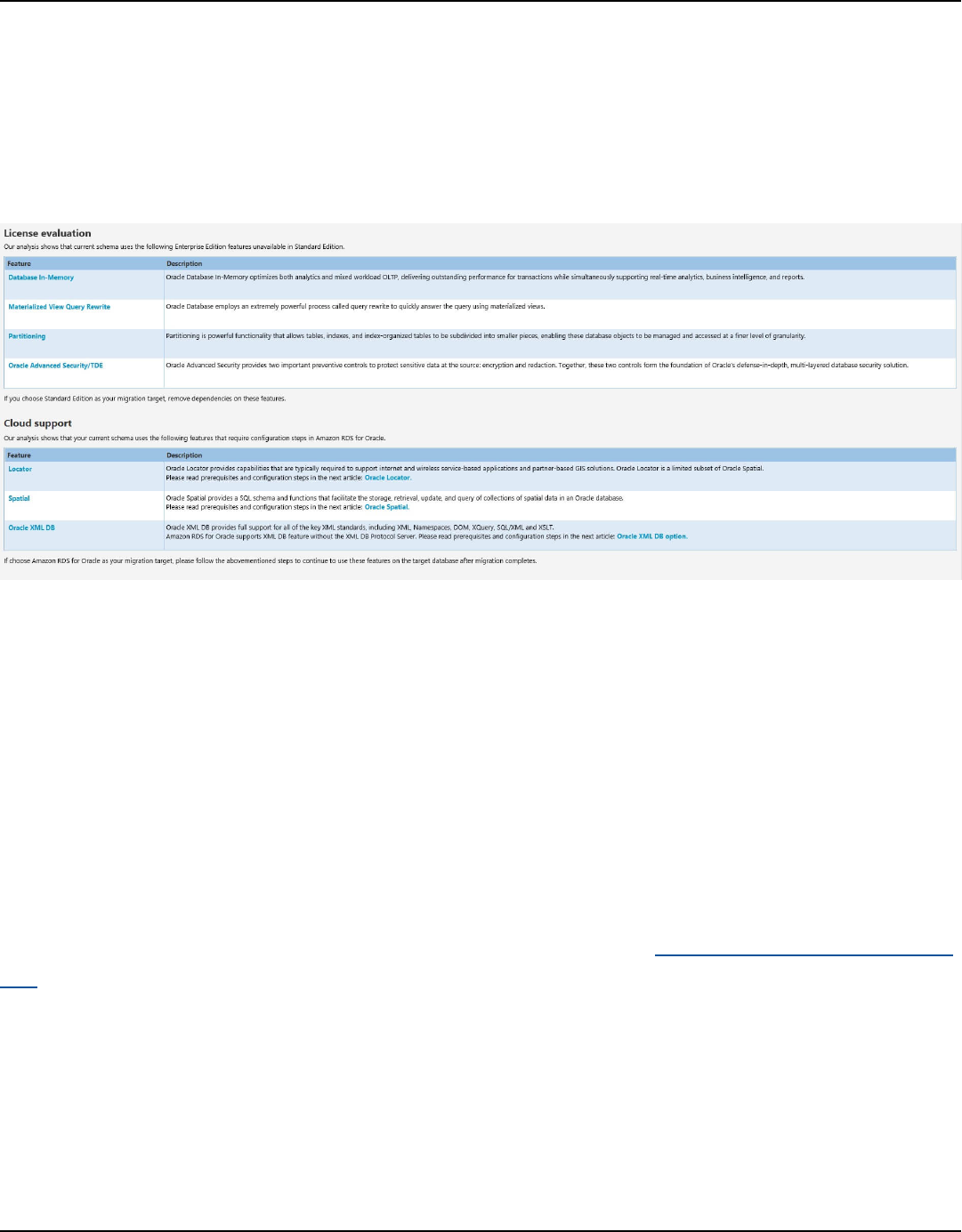

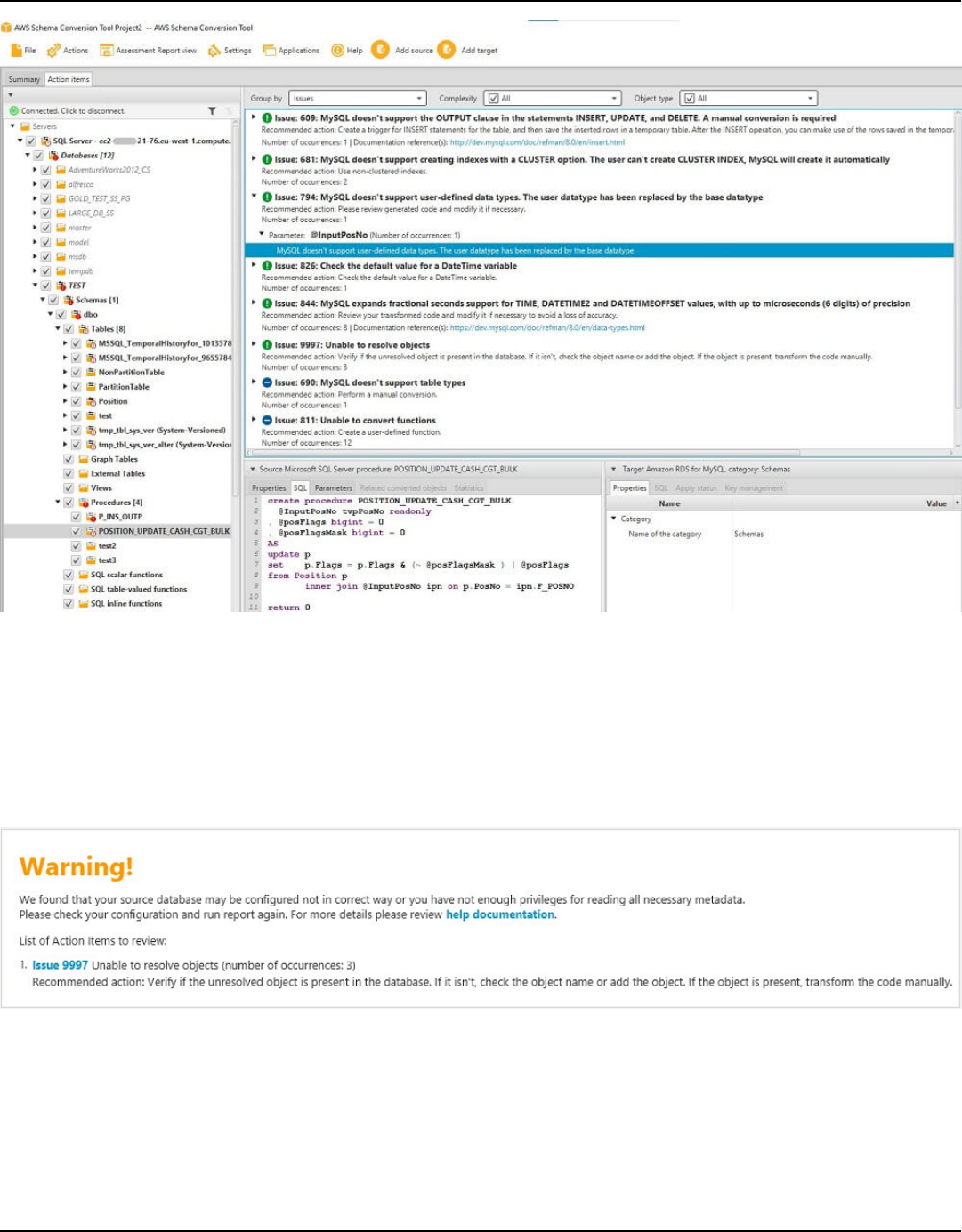

Viewing assessment report .............................................................................................................. 265

Saving the assessment report ......................................................................................................... 269

Configuring an assessment report .................................................................................................. 271

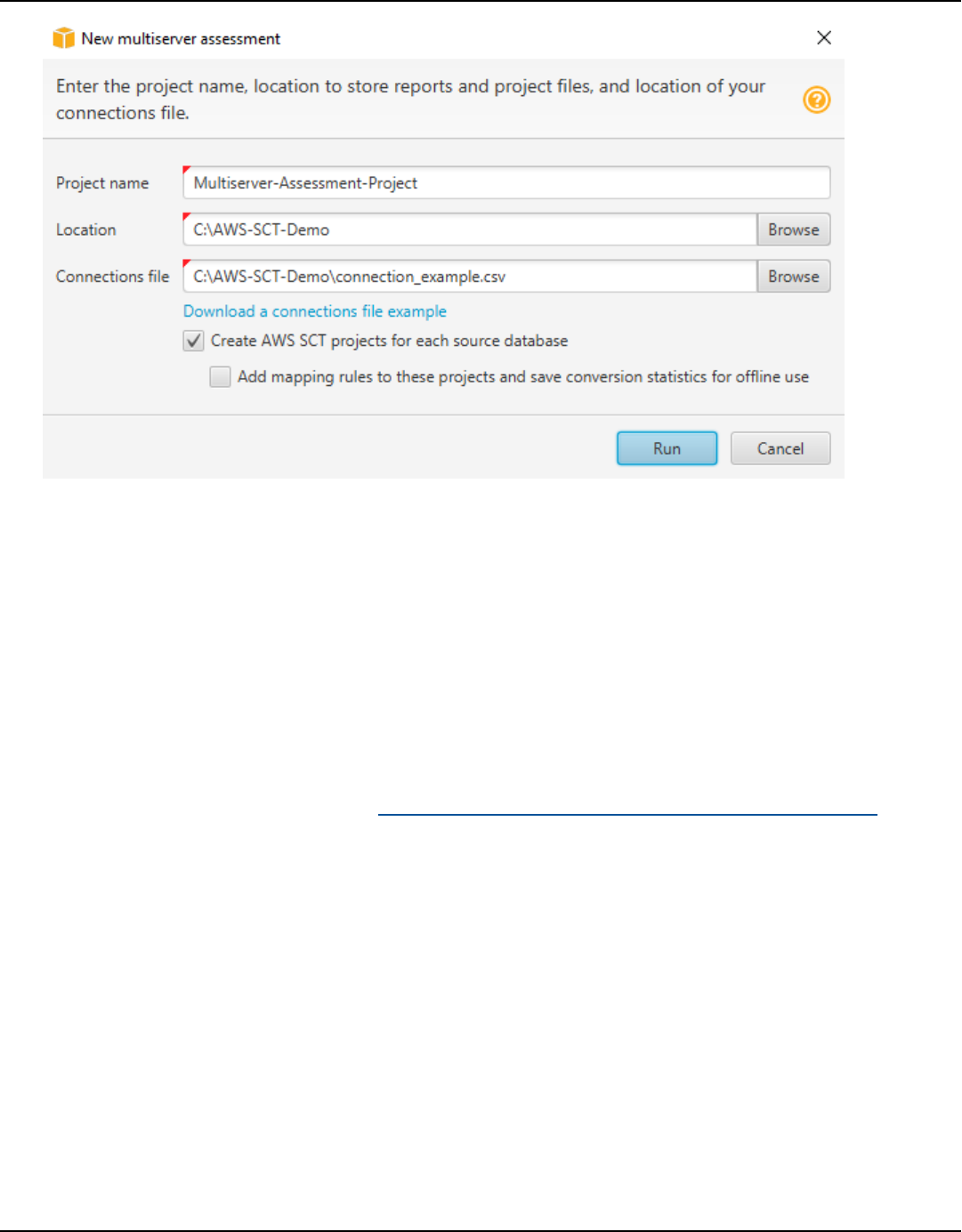

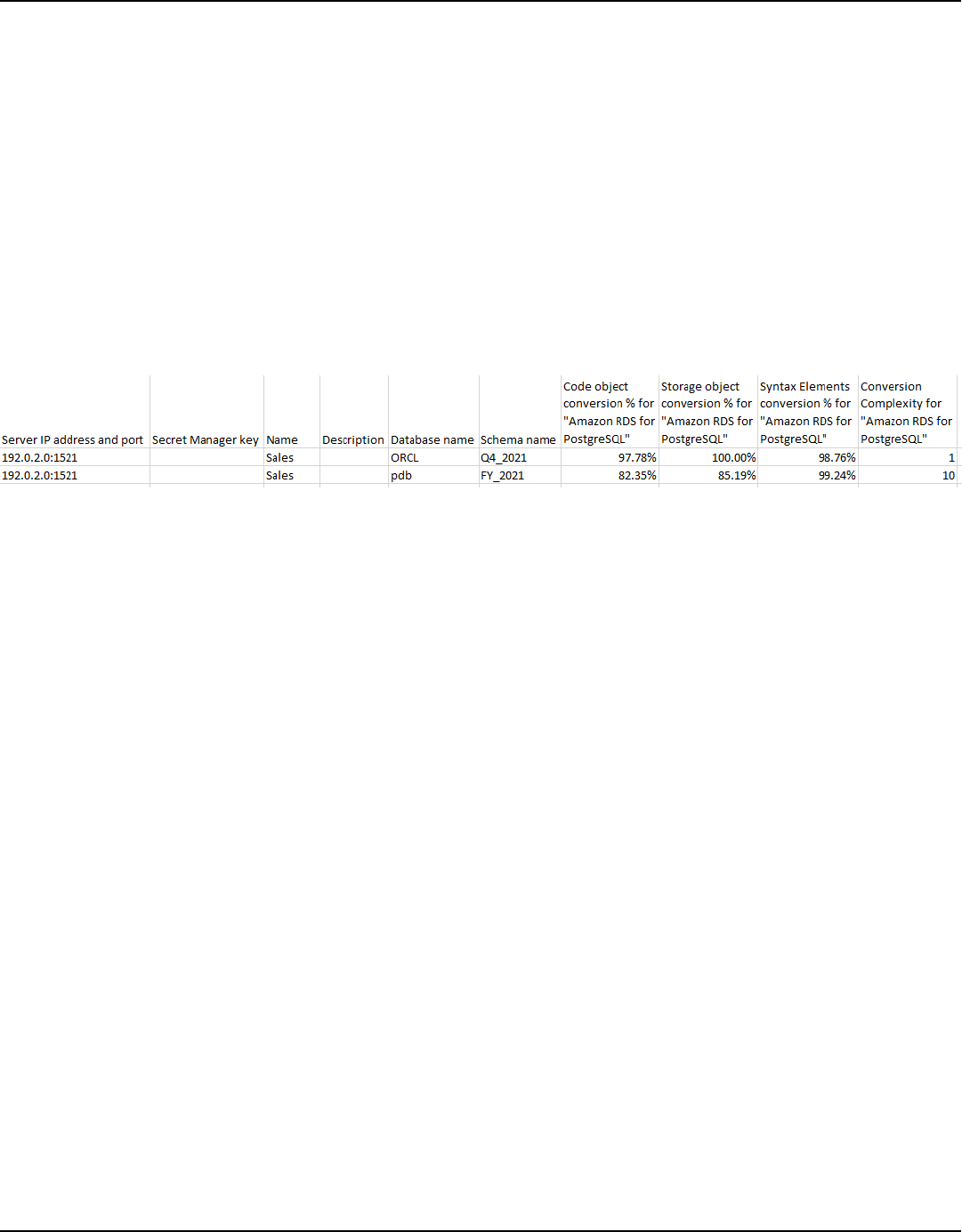

Multiserver assessment report ........................................................................................................ 275

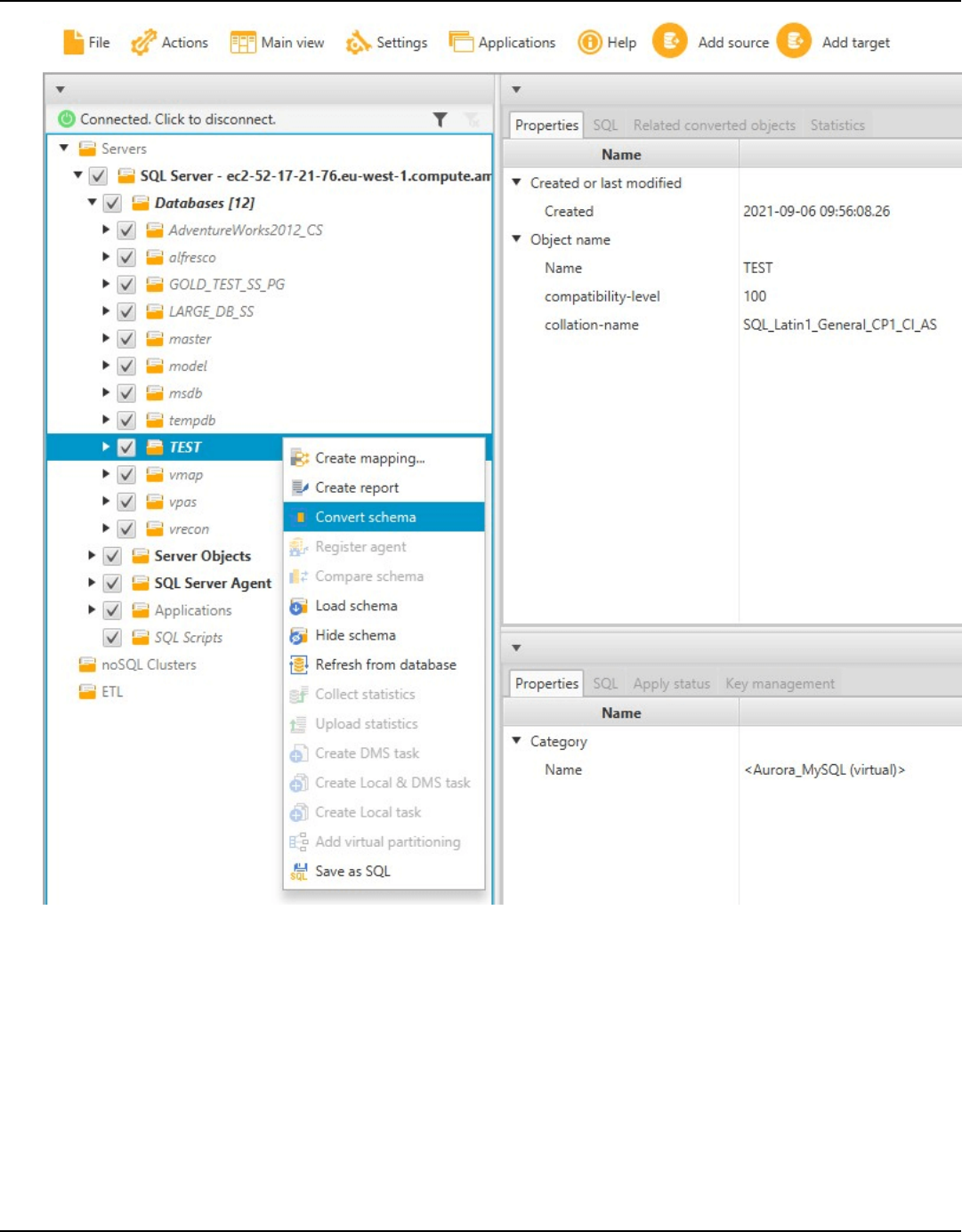

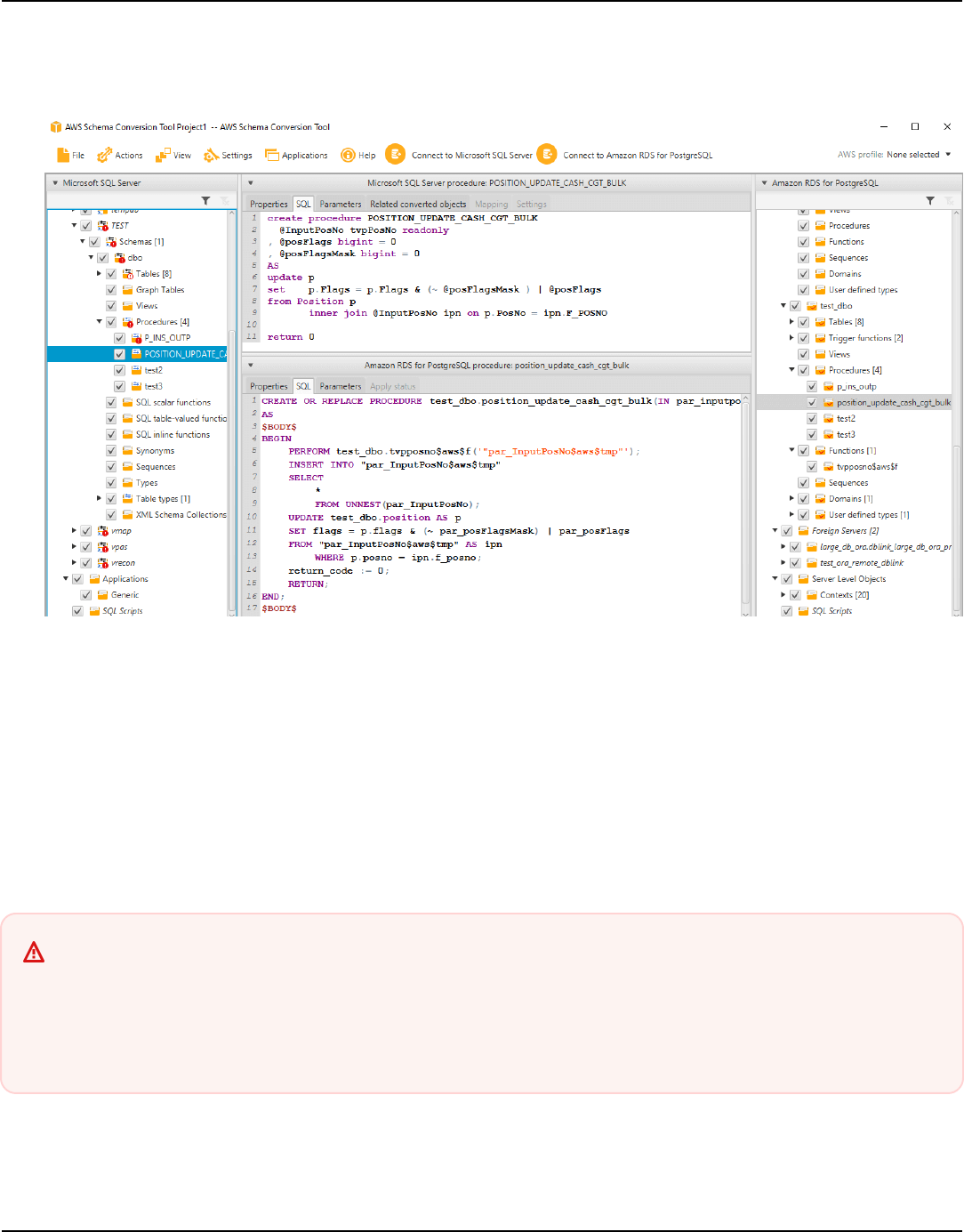

Converting schemas .................................................................................................................... 284

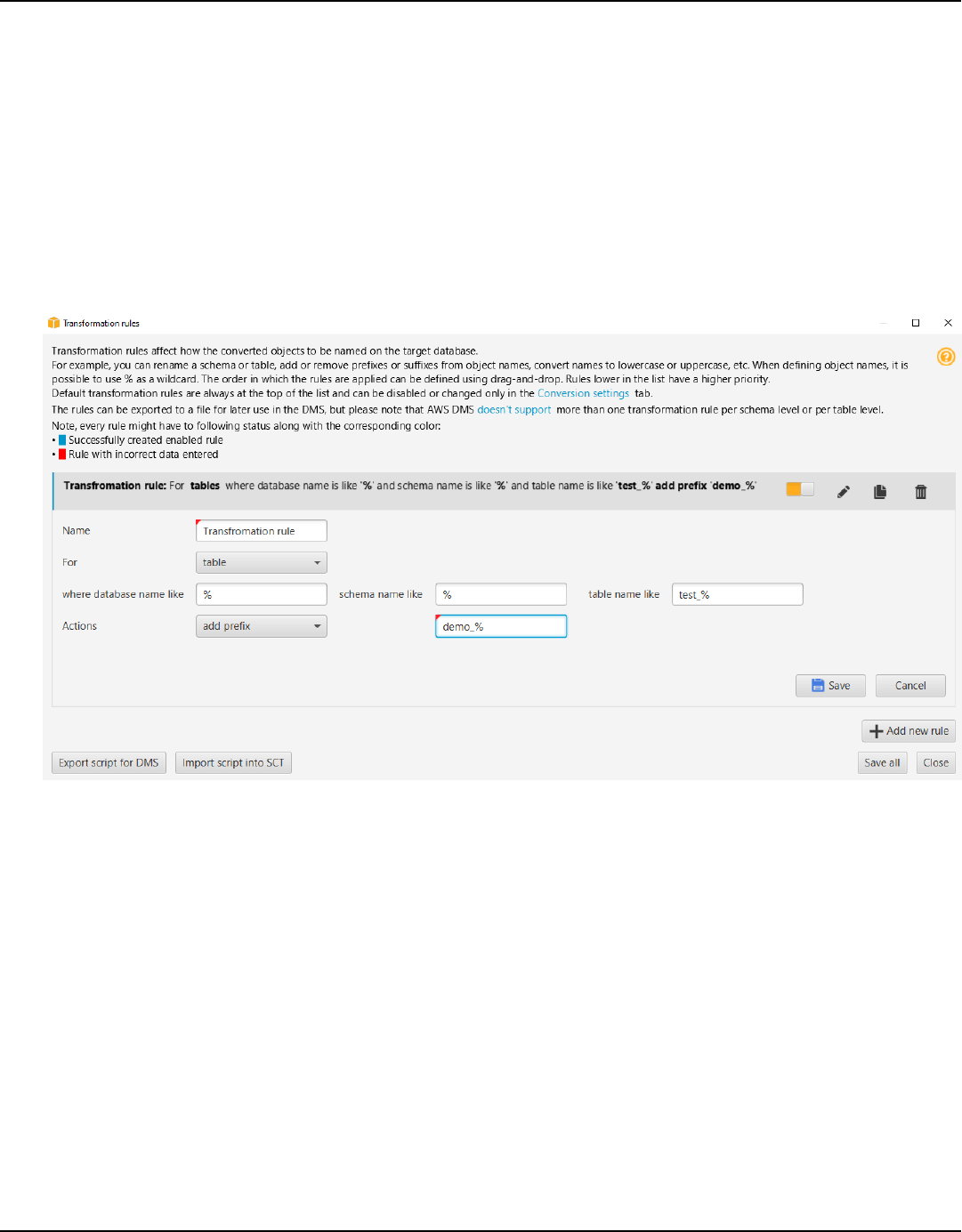

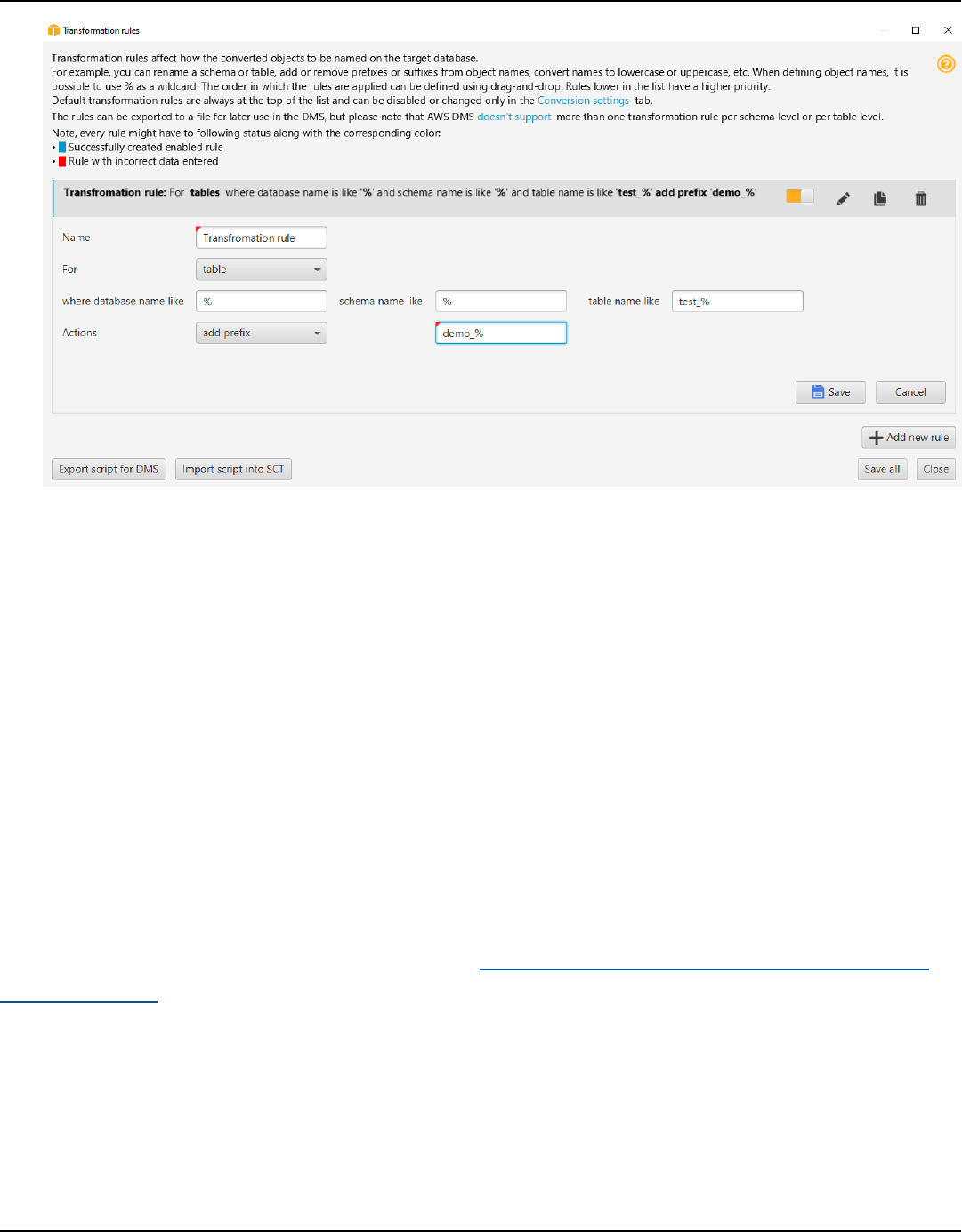

Applying migration rules ........................................................................................................................ 286

Creating migration rules ................................................................................................................... 287

Exporting migration rules ................................................................................................................. 289

Converting schemas manually .............................................................................................................. 289

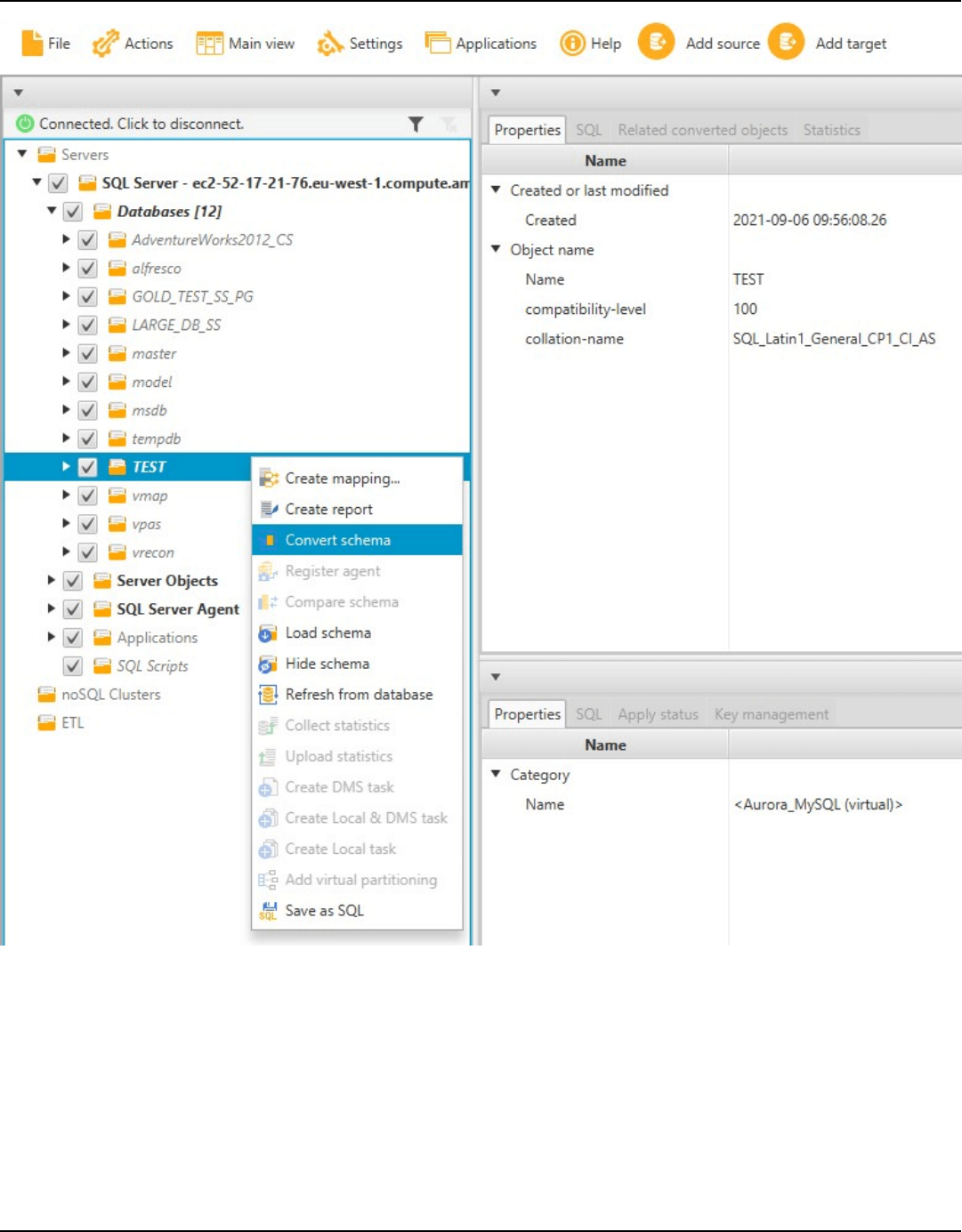

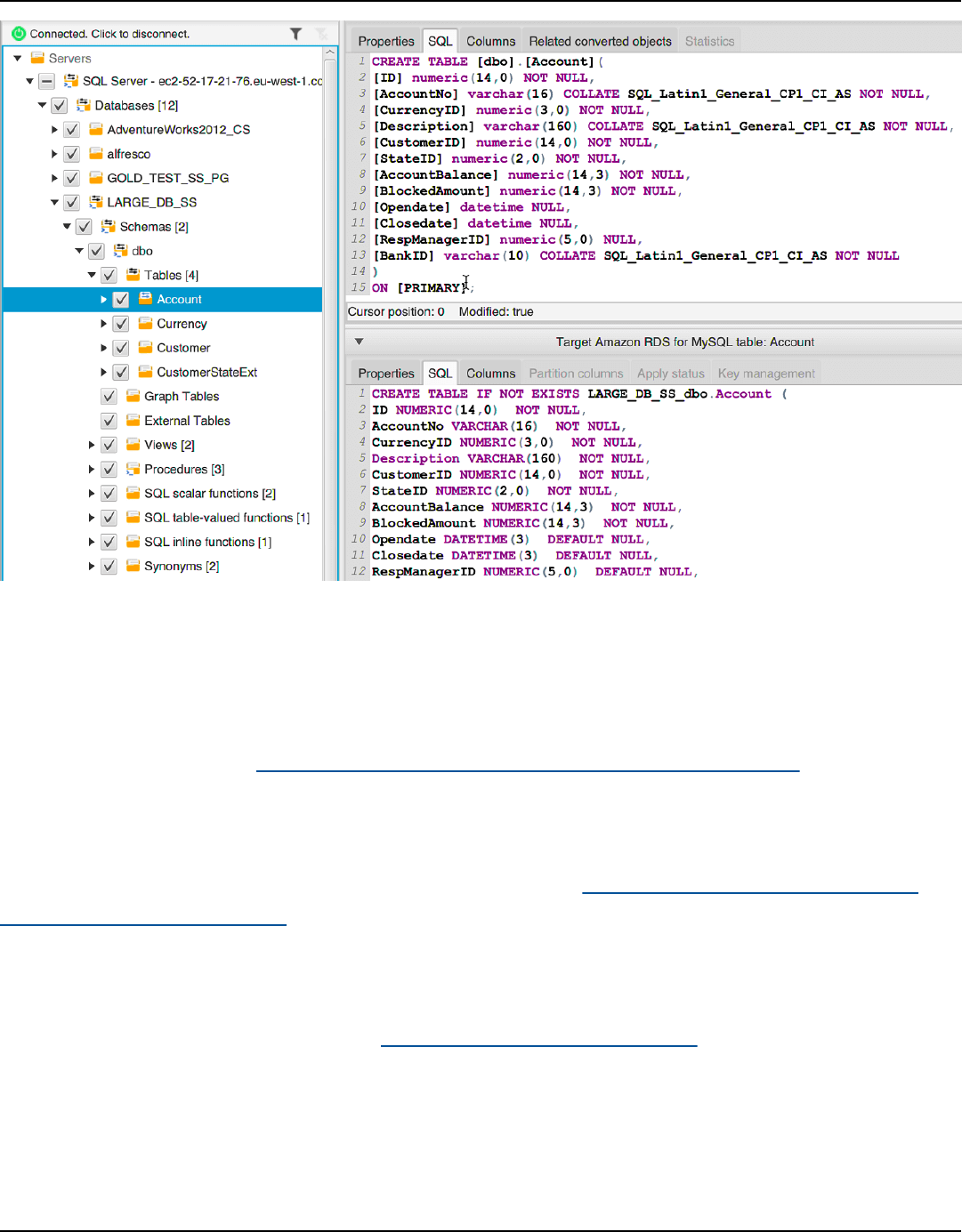

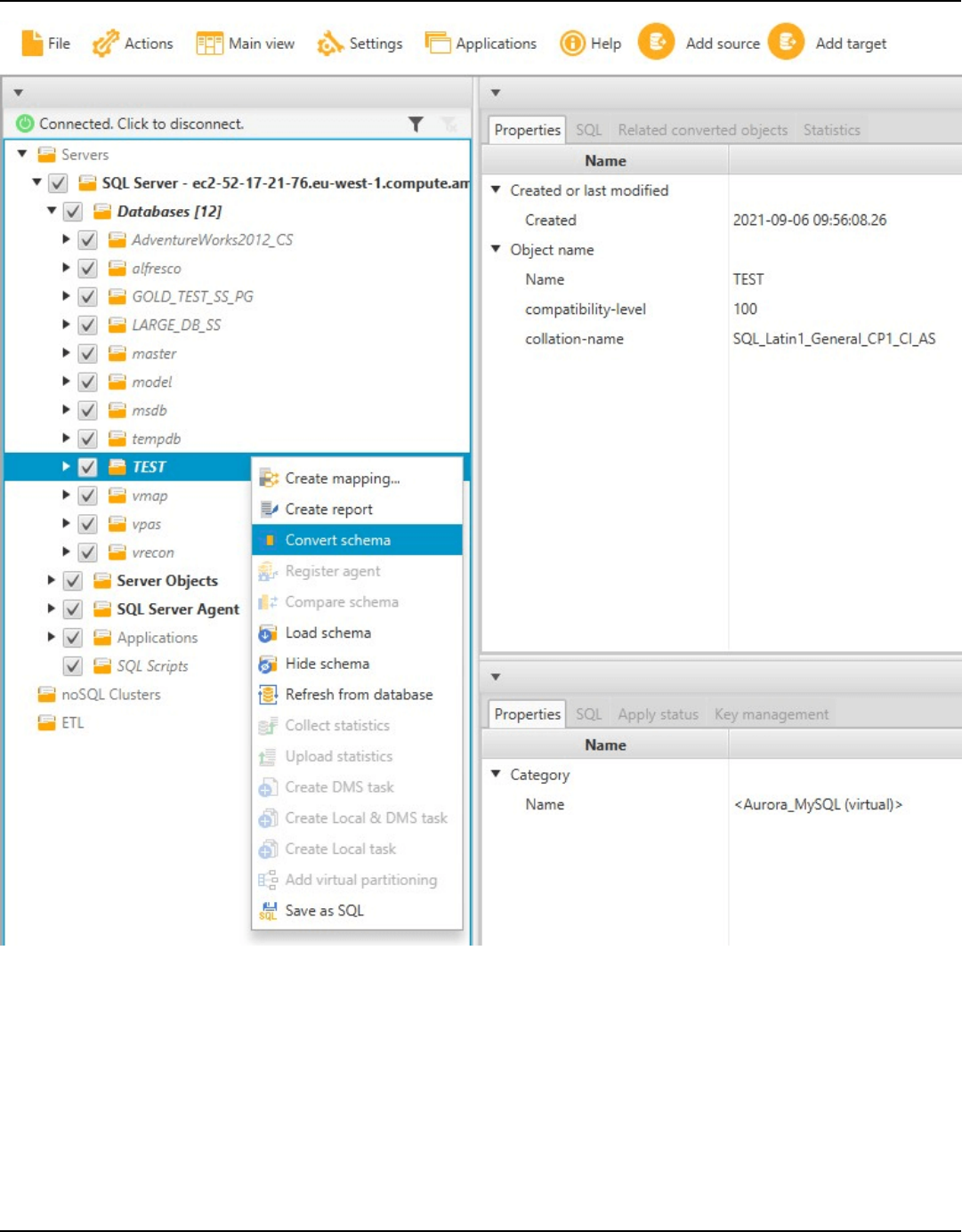

Converting schema ............................................................................................................................. 289

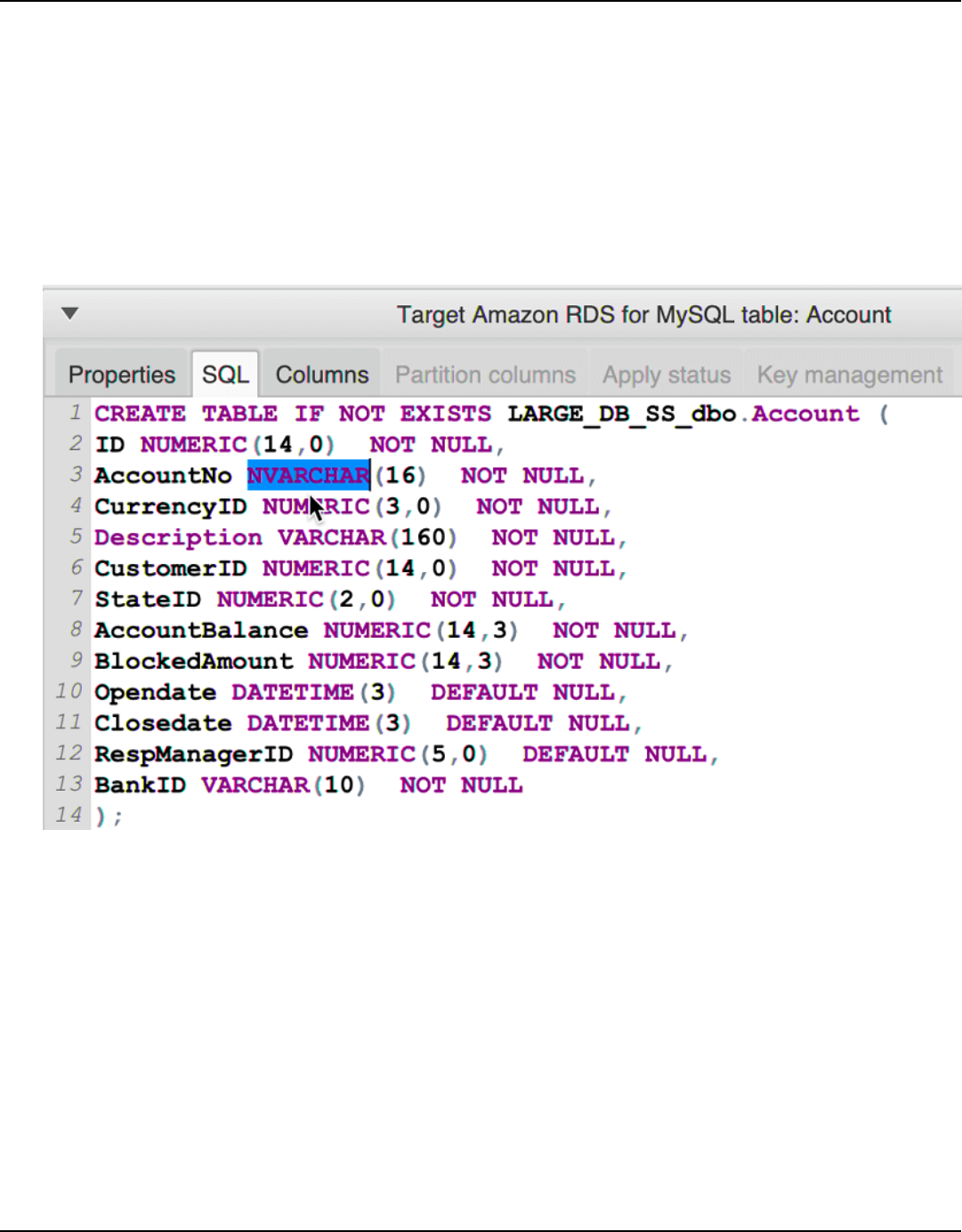

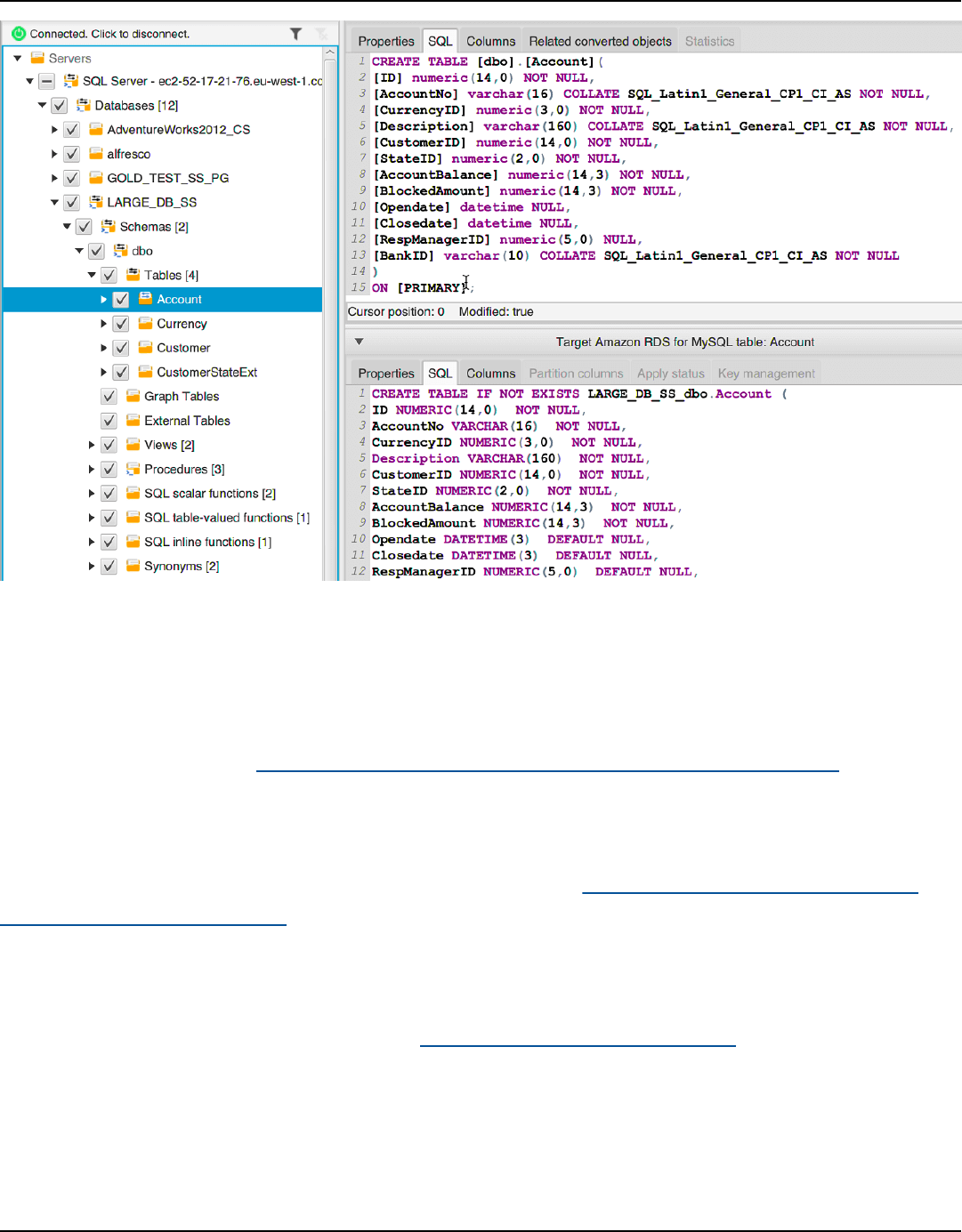

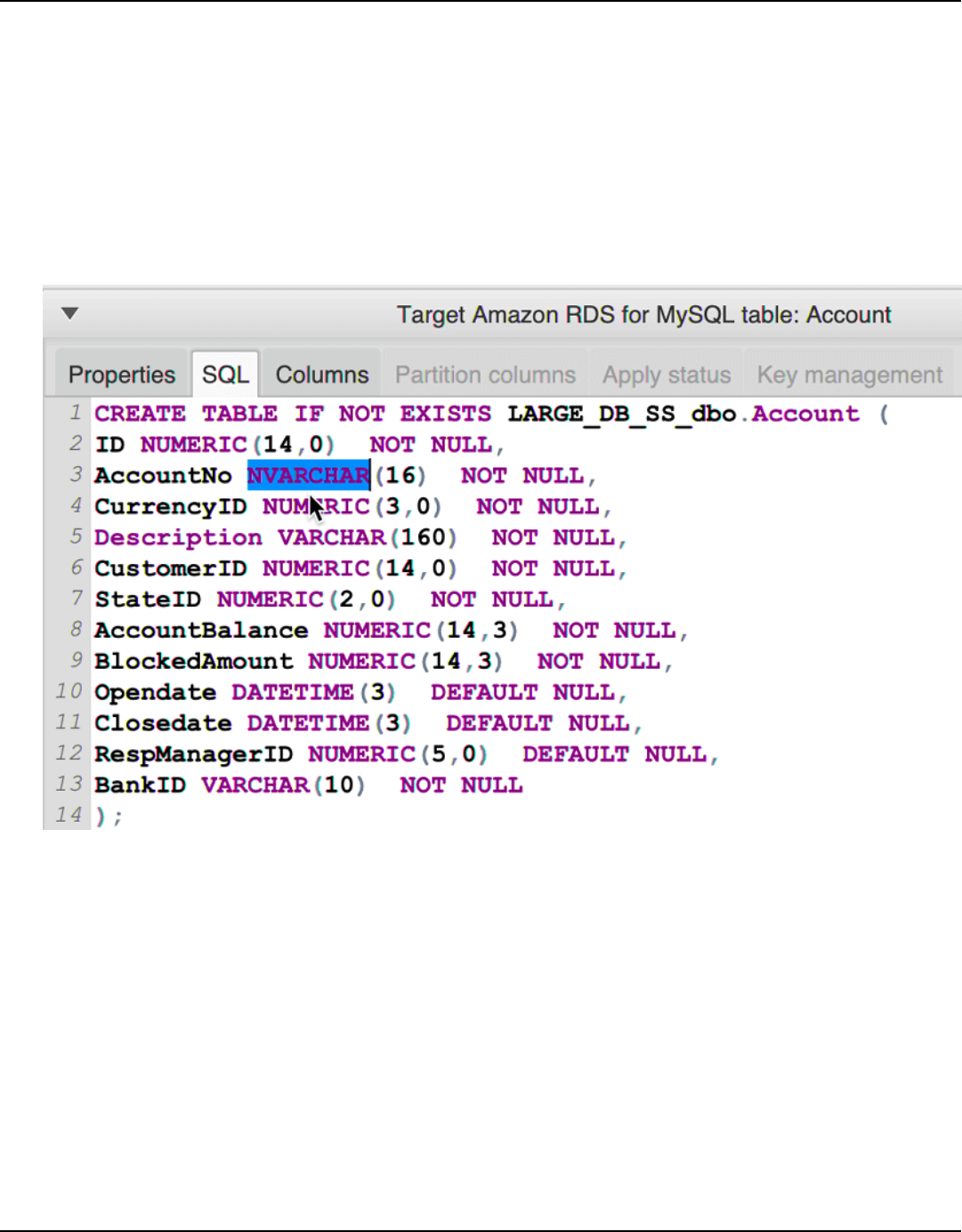

Editing converted schema ................................................................................................................ 291

Clearing a converted schema ........................................................................................................... 292

Manually converting schemas ............................................................................................................... 293

Modifying your source schema ........................................................................................................ 293

Modifying your target schema ........................................................................................................ 293

Updating and refreshing schemas ....................................................................................................... 294

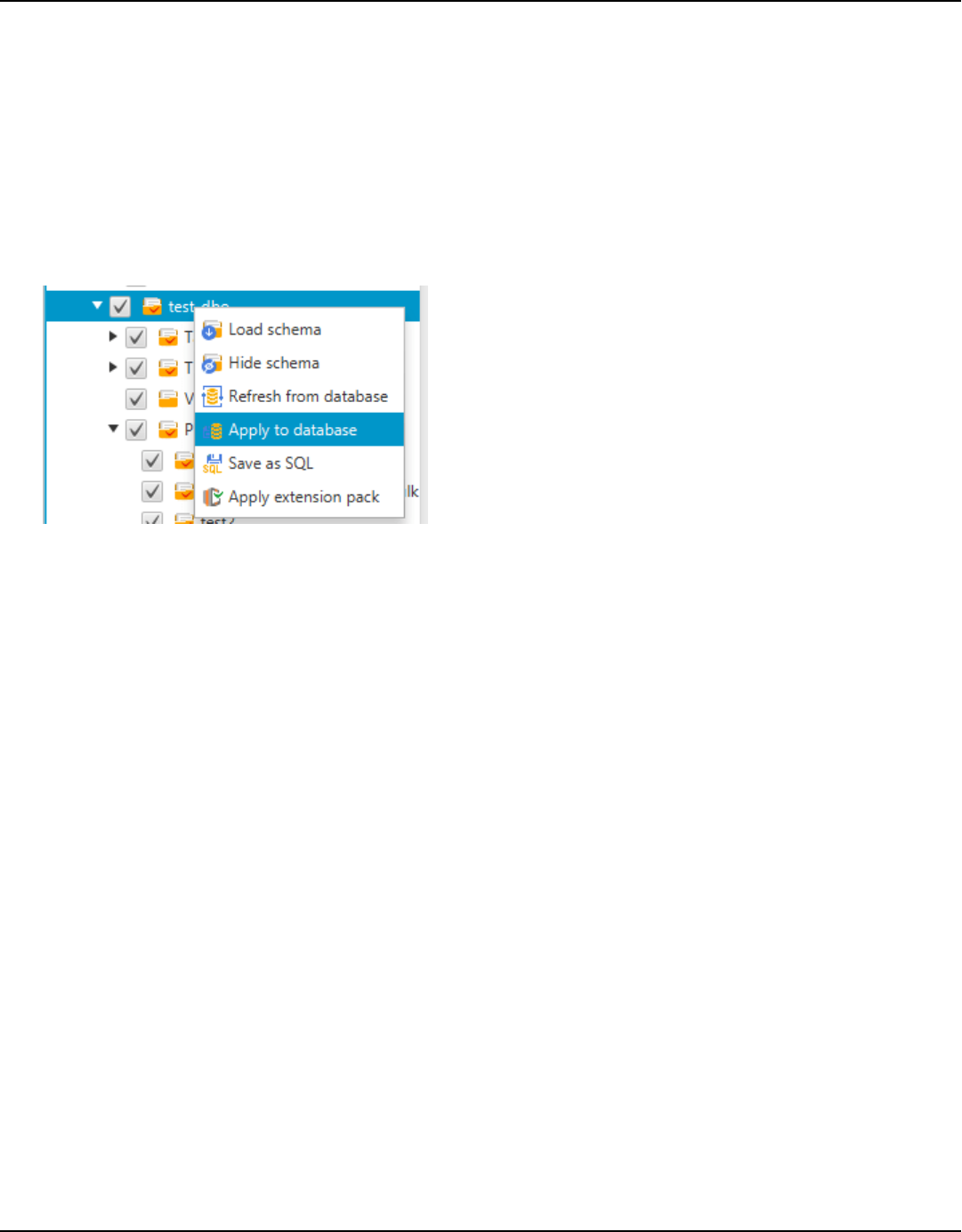

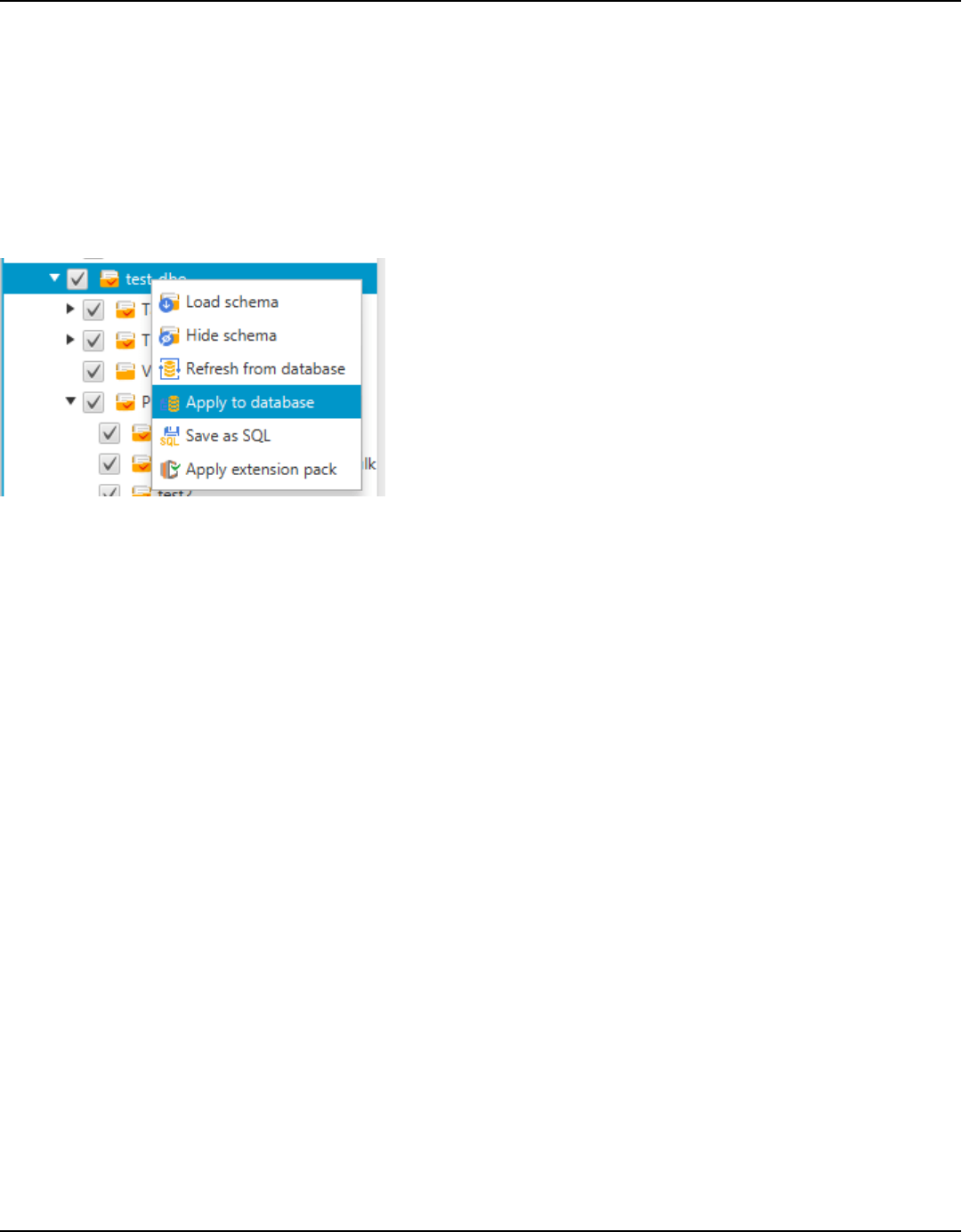

Saving and applying converted schemas ............................................................................................ 295

Saving your converted schema ........................................................................................................ 295

Applying your converted schema .................................................................................................... 296

The extension pack schema ............................................................................................................. 296

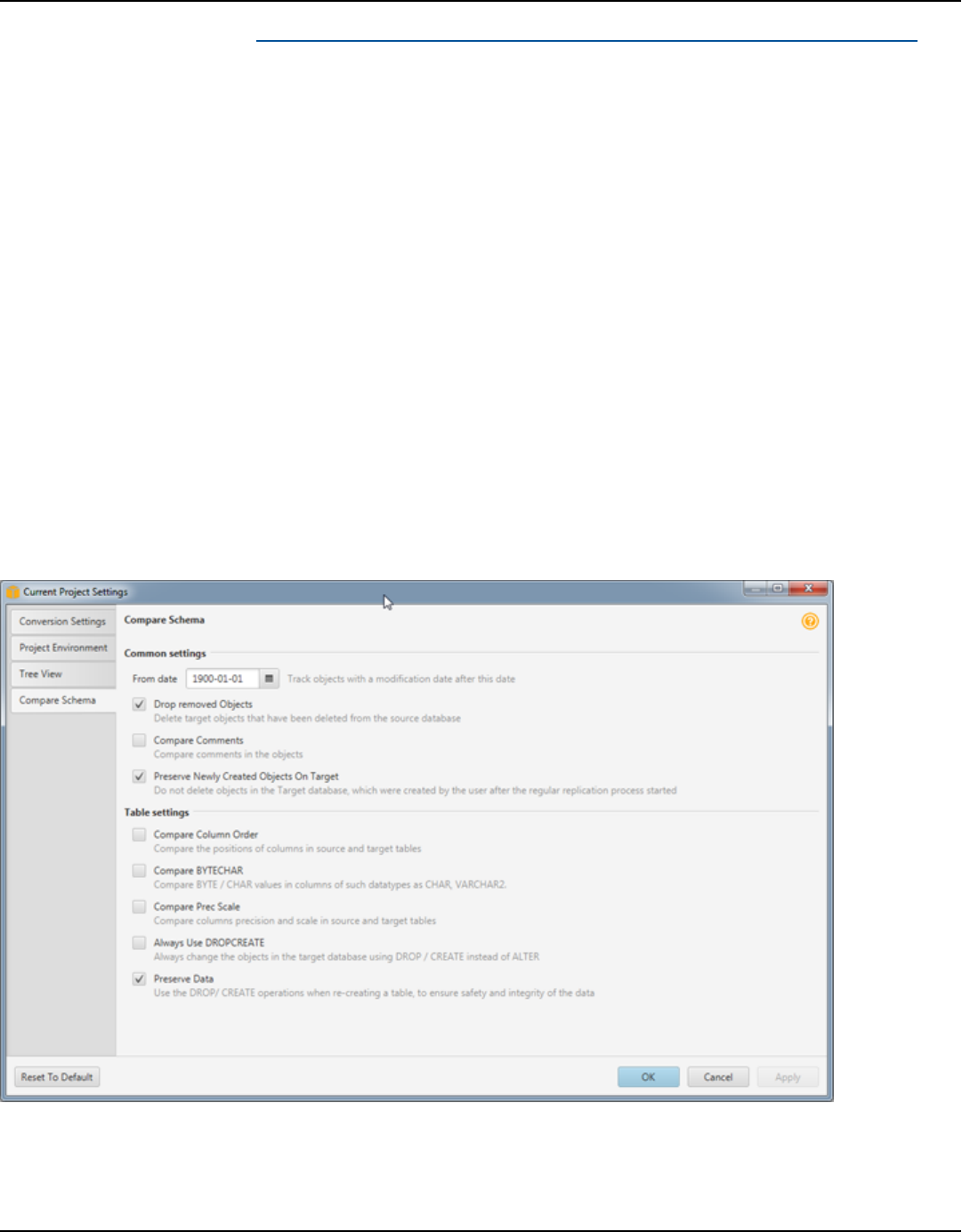

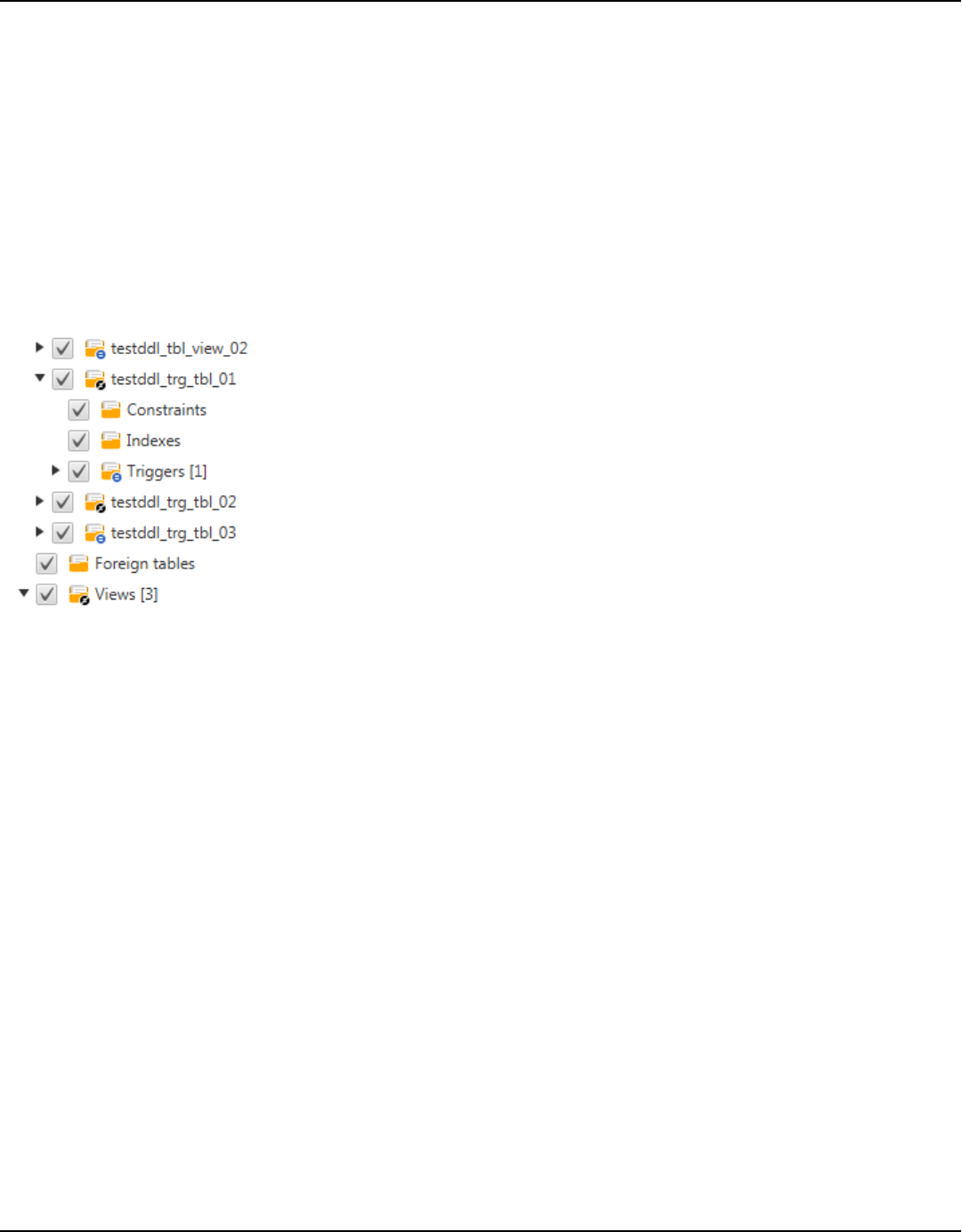

Comparing schemas ................................................................................................................................ 297

Viewing related transformed objects .................................................................................................. 298

Converting data warehouse schemas ......................................................................................... 300

Permissions for Amazon Redshift ........................................................................................................ 301

Choosing optimization strategies and rules ....................................................................................... 303

Version 1.0.672 vi

AWS Schema Conversion Tool User Guide

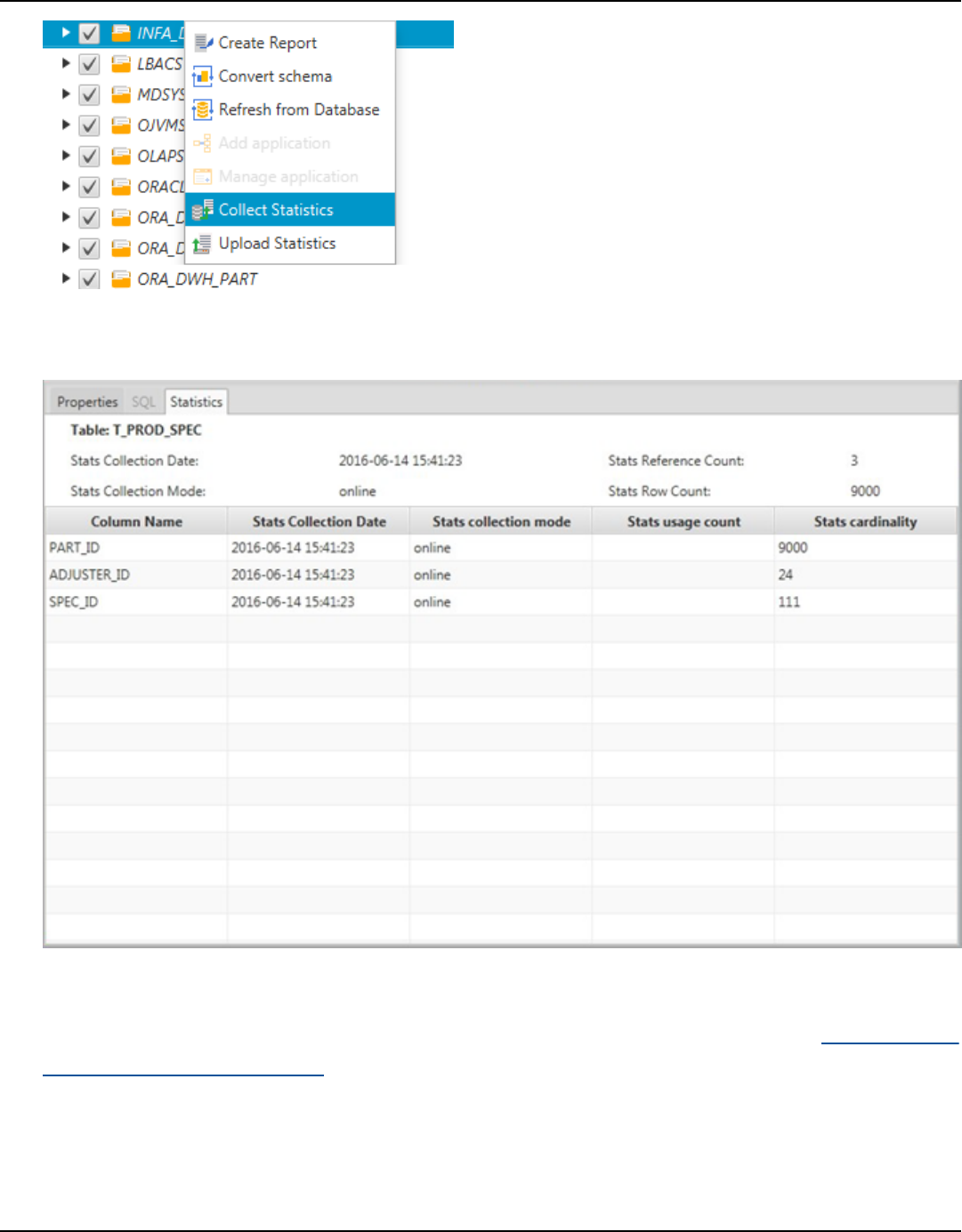

Collecting or uploading statistics ......................................................................................................... 304

Creating migration rules ........................................................................................................................ 306

Creating migration rules ................................................................................................................... 306

Exporting migration rules ................................................................................................................. 308

Converting your schema ........................................................................................................................ 309

Converting schema ............................................................................................................................. 309

Editing converted schema ................................................................................................................ 311

Clearing a converted schema ........................................................................................................... 312

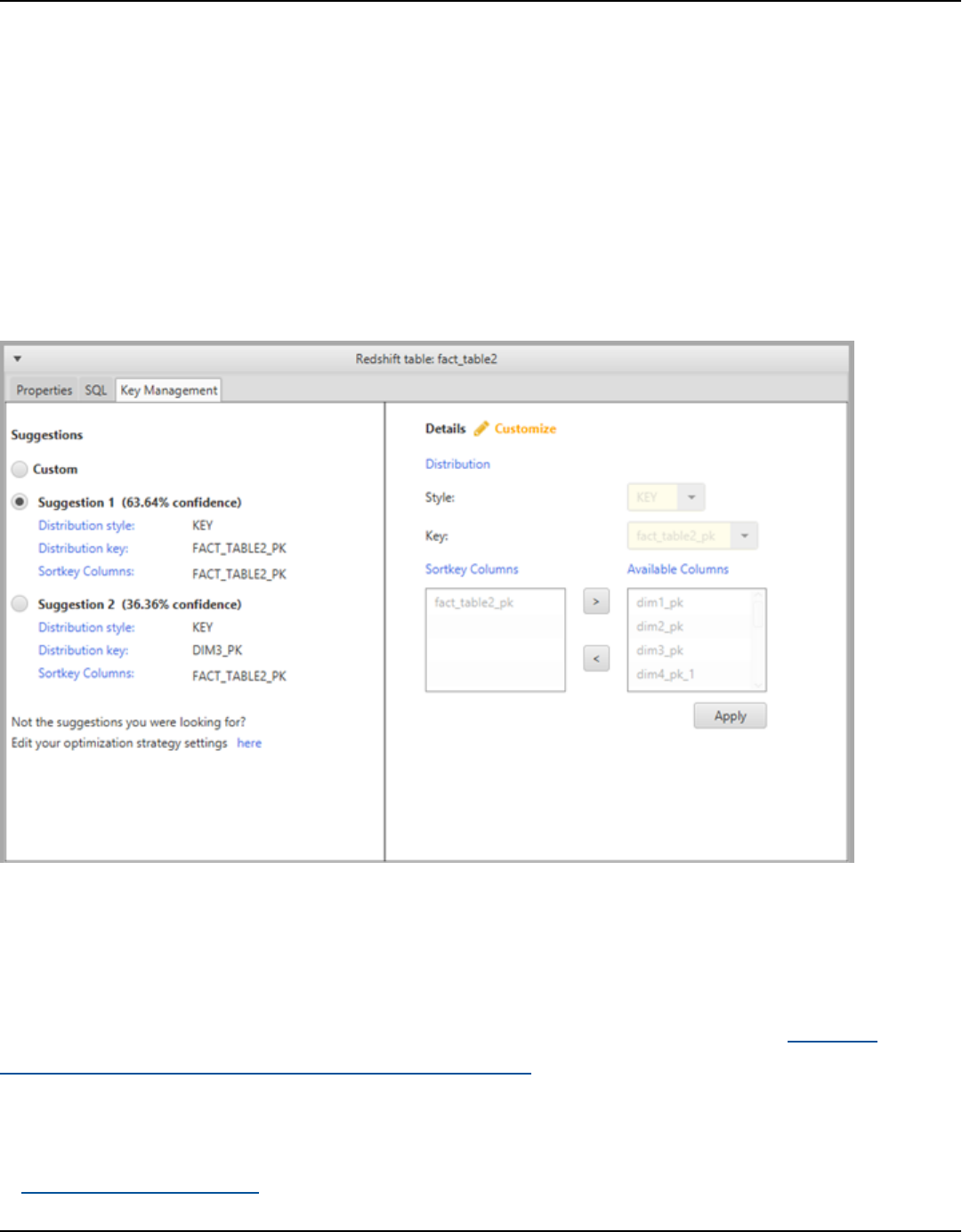

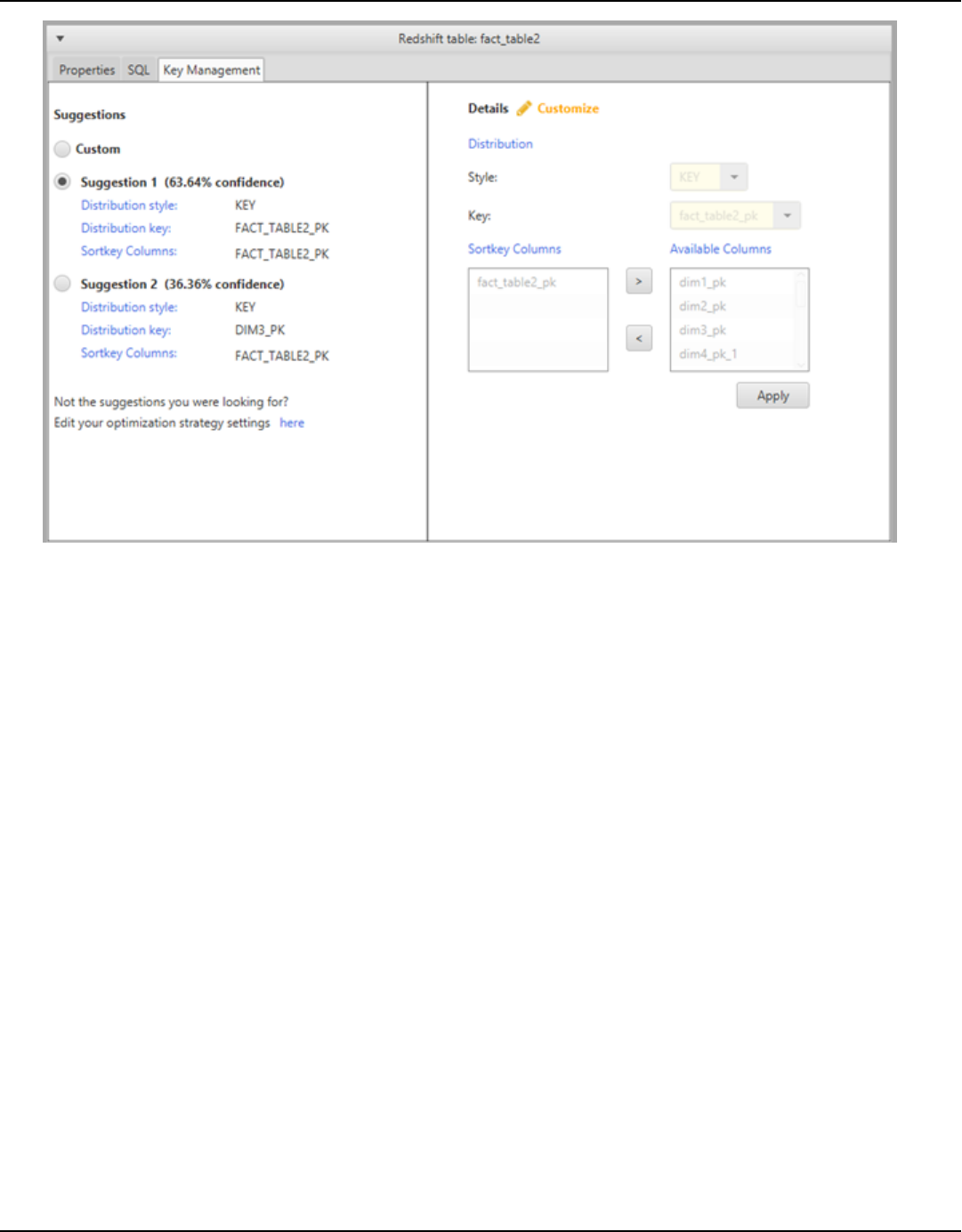

Managing and customizing keys .......................................................................................................... 313

Related topics ...................................................................................................................................... 313

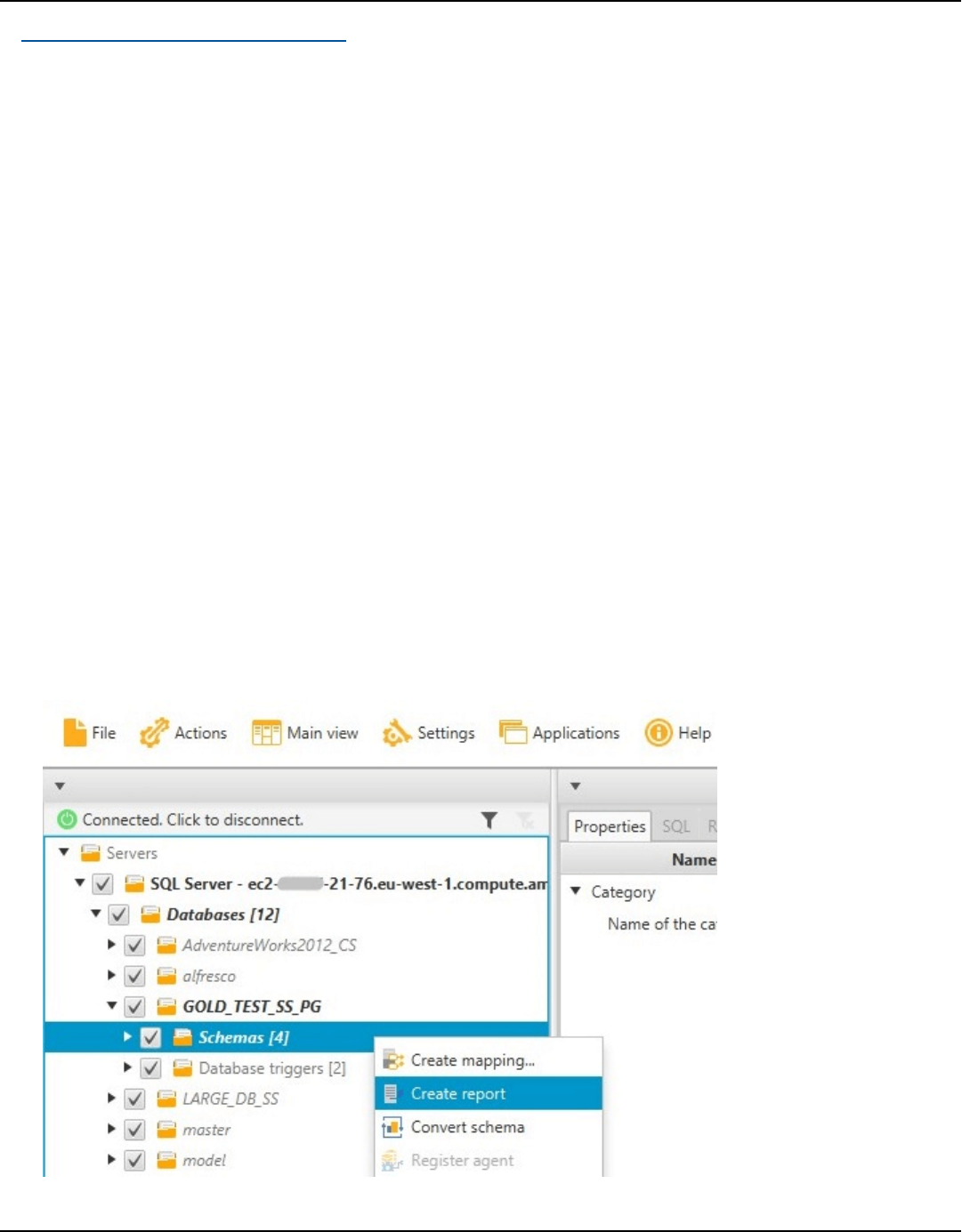

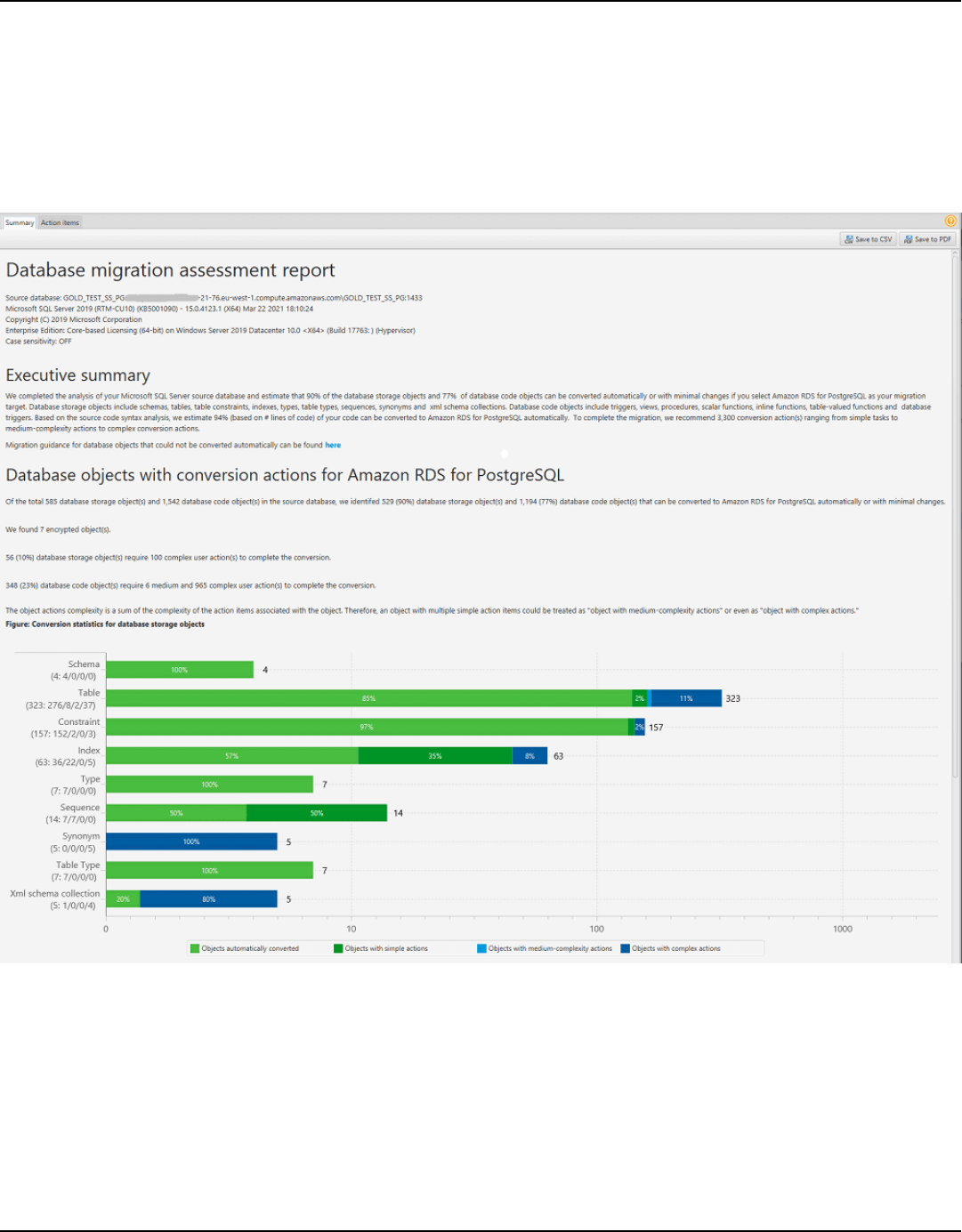

Creating and using the assessment report ......................................................................................... 314

Creating a database migration assessment report ...................................................................... 314

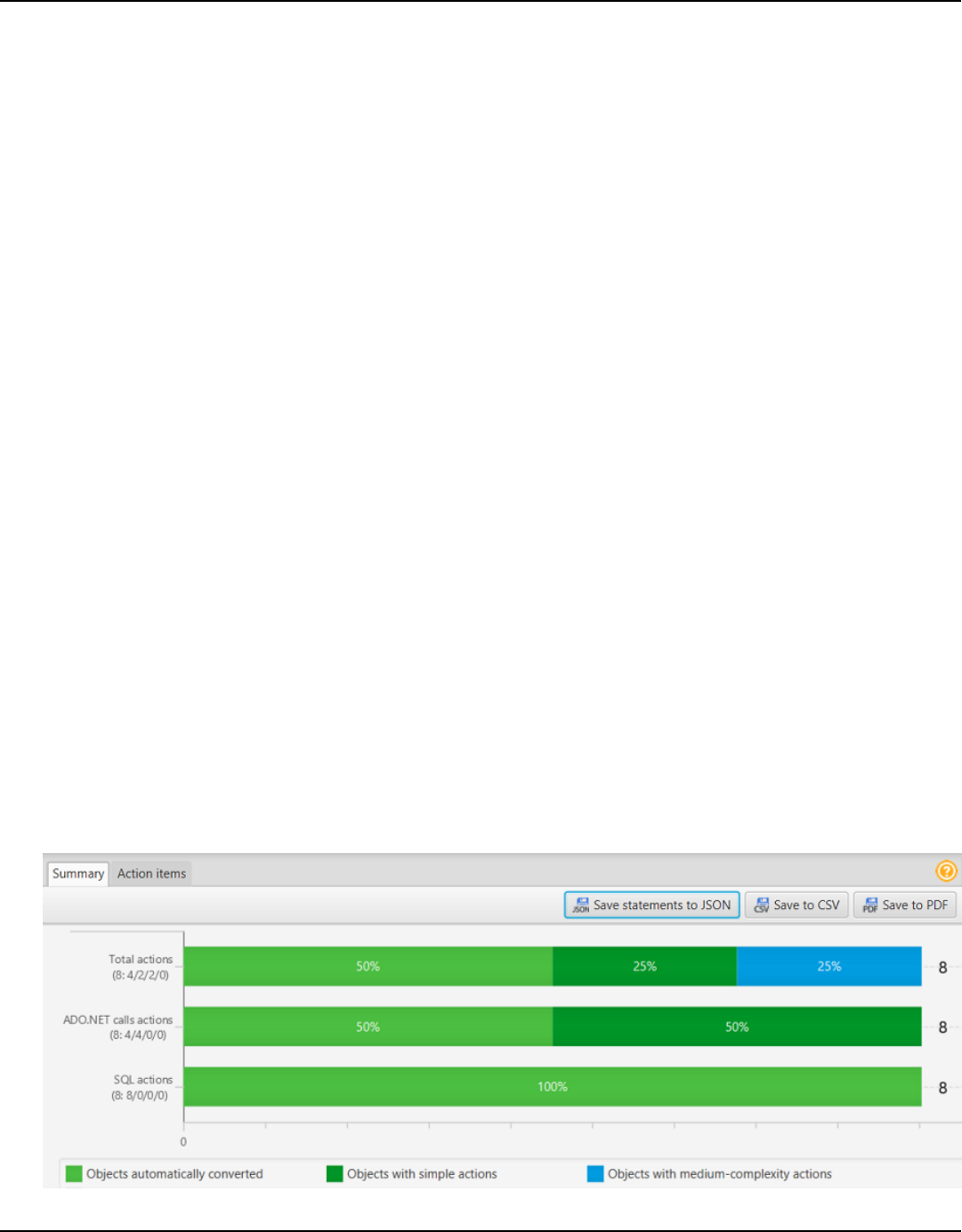

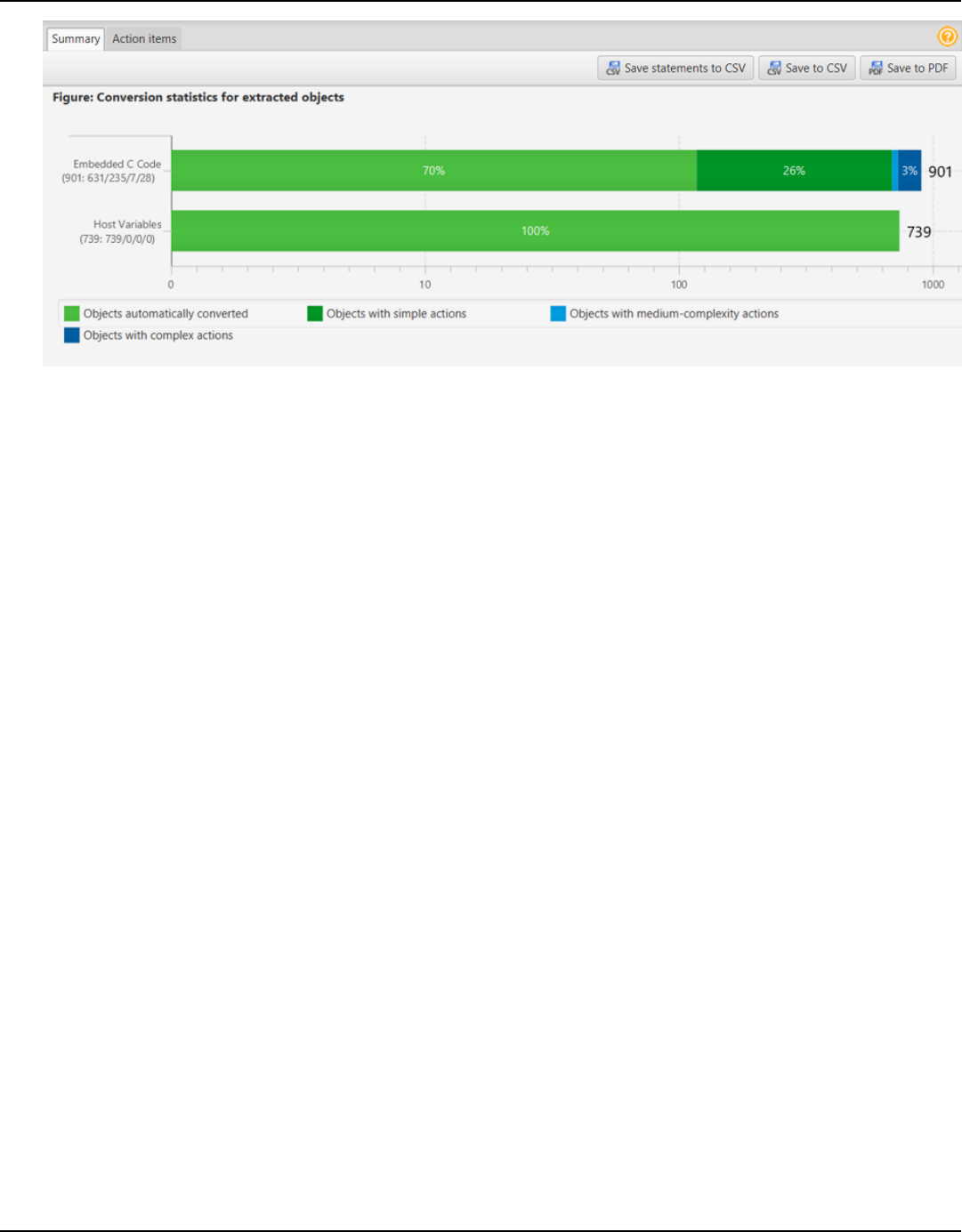

Summary .............................................................................................................................................. 315

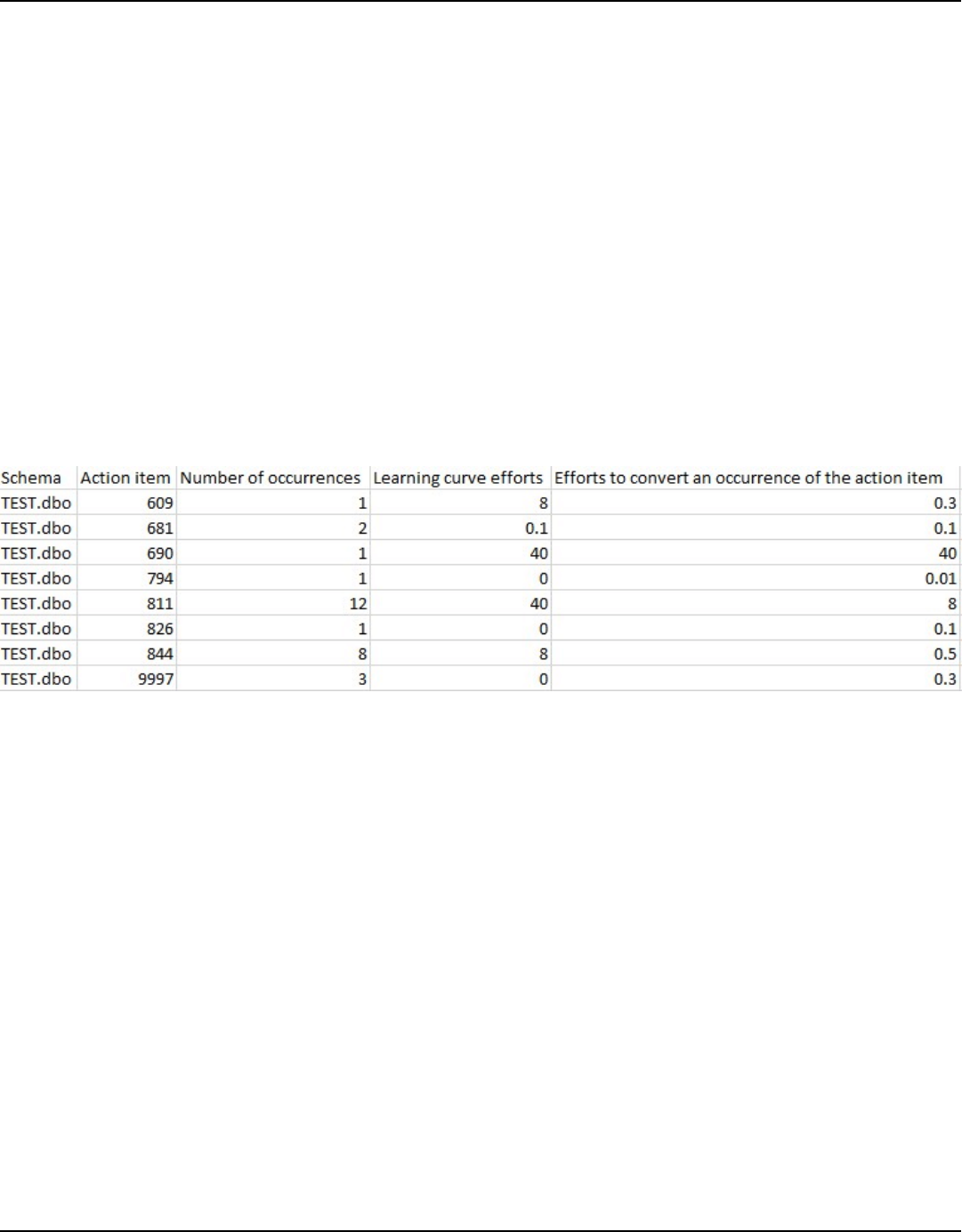

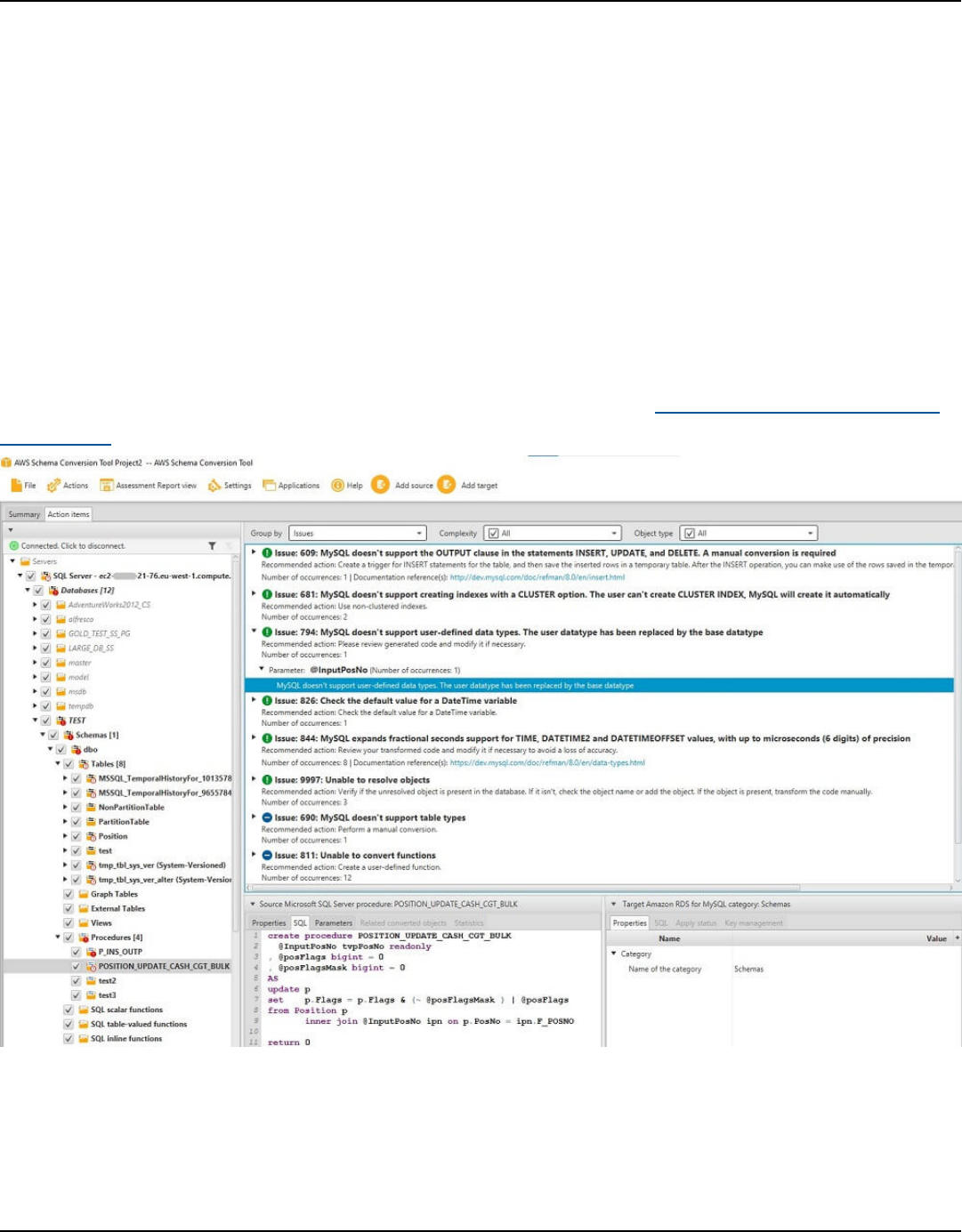

Action items ......................................................................................................................................... 316

Saving the assessment report ......................................................................................................... 317

Handling manual conversions ............................................................................................................... 318

Modifying your source schema ........................................................................................................ 318

Modifying your target schema ........................................................................................................ 318

Updating and refreshing your converted schema ............................................................................. 319

Saving and applying your converted schema .................................................................................... 319

Saving your converted schema to a file ........................................................................................ 320

Applying your converted schema .................................................................................................... 320

The extension pack schema ............................................................................................................. 321

Python libraries ................................................................................................................................... 322

Converting Amazon Redshift data ....................................................................................................... 322

Optimizing your Amazon Redshift database ................................................................................ 322

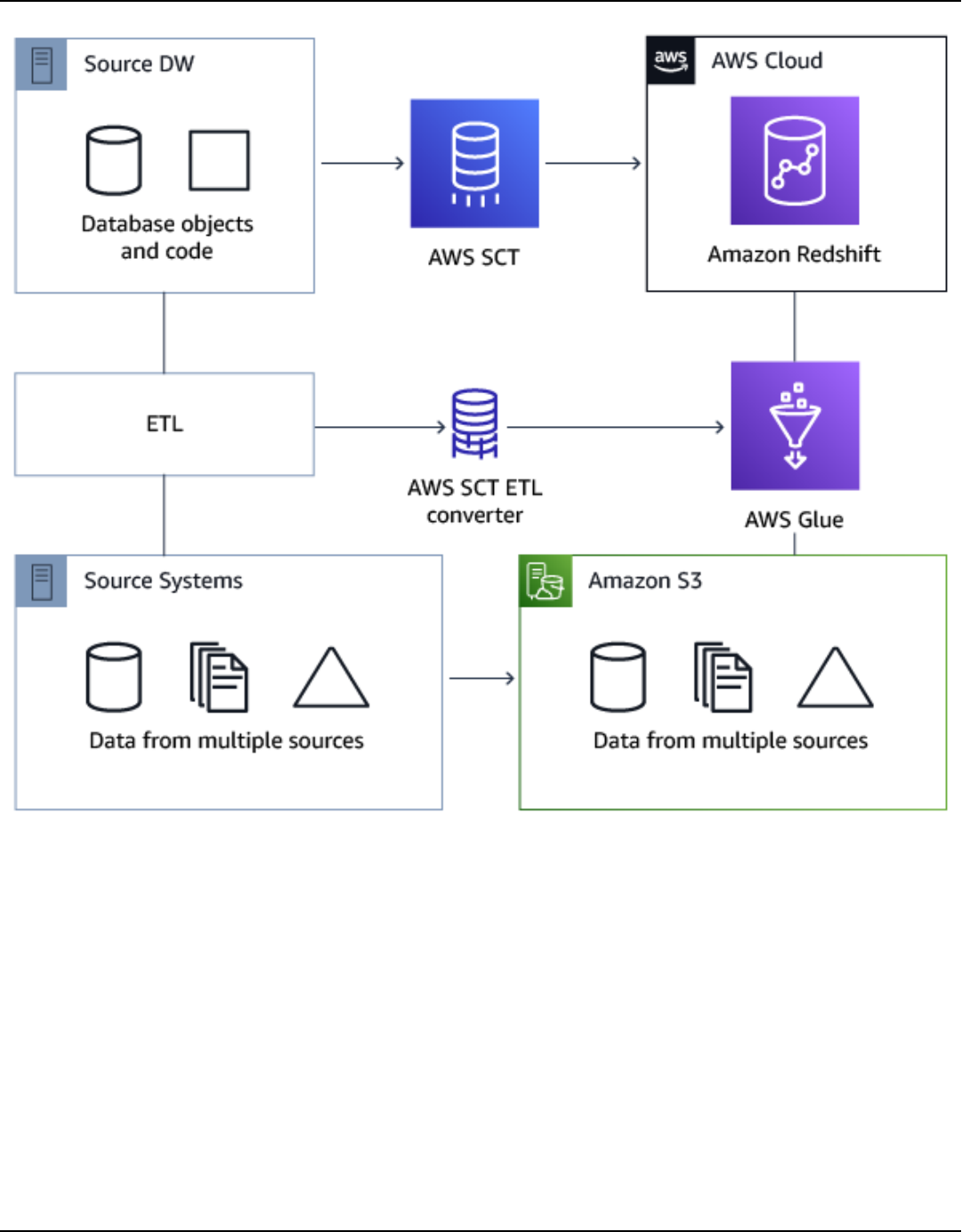

Converting Data Using ETL ......................................................................................................... 324

ETL processes ............................................................................................................................................ 325

Prerequisites ........................................................................................................................................ 326

AWS Glue Data Catalog .................................................................................................................... 327

Limitations ............................................................................................................................................ 327

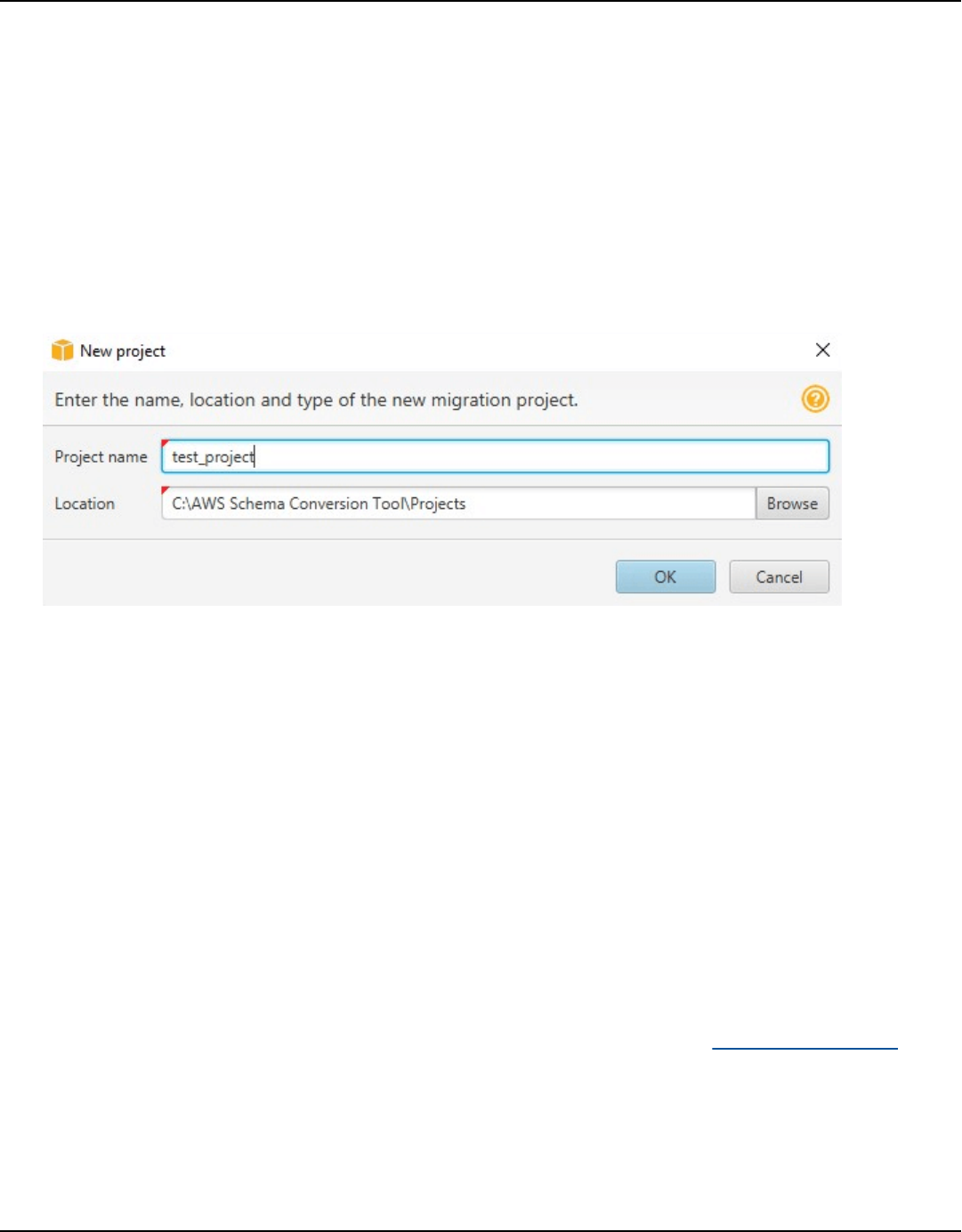

Step 1: Create a new project ........................................................................................................... 329

Step 2: Create an AWS Glue job ..................................................................................................... 330

ETL processes using Python .................................................................................................................. 331

Step 1: Create a database ................................................................................................................ 331

Step 2: Create a connection ............................................................................................................. 332

Version 1.0.672 vii

AWS Schema Conversion Tool User Guide

Step 3: Create an AWS Glue crawler .............................................................................................. 333

Informatica ETL scripts ........................................................................................................................... 336

SSIS packages ........................................................................................................................................... 341

Supported SSIS components ........................................................................................................... 345

SSIS to AWS Glue Studio ....................................................................................................................... 347

Prerequisites ........................................................................................................................................ 347

Adding SSIS packages to your AWS SCT project ......................................................................... 349

Converting SSIS packages ................................................................................................................ 350

Creating AWS Glue Studio jobs ....................................................................................................... 351

Creating an SSIS conversion assessment report .......................................................................... 352

Supported SSIS components ........................................................................................................... 353

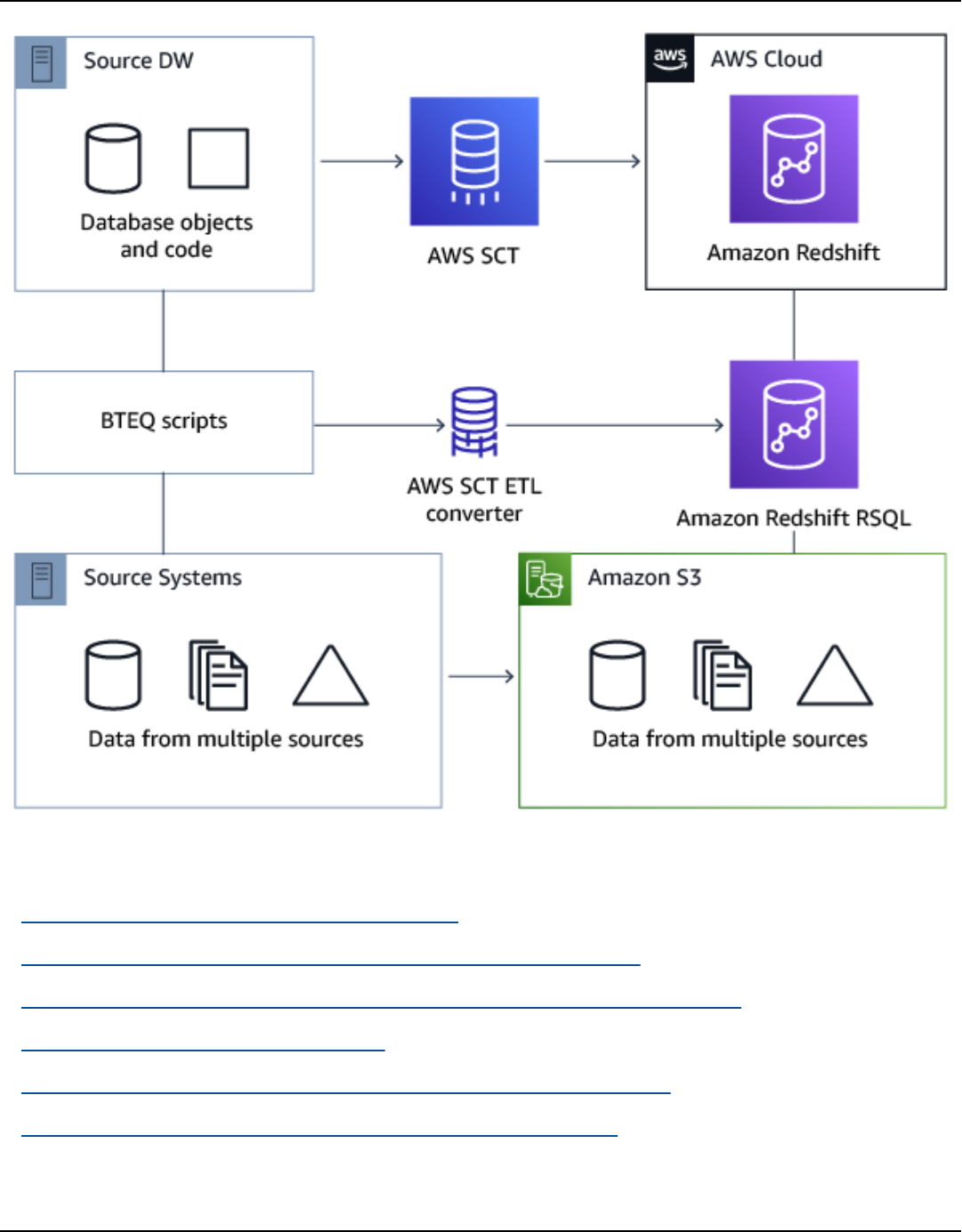

Teradata BTEQ scripts ............................................................................................................................. 354

Adding BTEQ scripts to your AWS SCT project ............................................................................ 356

Configuring substitution variables in BTEQ scripts ..................................................................... 356

Converting BTEQ scripts ................................................................................................................... 357

Managing BTEQ scripts ..................................................................................................................... 358

Creating a BTEQ script conversion assessment report ............................................................... 359

Editing and saving your converted BTEQ scripts ......................................................................... 359

Shell scripts ............................................................................................................................................... 360

Adding shell scripts to your AWS SCT project .............................................................................. 361

Configuring substitution variables in shell scripts ....................................................................... 362

Converting shell scripts ..................................................................................................................... 362

Managing shell scripts ....................................................................................................................... 363

Creating a shell script conversion assessment report ................................................................. 364

Editing and saving your converted shell scripts ........................................................................... 365

FastExport scripts ..................................................................................................................................... 365

Adding FastExport job scripts to your AWS SCT project ............................................................ 366

Configuring substitution variables in FastExport job scripts ..................................................... 367

Converting FastExport job scripts ................................................................................................... 368

Managing FastExport job scripts ..................................................................................................... 369

Creating a FastExport job script conversion assessment report ............................................... 369

Editing and saving your converted FastExport job scripts ......................................................... 370

FastLoad scripts ........................................................................................................................................ 371

Adding FastLoad job scripts to your AWS SCT project ............................................................... 371

Configuring substitution variables in FastLoad job scripts ........................................................ 372

Converting FastLoad job scripts ...................................................................................................... 374

Version 1.0.672 viii

AWS Schema Conversion Tool User Guide

Managing FastLoad job scripts ........................................................................................................ 375

Creating a FastLoad job script conversion assessment report .................................................. 375

Editing and saving your converted FastLoad job scripts ............................................................ 376

MultiLoad scripts .................................................................................................................................... 377

Adding MultiLoad job scripts to your AWS SCT project ............................................................. 377

Configuring substitution variables in MultiLoad job scripts ...................................................... 378

Converting MultiLoad job scripts .................................................................................................... 379

Managing MultiLoad job scripts ...................................................................................................... 380

Creating a MultiLoad job script conversion assessment report ................................................ 381

Editing and saving your converted MultiLoad job scripts .......................................................... 382

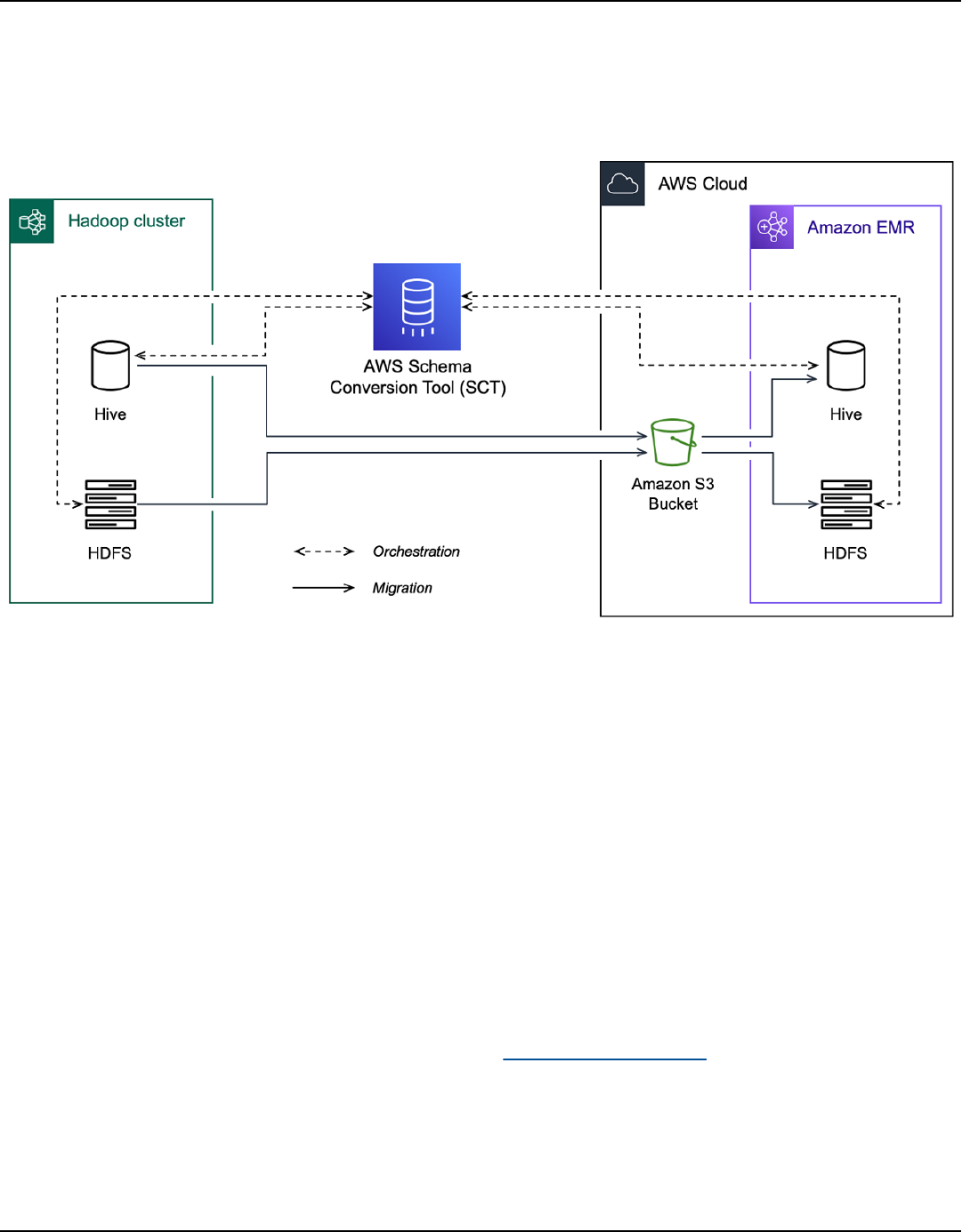

Migrating big data frameworks .................................................................................................. 383

Migrating Hadoop workloads ................................................................................................................ 383

Overview ............................................................................................................................................... 384

Step 1: Connect to your Hadoop clusters ..................................................................................... 385

Step 2: Set up the mapping rules .................................................................................................. 385

Step 3: Create an assessment report ............................................................................................. 386

Step 4: Migrate your Apache Hadoop cluster to Amazon EMR ................................................. 388

Running your CLI script ..................................................................................................................... 389

Managing your migration project ................................................................................................... 389

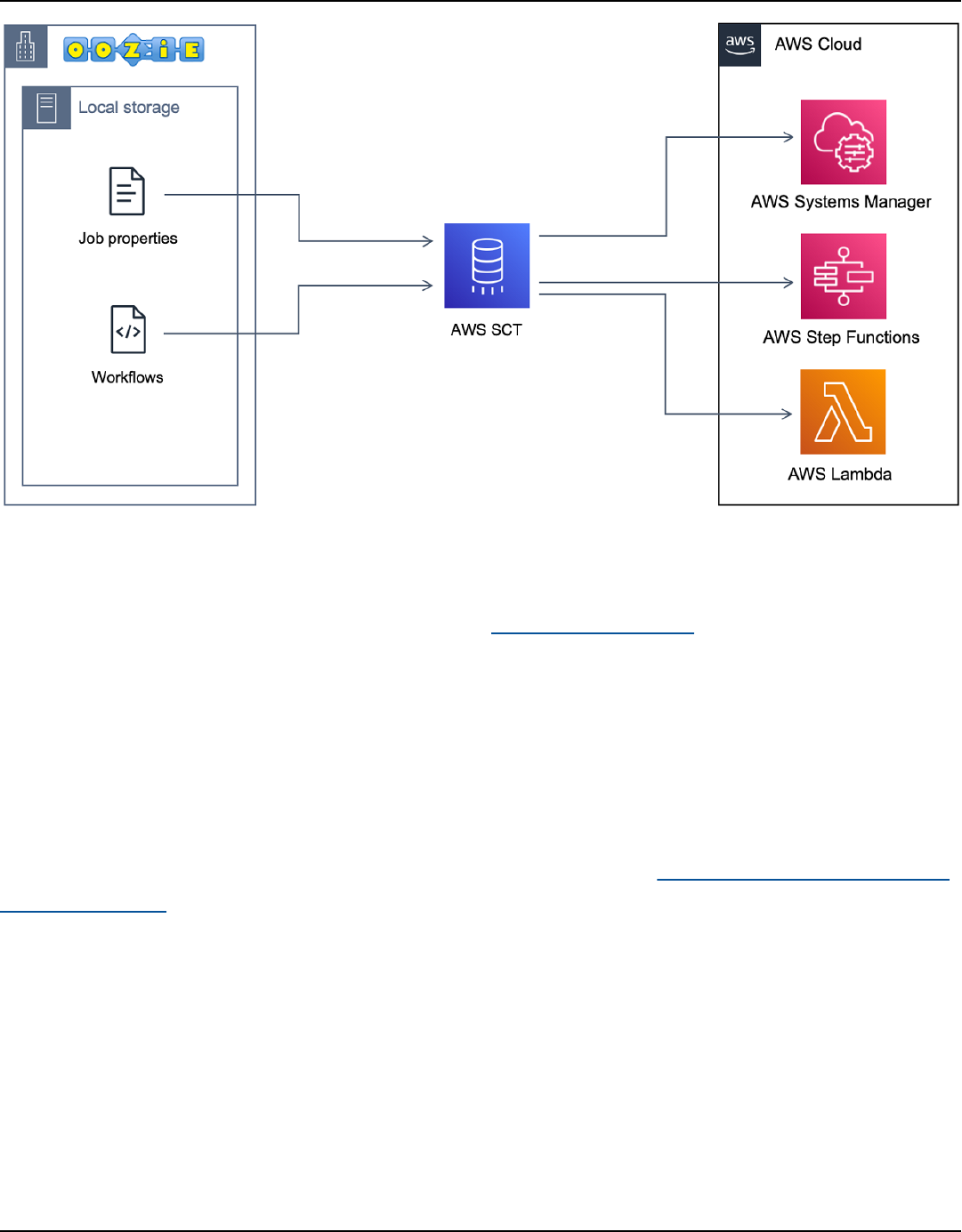

Converting Oozie workflows; ................................................................................................................ 391

Overview ............................................................................................................................................... 392

Step 1: Connect to your source and target services ................................................................... 393

Step 2: Set up the mapping rules .................................................................................................. 394

Step 3: Configure parameters .......................................................................................................... 394

Step 4: Create an assessment report ............................................................................................. 396

Step 5: Convert your Apache Oozie workflows to AWS Step Functions .................................. 398

Running your CLI script ..................................................................................................................... 400

Supported nodes ................................................................................................................................ 401

Integrating with AWS DMS ......................................................................................................... 402

Using an AWS SCT replication agent with AWS DMS ...................................................................... 402

Using an AWS SCT data extraction agent with AWS DMS .............................................................. 402

Increasing logging levels when using AWS SCT with AWS DMS ................................................... 402

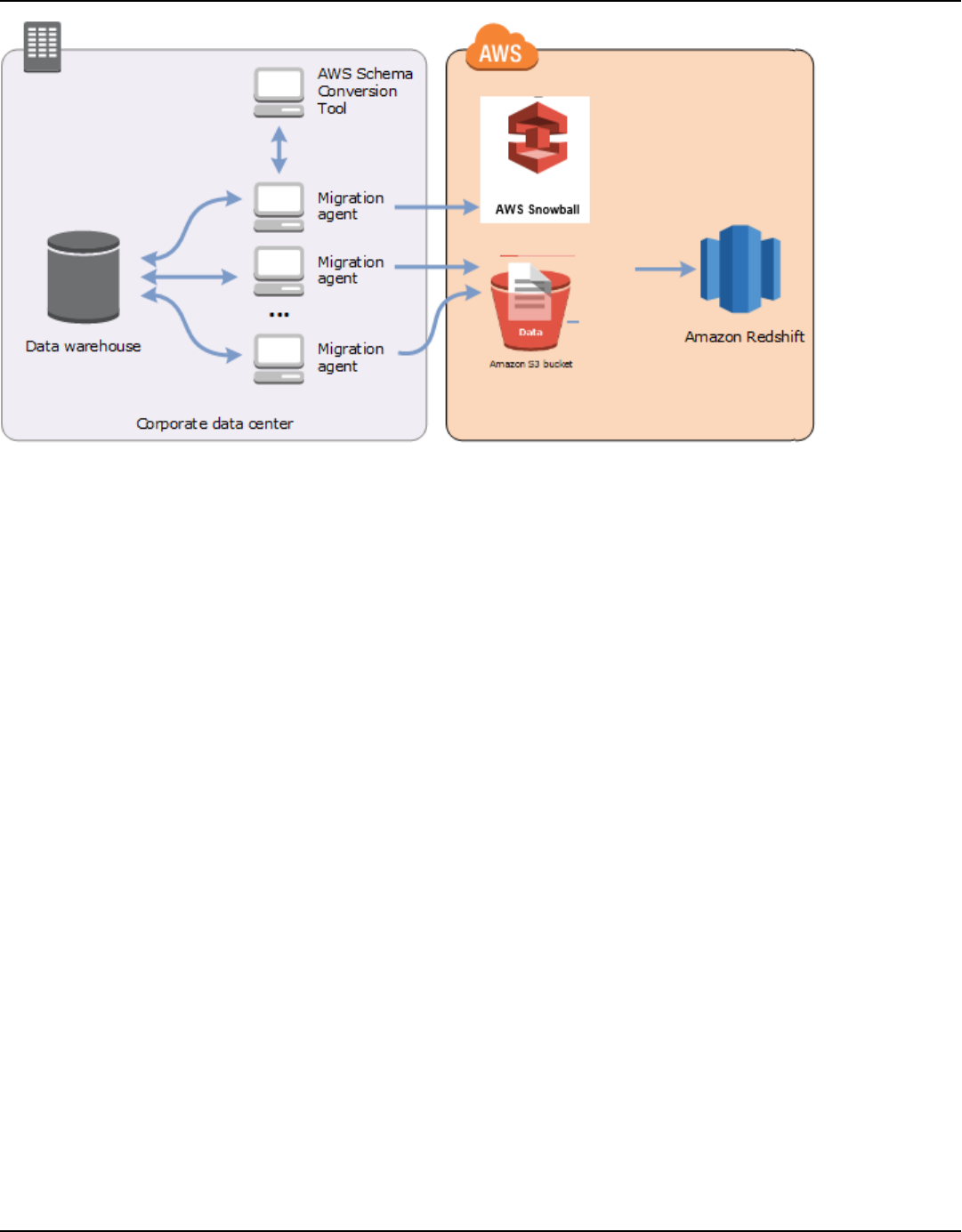

Migrating from a data warehouse .............................................................................................. 404

Prerequisites .............................................................................................................................................. 406

Amazon S3 settings ........................................................................................................................... 407

Assuming IAM roles ............................................................................................................................ 408

Version 1.0.672 ix

AWS Schema Conversion Tool User Guide

Security settings ................................................................................................................................. 409

Configuration settings ....................................................................................................................... 410

Installing agents ....................................................................................................................................... 410

Configuring agents .................................................................................................................................. 412

Installing and configuring dedicated copying agents ................................................................. 414

Starting agents ................................................................................................................................... 415

Registering agents ................................................................................................................................... 416

Hiding and recovering information for an AWS SCT agent ............................................................ 417

Creating data migration rules ............................................................................................................... 418

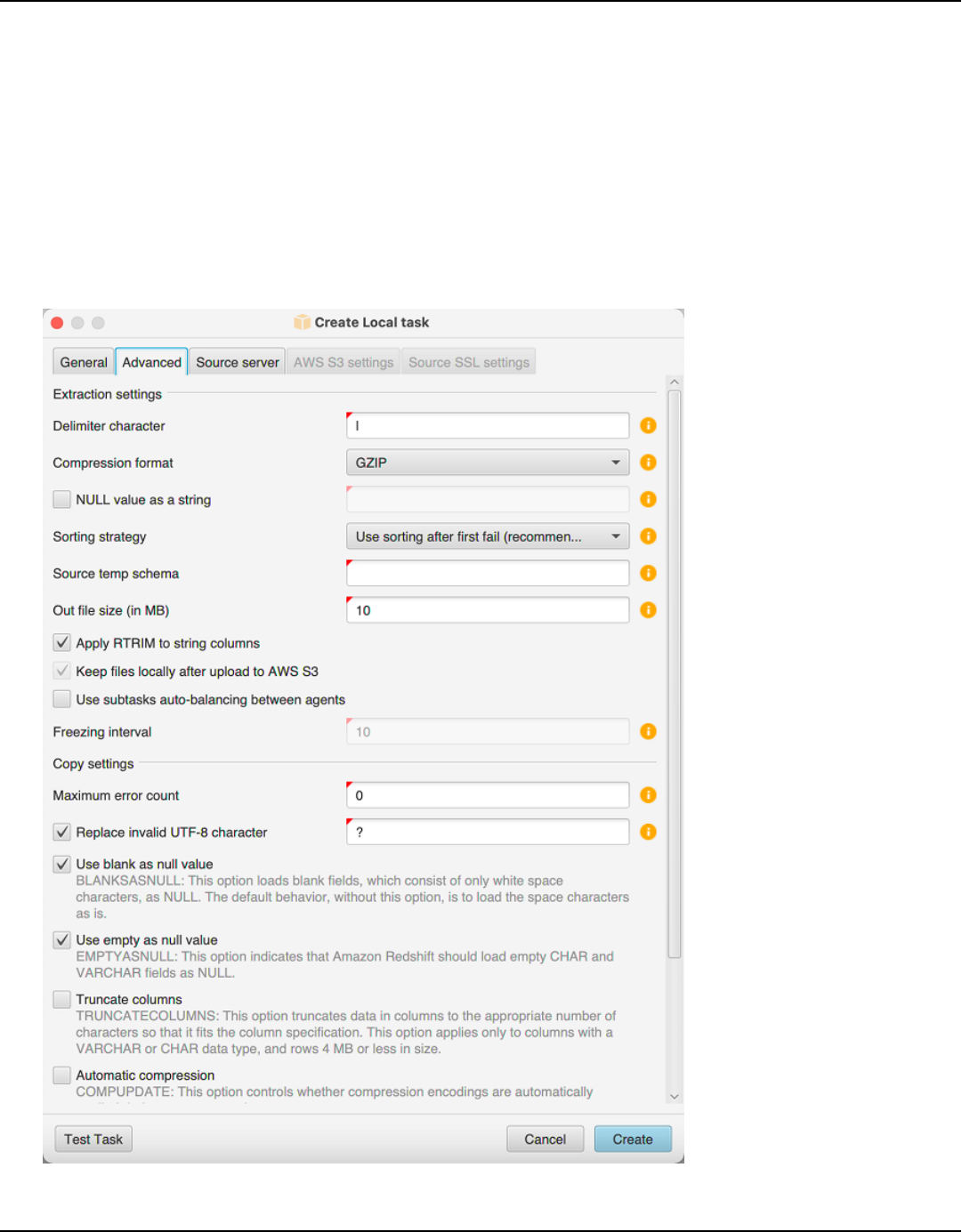

Changing extractor and copy settings for data migration .............................................................. 419

Sorting data .............................................................................................................................................. 422

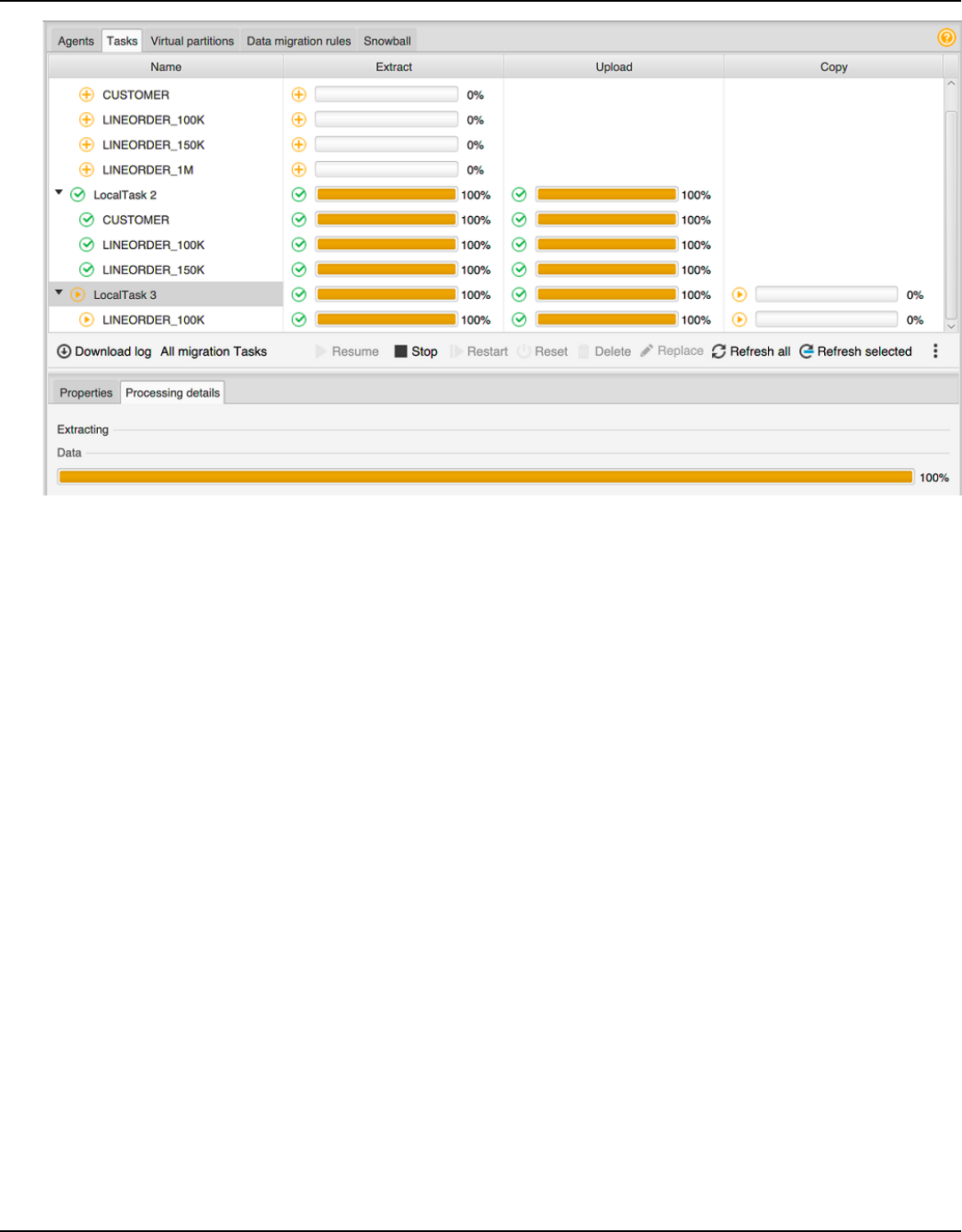

Creating, running, and monitoring an AWS SCT task ...................................................................... 424

Exporting and importing a data extraction task ............................................................................... 427

Data extraction using an AWS Snowball Edge device ...................................................................... 428

Step-by-step procedures for migrating data using AWS SCT and AWS Snowball Edge ....... 428

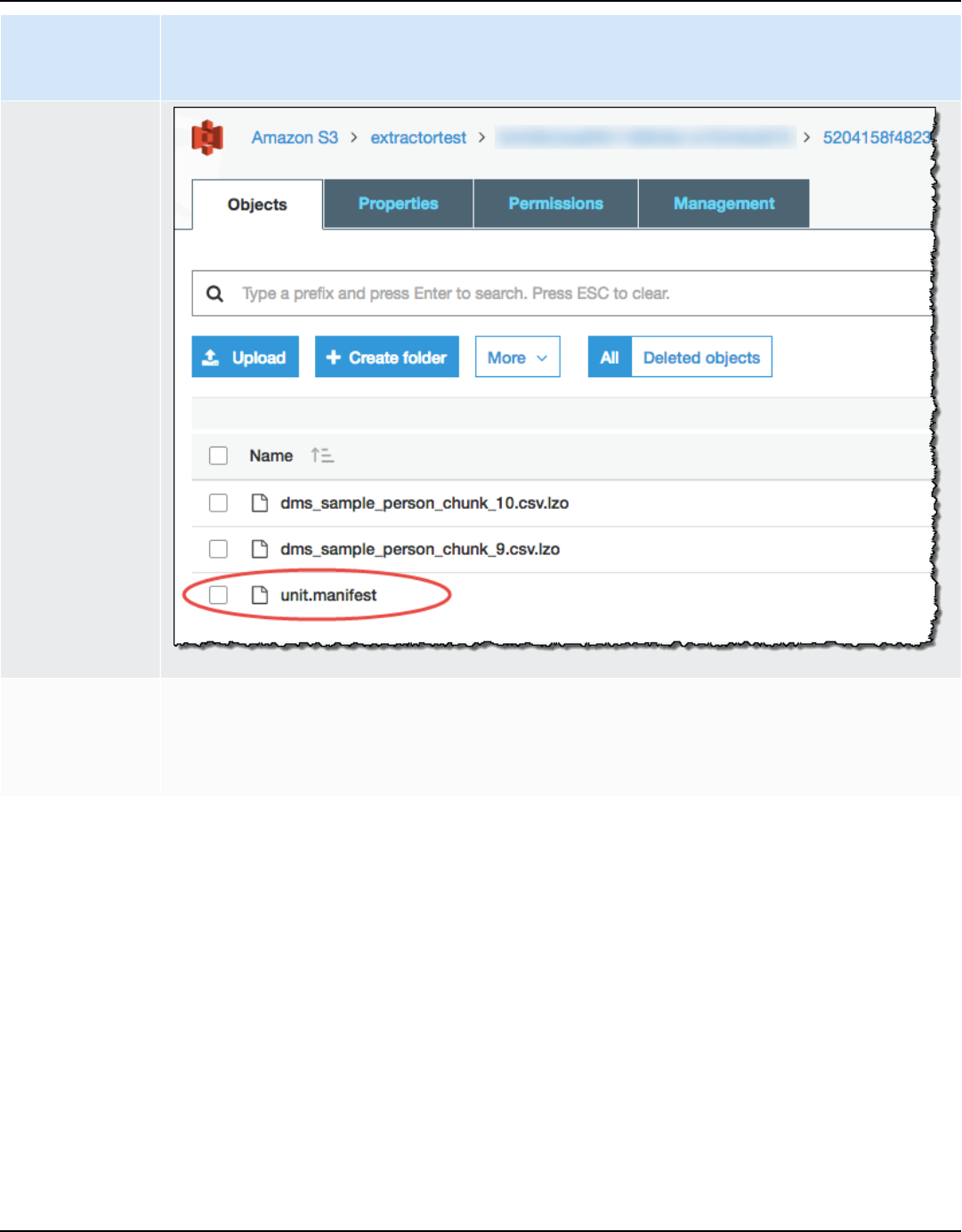

Data extraction task output .................................................................................................................. 432

Using virtual partitioning ....................................................................................................................... 433

Limits when creating virtual partitioning ...................................................................................... 434

RANGE partition type ........................................................................................................................ 434

LIST partition type ............................................................................................................................ 435

DATE AUTO SPLIT partition type .................................................................................................... 436

Using native partitioning ....................................................................................................................... 437

Working with LOBs .................................................................................................................................. 438

Best practices and troubleshooting ..................................................................................................... 439

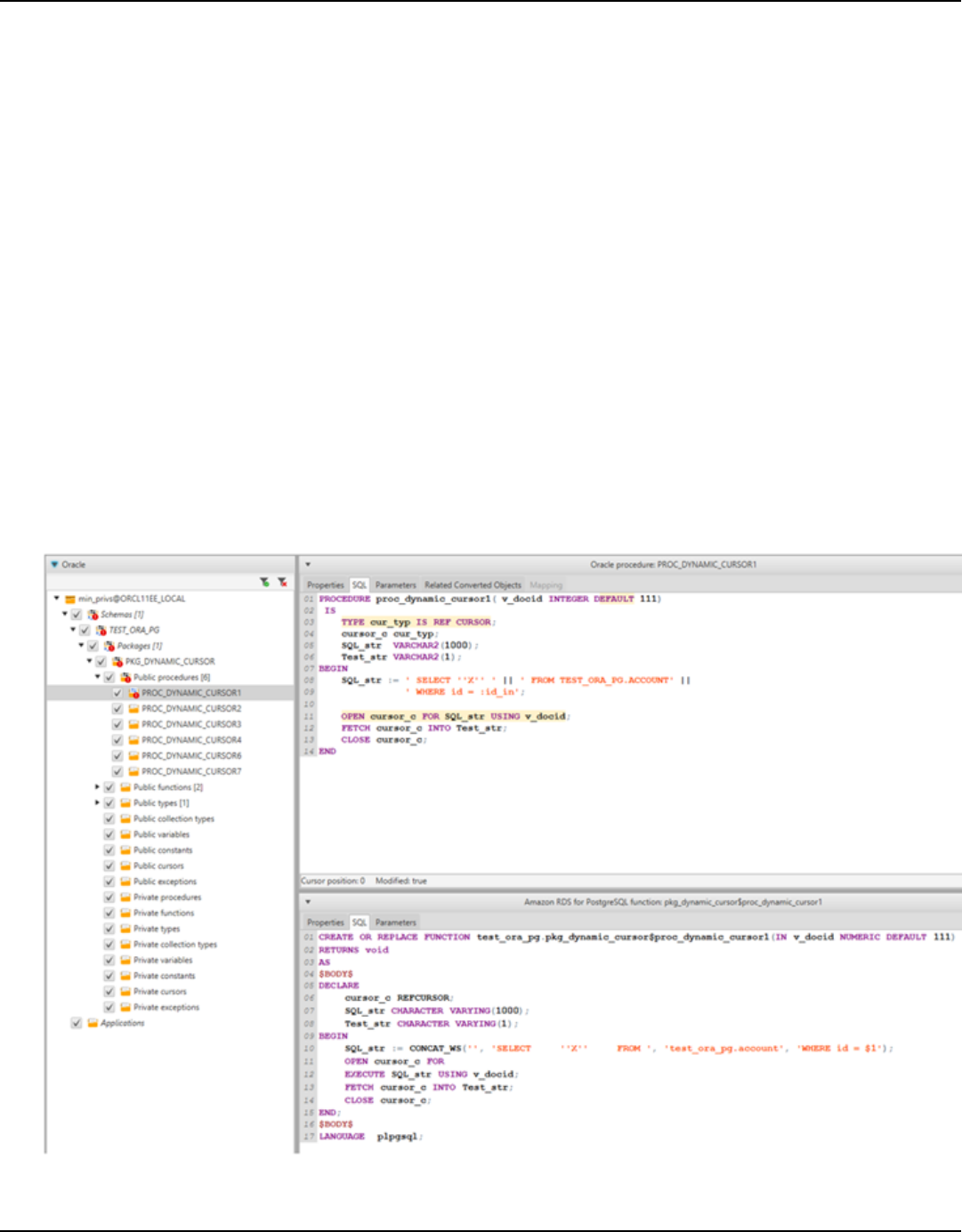

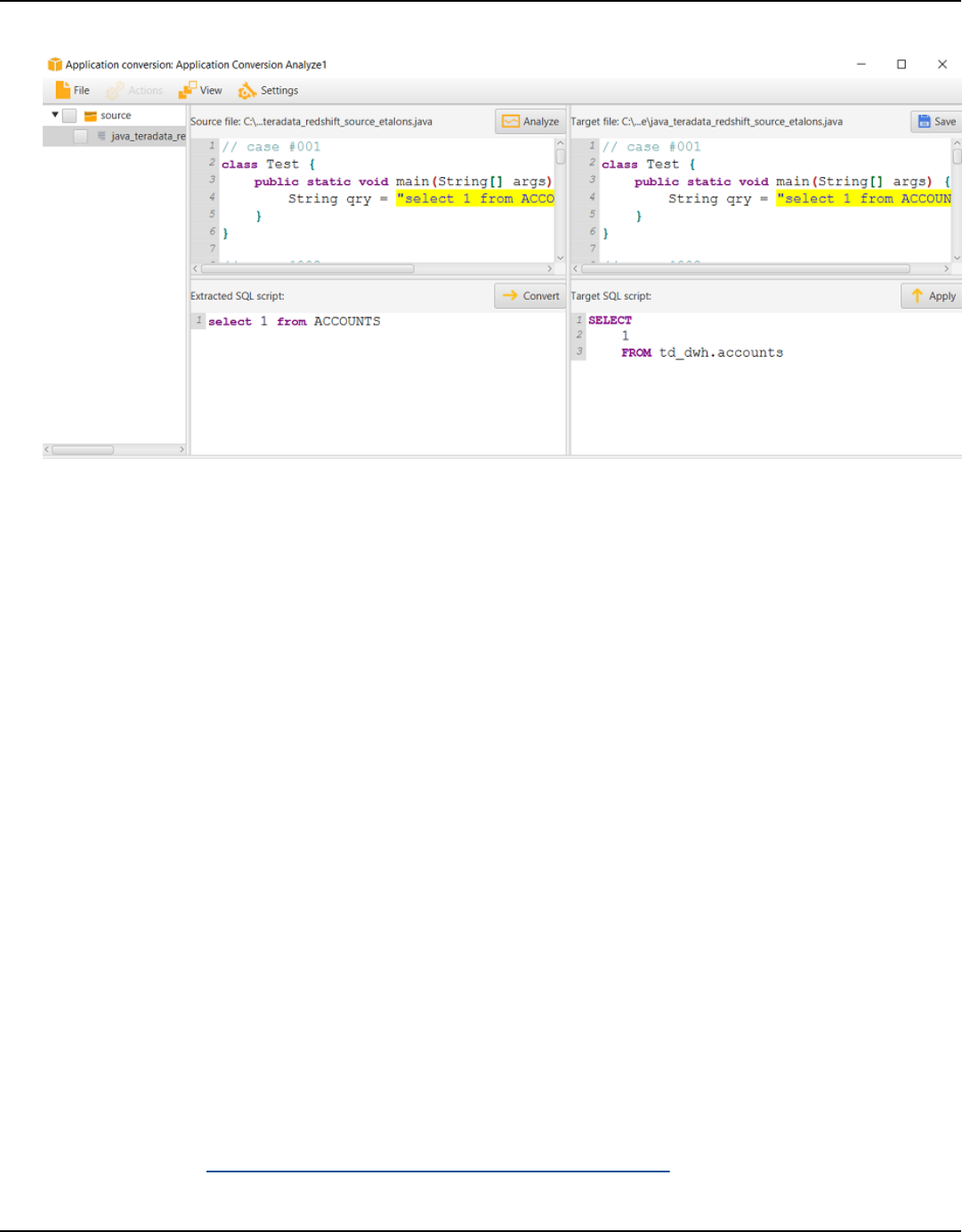

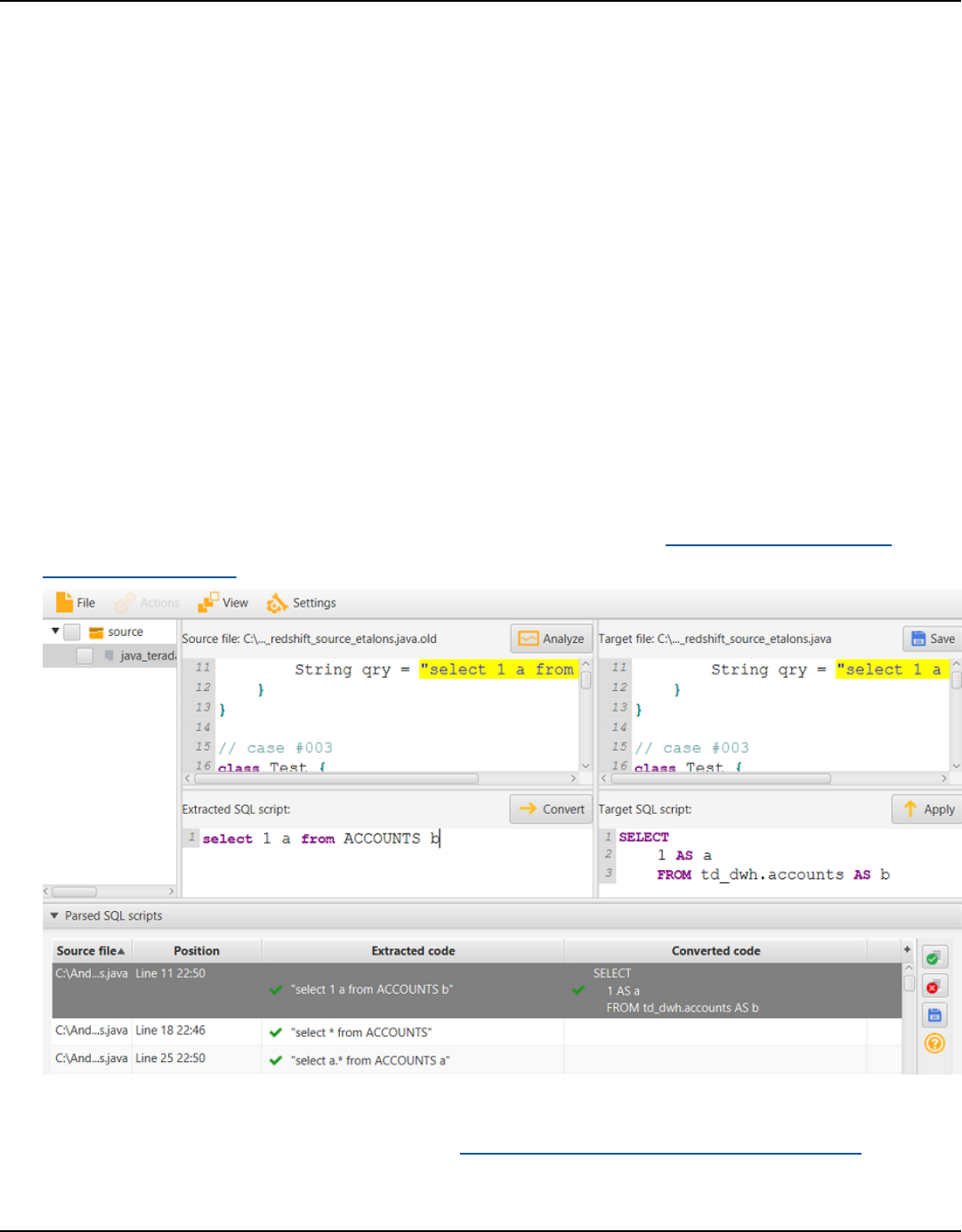

Converting application SQL ........................................................................................................ 441

Overview of converting application SQL ............................................................................................ 441

SQL code .................................................................................................................................................... 442

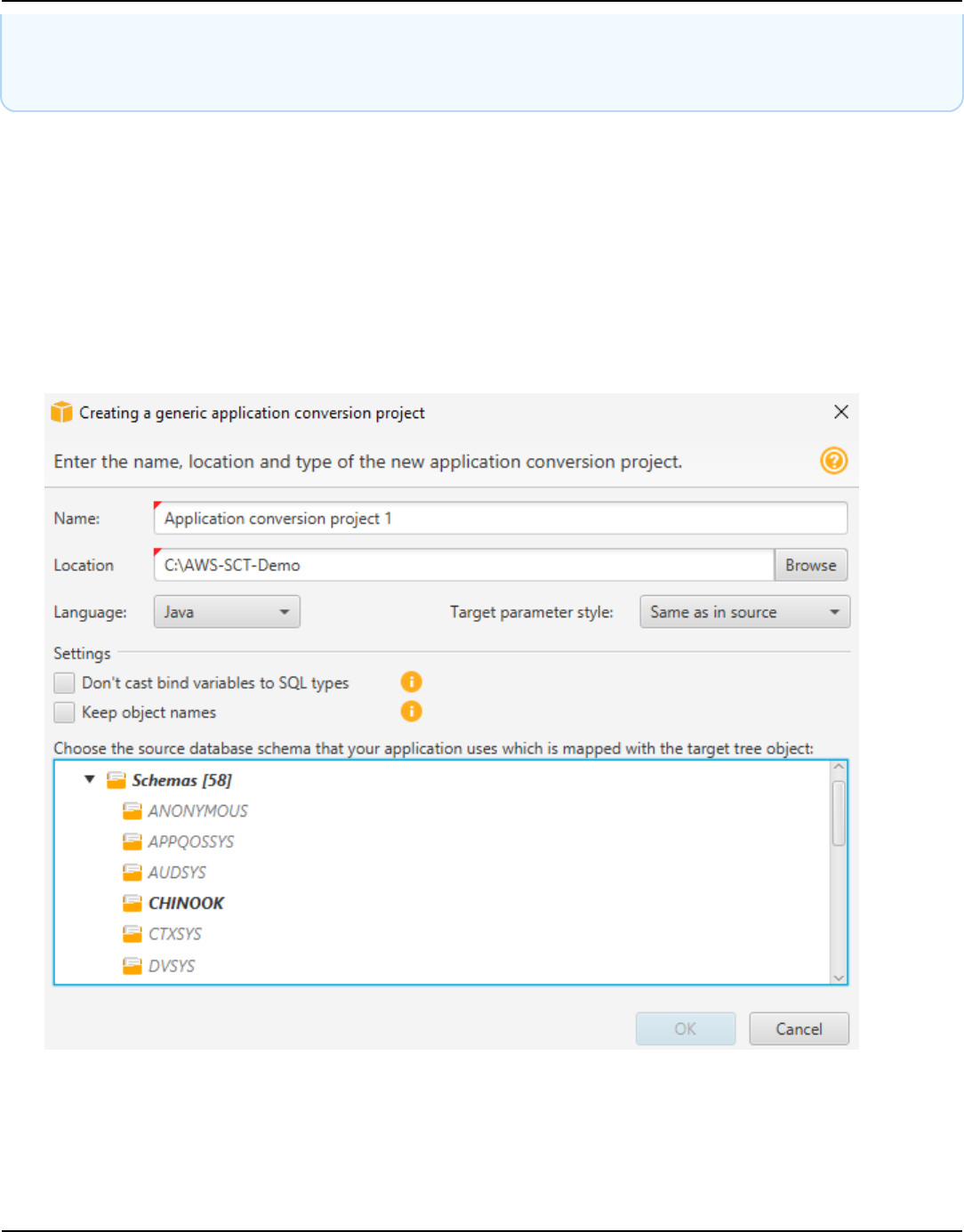

Creating generic application conversion projects ........................................................................ 442

Managing application conversion projects .................................................................................... 446

Analyzing and converting your SQL code ..................................................................................... 447

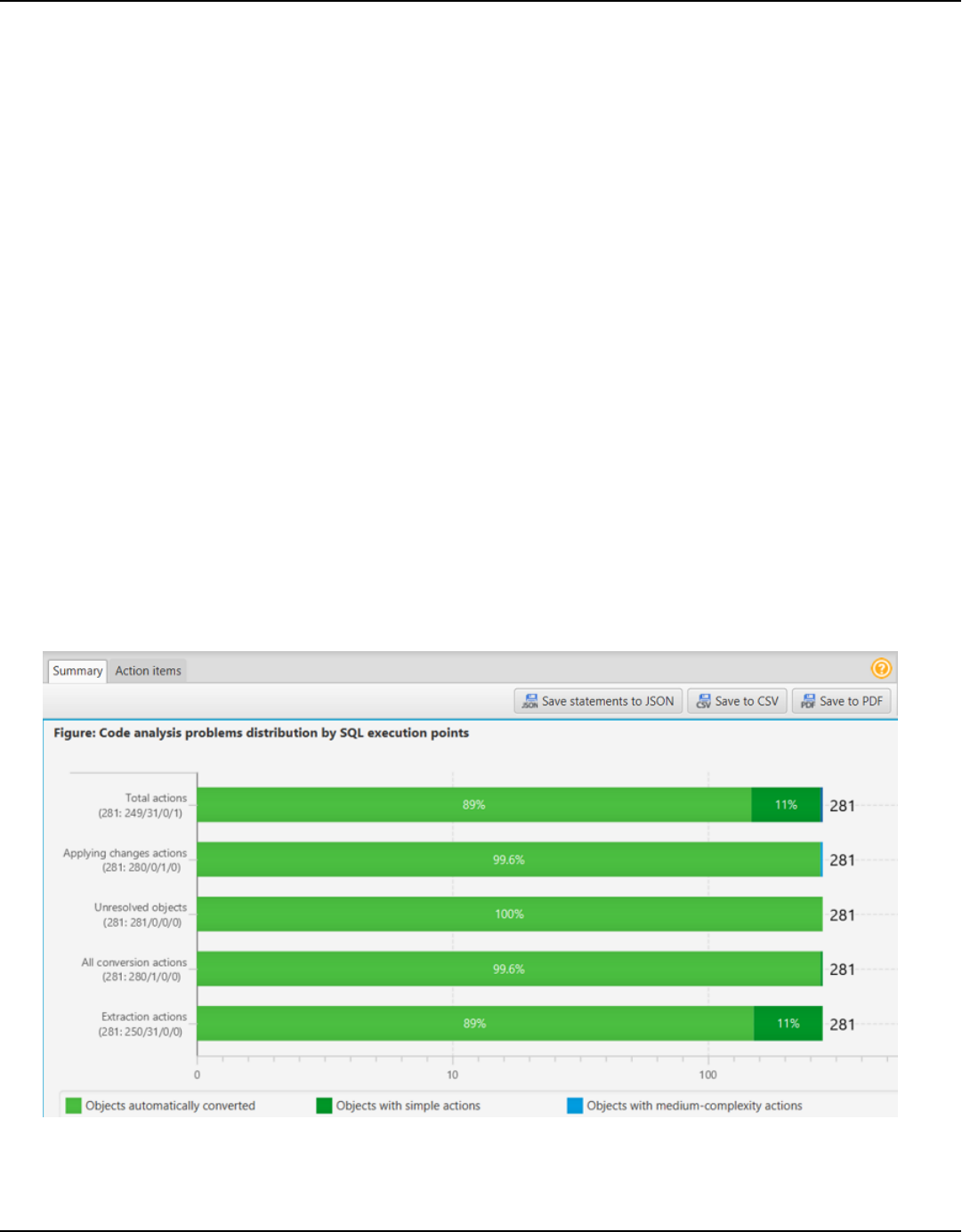

Creating and using the assessment report ................................................................................... 448

Editing and saving your converted SQL code ............................................................................... 449

SQL code in C# applications ................................................................................................................. 449

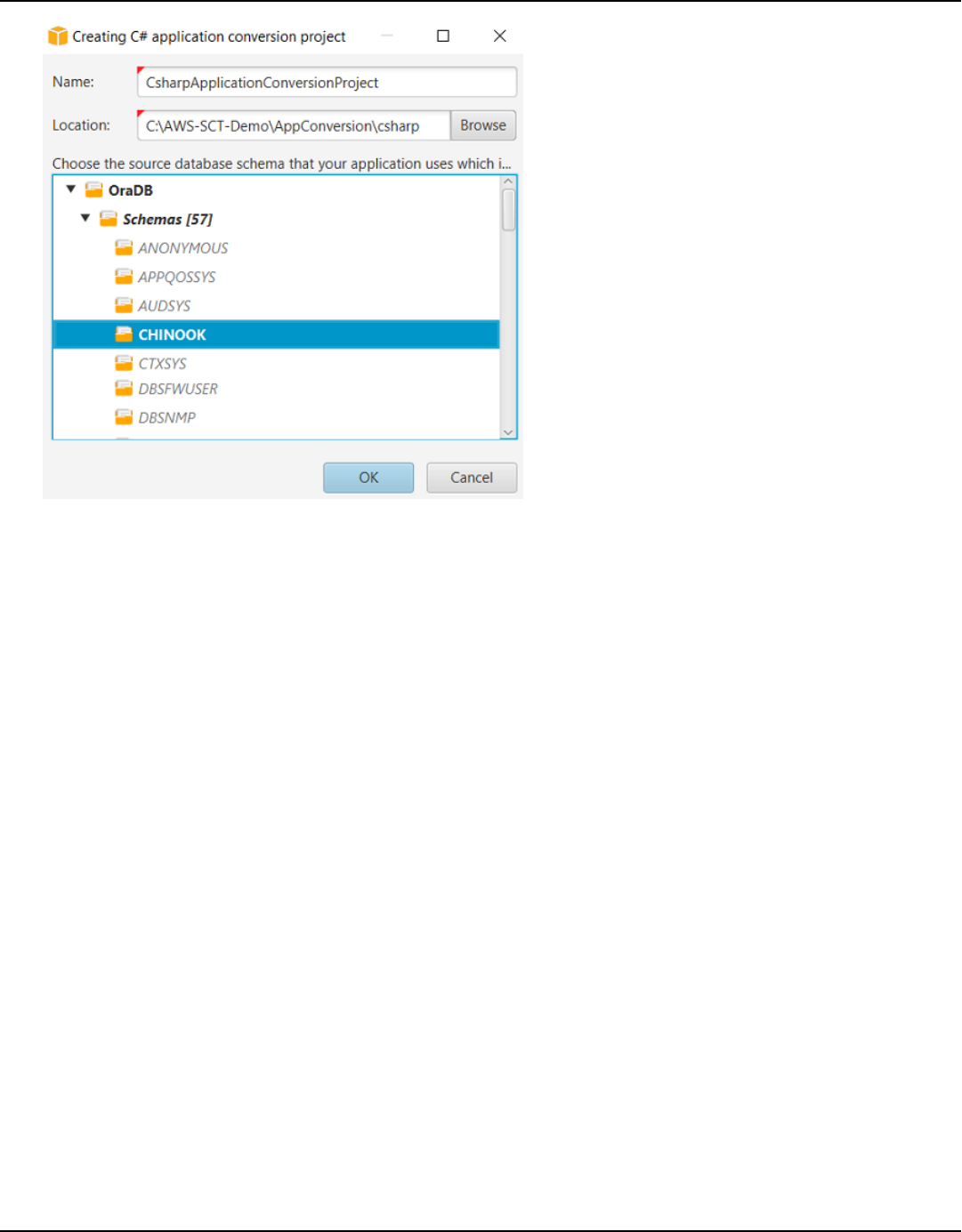

Creating C# application conversion projects ................................................................................ 450

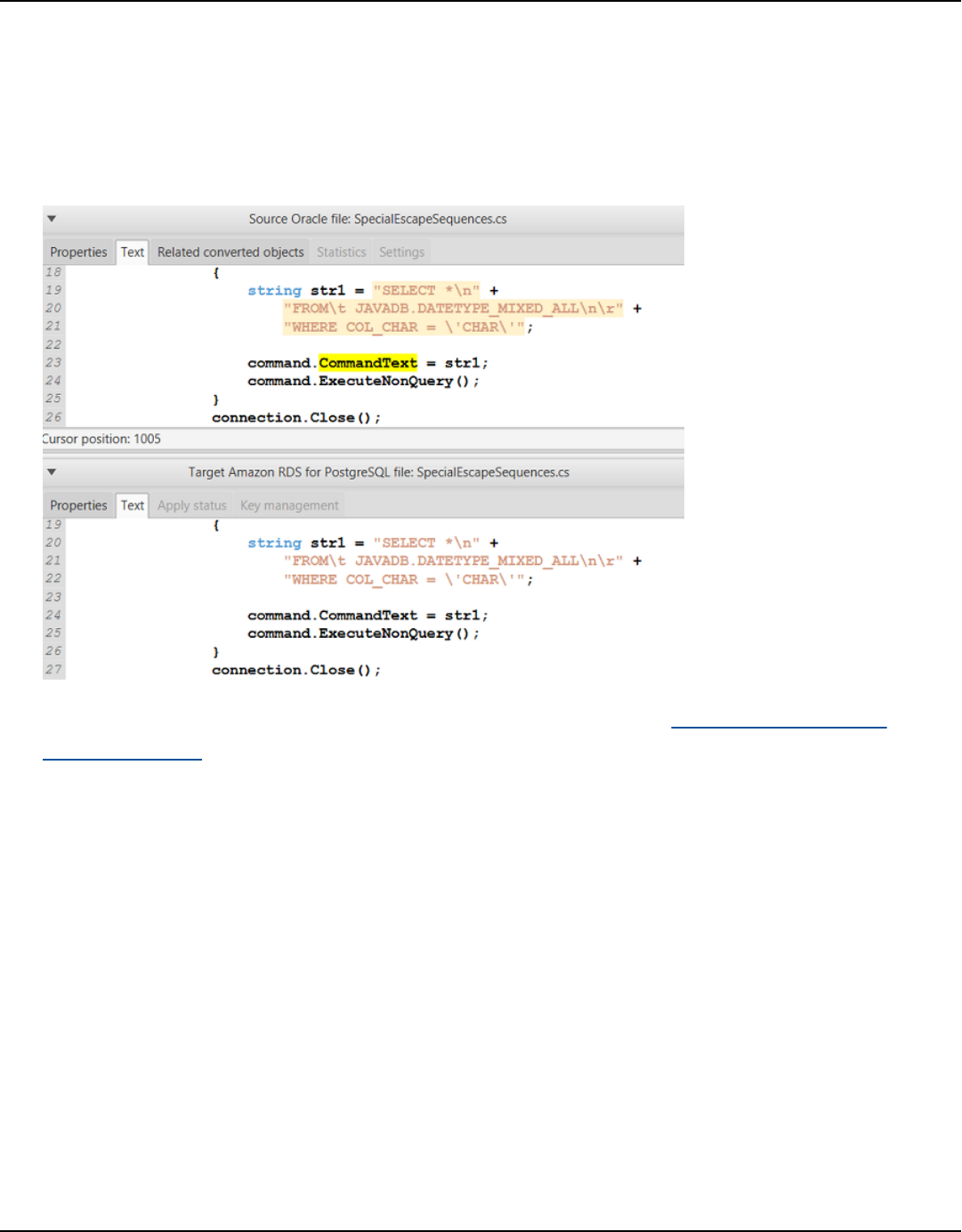

Converting your C# application SQL code .................................................................................... 451

Saving your converted application code ....................................................................................... 453

Version 1.0.672 x

AWS Schema Conversion Tool User Guide

Managing C# application conversion projects .............................................................................. 453

Creating a C# application conversion assessment report .......................................................... 454

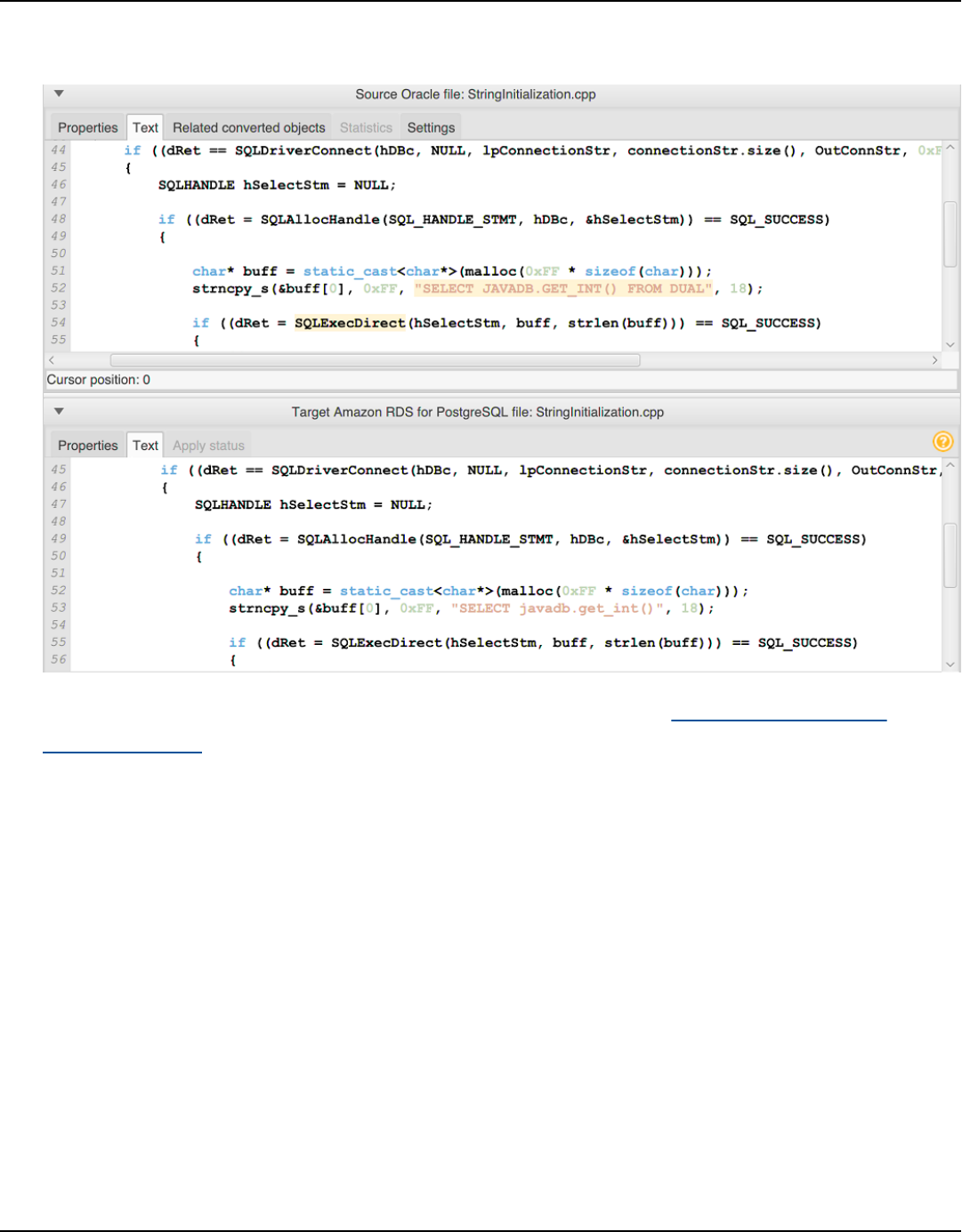

SQL code in C++ ...................................................................................................................................... 455

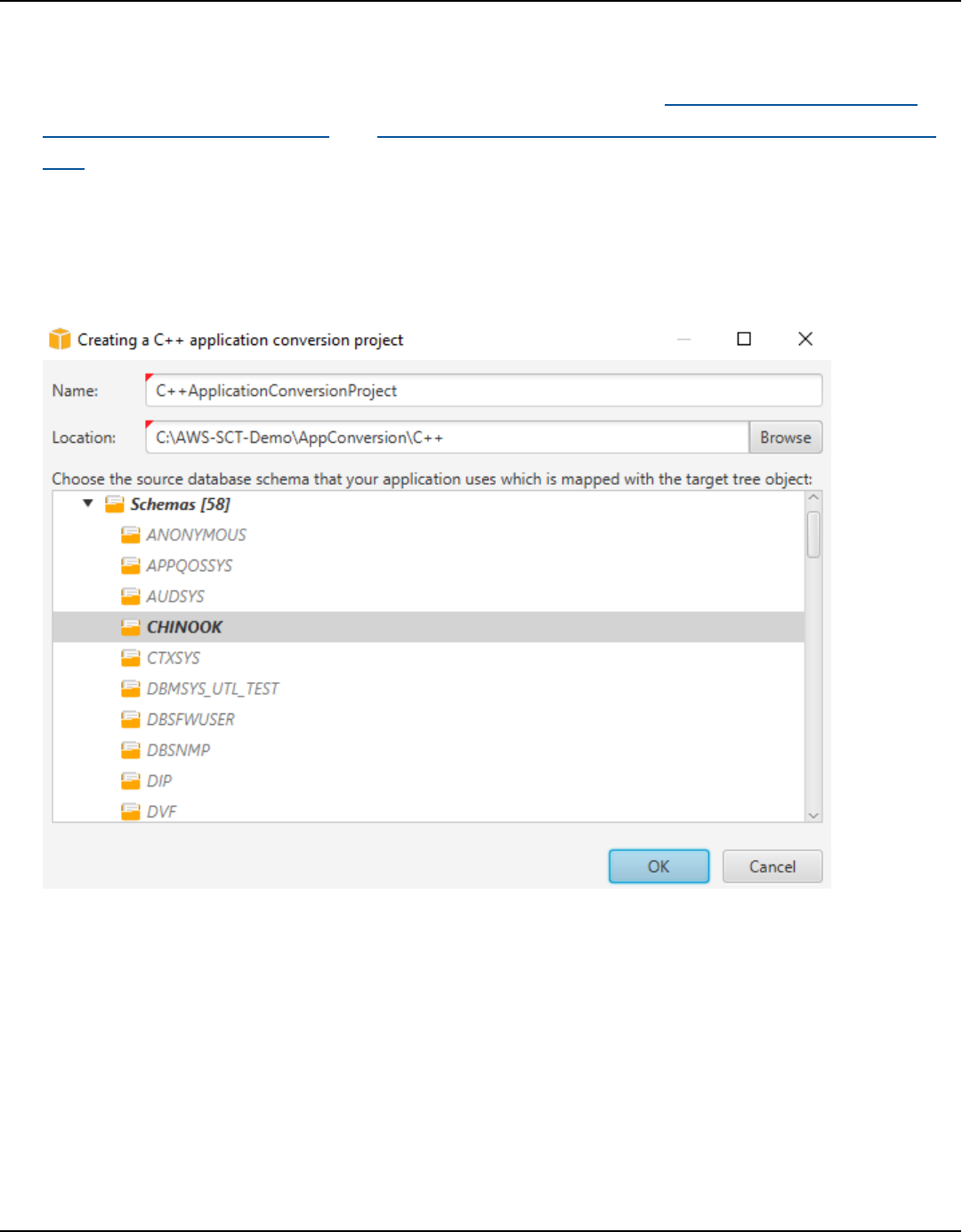

Creating C++ application conversion projects .............................................................................. 455

Converting your C++ application SQL code .................................................................................. 457

Saving your converted application code ....................................................................................... 458

Managing C++ application conversion projects ............................................................................ 459

Creating a C++ application conversion assessment report ........................................................ 460

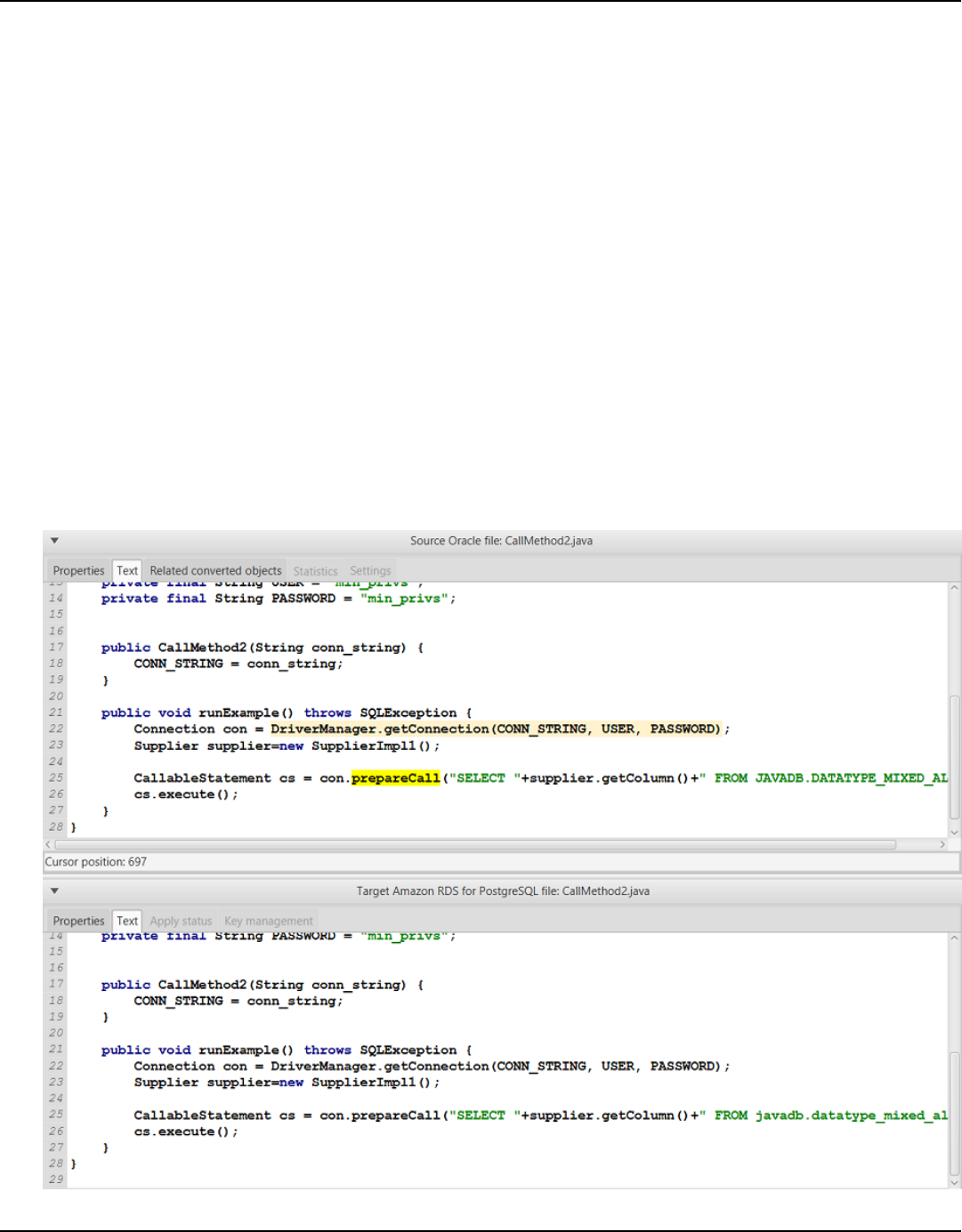

SQL code in Java ..................................................................................................................................... 461

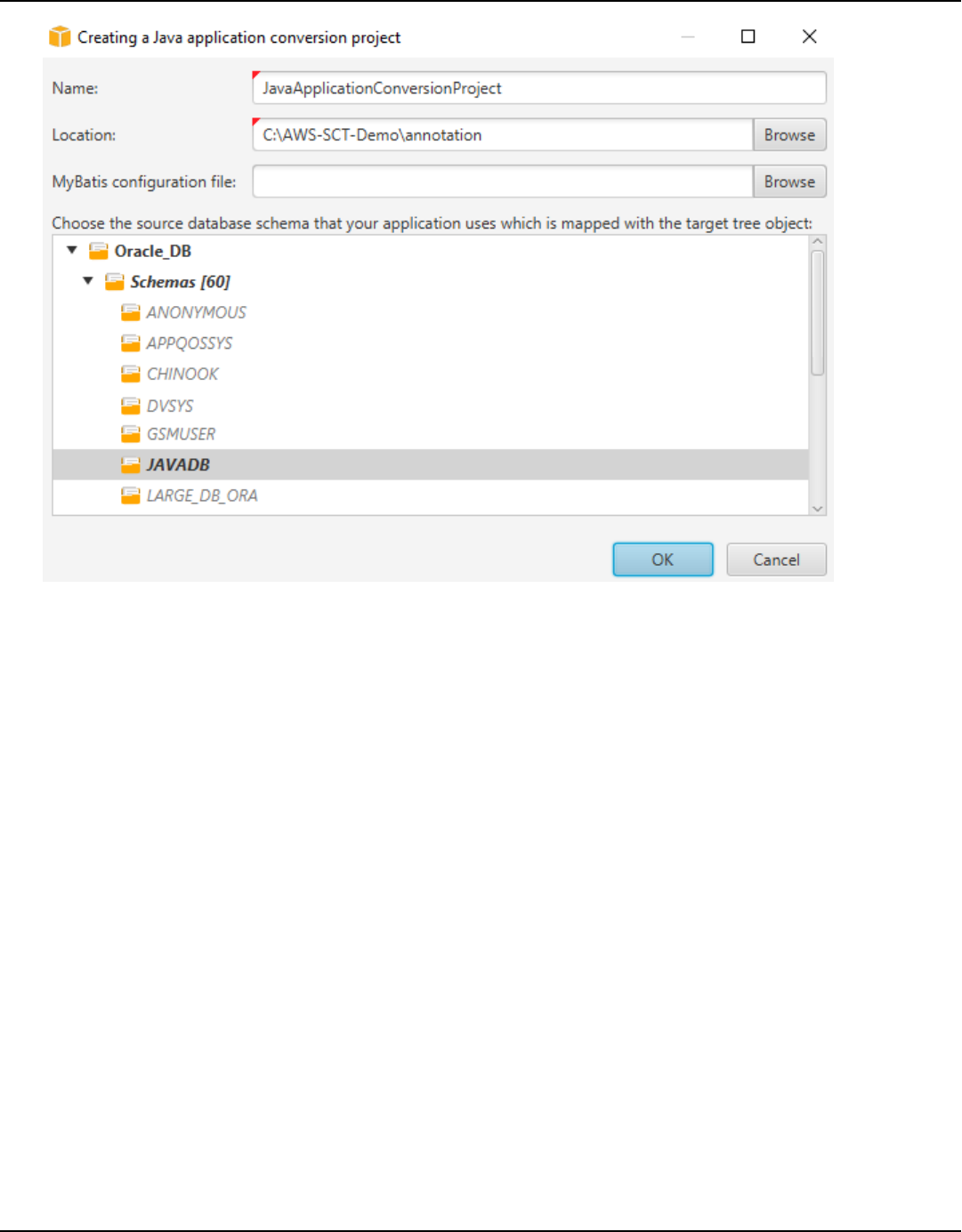

Creating Java application conversion projects ............................................................................. 462

Converting your Java application SQL code ................................................................................. 464

Saving your converted application code ....................................................................................... 465

Managing Java application conversion projects ........................................................................... 465

Creating a Java application conversion assessment report ....................................................... 467

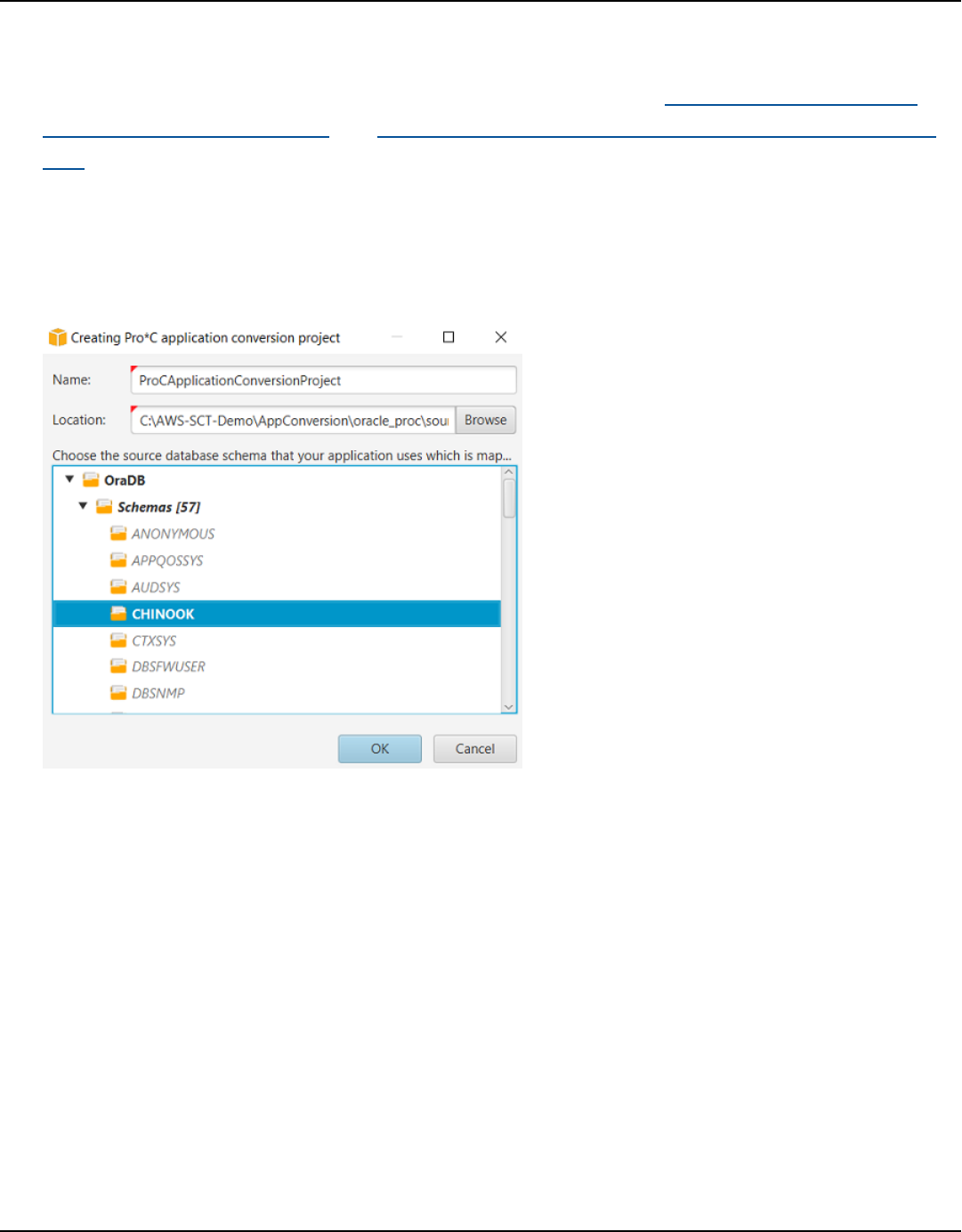

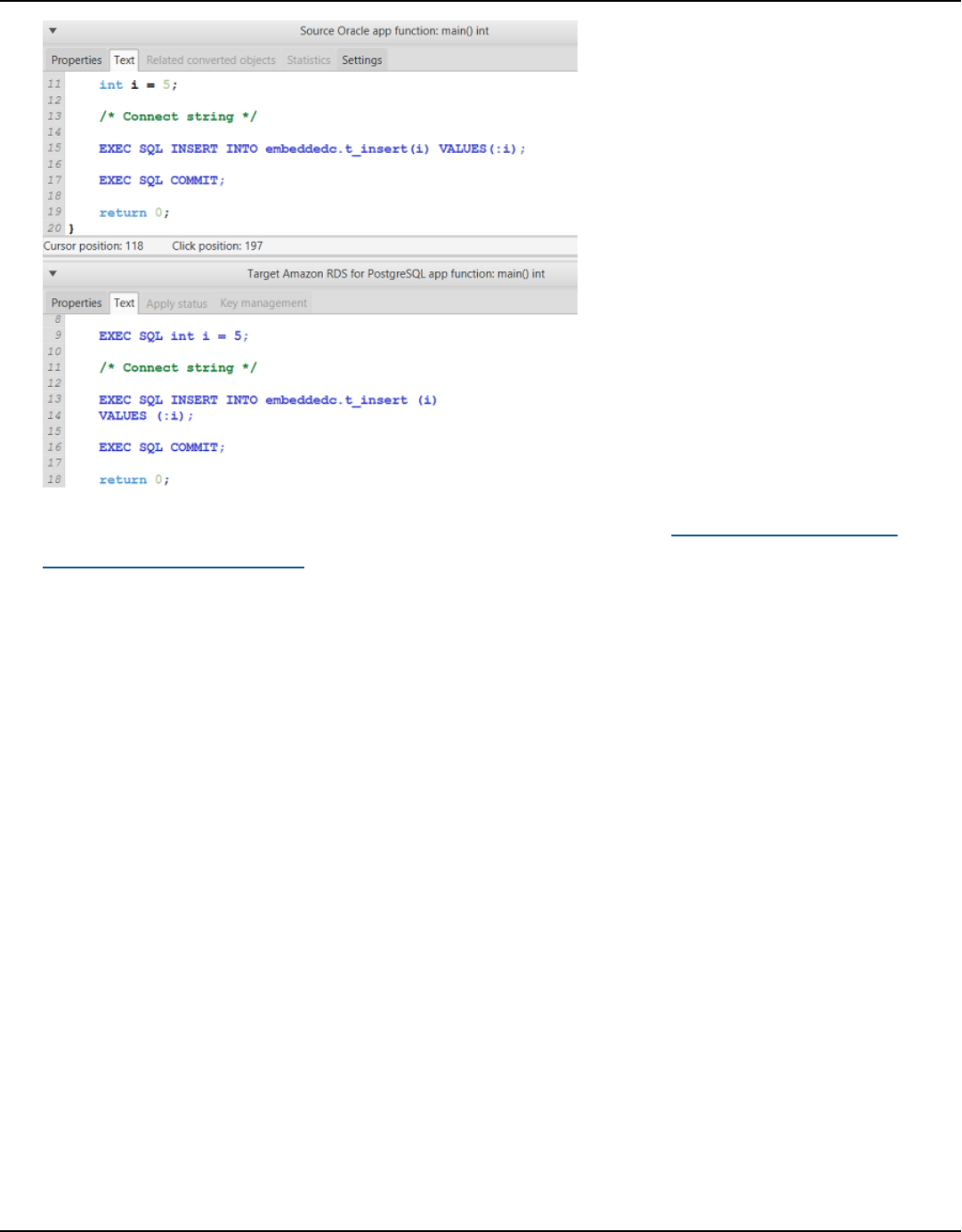

SQL code in Pro*C ................................................................................................................................... 468

Creating Pro*C application conversion projects ........................................................................... 468

Converting your Pro*C application SQL code ............................................................................... 470

Editing and saving your converted application code .................................................................. 471

Managing Pro*C application conversion projects ......................................................................... 472

Creating a Pro*C application conversion assessment report ..................................................... 473

Extension packs ........................................................................................................................... 475

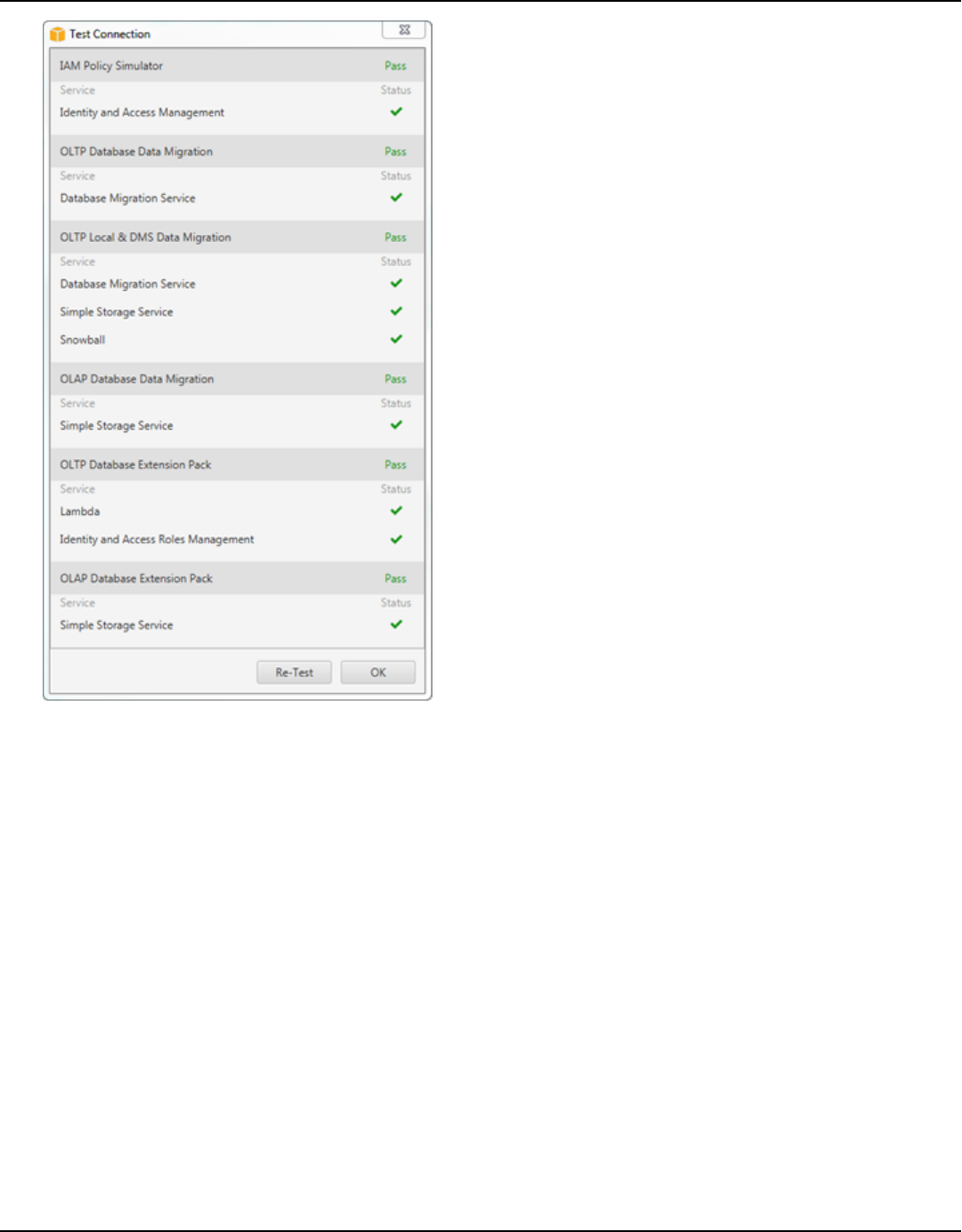

Permissions for using the extension pack .......................................................................................... 476

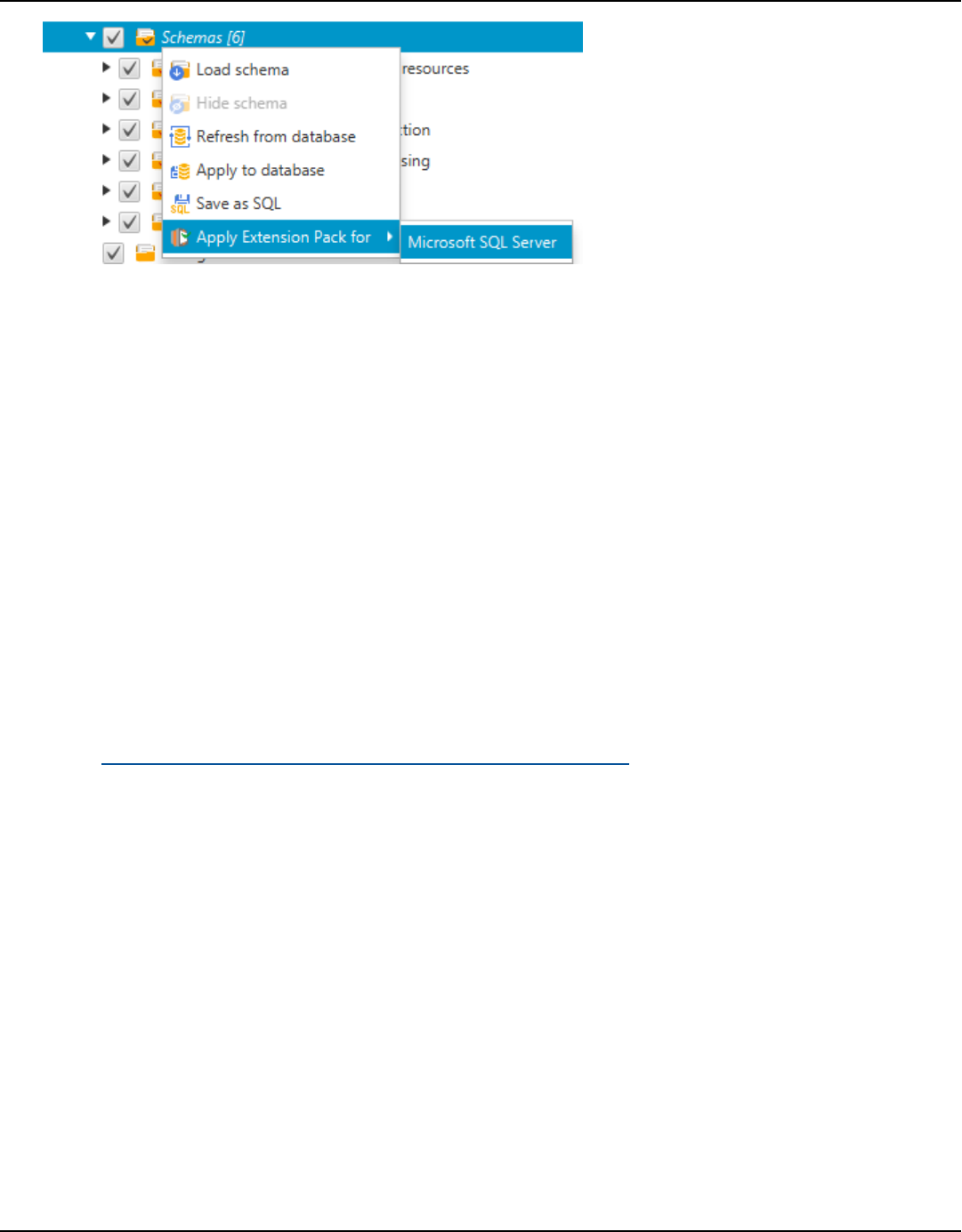

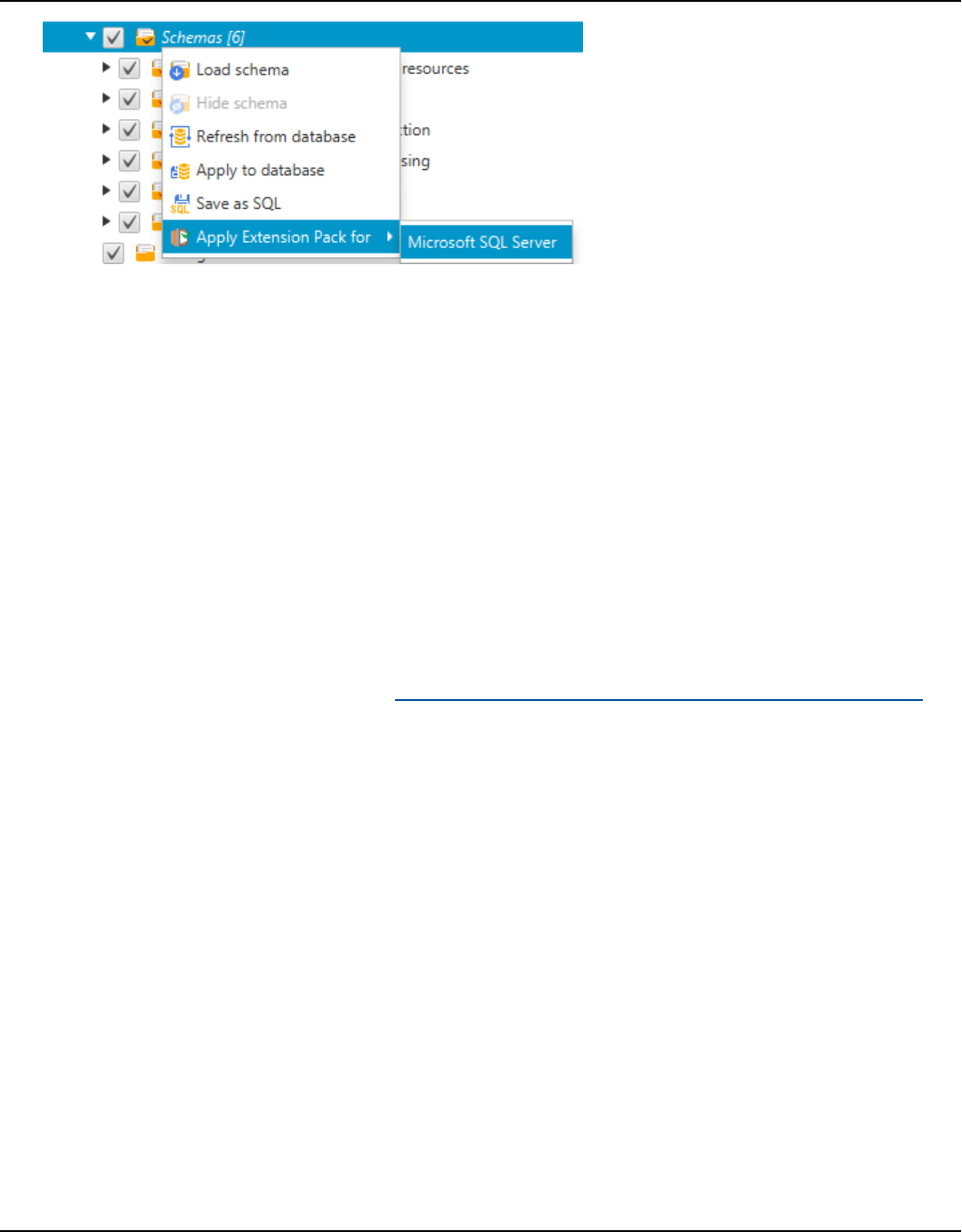

Using the extension pack schema ........................................................................................................ 477

Custom libraries for extension packs .................................................................................................. 478

Applying the extension pack ............................................................................................................ 478

Using the Lambda functions from the AWS SCT extension pack .................................................. 481

Using AWS Lambda functions to emulate database functionality ........................................... 481

Applying the extension pack to support Lambda functions ...................................................... 481

Configuring extension pack functions ................................................................................................. 483

Best practices ............................................................................................................................... 485

Configuring additional memory ........................................................................................................... 485

Default project folder ............................................................................................................................. 485

Increasing the data migration speed ................................................................................................... 486

Increasing logging information ............................................................................................................. 486

Troubleshooting ........................................................................................................................... 489

Cannot load objects from an Oracle source database ..................................................................... 489

Version 1.0.672 xi

AWS Schema Conversion Tool User Guide

Warning message ..................................................................................................................................... 489

CLI Reference ............................................................................................................................... 491

Prerequisites .............................................................................................................................................. 491

Interactive mode ...................................................................................................................................... 491

Examples ............................................................................................................................................... 493

Getting CLI scenarios .............................................................................................................................. 493

Examples ............................................................................................................................................... 497

Editing CLI scenarios ............................................................................................................................... 497

Script mode ............................................................................................................................................... 498

Examples ............................................................................................................................................... 499

Reference material ................................................................................................................................... 499

Release notes ............................................................................................................................... 500

Release notes – 676 ................................................................................................................................ 500

Release notes – 675 ................................................................................................................................ 505

Release notes – 674 ................................................................................................................................ 507

Release notes – 673 ................................................................................................................................ 514

Release notes – 672 ................................................................................................................................ 519

Release notes – 671 ................................................................................................................................ 527

Release notes – 670 ................................................................................................................................ 536

Release notes – 669 ................................................................................................................................ 541

Release notes – 668 ................................................................................................................................ 545

Release notes – 667 ................................................................................................................................ 552

Release notes – 666 ................................................................................................................................ 556

Release notes – 665 ................................................................................................................................ 561

Release notes – 664 ................................................................................................................................ 564

Release notes – 663 ................................................................................................................................ 568

Release notes – 662 ................................................................................................................................ 571

Release notes – 661 ................................................................................................................................ 576

Release notes – 660 ................................................................................................................................ 580

Release notes – 659 ................................................................................................................................ 583

Release notes – 658 ................................................................................................................................ 588

Release notes – 657 ................................................................................................................................ 593

Release notes – 656 ................................................................................................................................ 597

Release notes – 655 ................................................................................................................................ 600

Release notes – 654 ................................................................................................................................ 603

Release notes – 653 ................................................................................................................................ 606

Version 1.0.672 xii

AWS Schema Conversion Tool User Guide

Release notes – 652 ................................................................................................................................ 609

Release notes – 651 ................................................................................................................................ 611

Release notes – 650 ................................................................................................................................ 613

Release notes – 649 ................................................................................................................................ 615

Release notes – 648 ................................................................................................................................ 617

Release notes – 647 ................................................................................................................................ 619

Release notes – 646 ................................................................................................................................ 621

Release notes – 645 ................................................................................................................................ 622

Release notes – 644 ................................................................................................................................ 624

Release notes – 642 ................................................................................................................................ 626

Release notes – 641 ................................................................................................................................ 627

Release notes – 640 ................................................................................................................................ 628

Release 1.0.640 Oracle changes ...................................................................................................... 628

Release 1.0.640 Microsoft SQL Server changes ........................................................................... 634

Release 1.0.640 MySQL Changes .................................................................................................... 638

Release 1.0.640 PostgreSQL changes ............................................................................................ 639

Release 1.0.640 Db2 LUW changes ................................................................................................ 642

Release 1.0.640 Teradata changes .................................................................................................. 643

Release 1.0.640 changes for other engines .................................................................................. 644

Document history ........................................................................................................................ 647

Earlier updates .......................................................................................................................................... 661

Version 1.0.672 xiii

AWS Schema Conversion Tool User Guide

What is the AWS Schema Conversion Tool?

You can use the AWS Schema Conversion Tool (AWS SCT) to convert your existing database schema

from one database engine to another. You can convert relational OLTP schema, or data warehouse

schema. Your converted schema is suitable for an Amazon Relational Database Service (Amazon

RDS) MySQL, MariaDB, Oracle, SQL Server, PostgreSQL DB, an Amazon Aurora DB cluster, or an

Amazon Redshift cluster. The converted schema can also be used with a database on an Amazon

EC2 instance or stored as data on an Amazon S3 bucket.

AWS SCT supports several industry standards, including Federal Information Processing Standards

(FIPS), for connections to an Amazon S3 bucket or another AWS resource. AWS SCT is also

compliant with Federal Risk and Authorization Management Program (FedRAMP). For details about

AWS and compliance efforts, see AWS services in scope by compliance program.

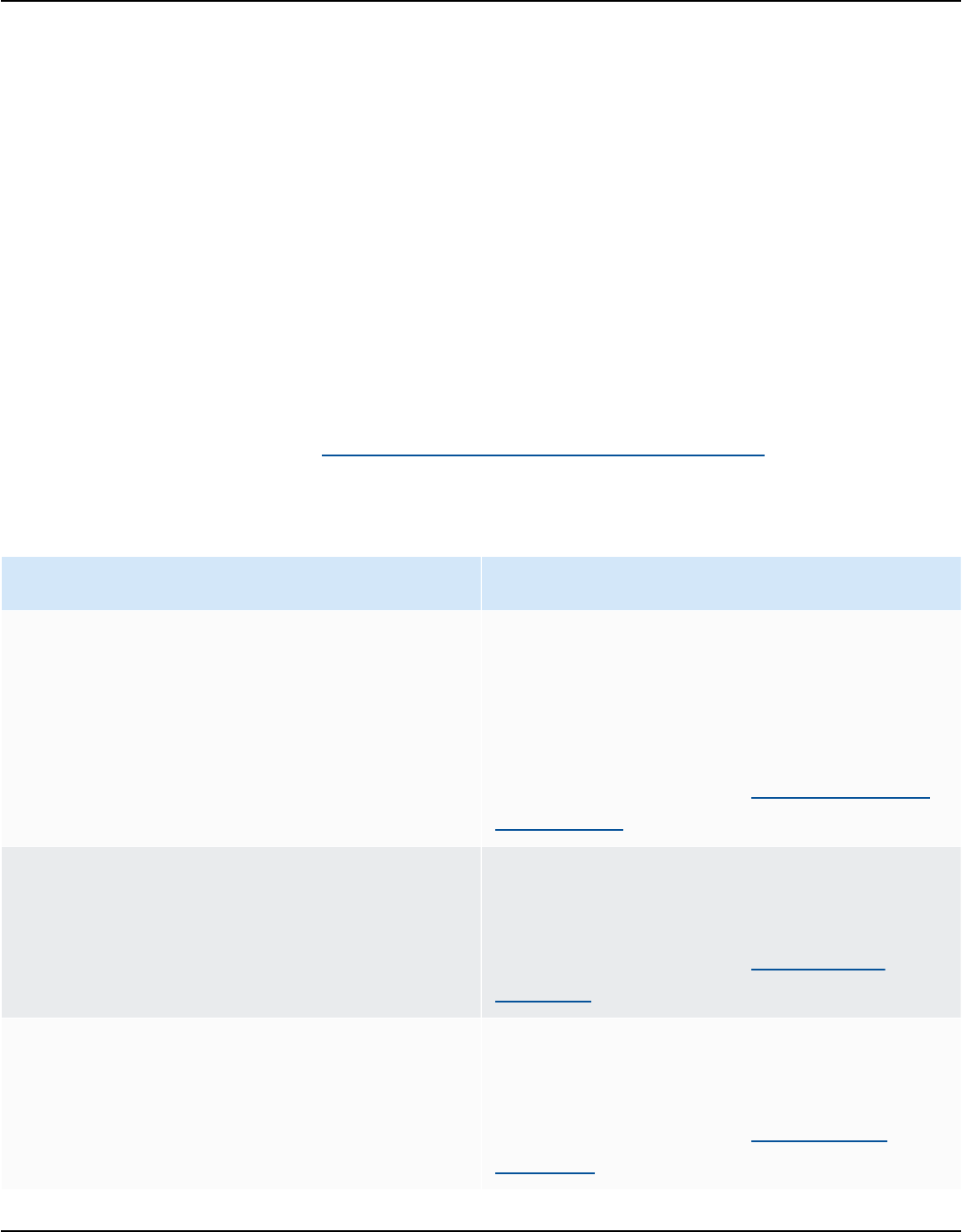

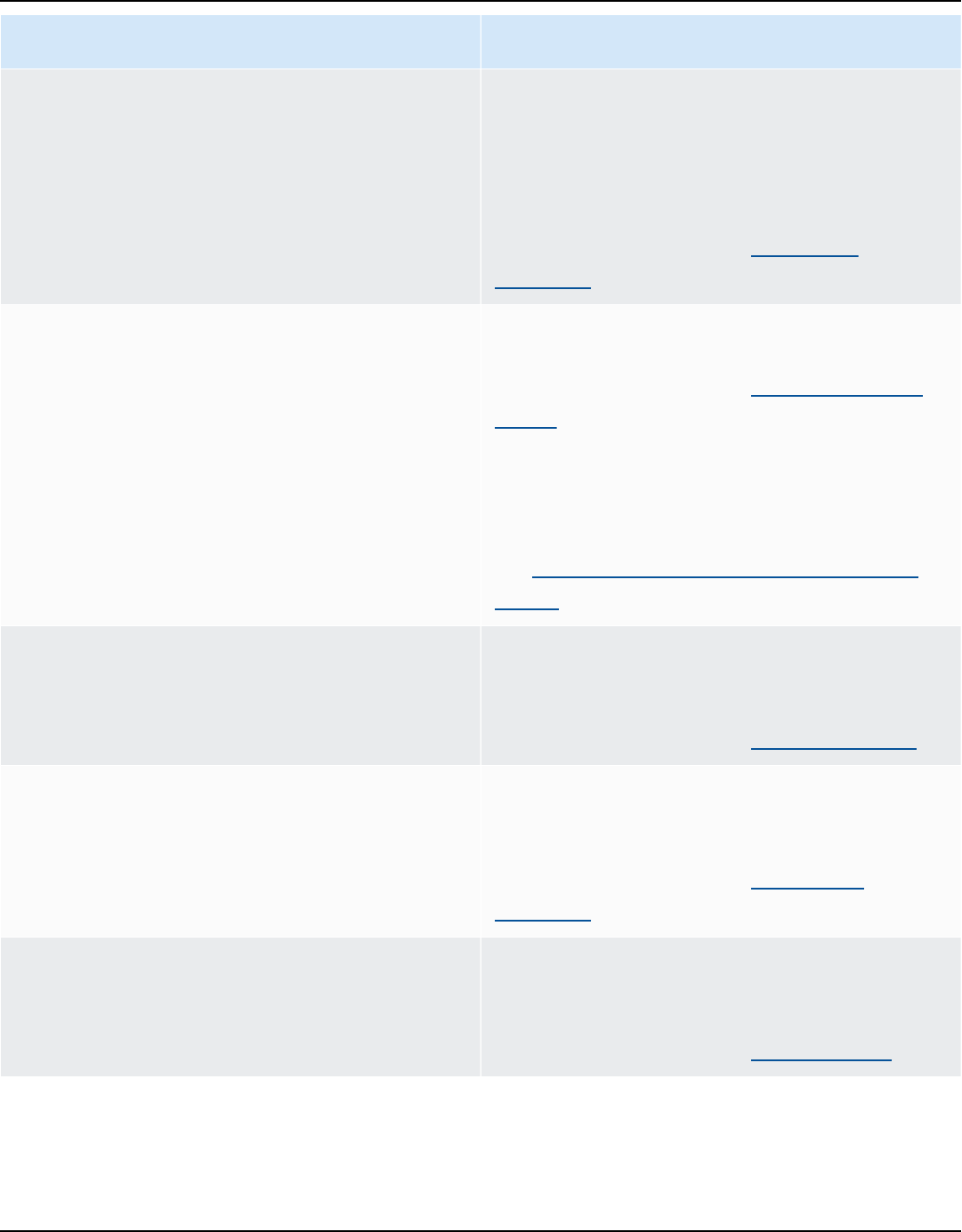

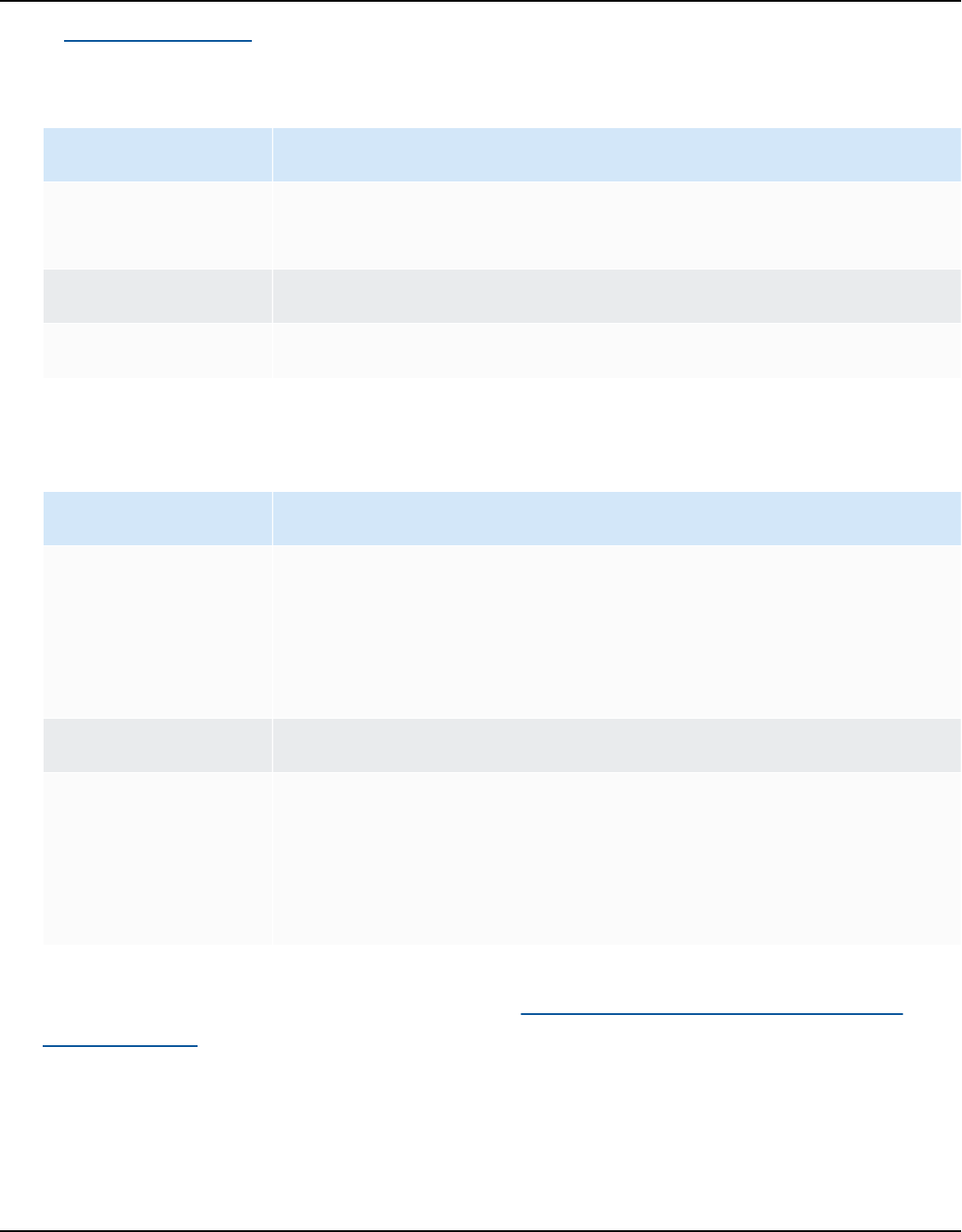

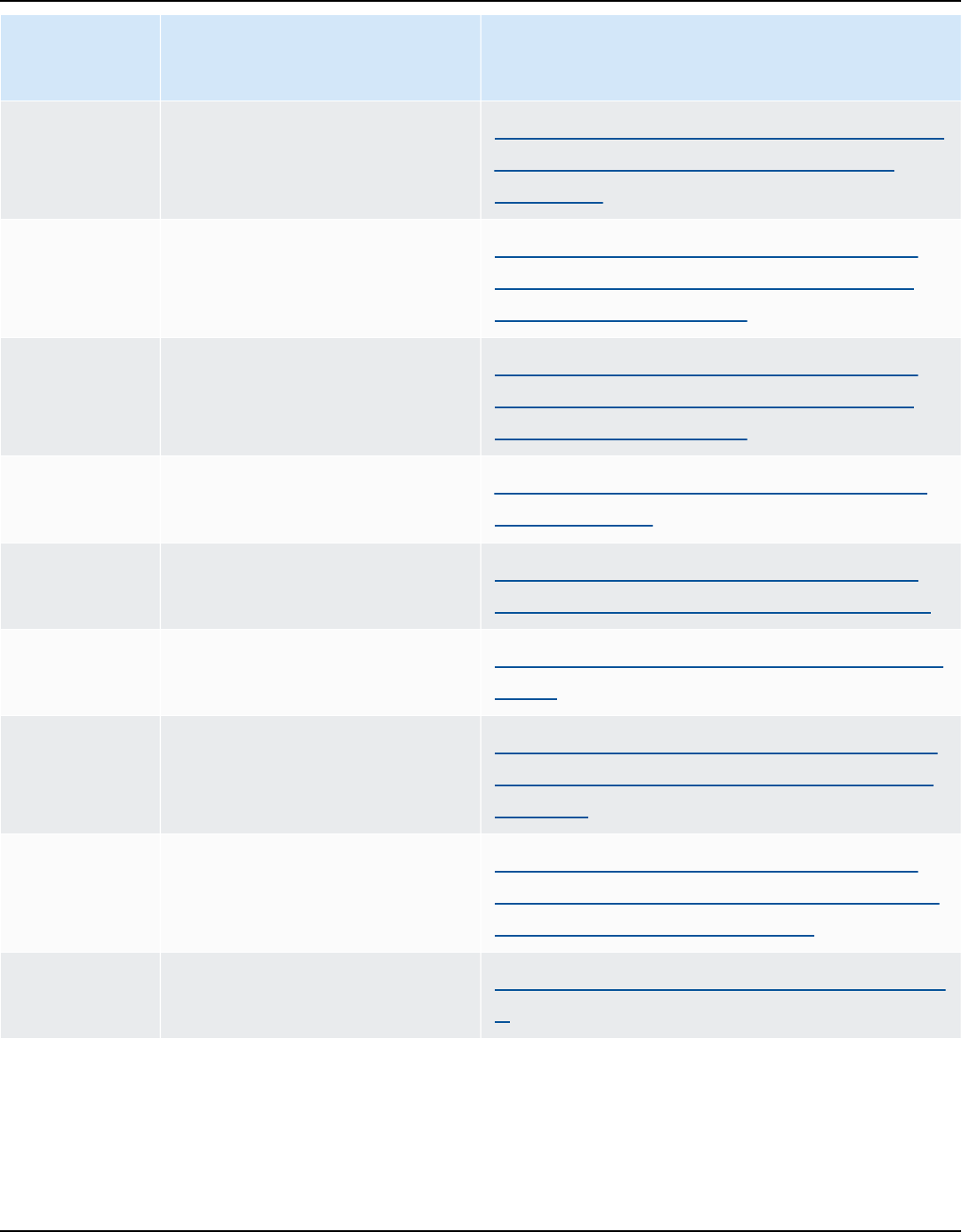

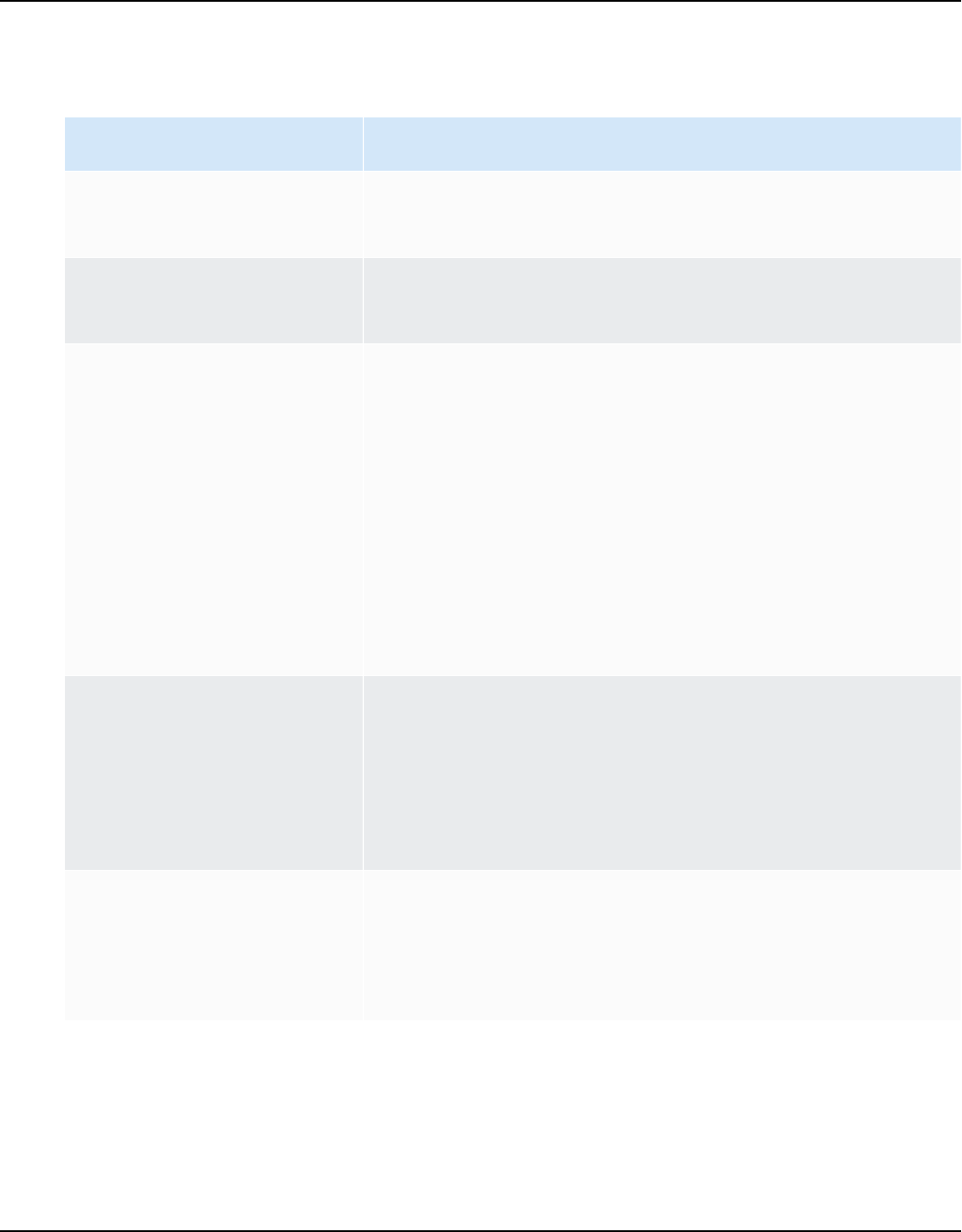

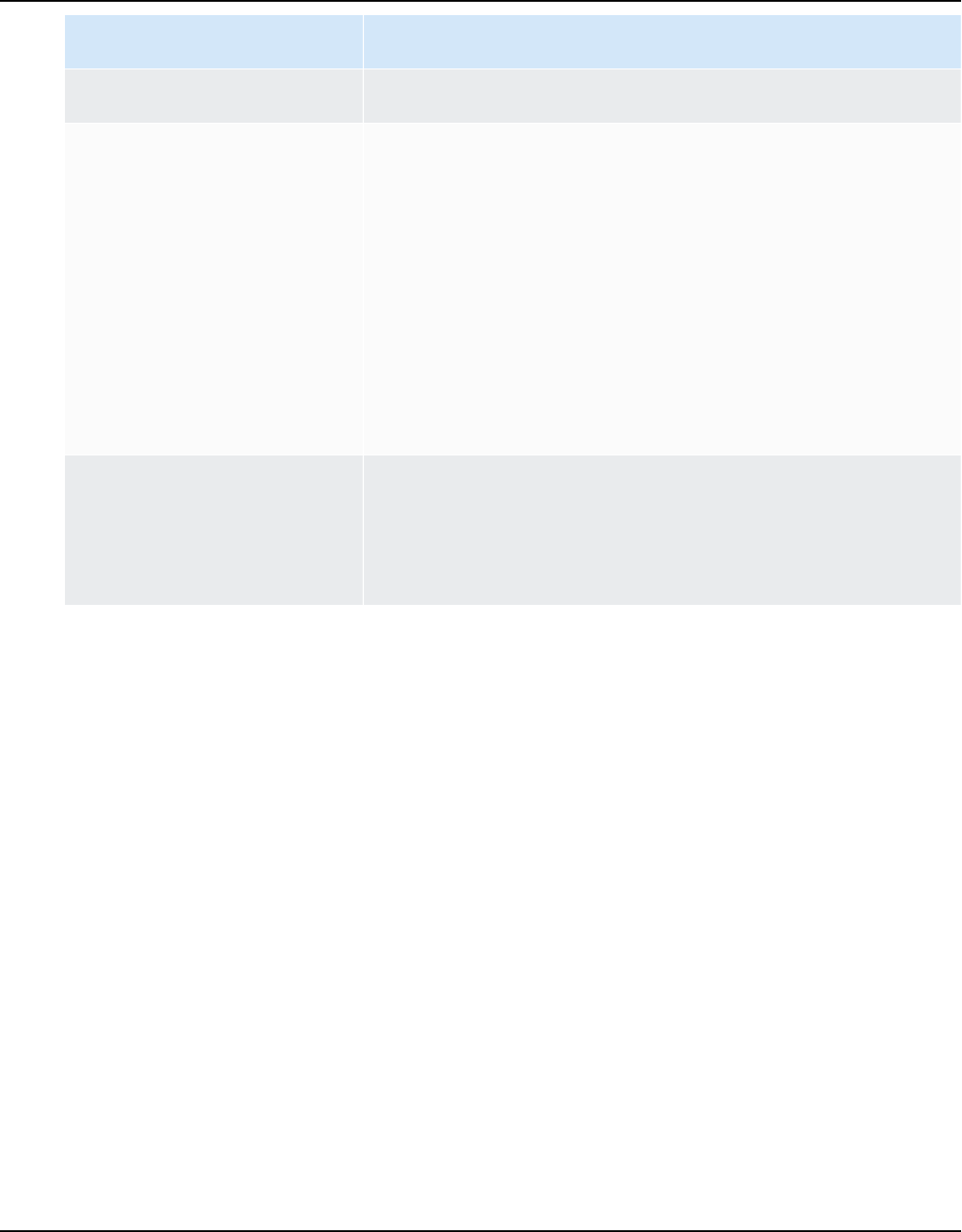

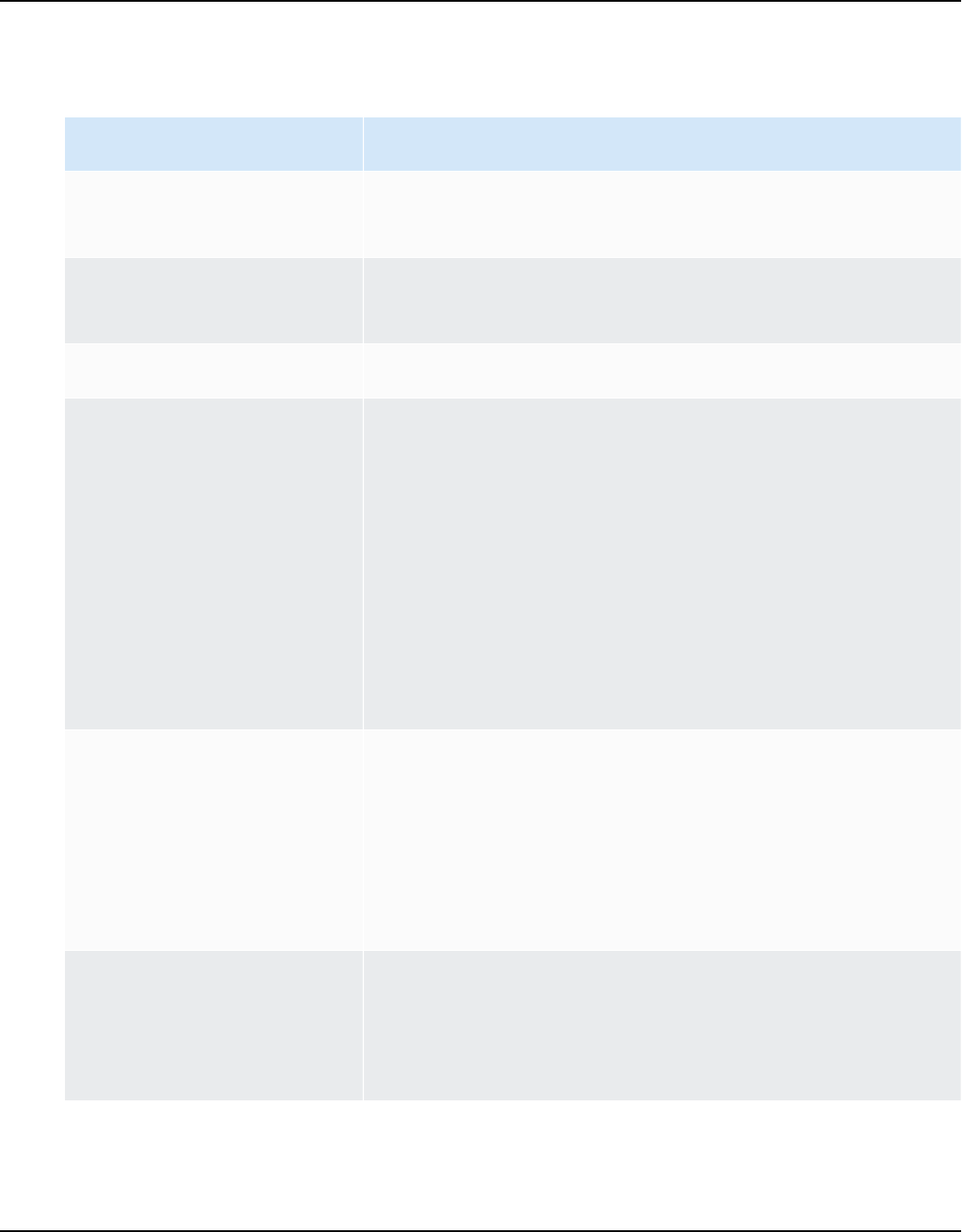

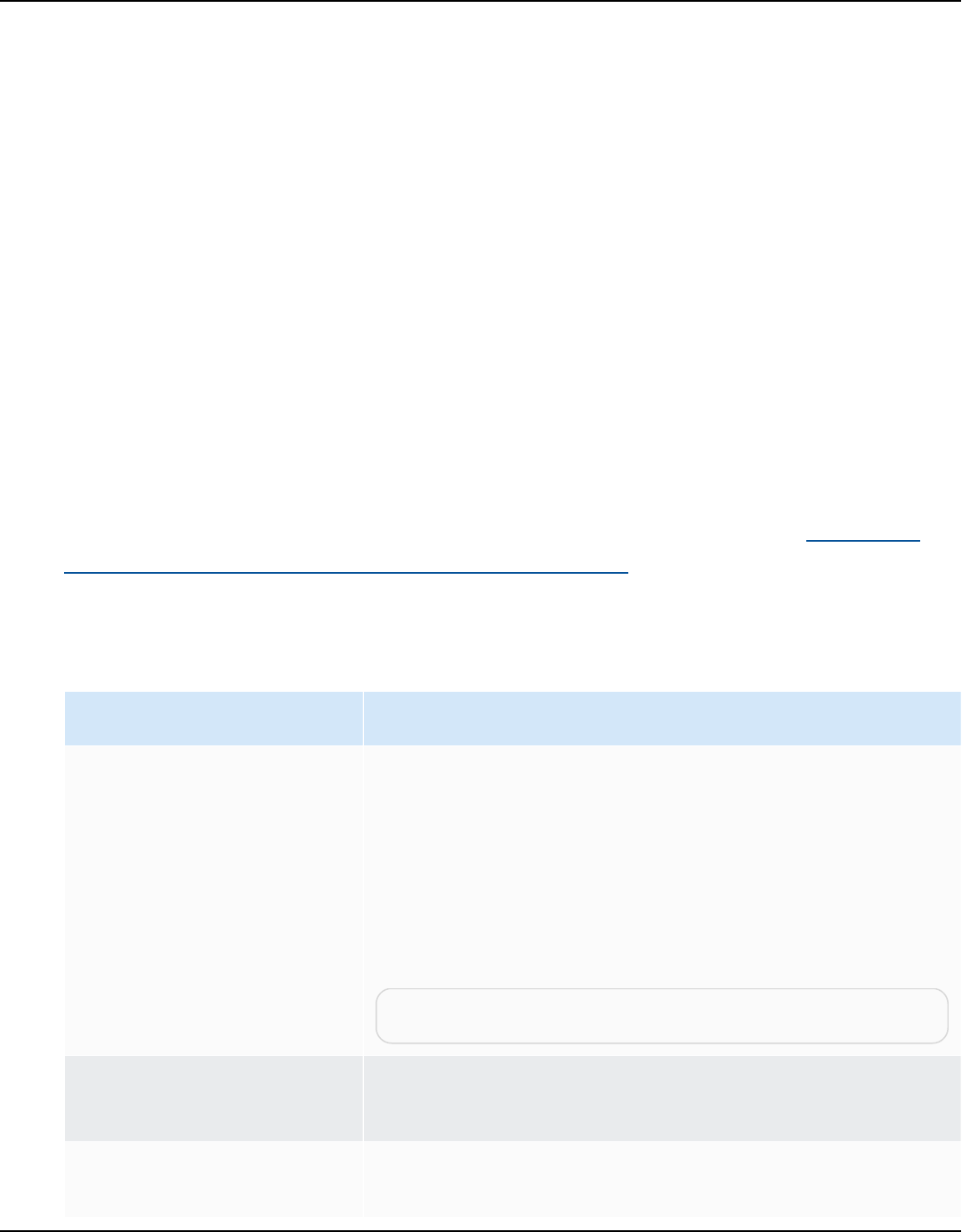

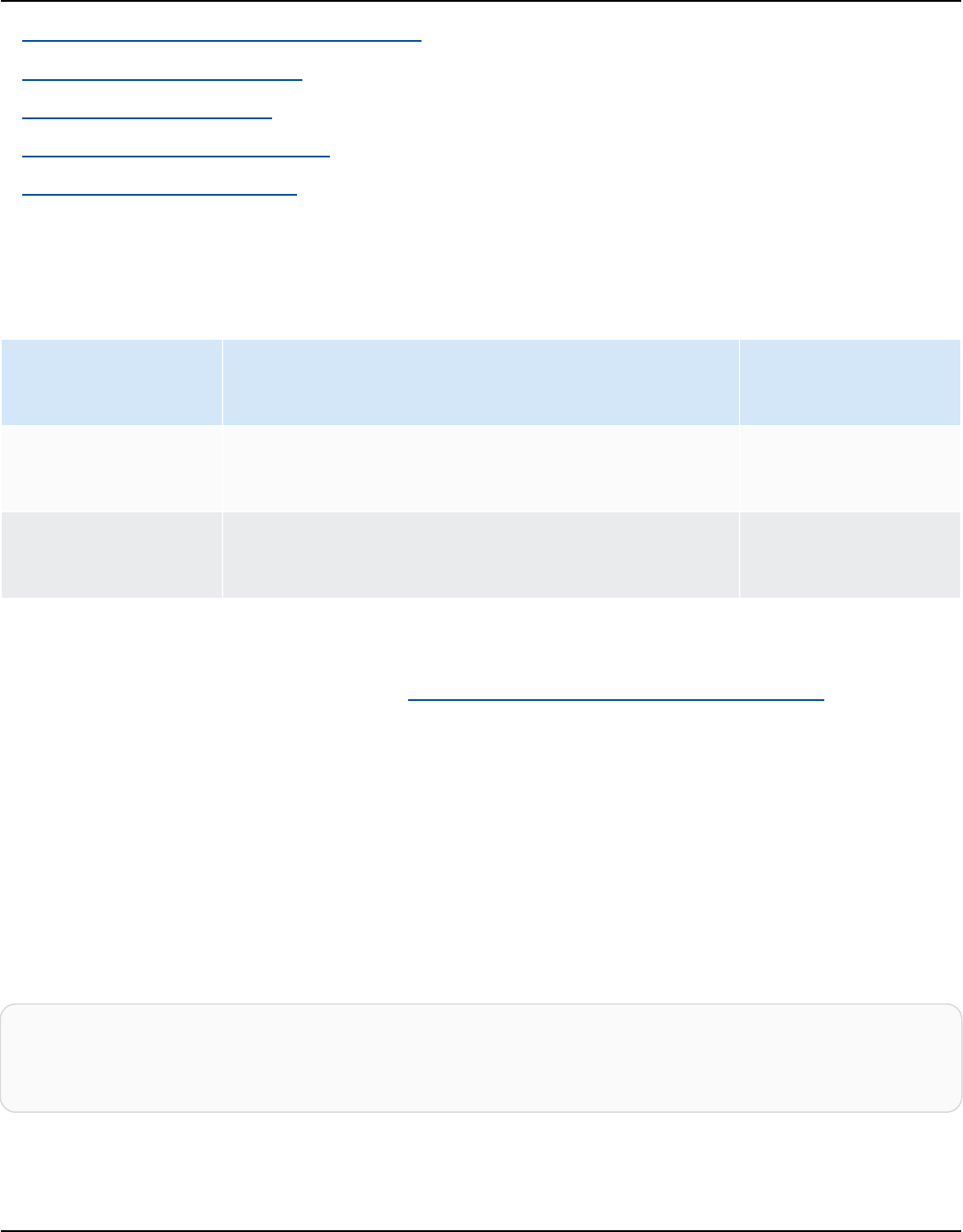

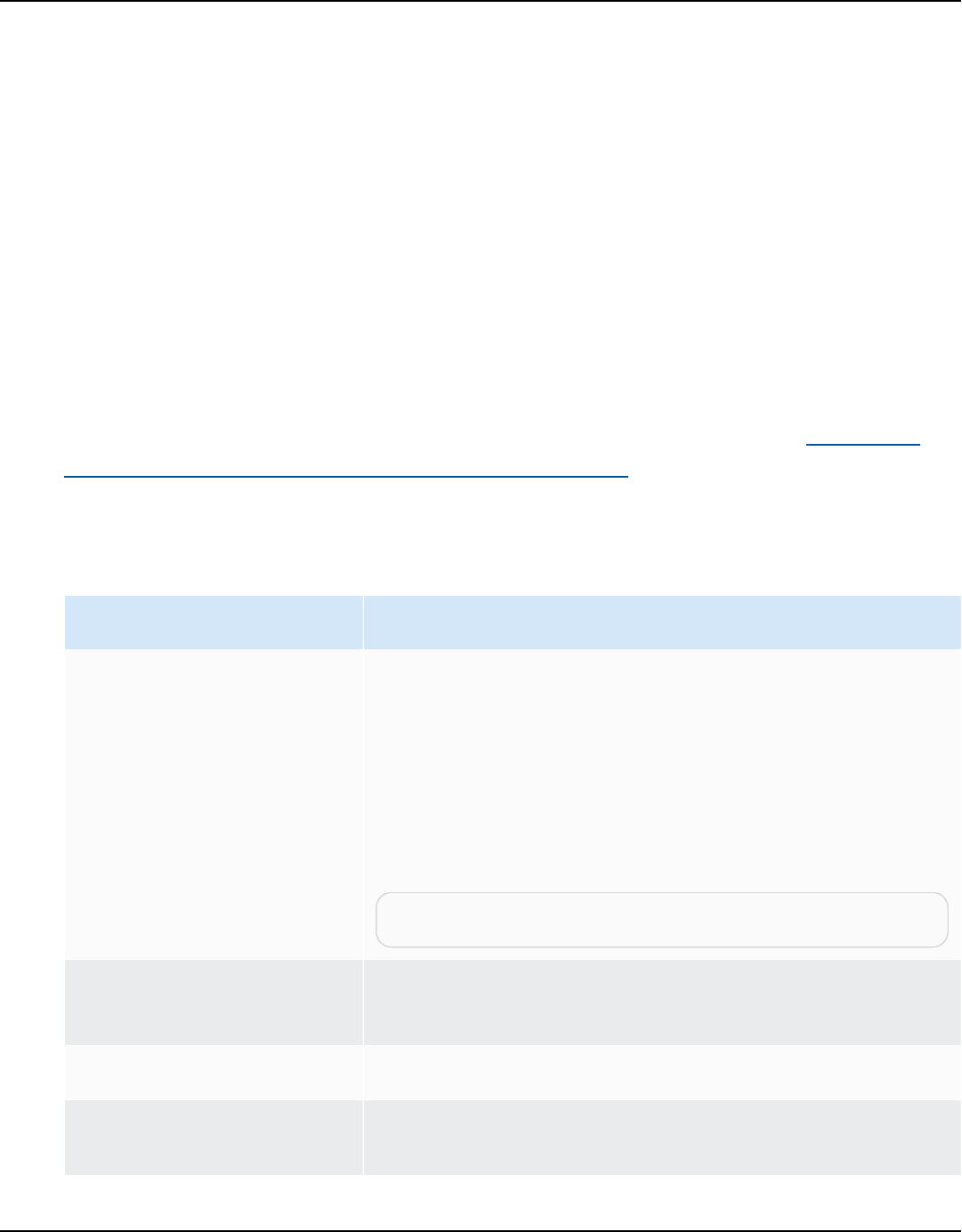

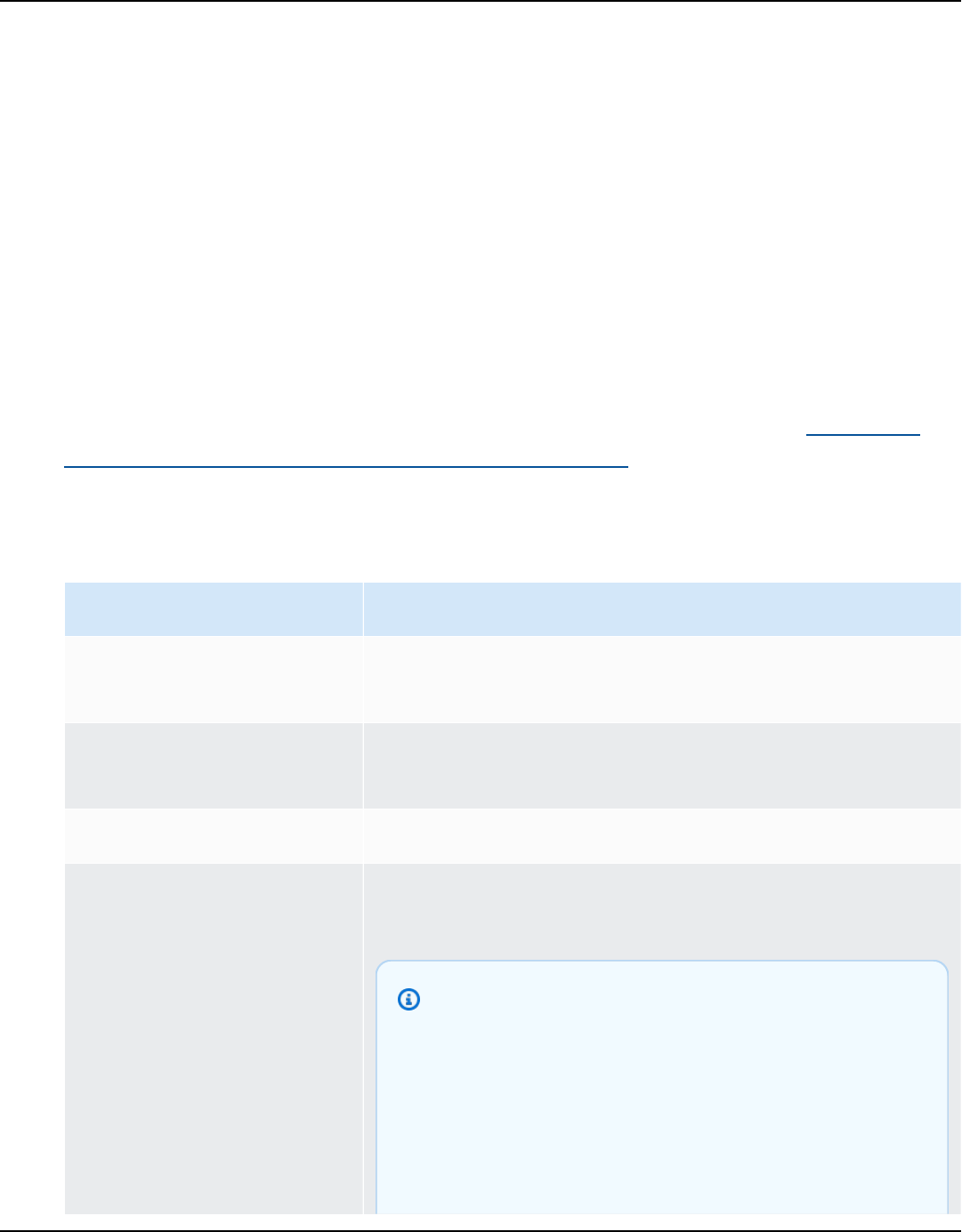

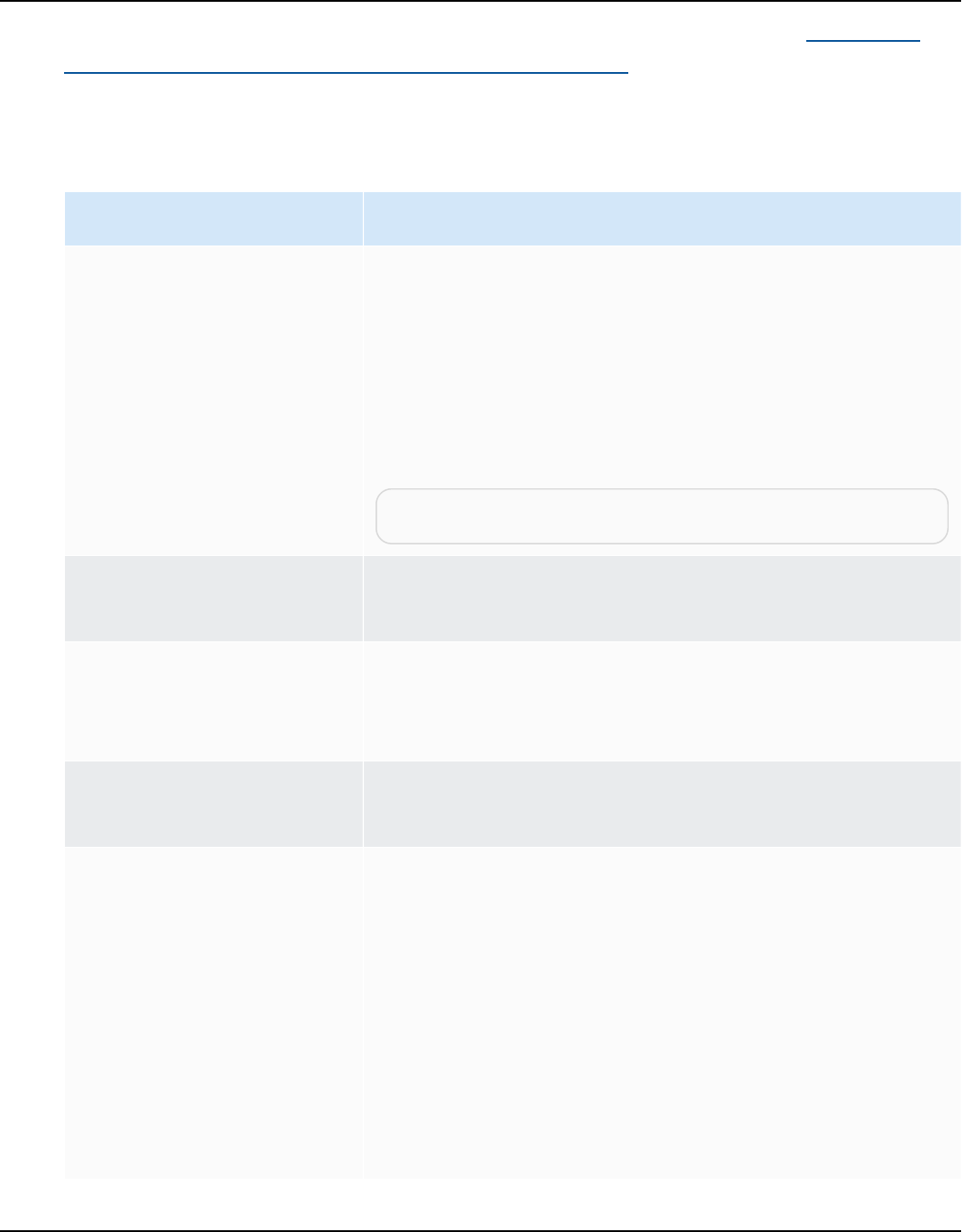

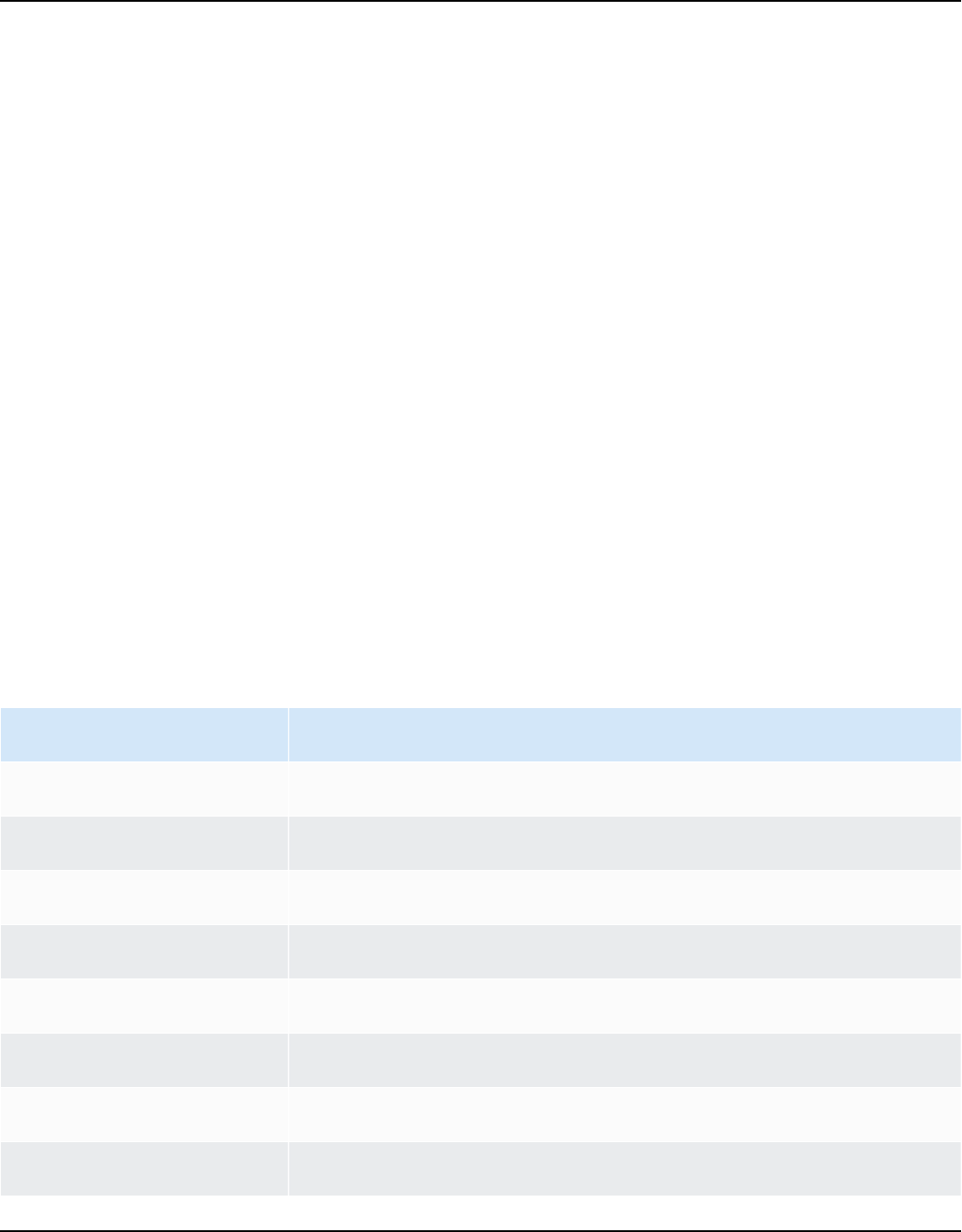

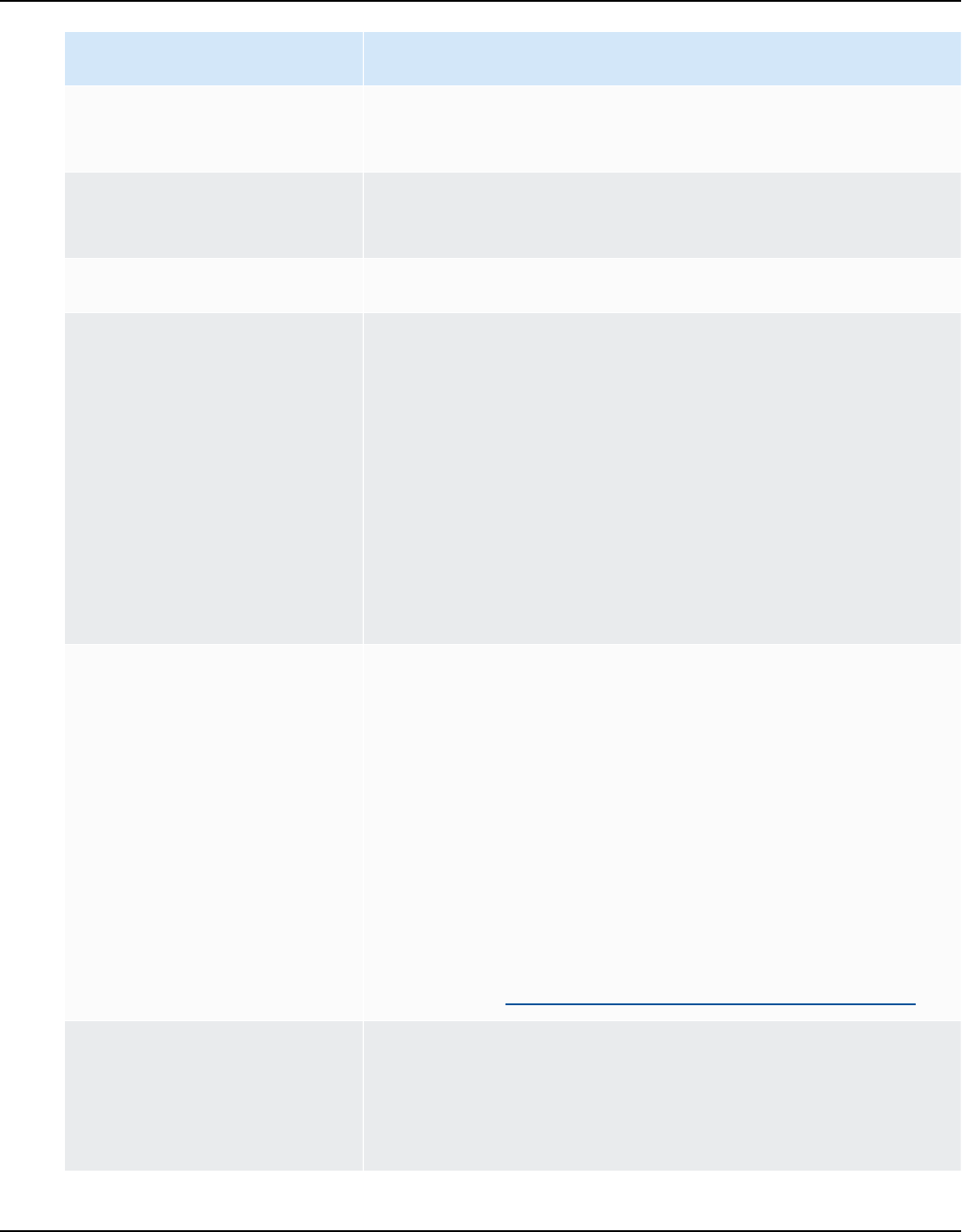

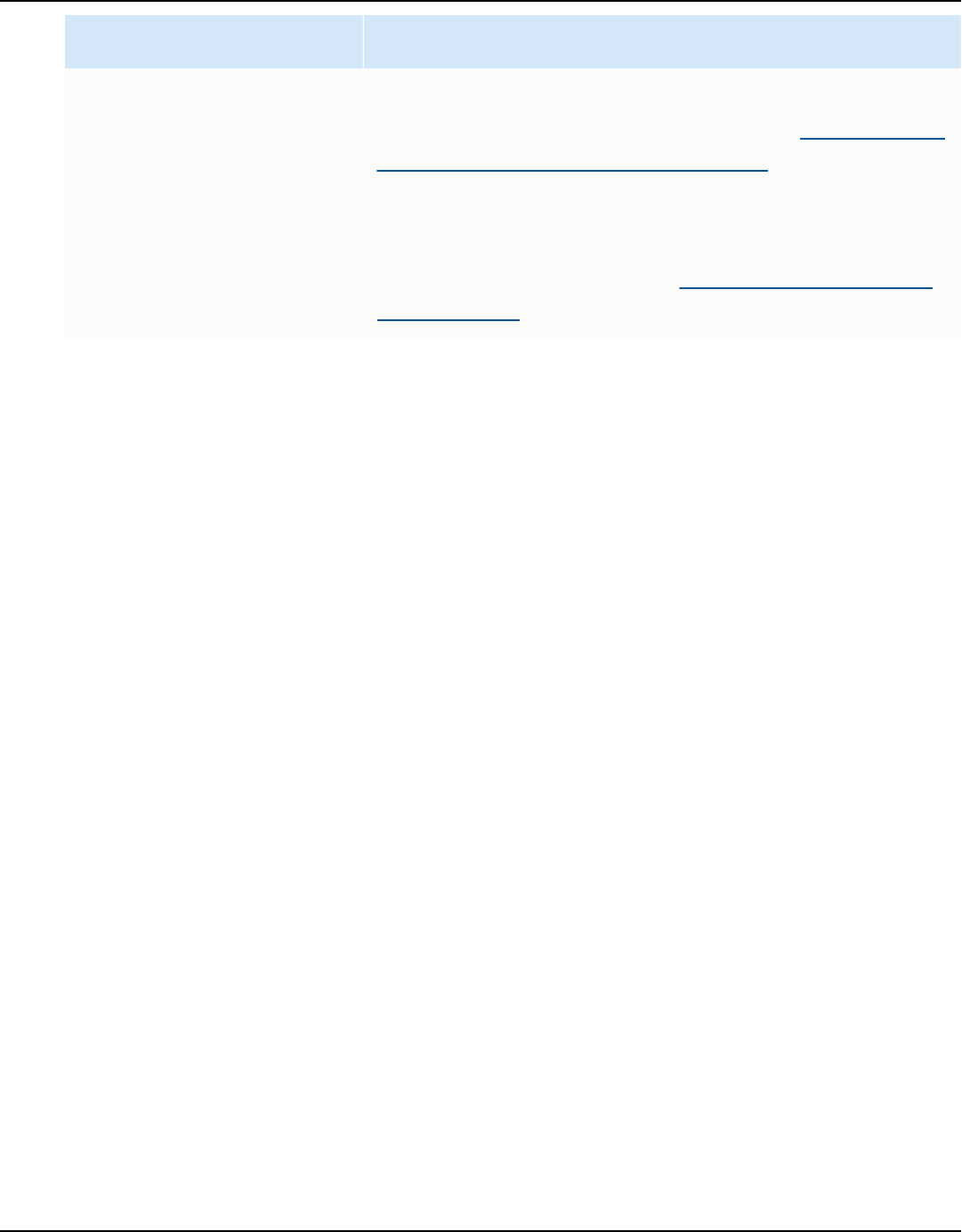

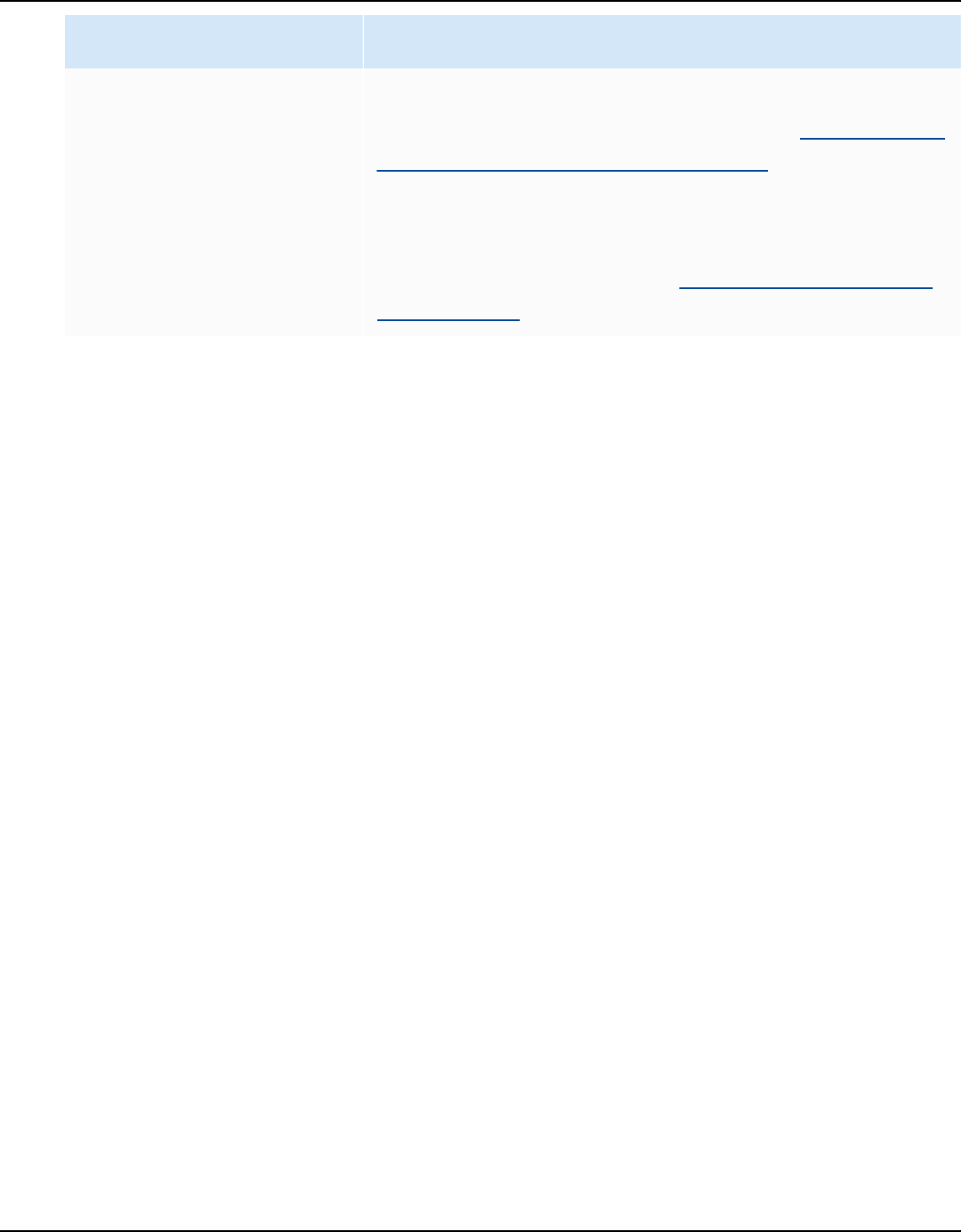

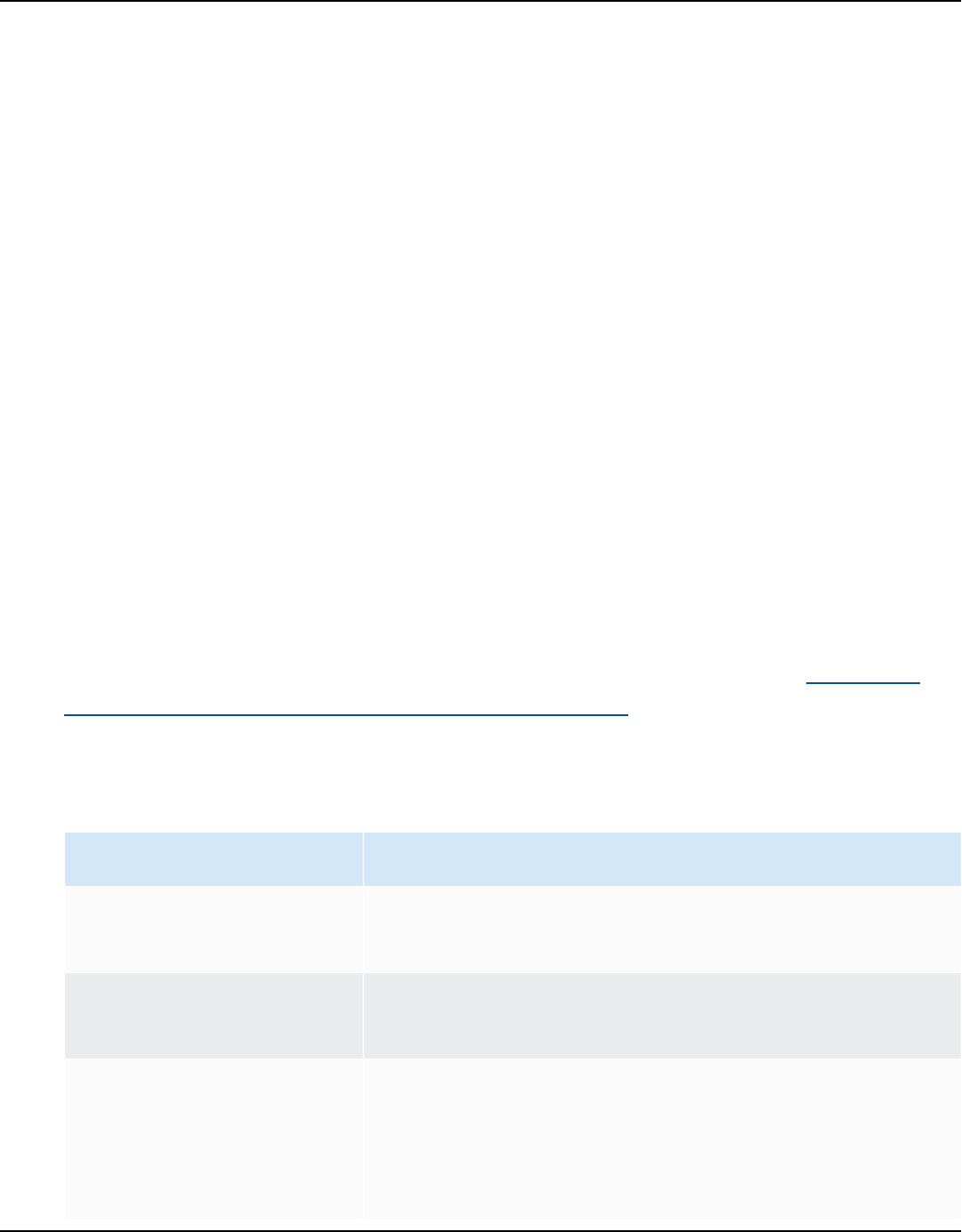

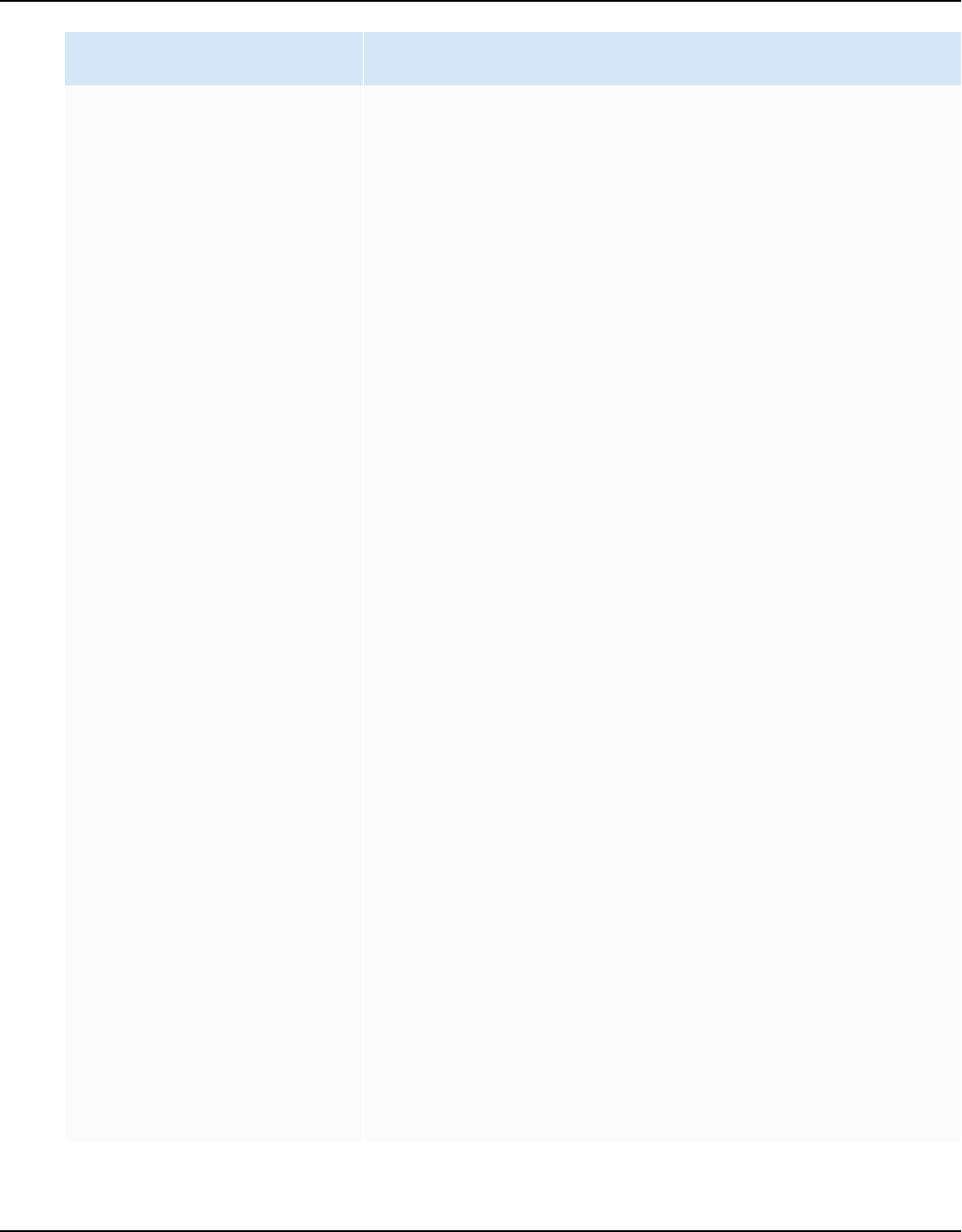

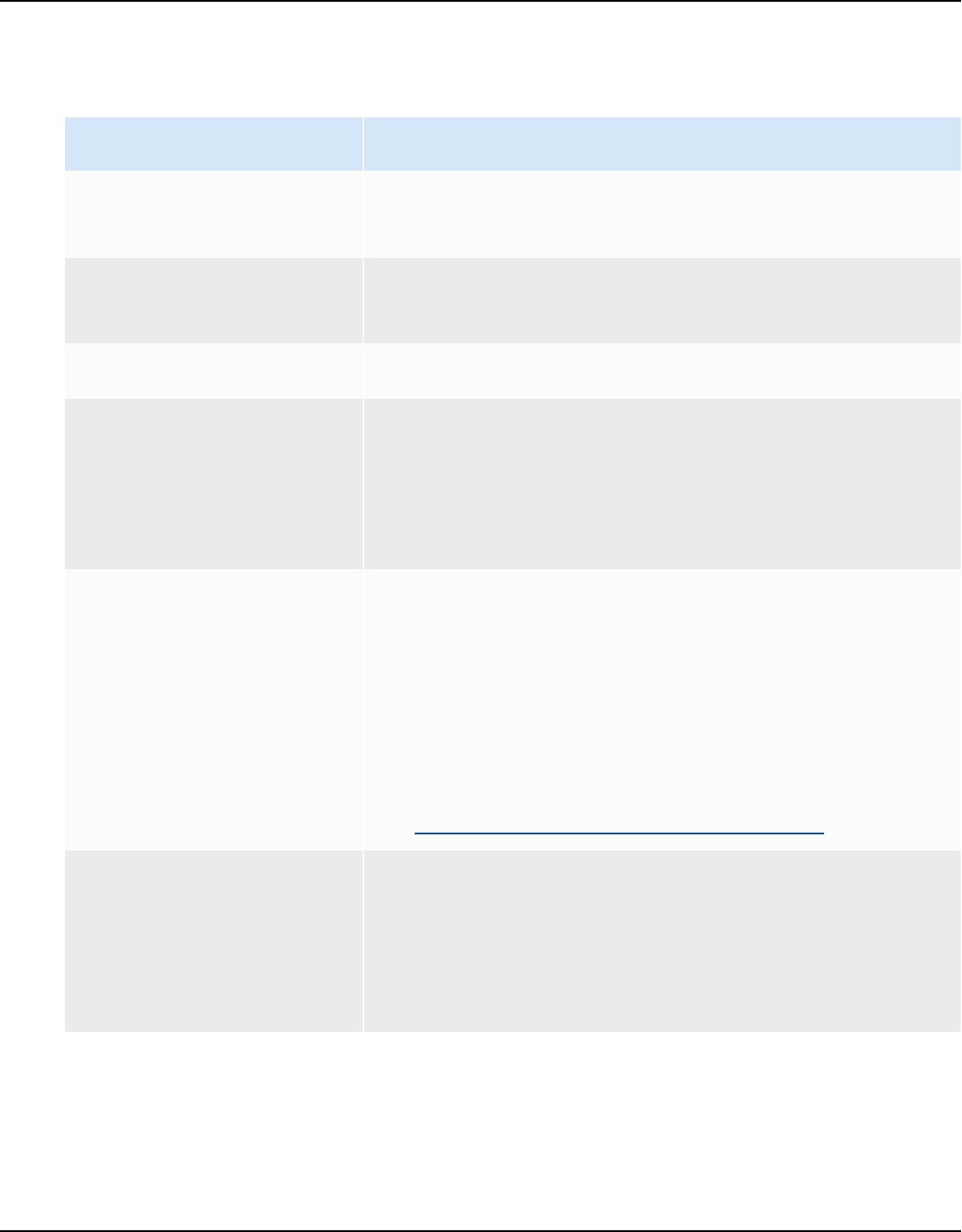

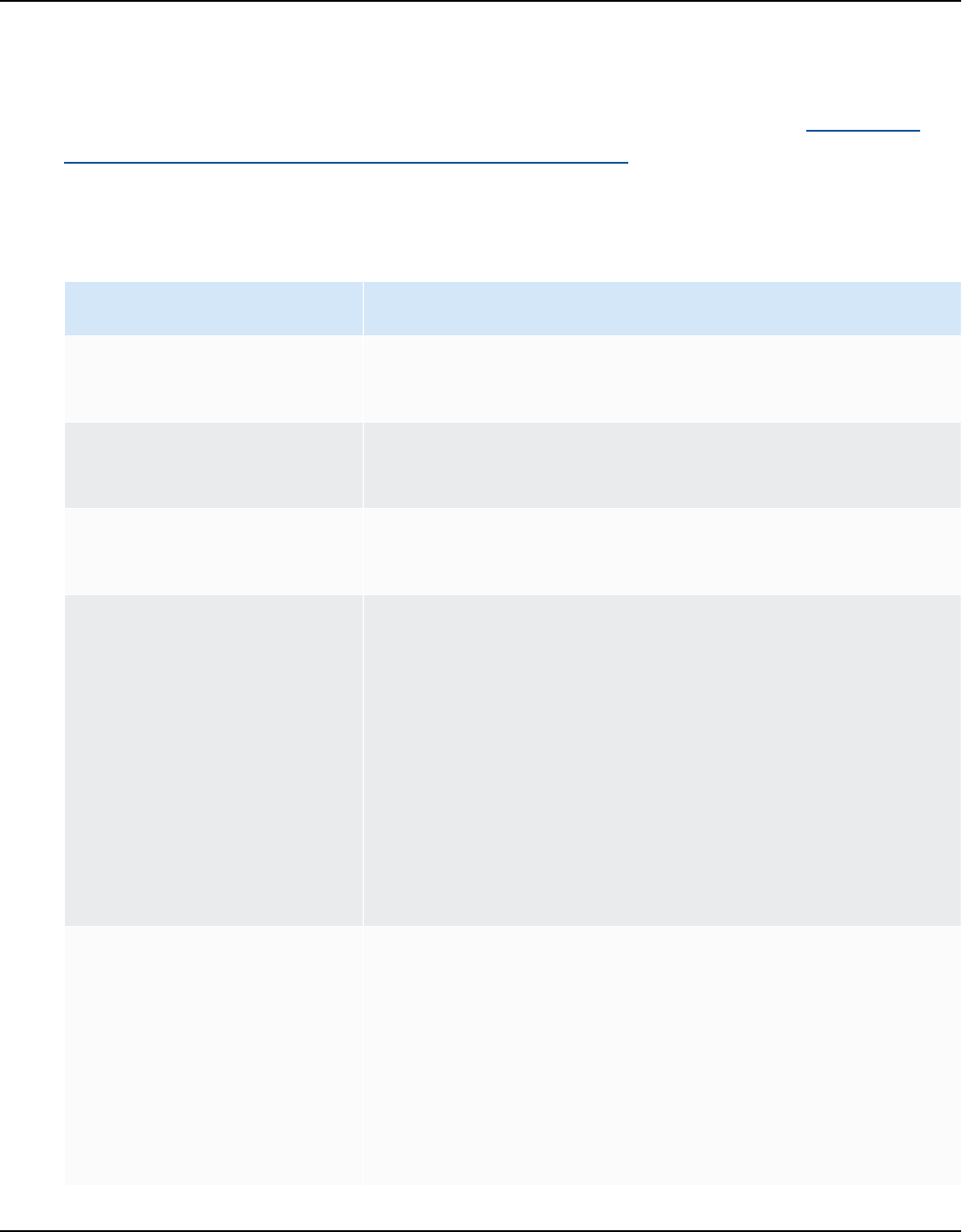

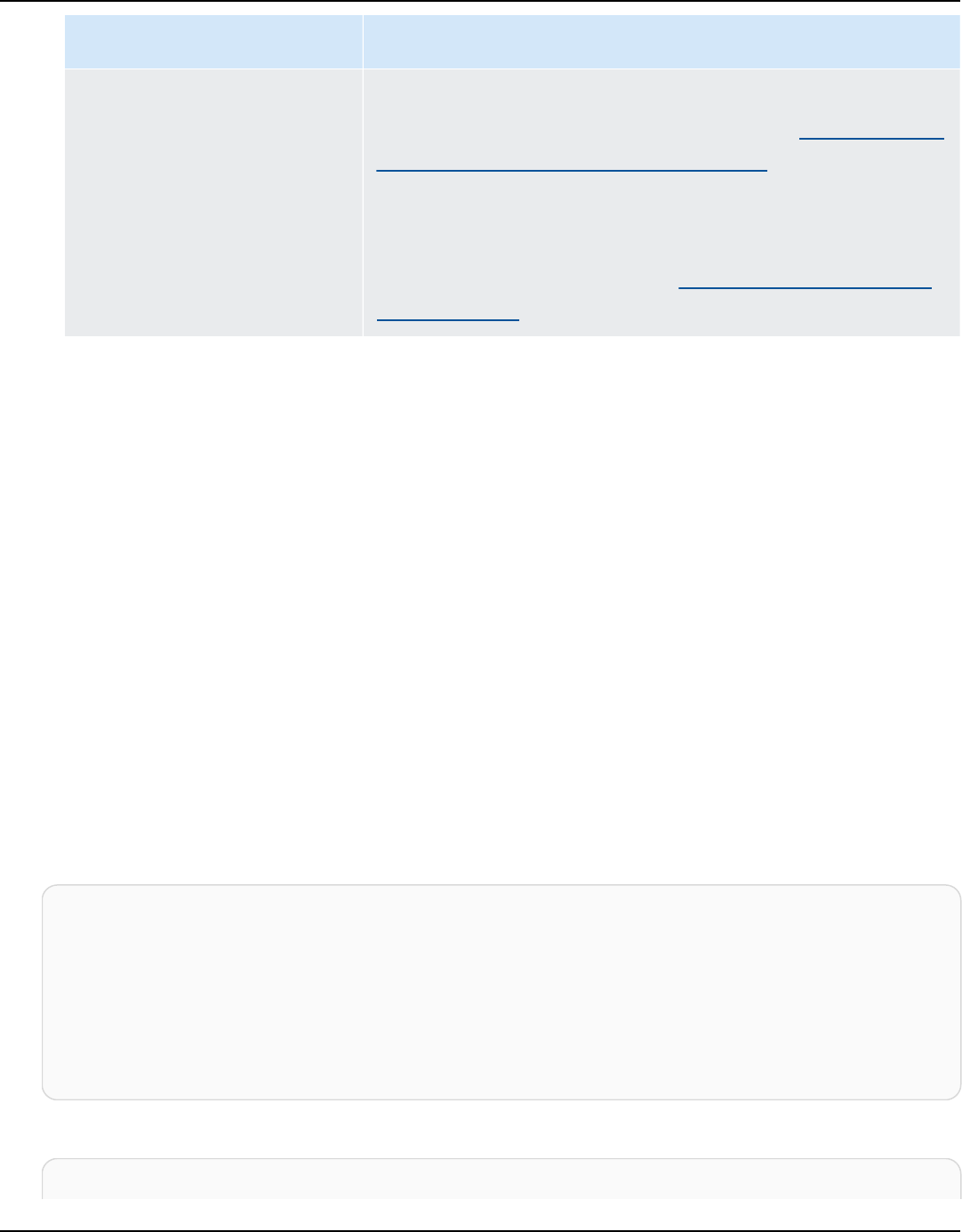

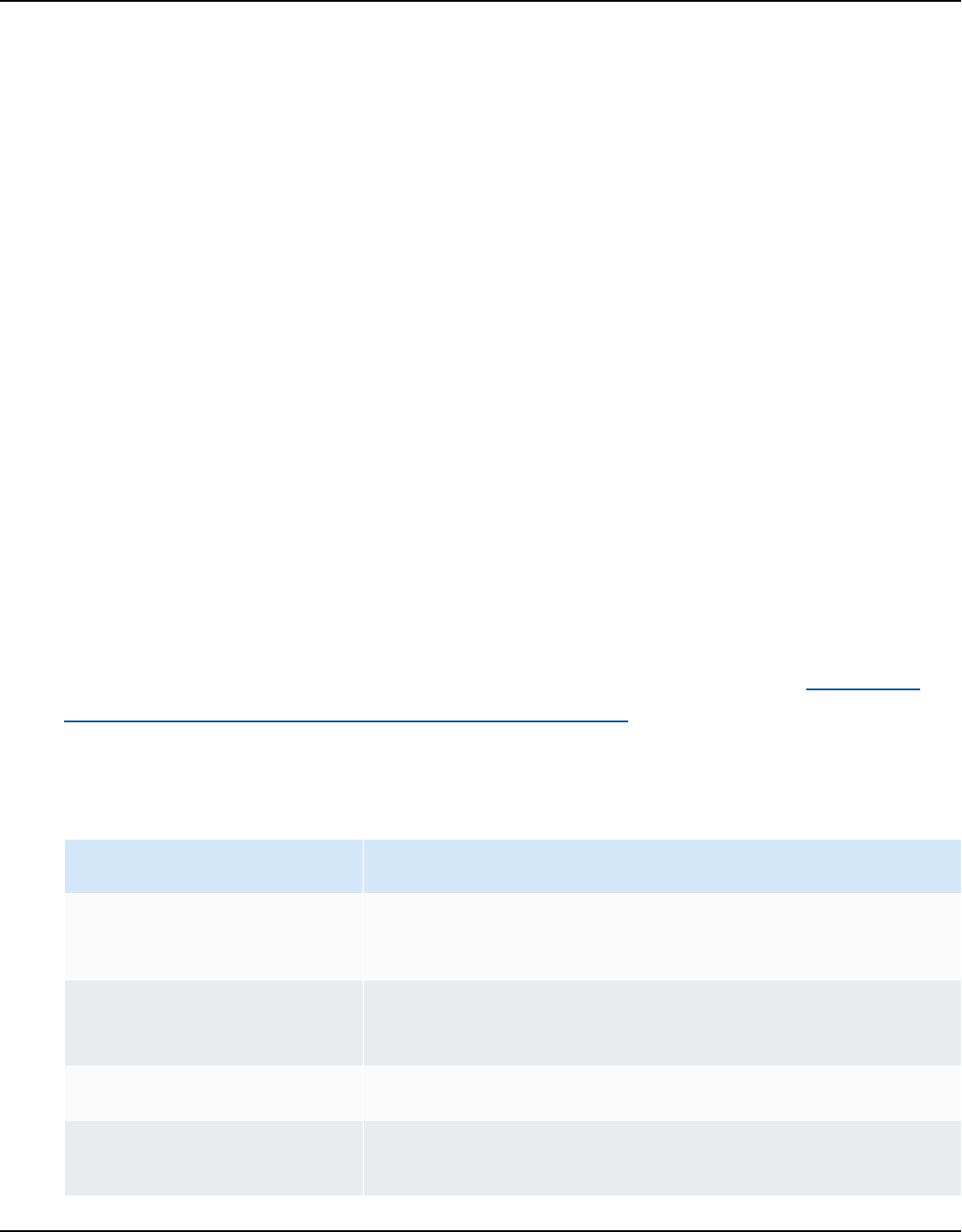

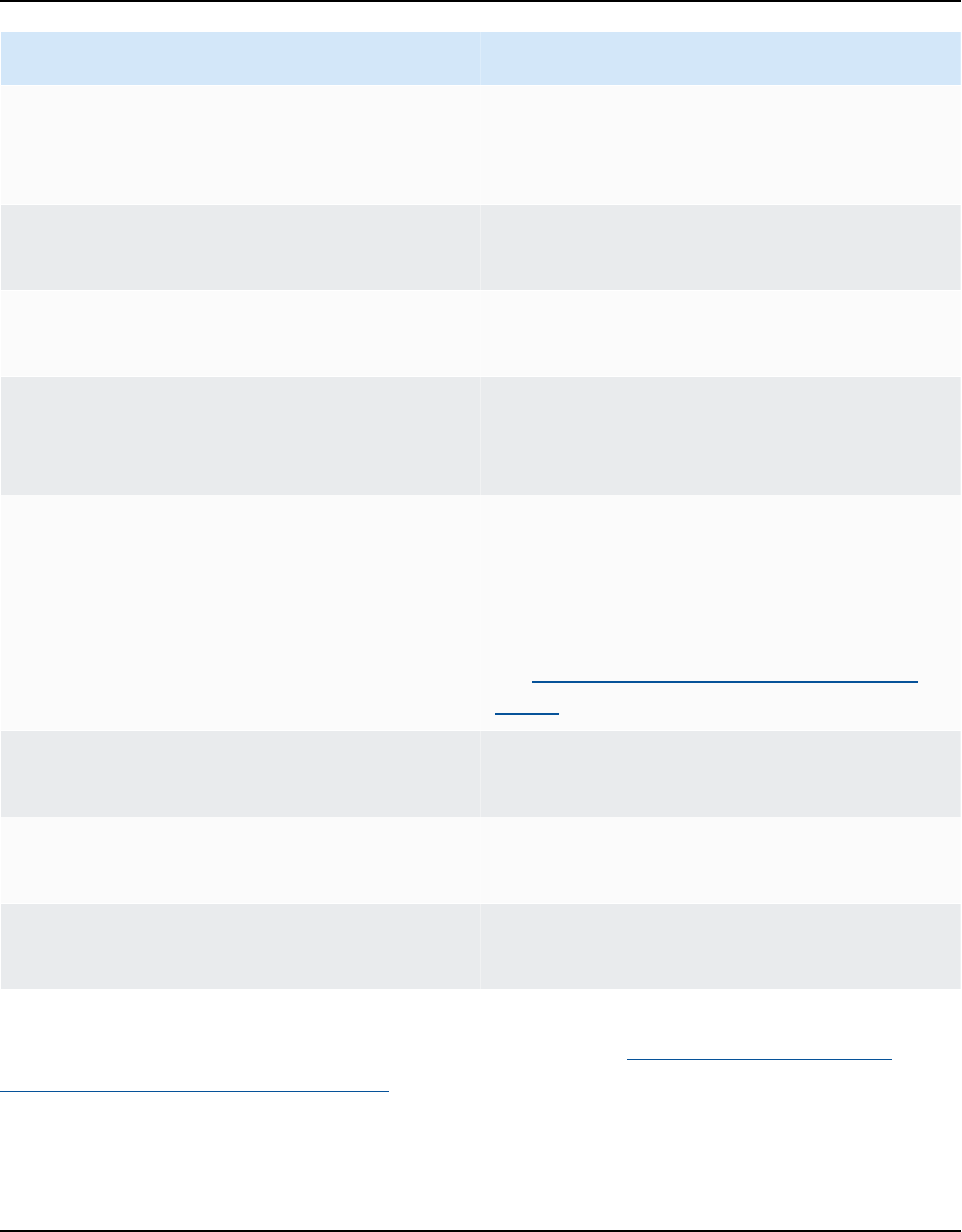

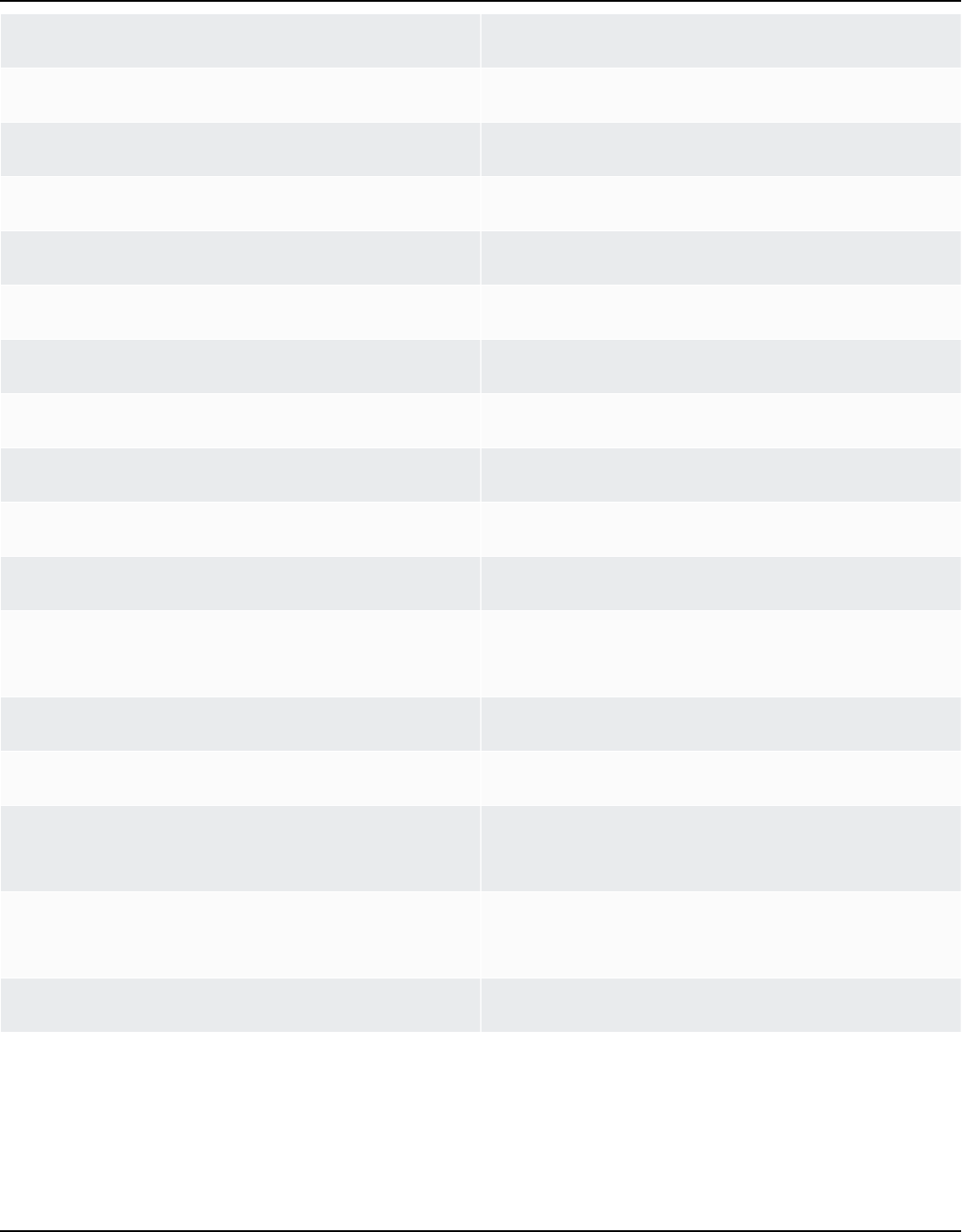

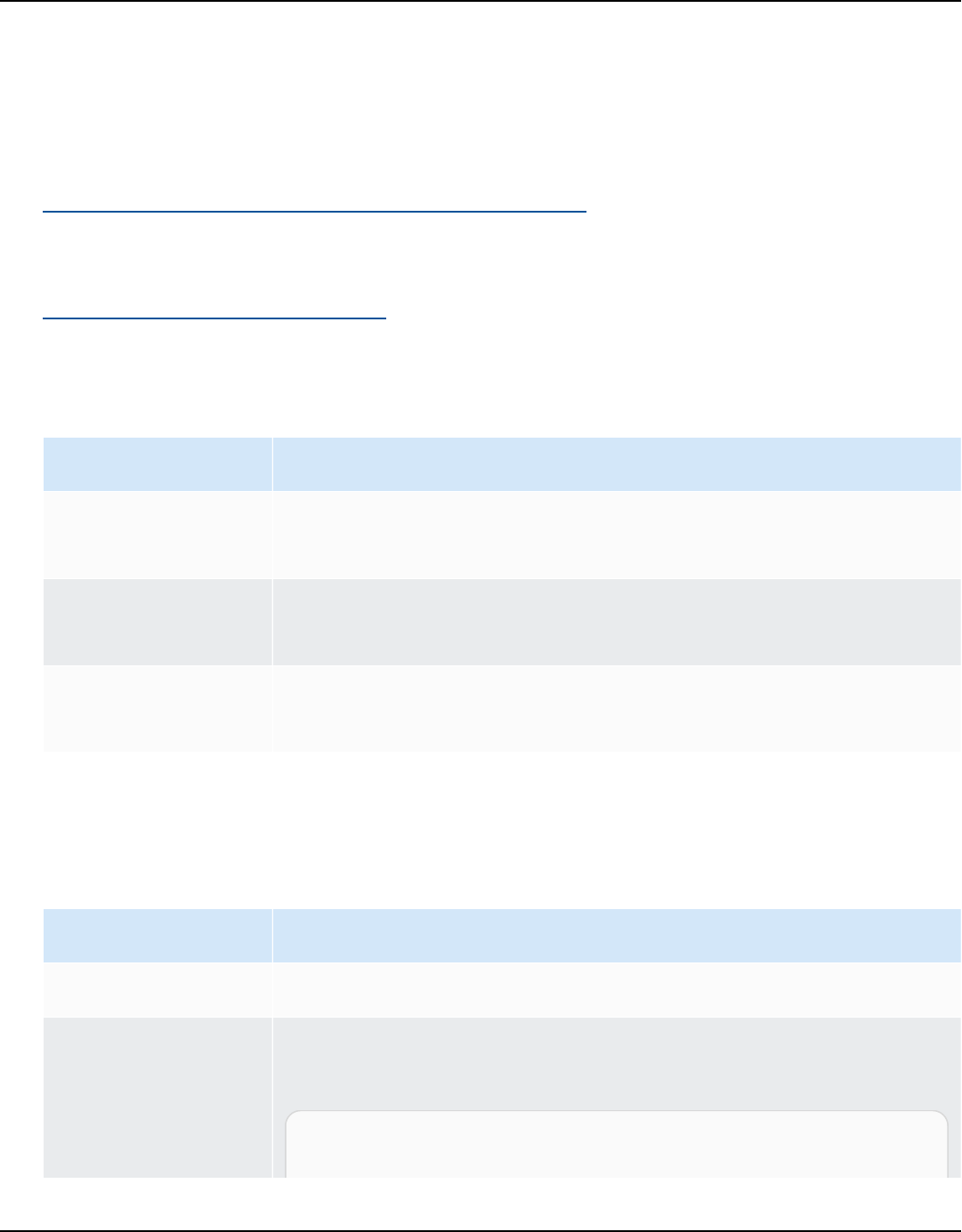

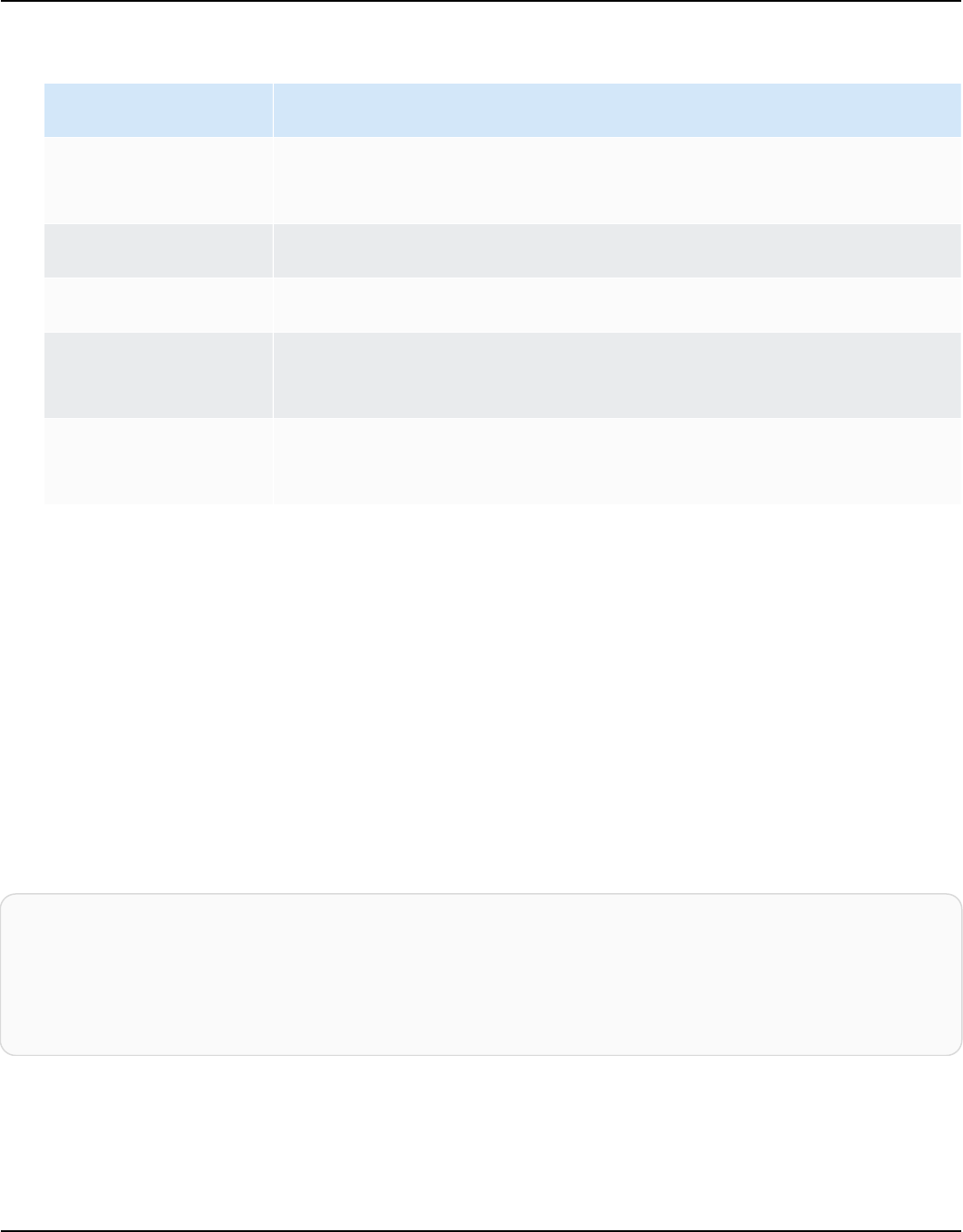

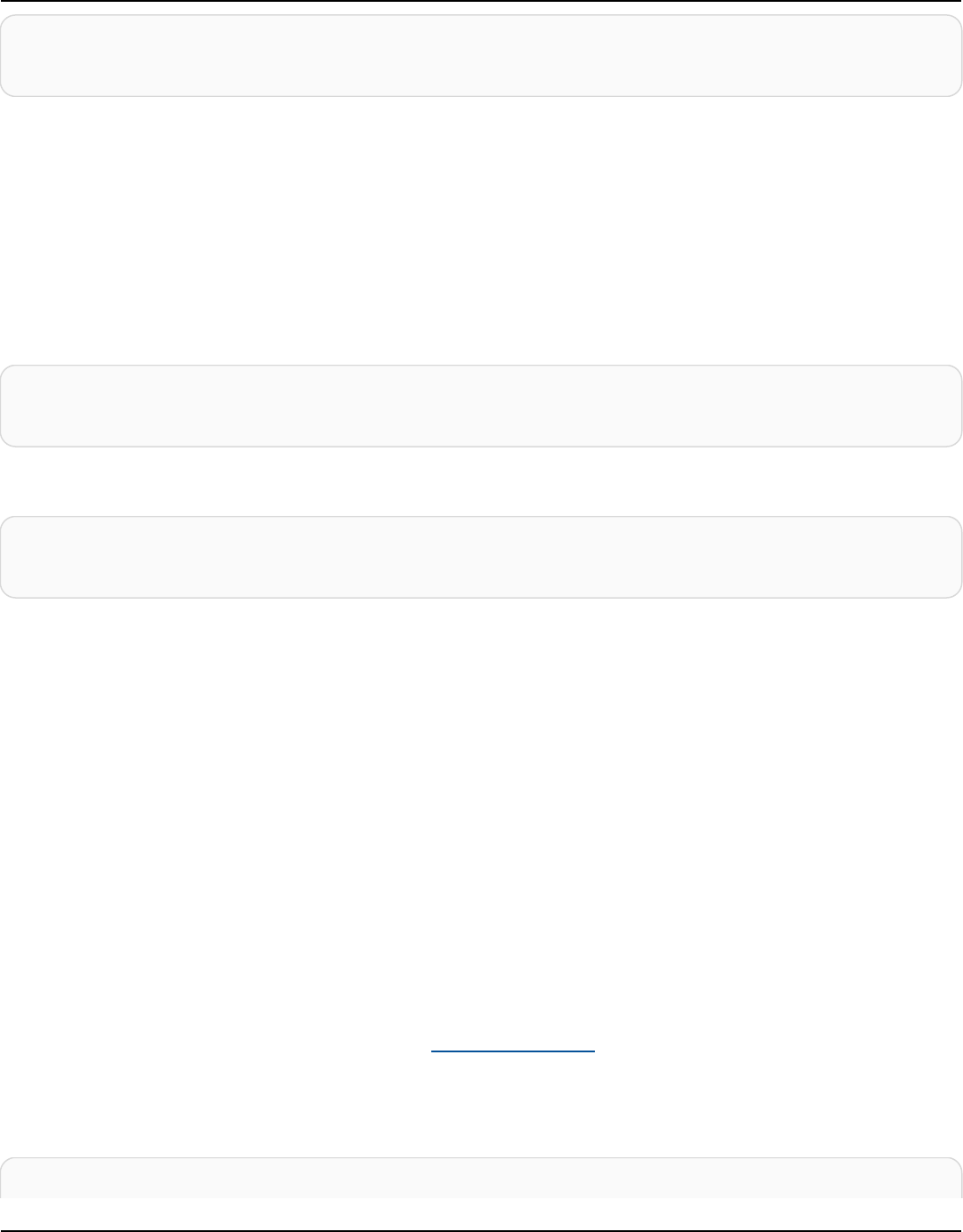

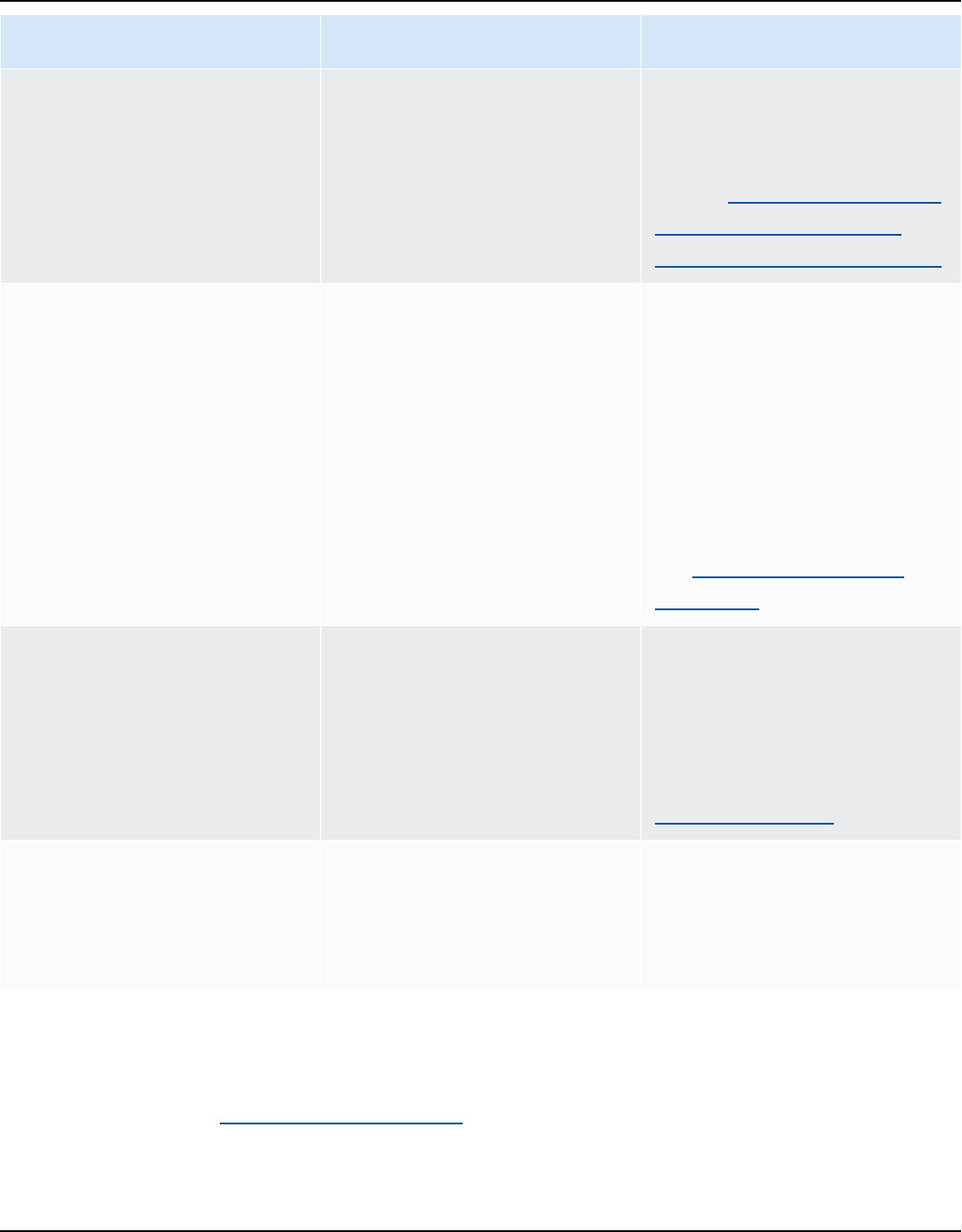

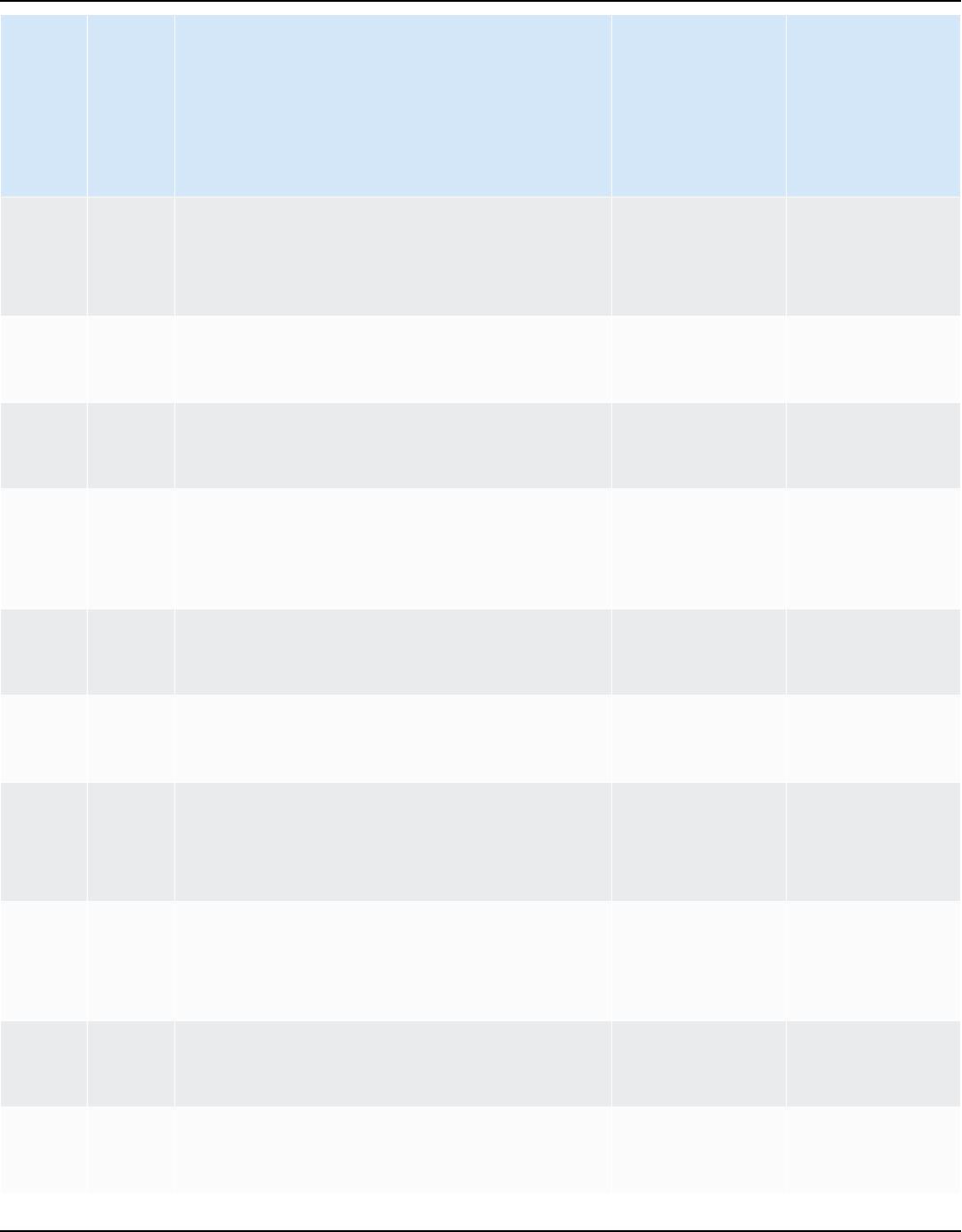

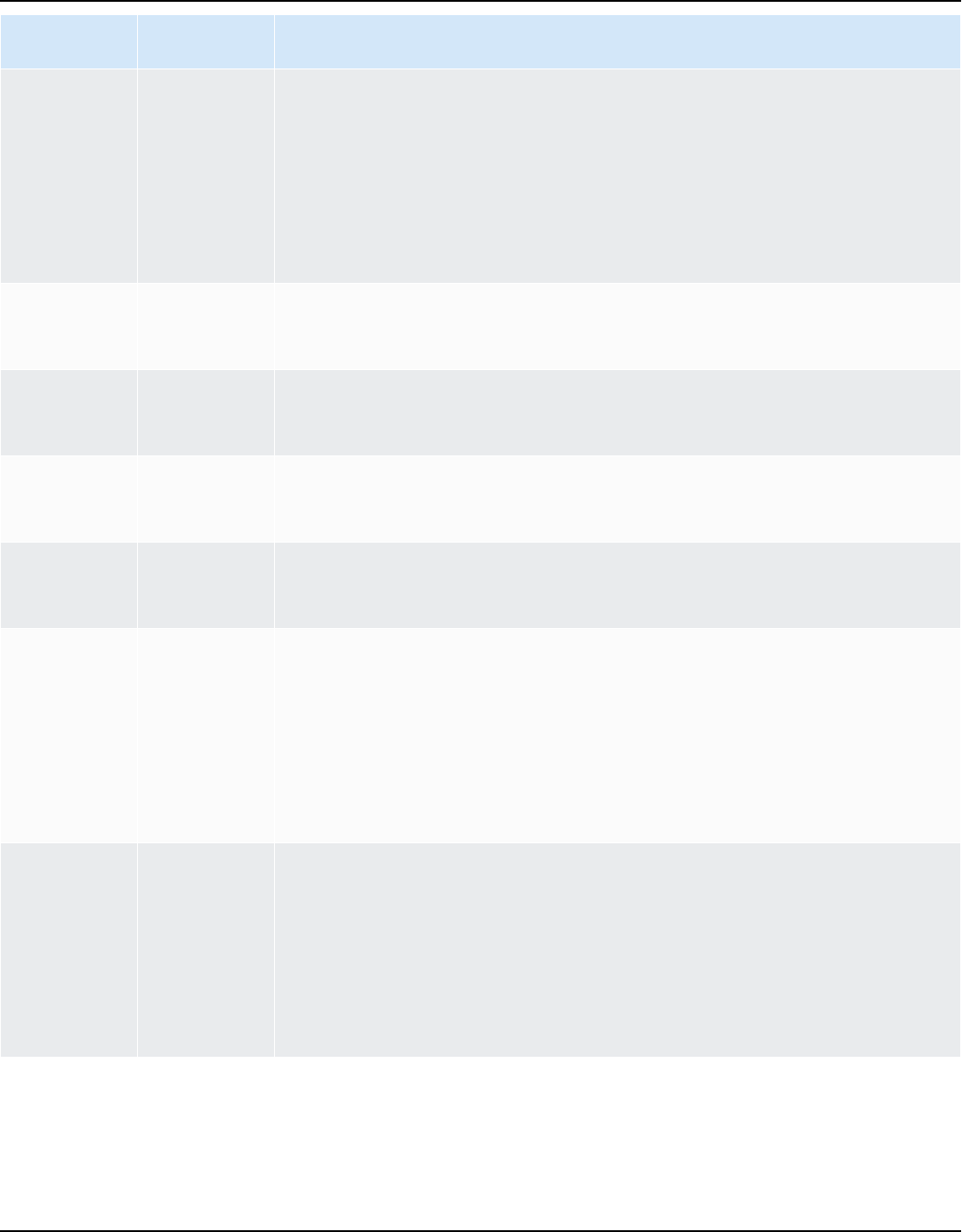

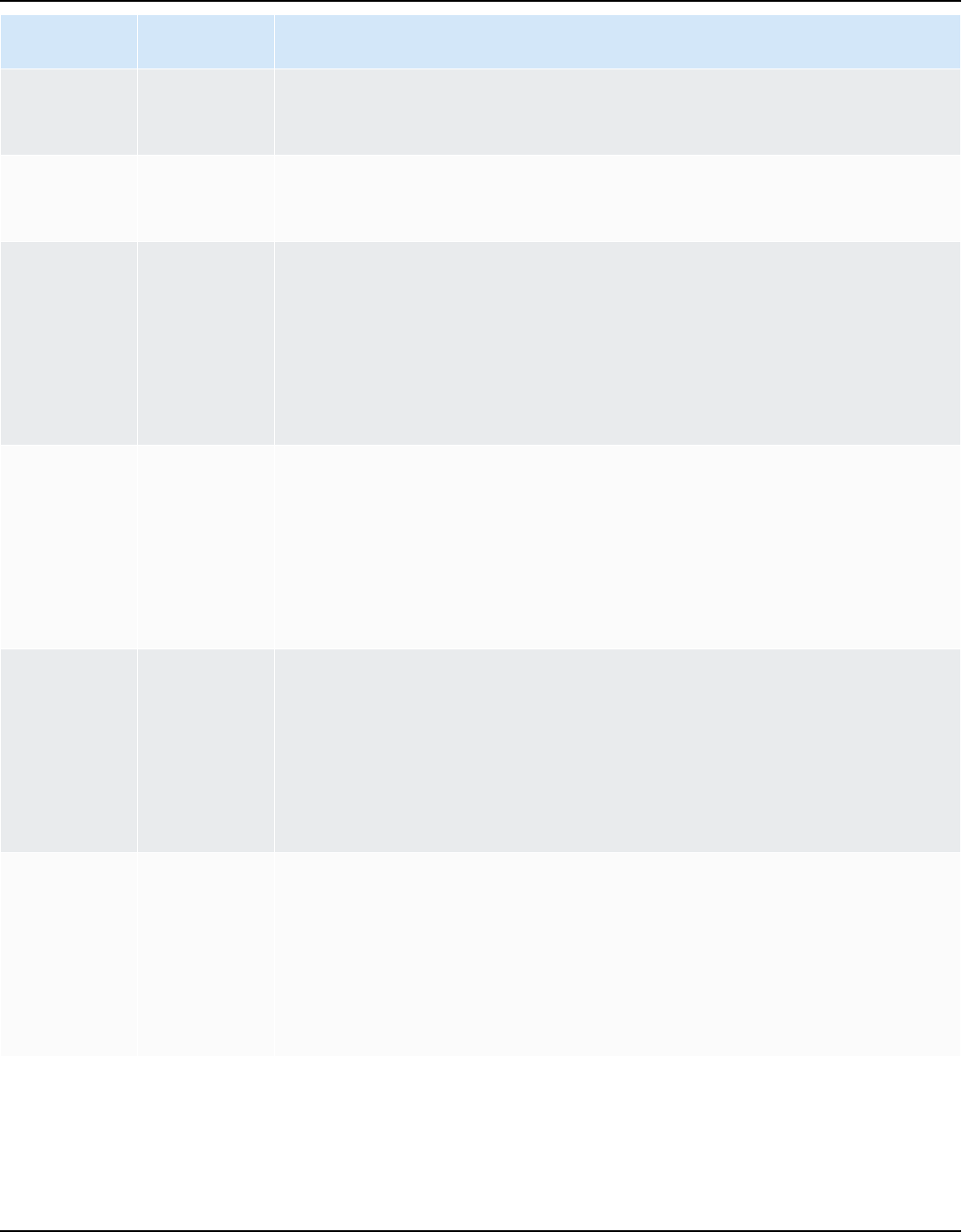

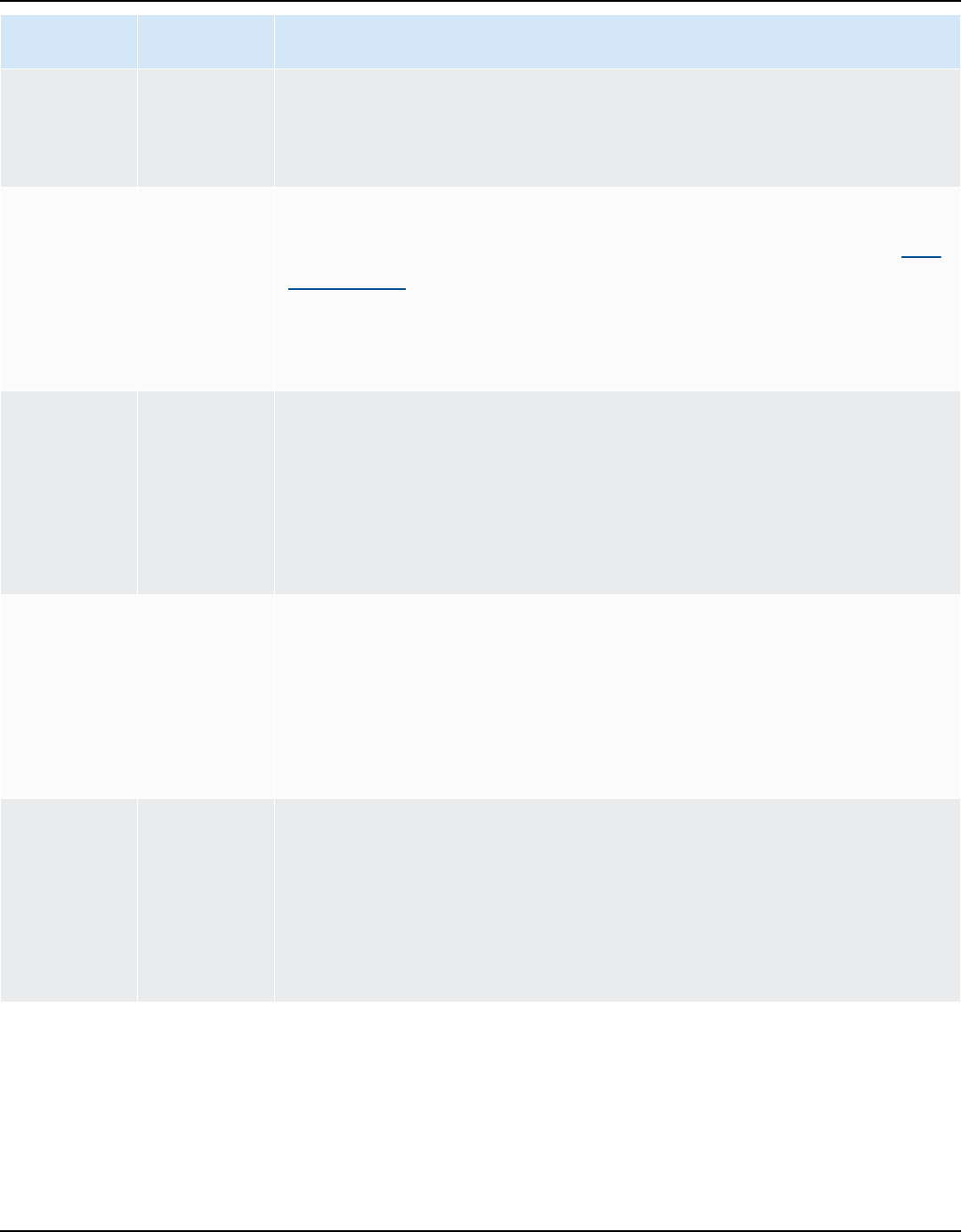

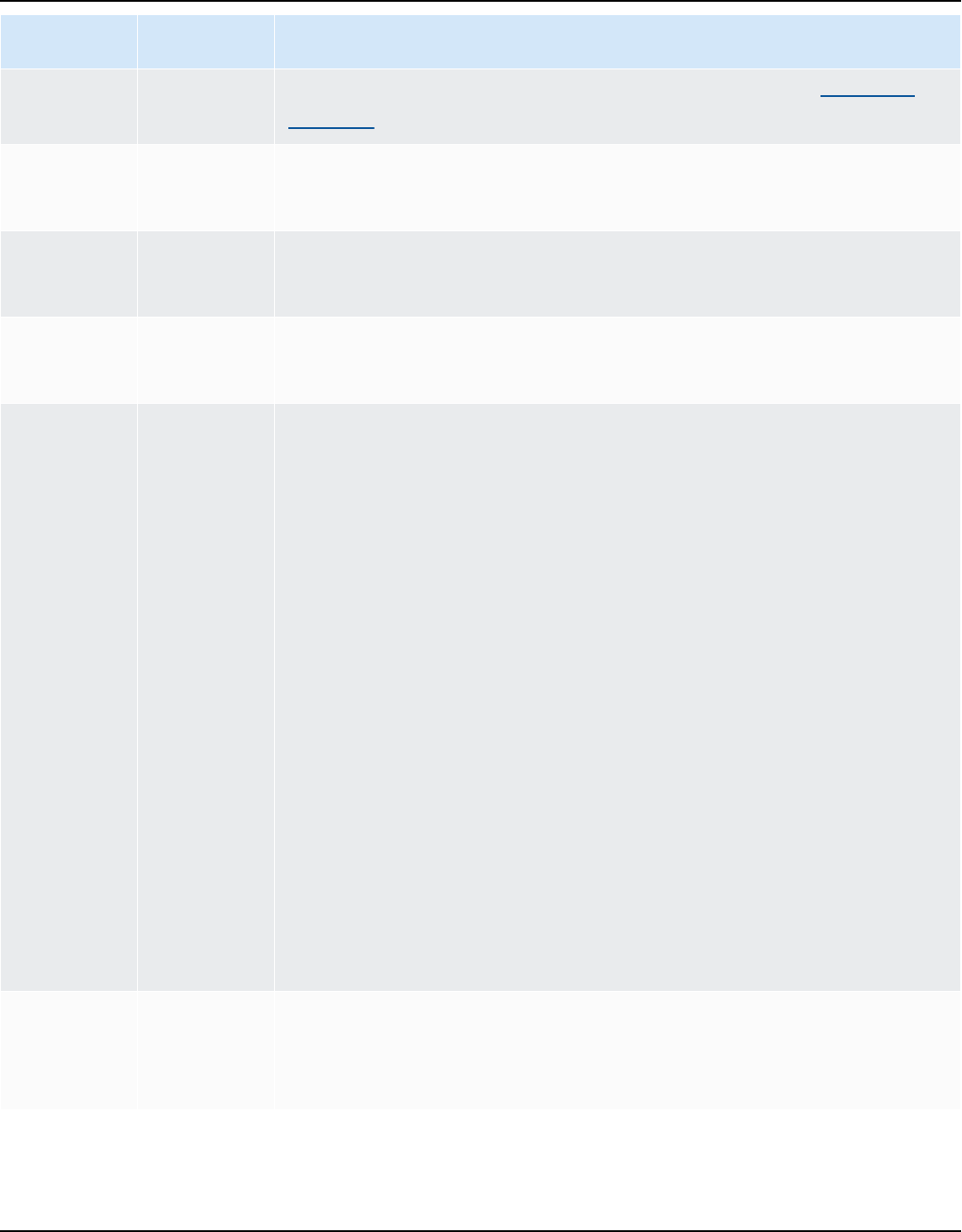

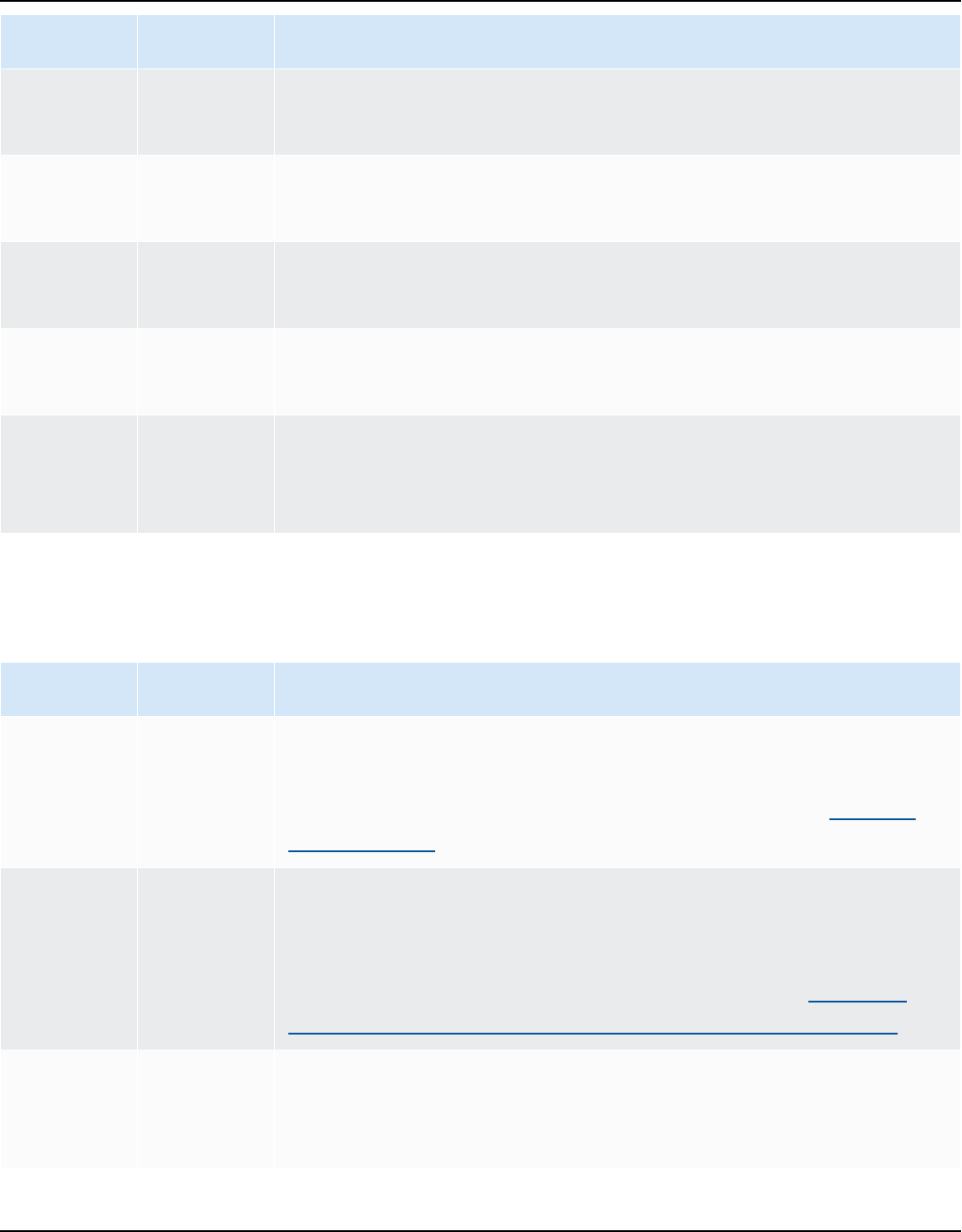

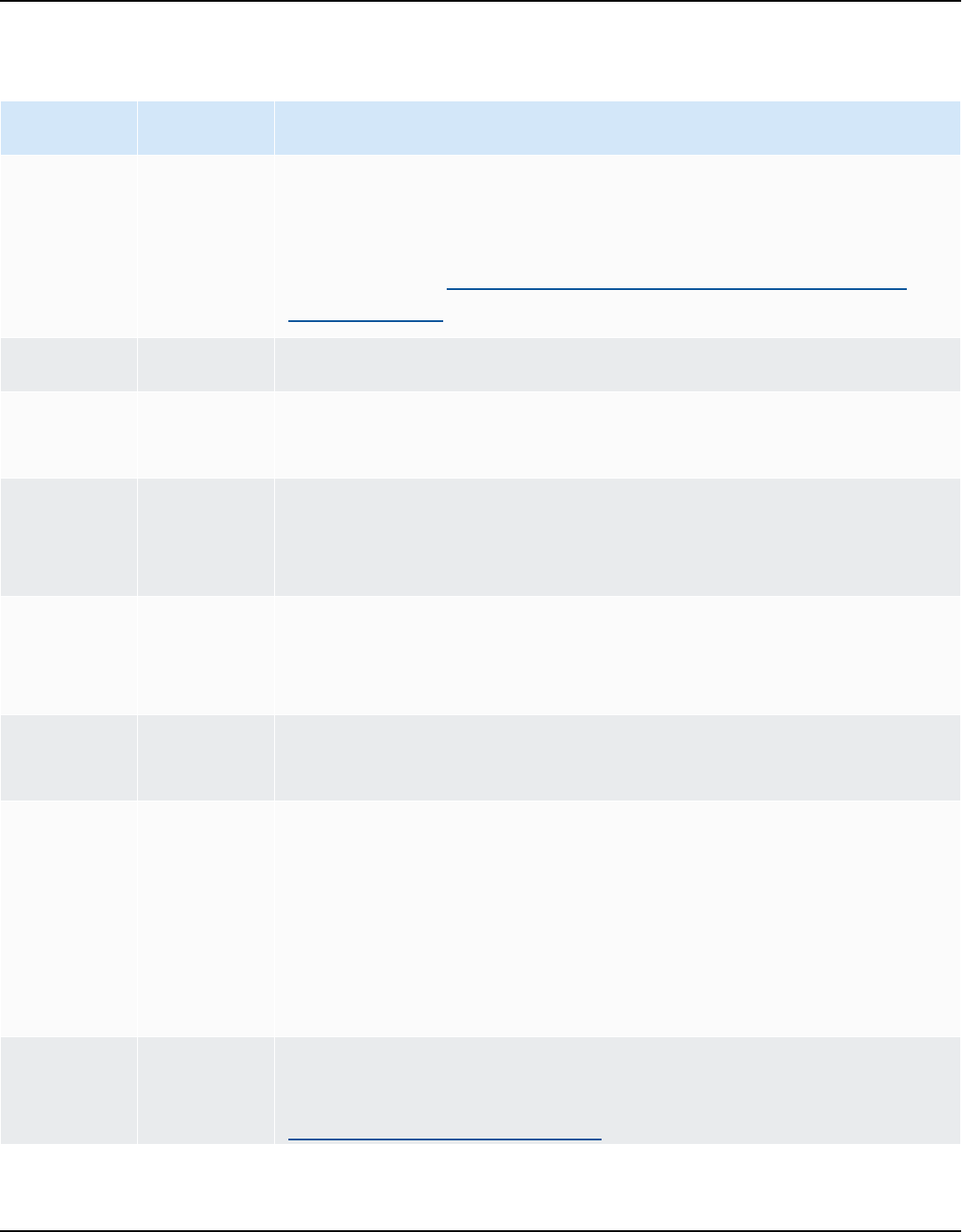

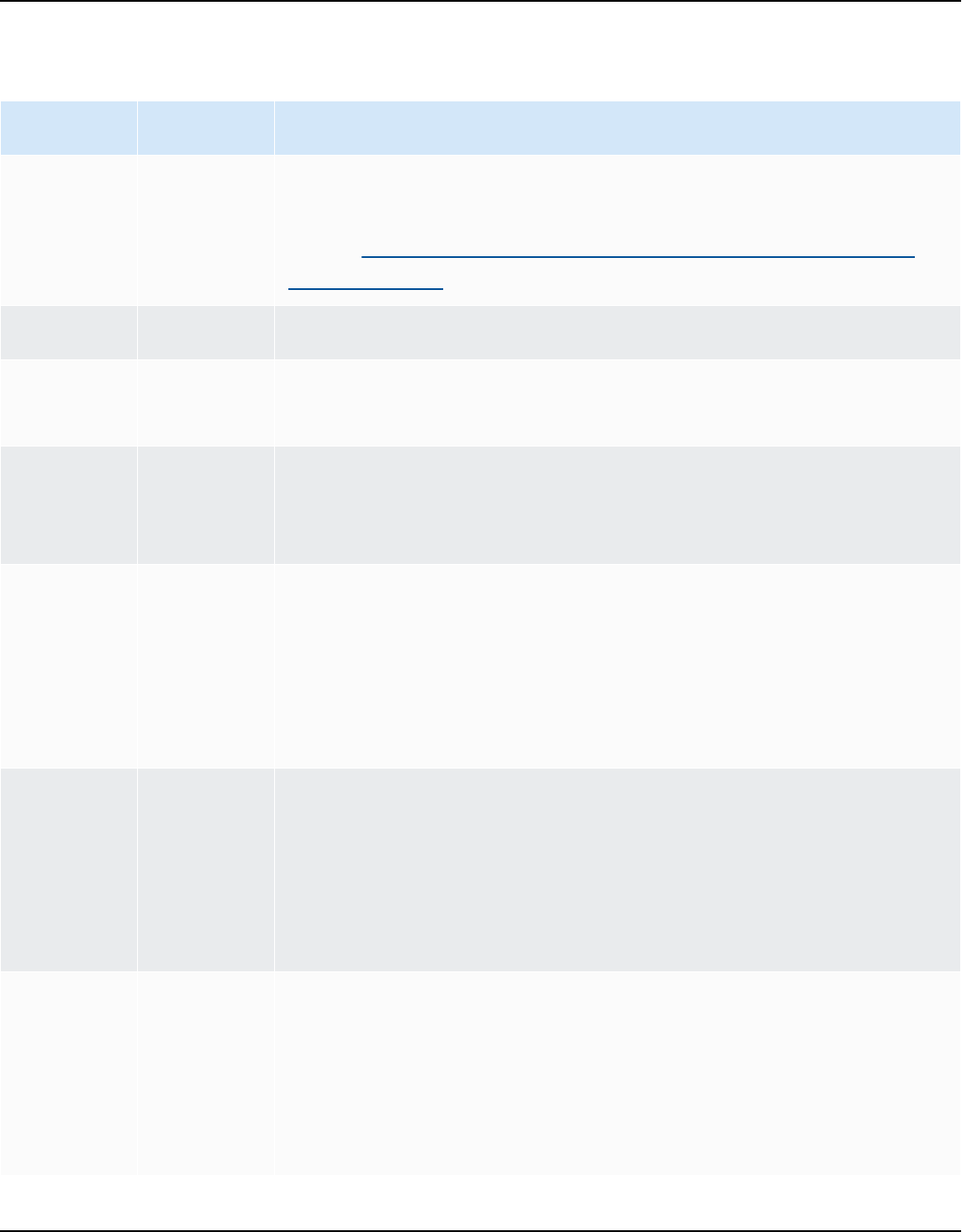

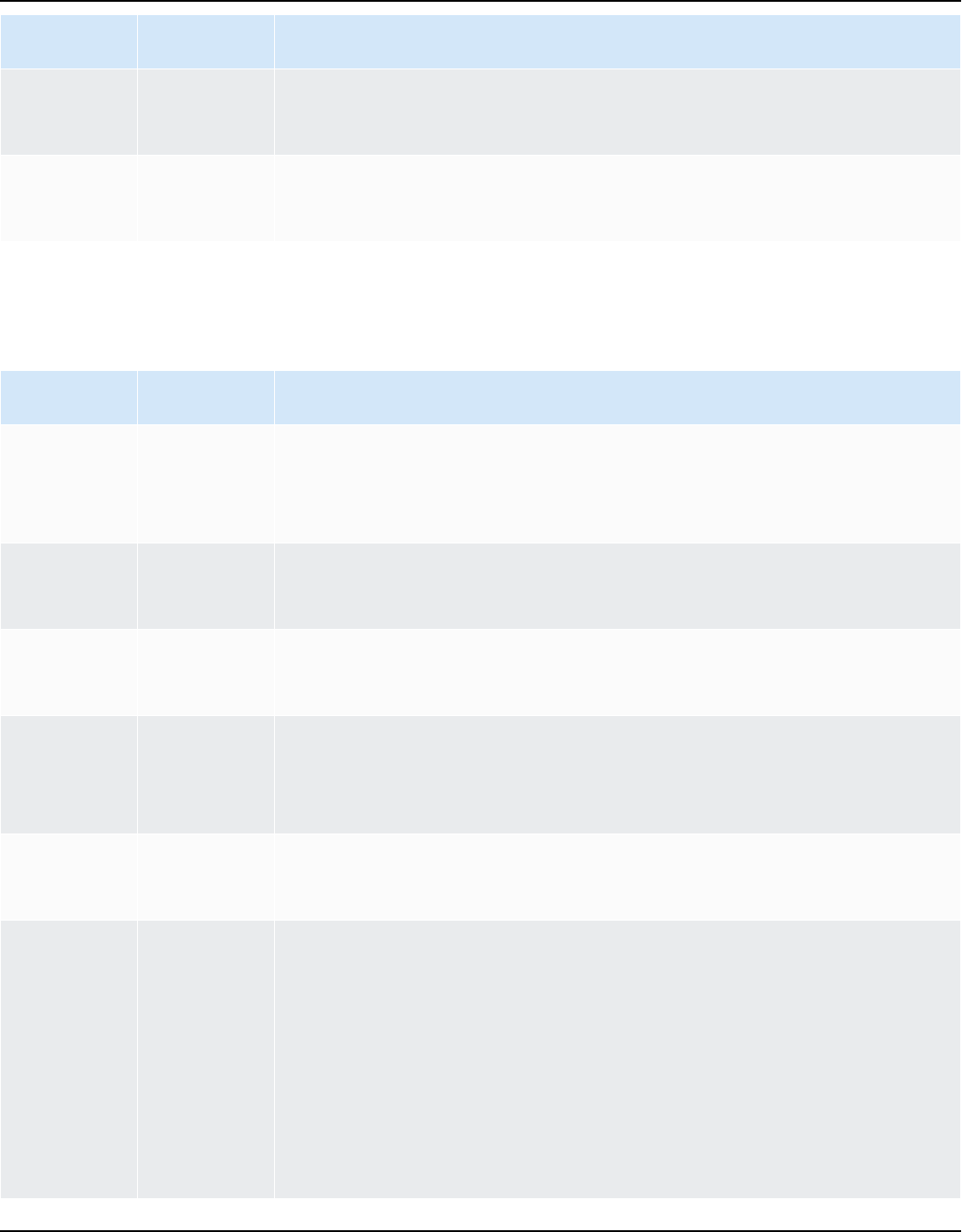

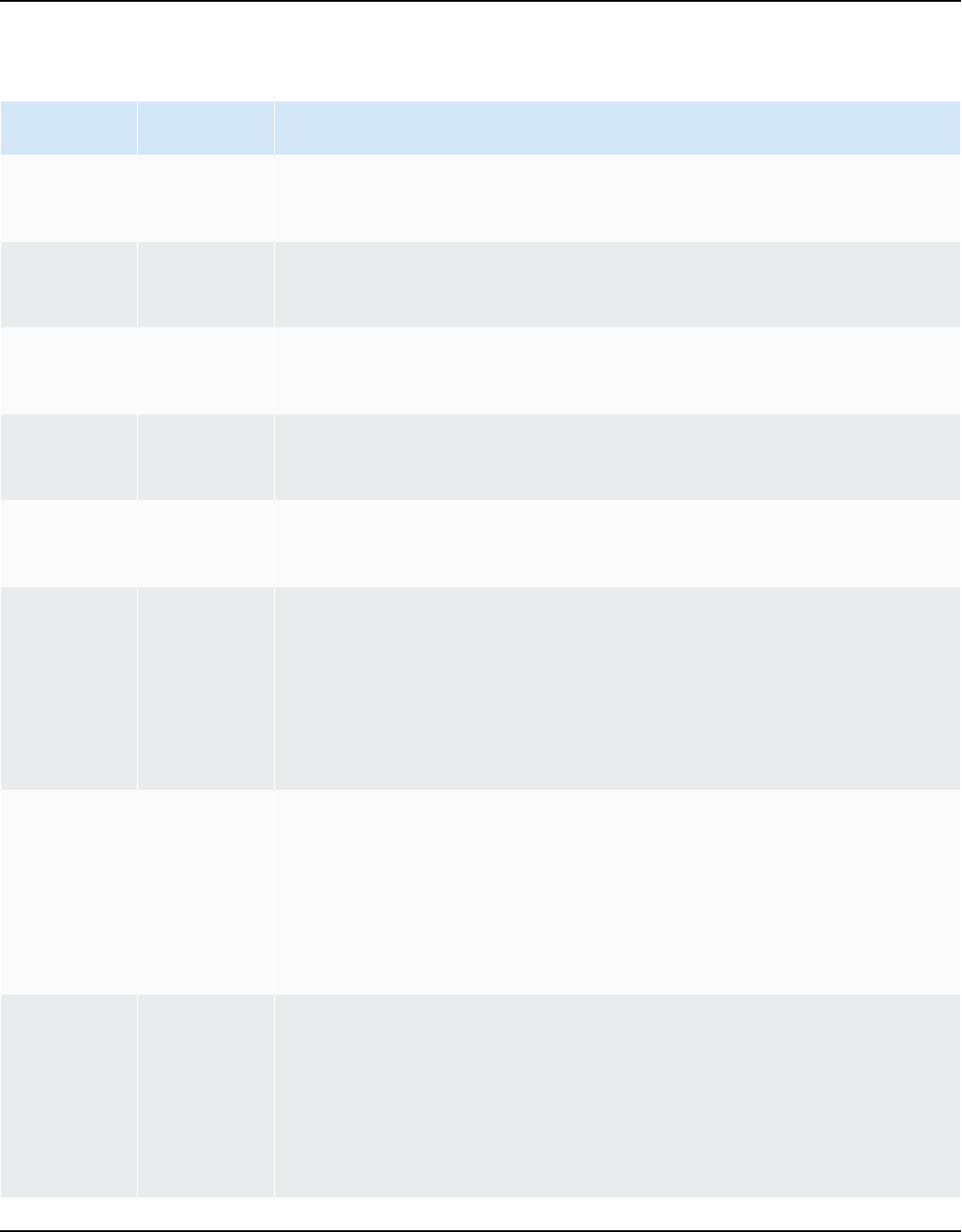

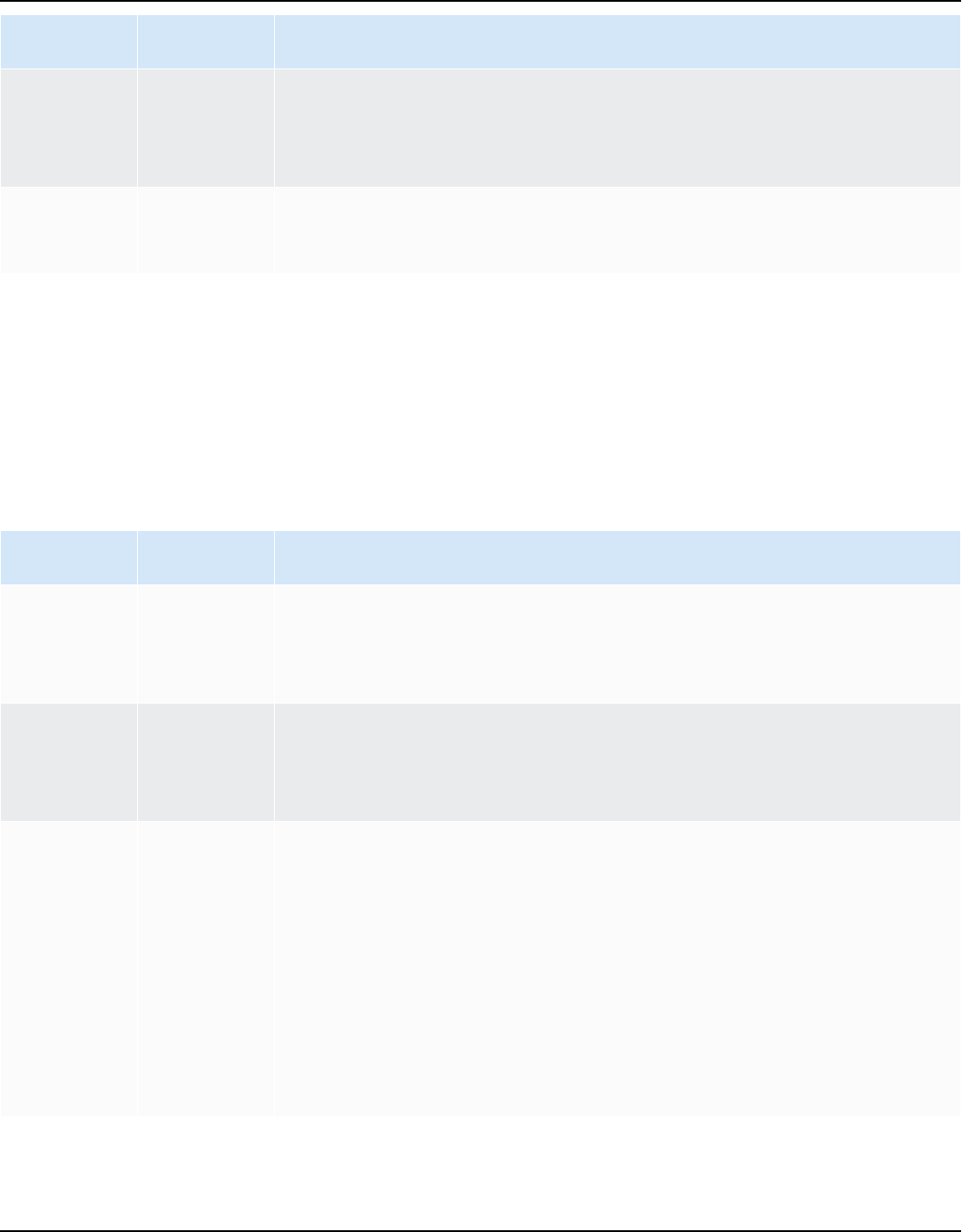

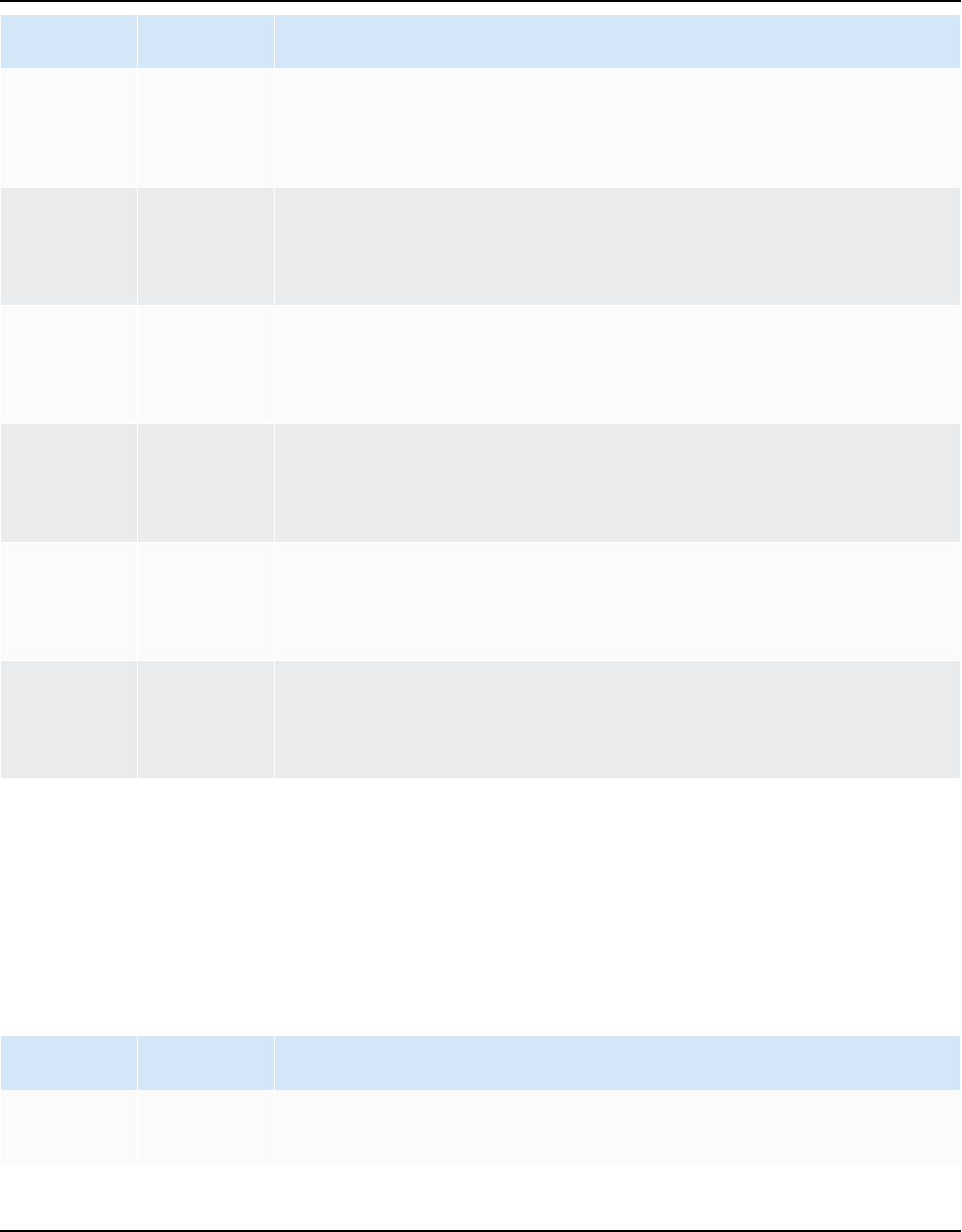

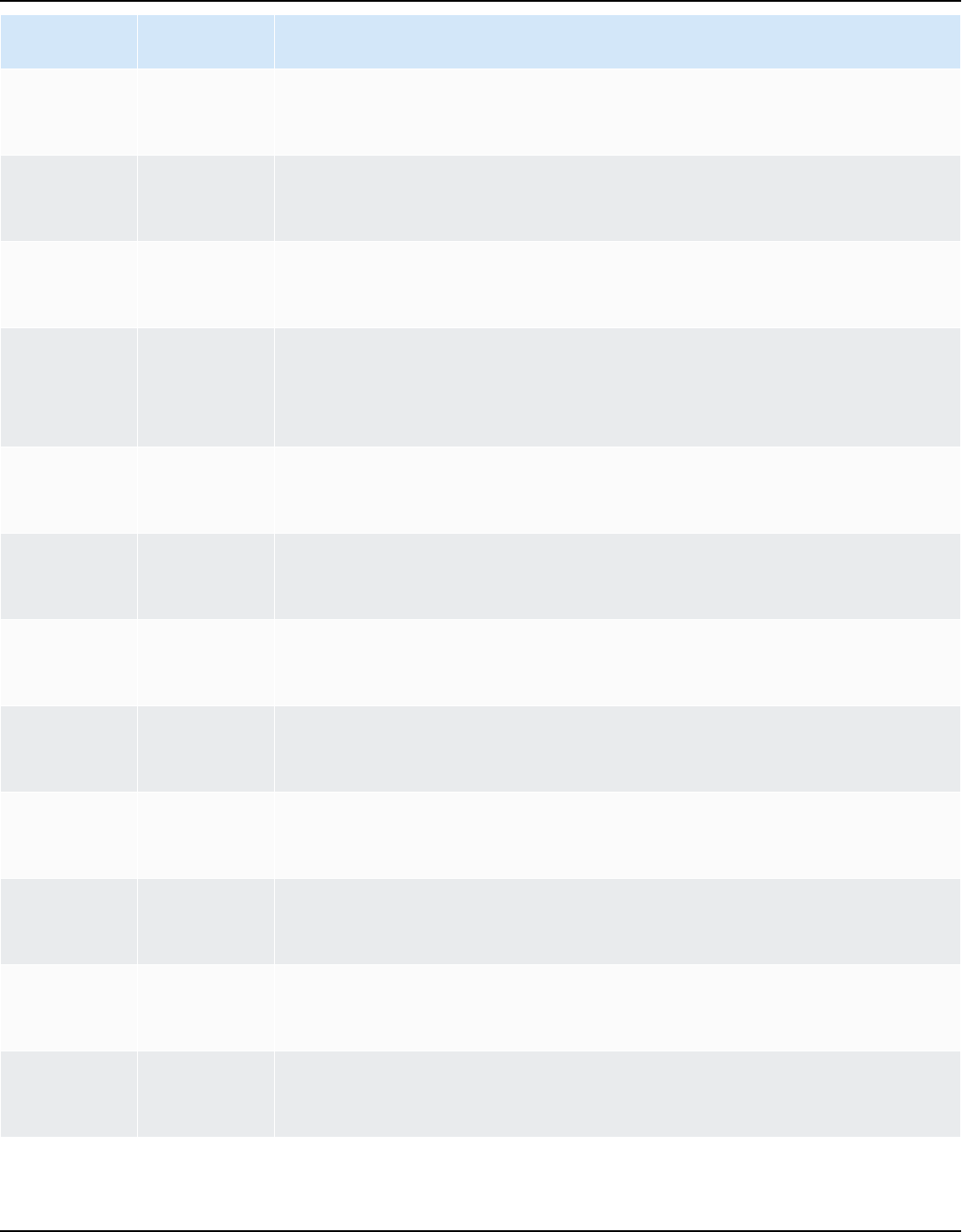

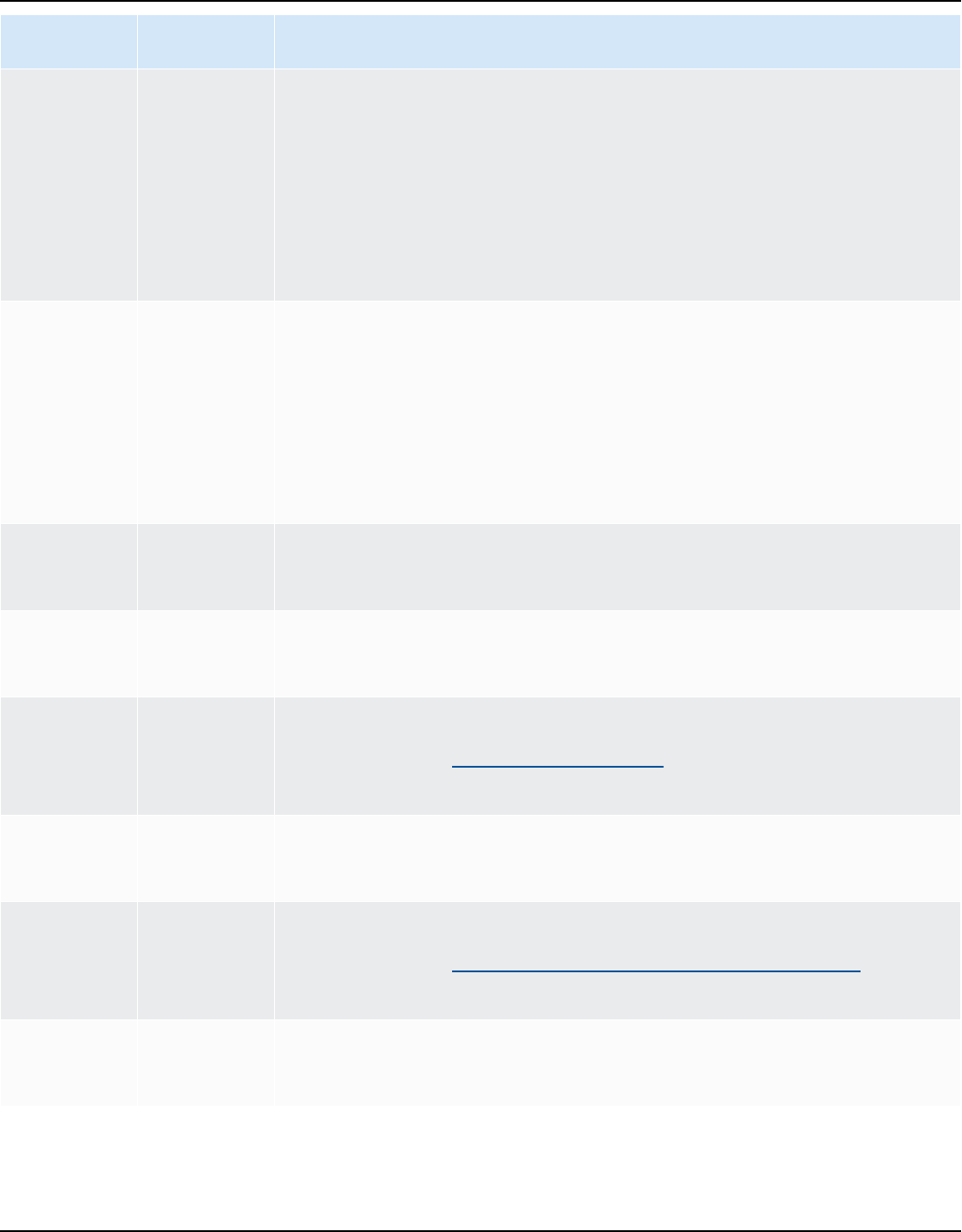

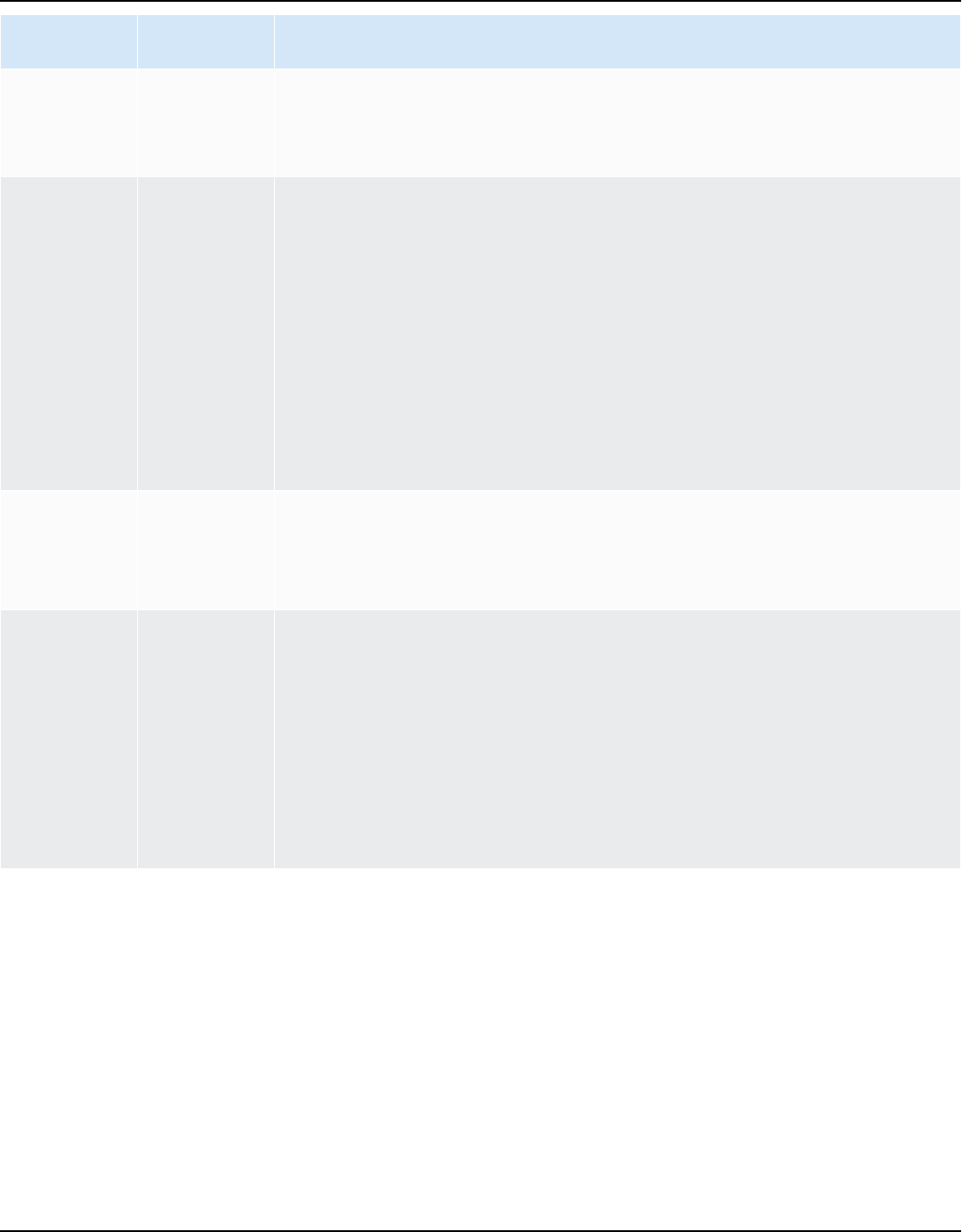

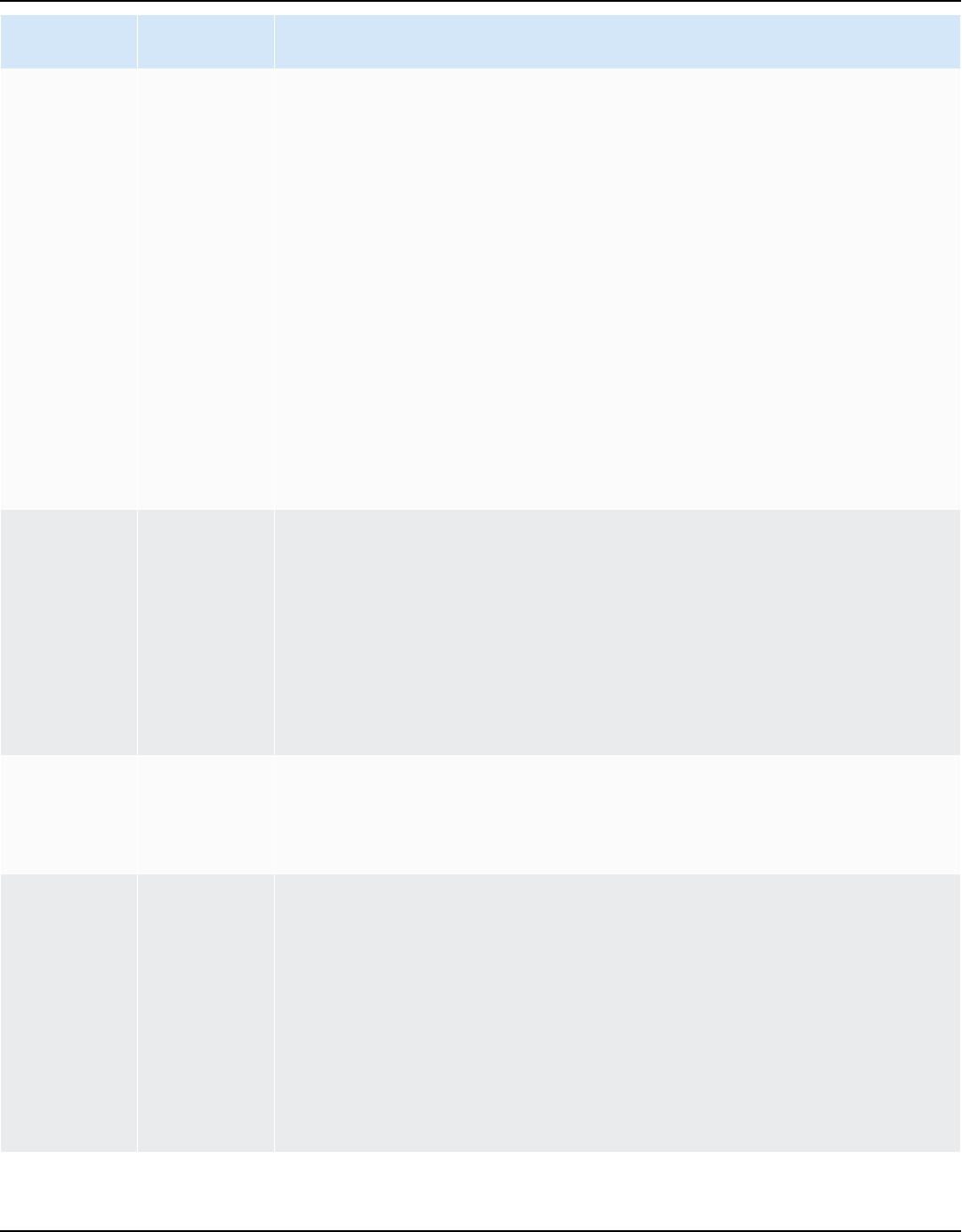

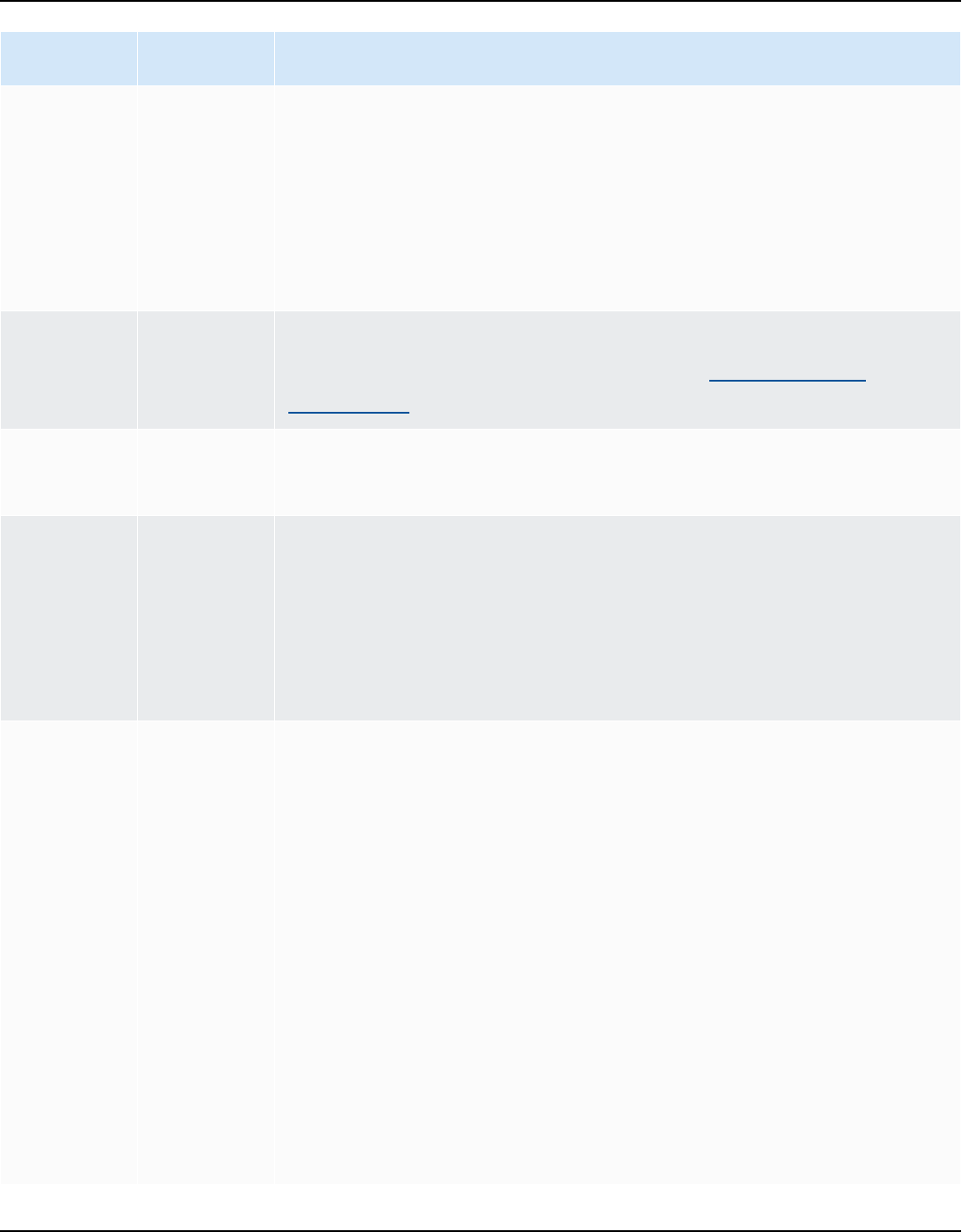

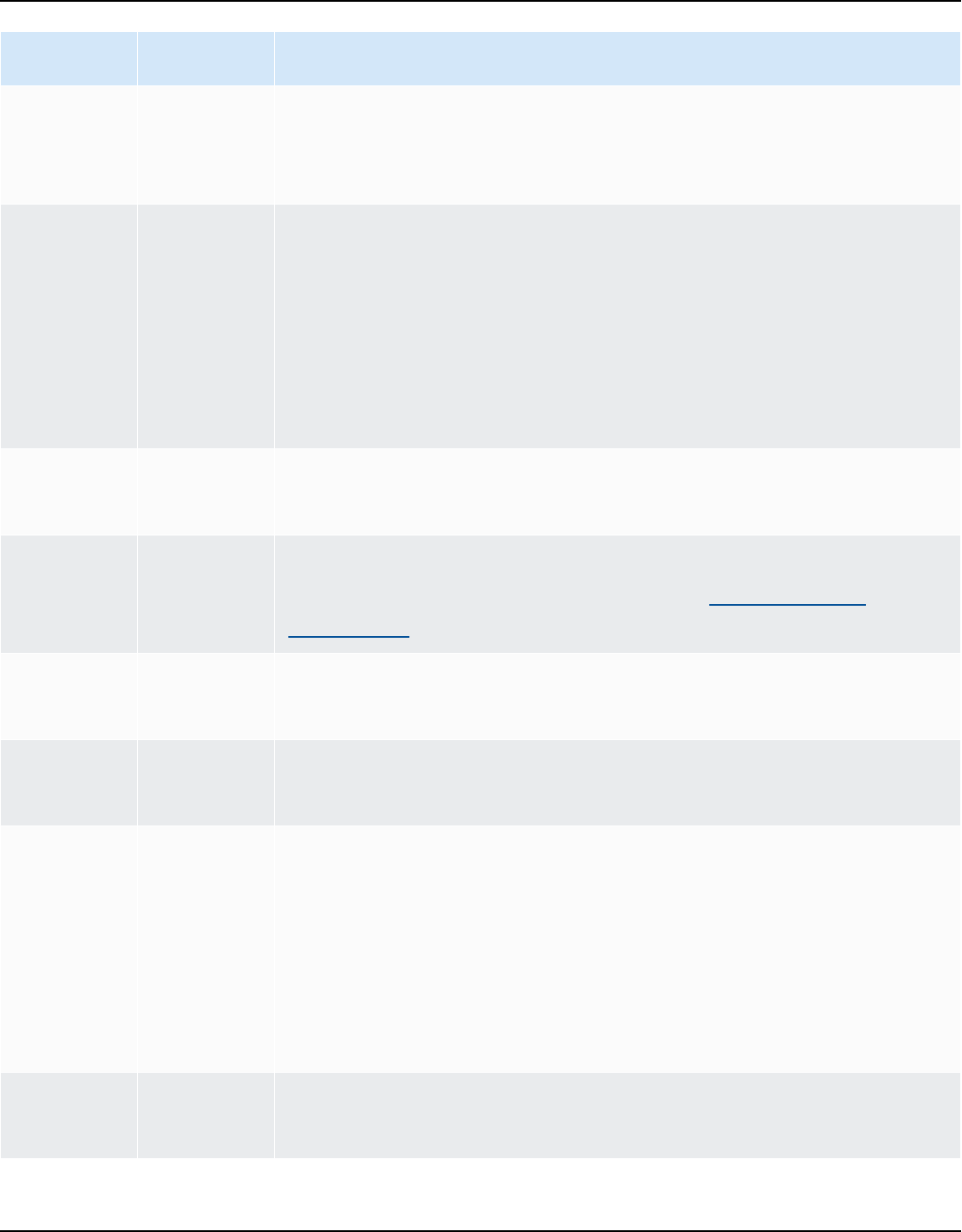

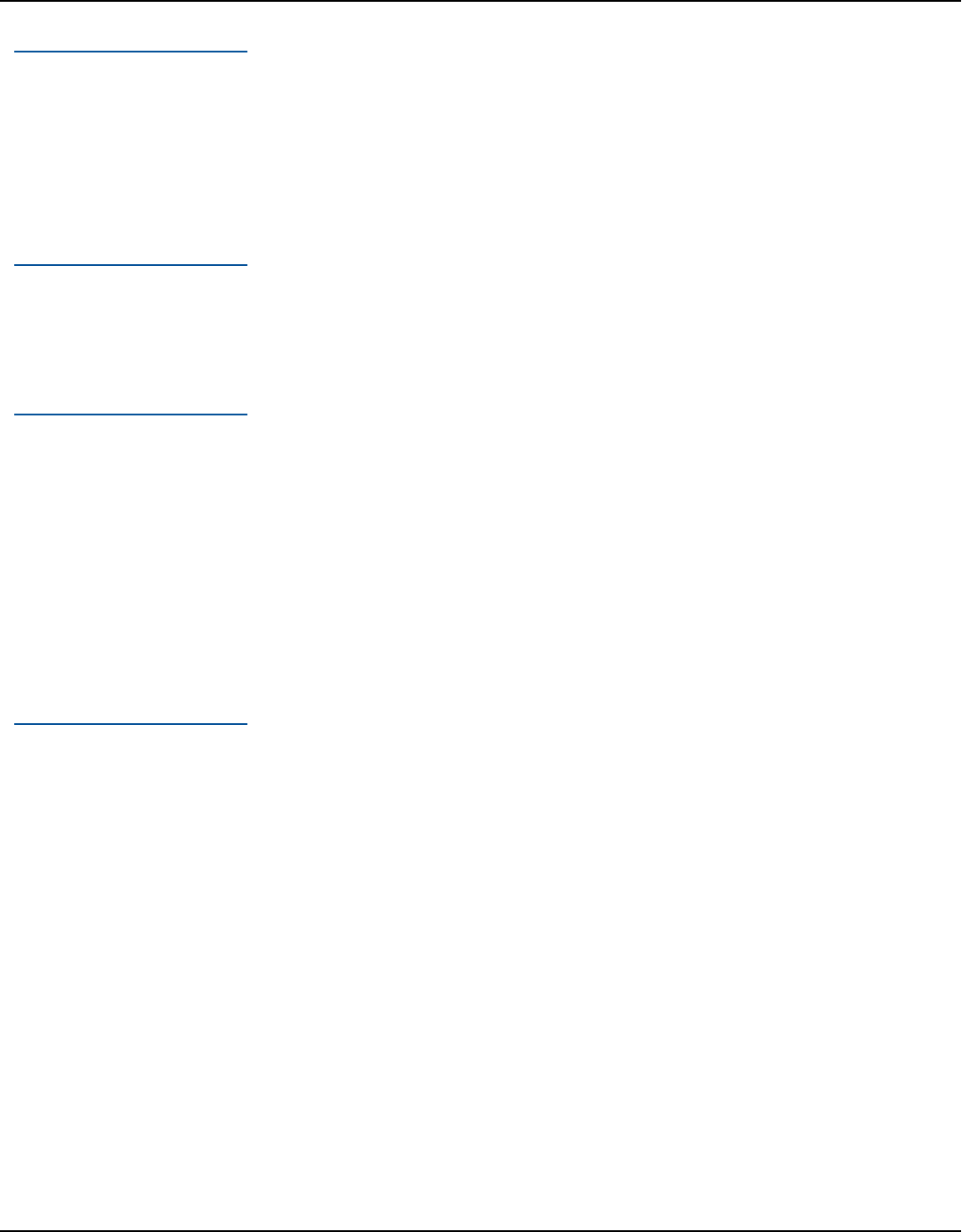

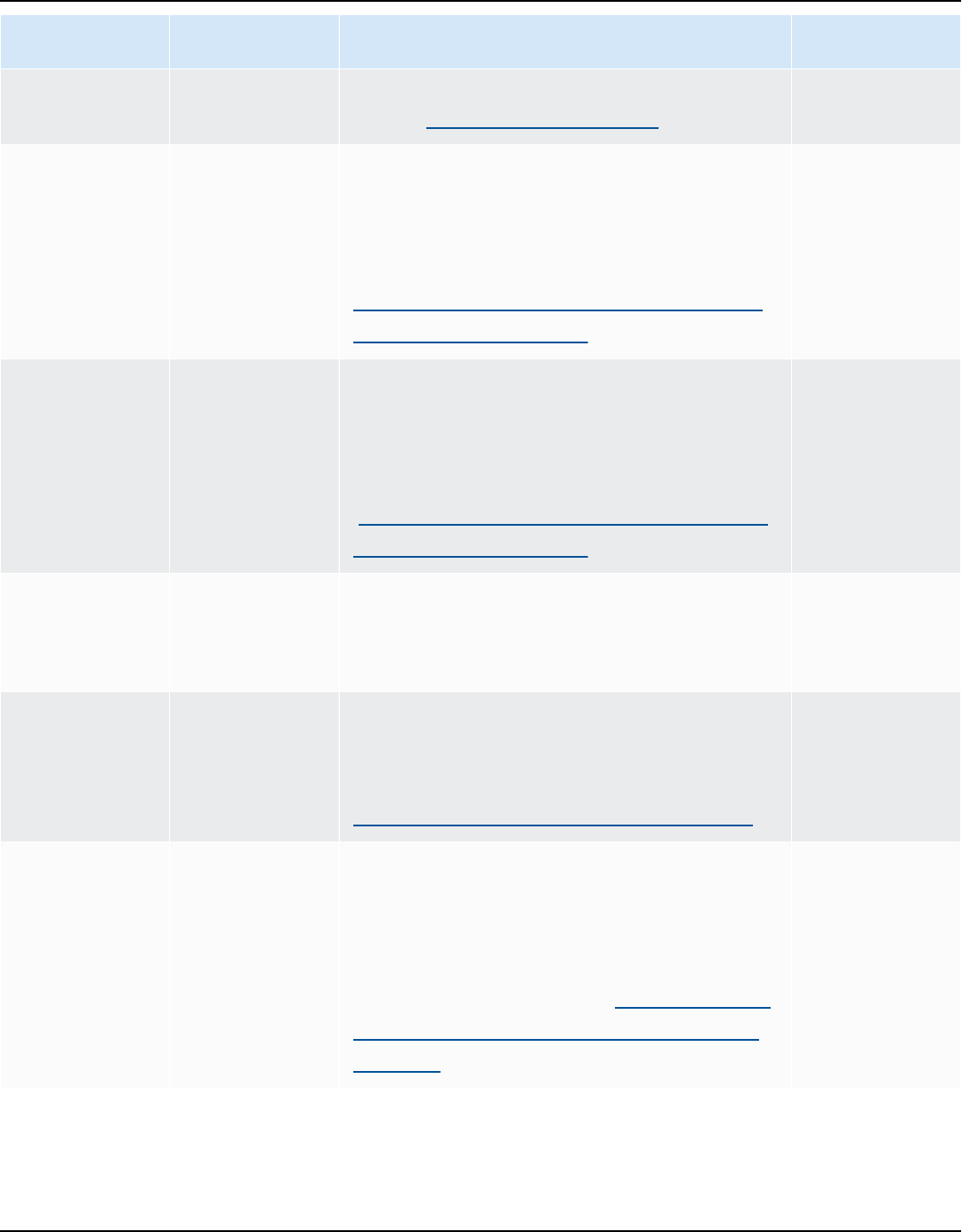

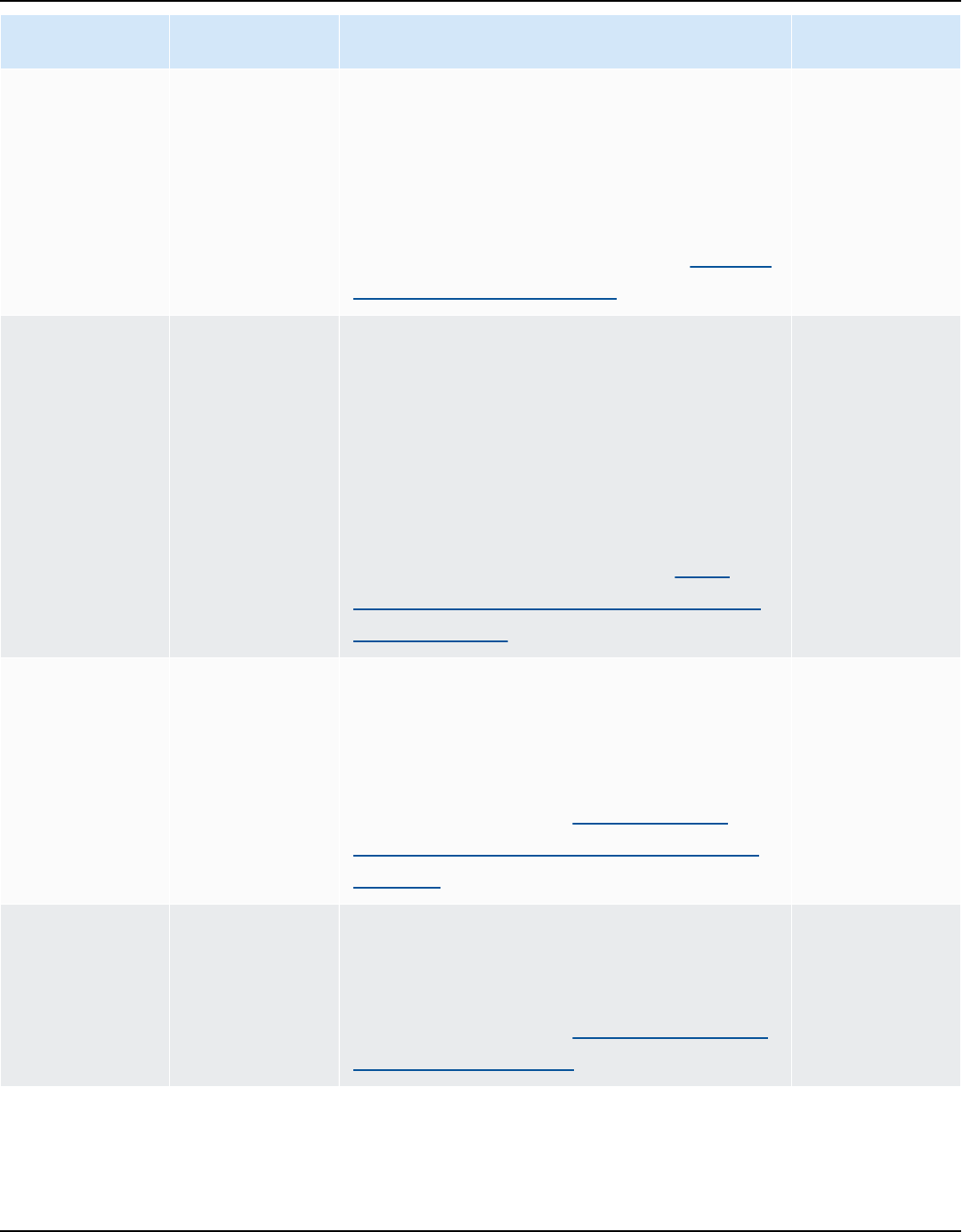

AWS SCT supports the following OLTP conversions.

Source database Target database

IBM Db2 for z/OS (version 12) Amazon Aurora MySQL-Compatible Edition

(Aurora MySQL), Amazon Aurora PostgreSQ

L-Compatible Edition (Aurora PostgreSQL),

MySQL, PostgreSQL

For more information, see Connecting to IBM

DB2 for z/OS.

IBM Db2 LUW (versions 9.1, 9.5, 9.7, 10.5,

11.1, and 11.5)

Aurora MySQL, Aurora PostgreSQL, MariaDB,

MySQL, PostgreSQL

For more information, see IBM Db2 LUW

databases.

Microsoft Azure SQL Database Aurora MySQL, Aurora PostgreSQL, MySQL,

PostgreSQL

For more information, see Connecting to

Azure SQL.

Version 1.0.672 1

AWS Schema Conversion Tool User Guide

Source database Target database

Microsoft SQL Server (version 2008 R2, 2012,

2014, 2016, 2017, 2019, and 2022)

Aurora MySQL, Aurora PostgreSQL, Babelfish

for Aurora PostgreSQL (only for assessmen

t reports), MariaDB, Microsoft SQL Server,

MySQL, PostgreSQL

For more information, see SQL Server

databases.

MySQL (version 5.5 and higher) Aurora PostgreSQL, MySQL, PostgreSQL

For more information, see Using MySQL as a

source.

You can migrate schema and data from

MySQL to an Aurora MySQL DB cluster

without using AWS SCT. For more information,

see Migrating data to an Amazon Aurora DB

cluster.

Oracle (version 10.1 and higher) Aurora MySQL, Aurora PostgreSQL, MariaDB,

MySQL, Oracle, PostgreSQL

For more information, see Oracle databases.

PostgreSQL (version 9.1 and higher) Aurora MySQL, Aurora PostgreSQL, MySQL,

PostgreSQL

For more information, see PostgreSQL

databases.

SAP ASE (versions 12.5.4, 15.0.2, 15.5, 15.7,

and 16.0)

Aurora MySQL, Aurora PostgreSQL, MariaDB,

MySQL, PostgreSQL

For more information, see SAP databases.

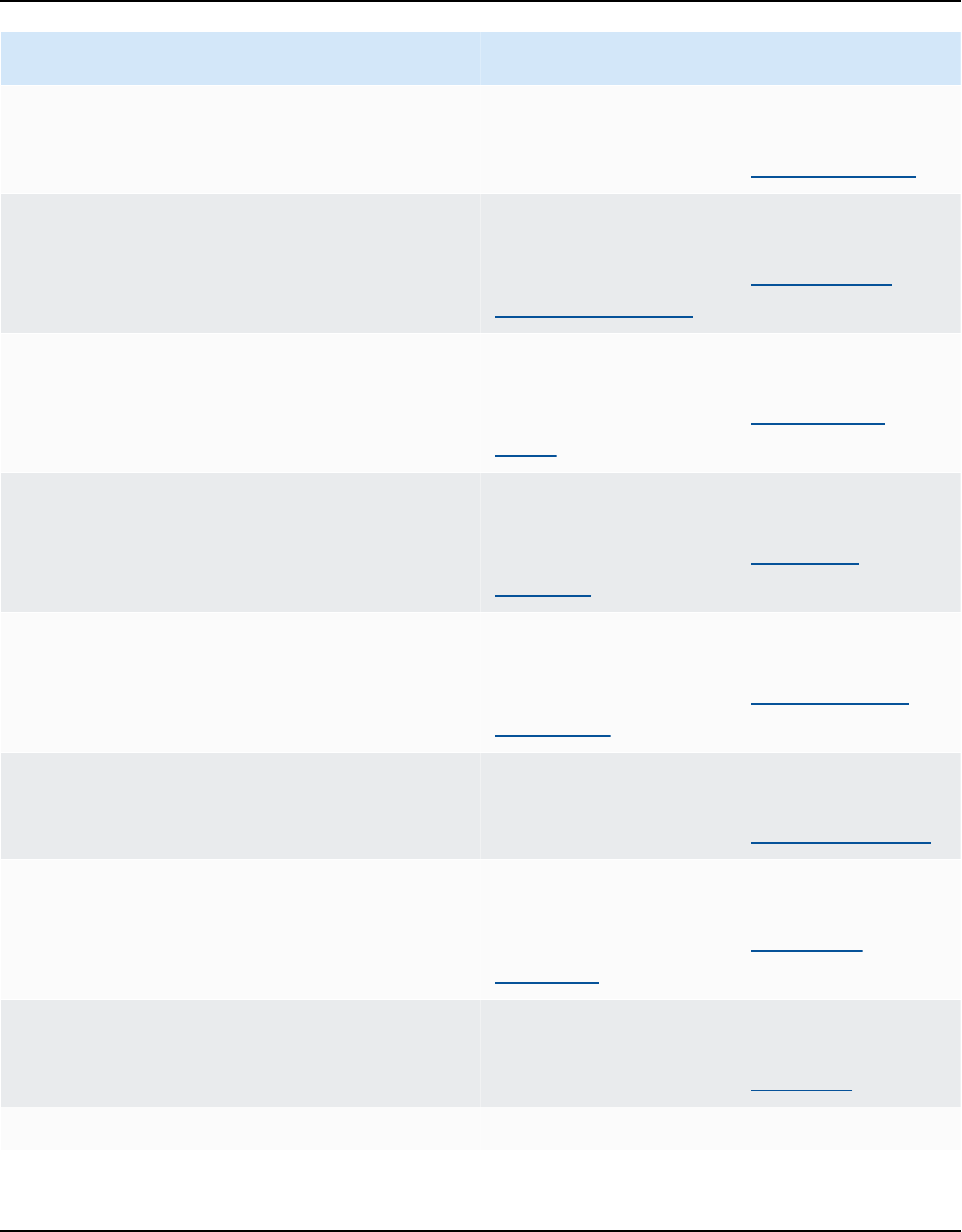

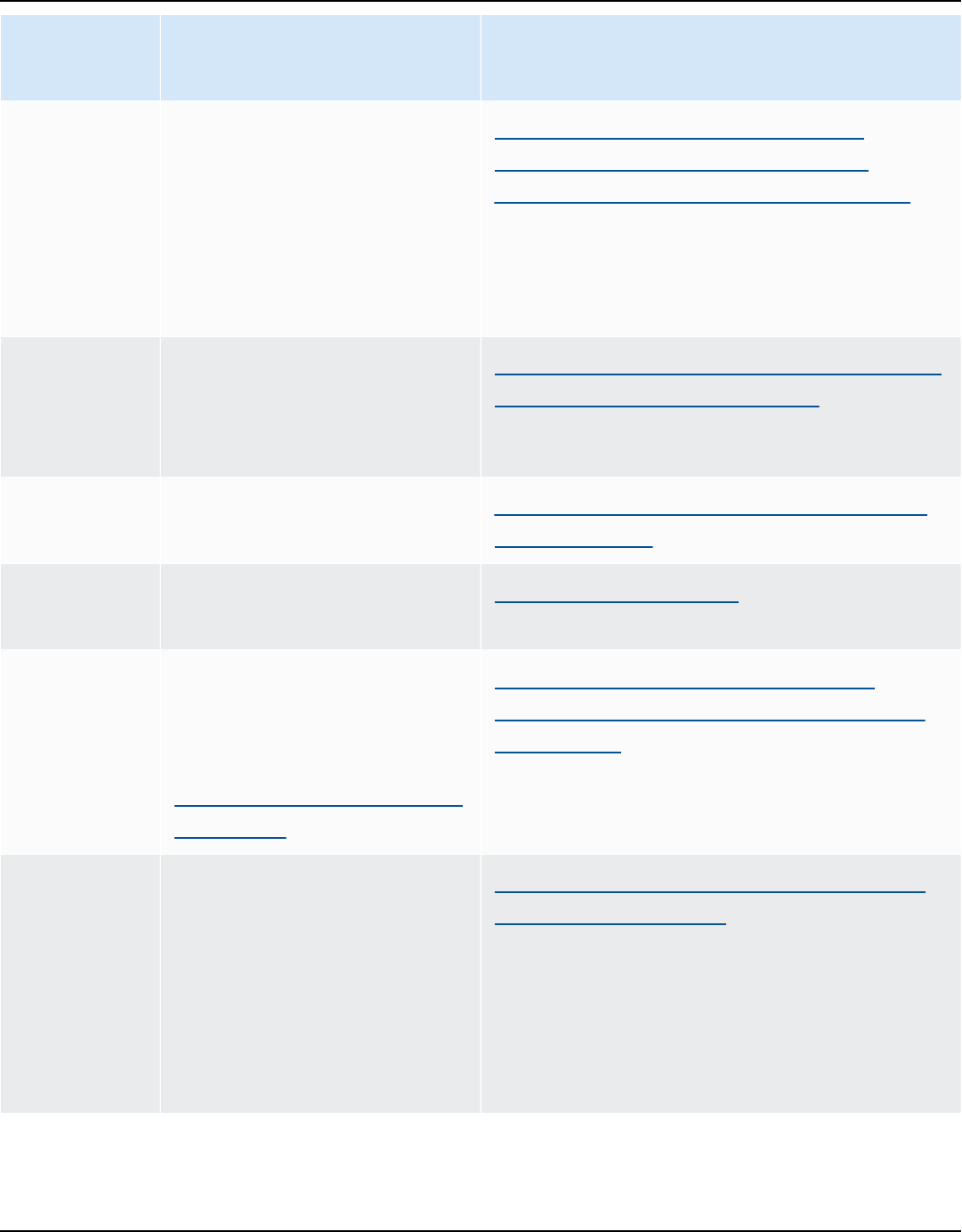

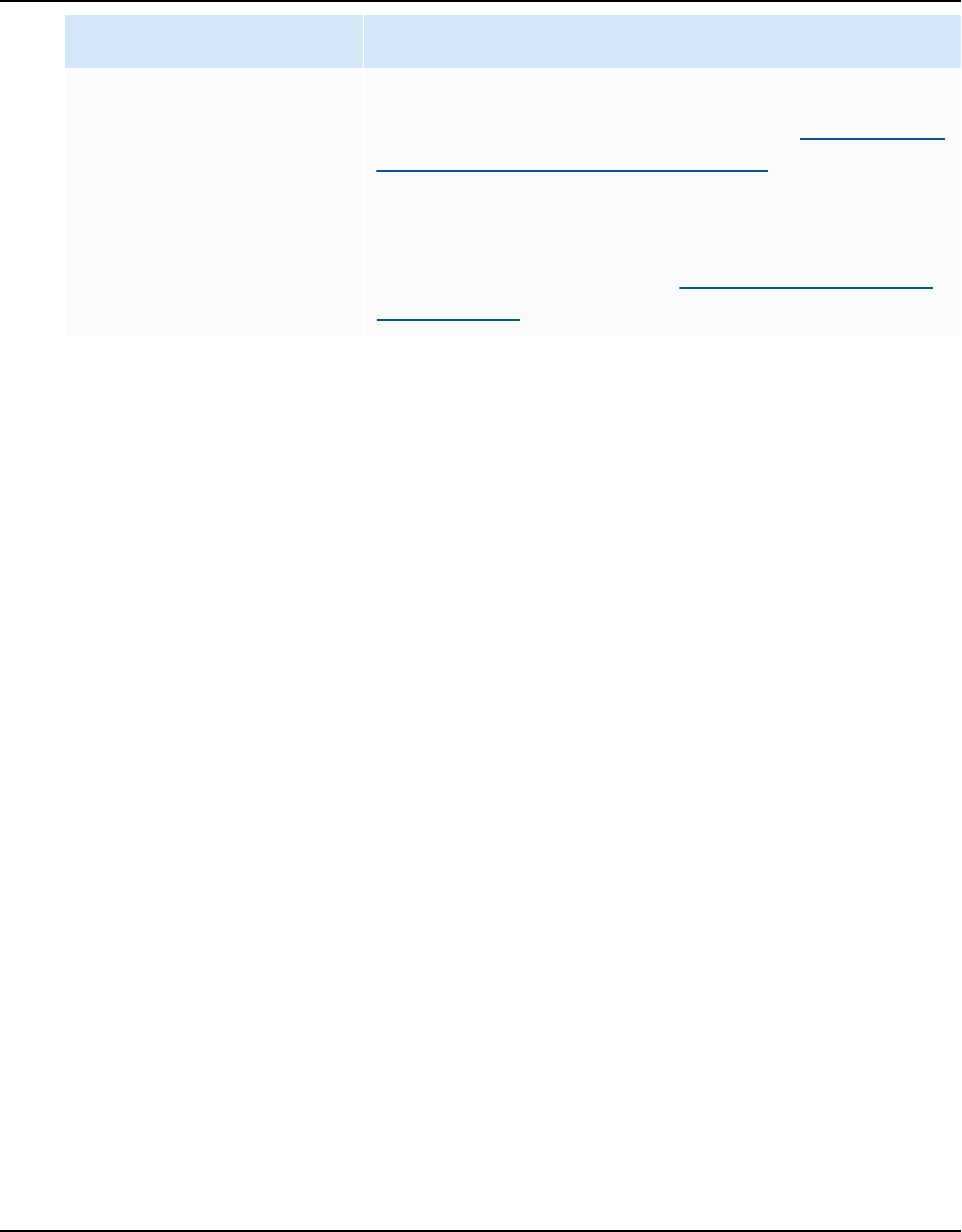

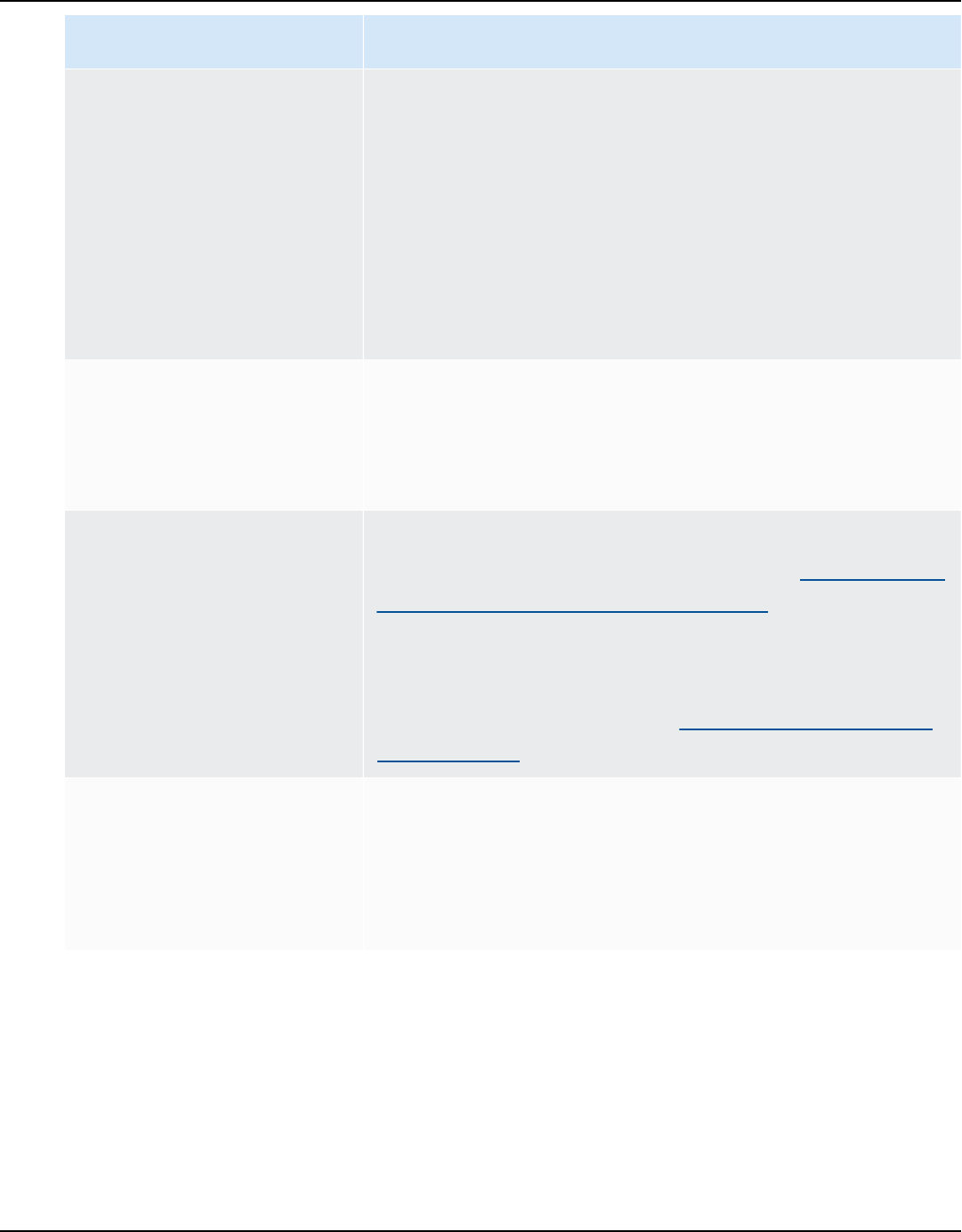

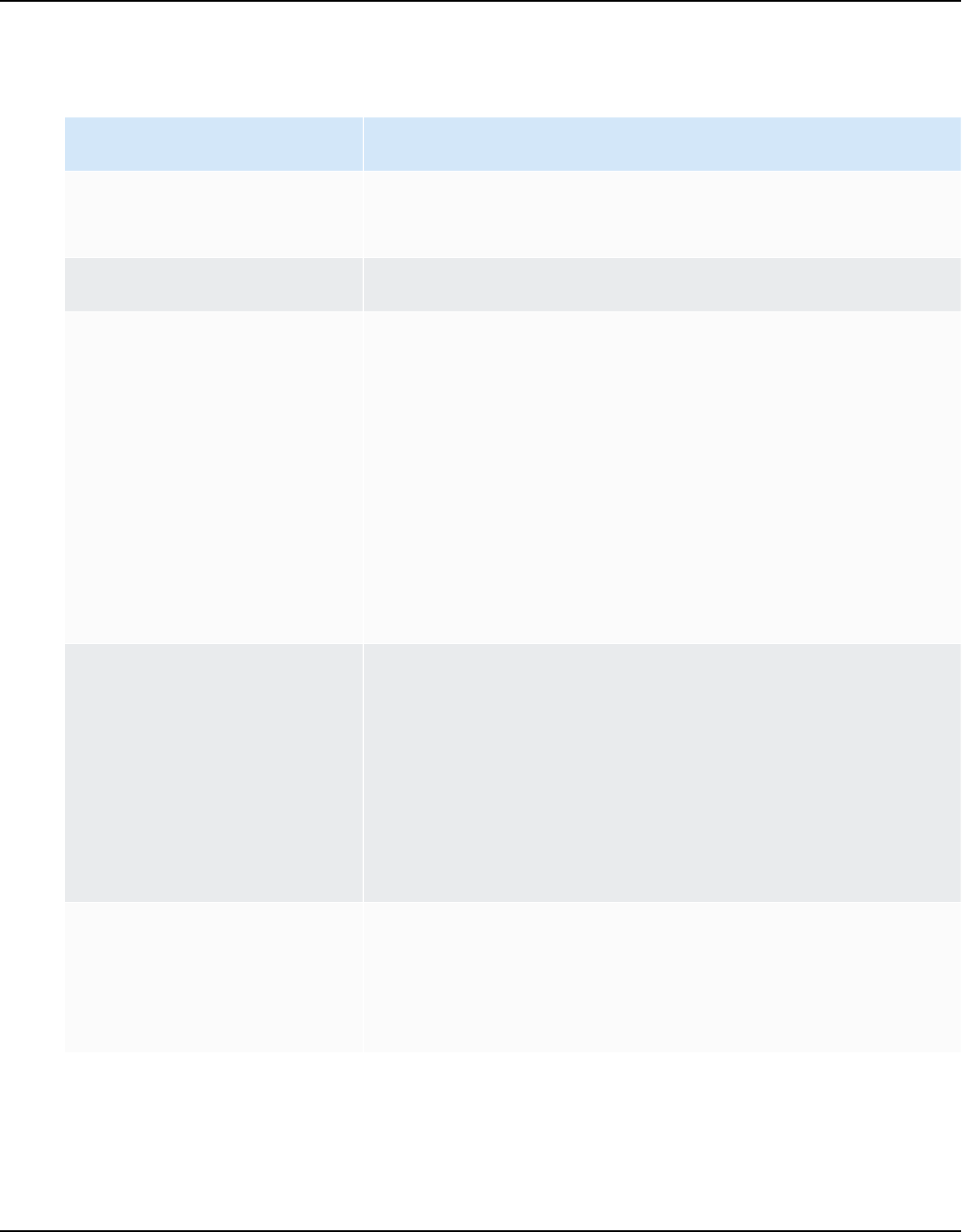

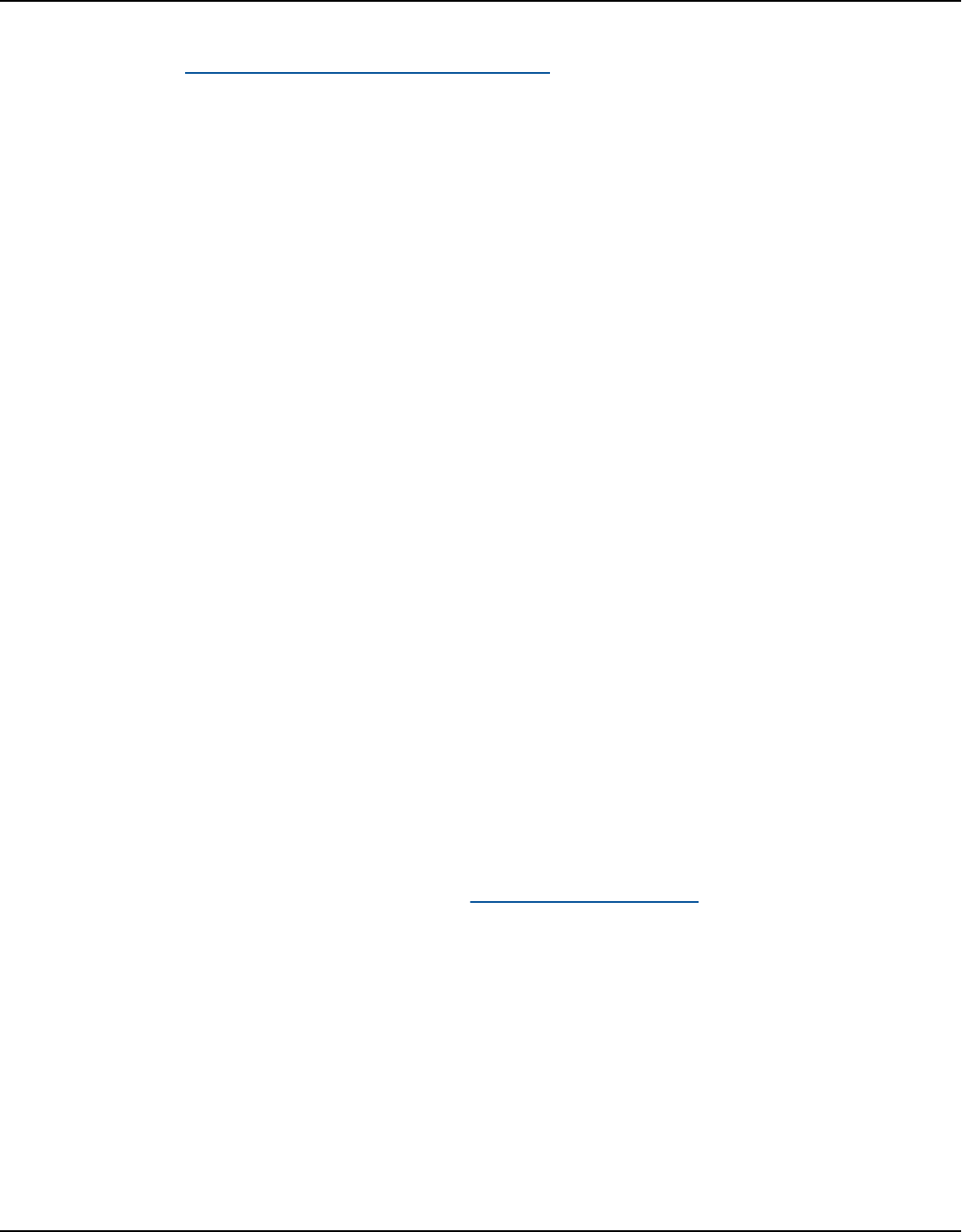

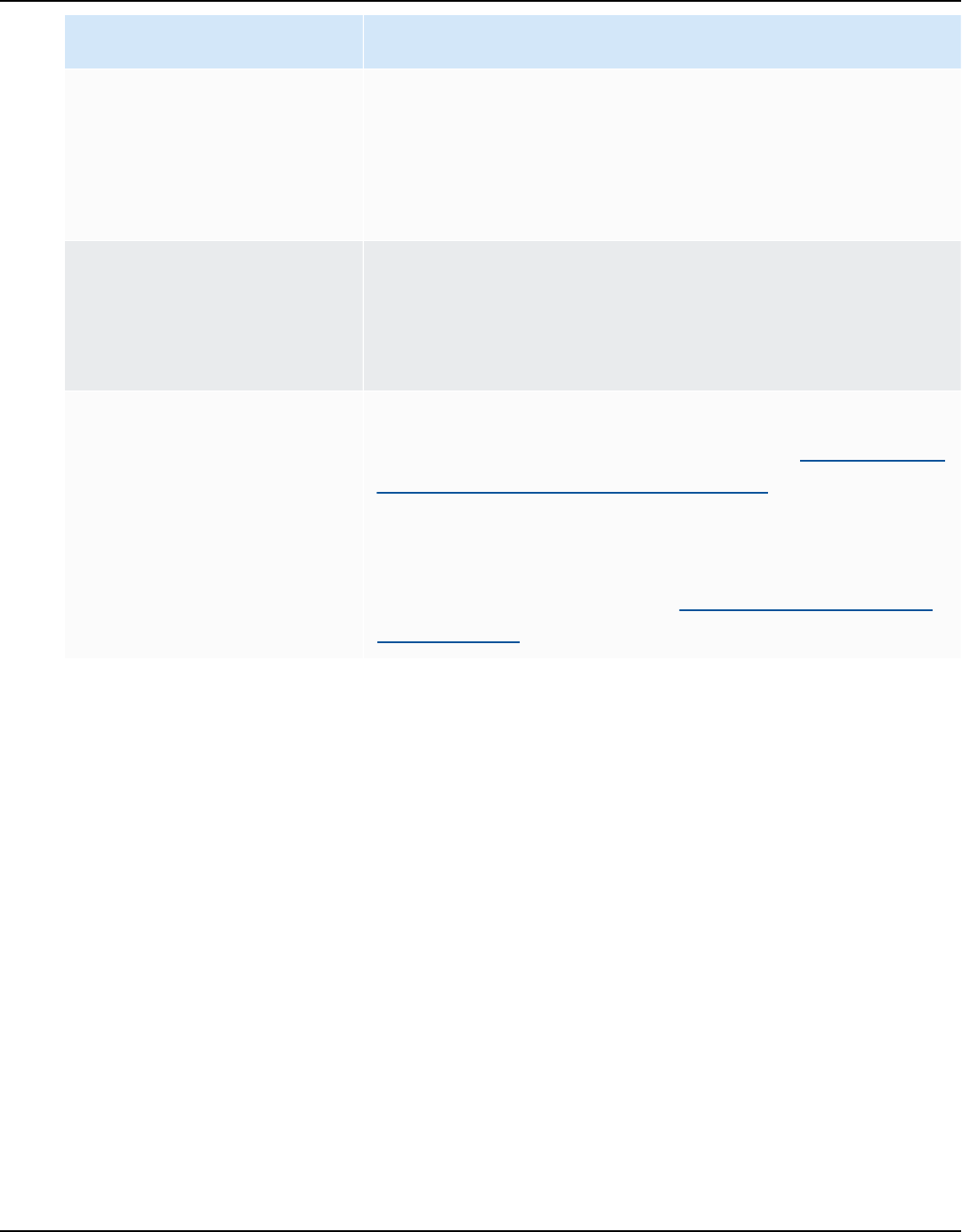

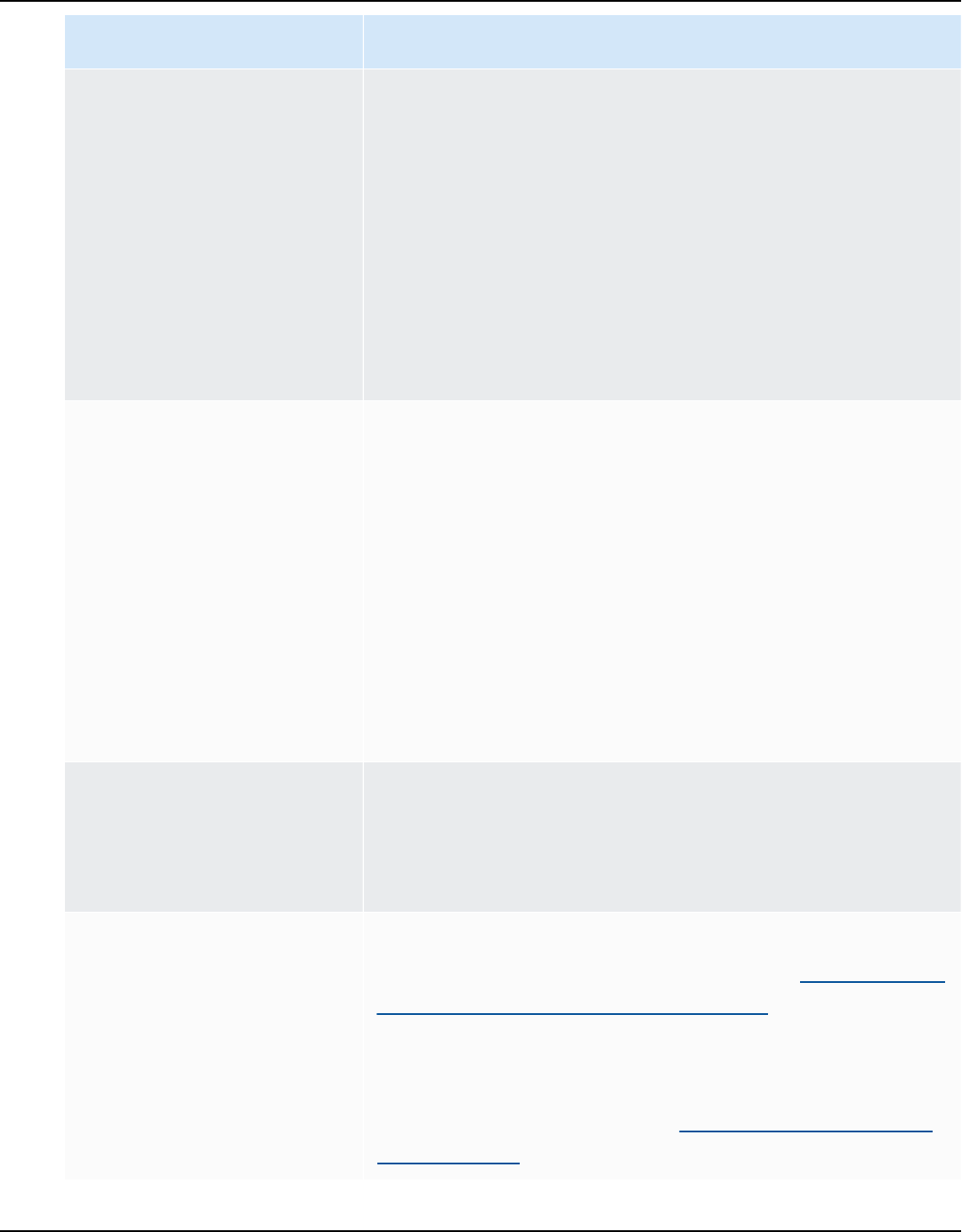

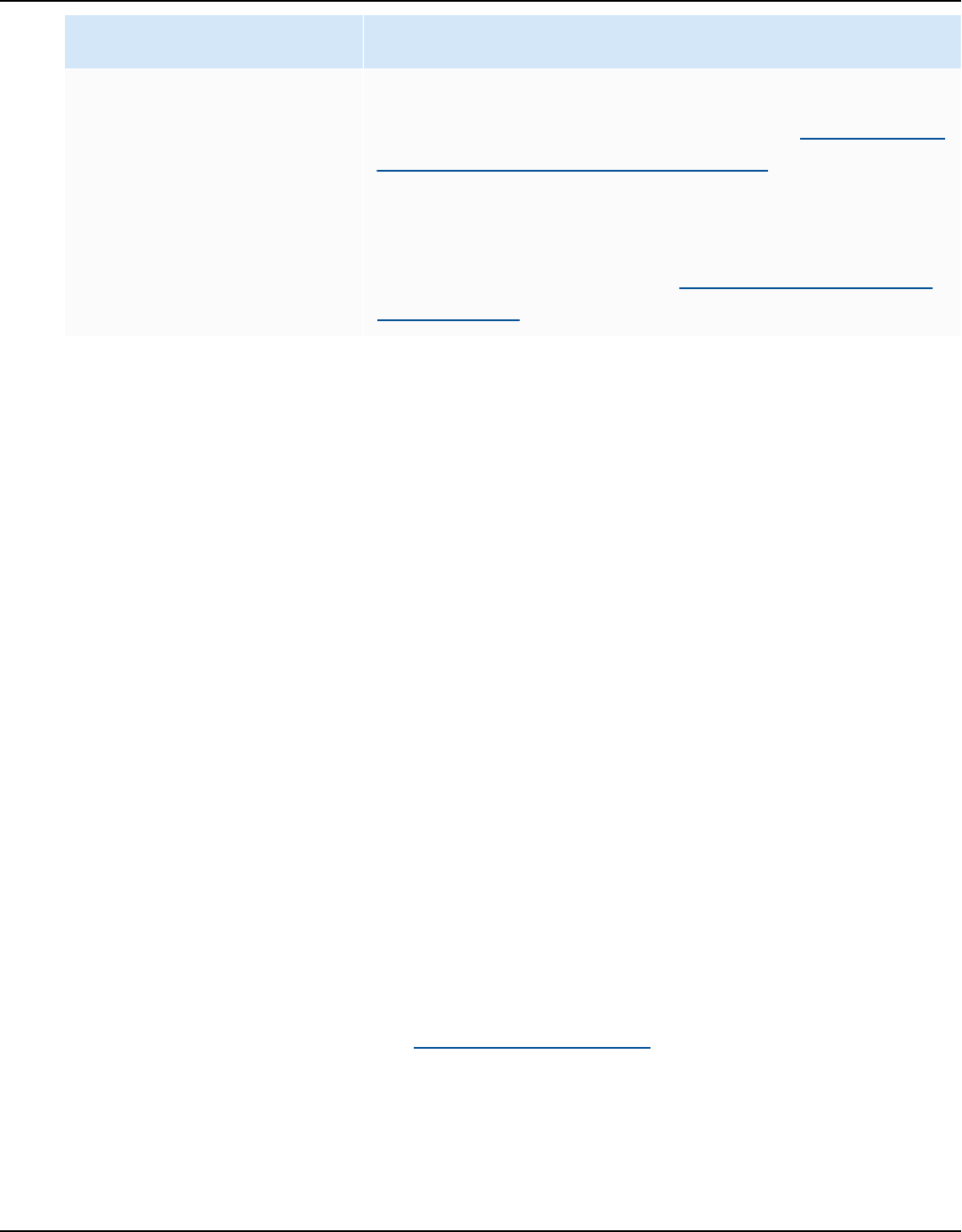

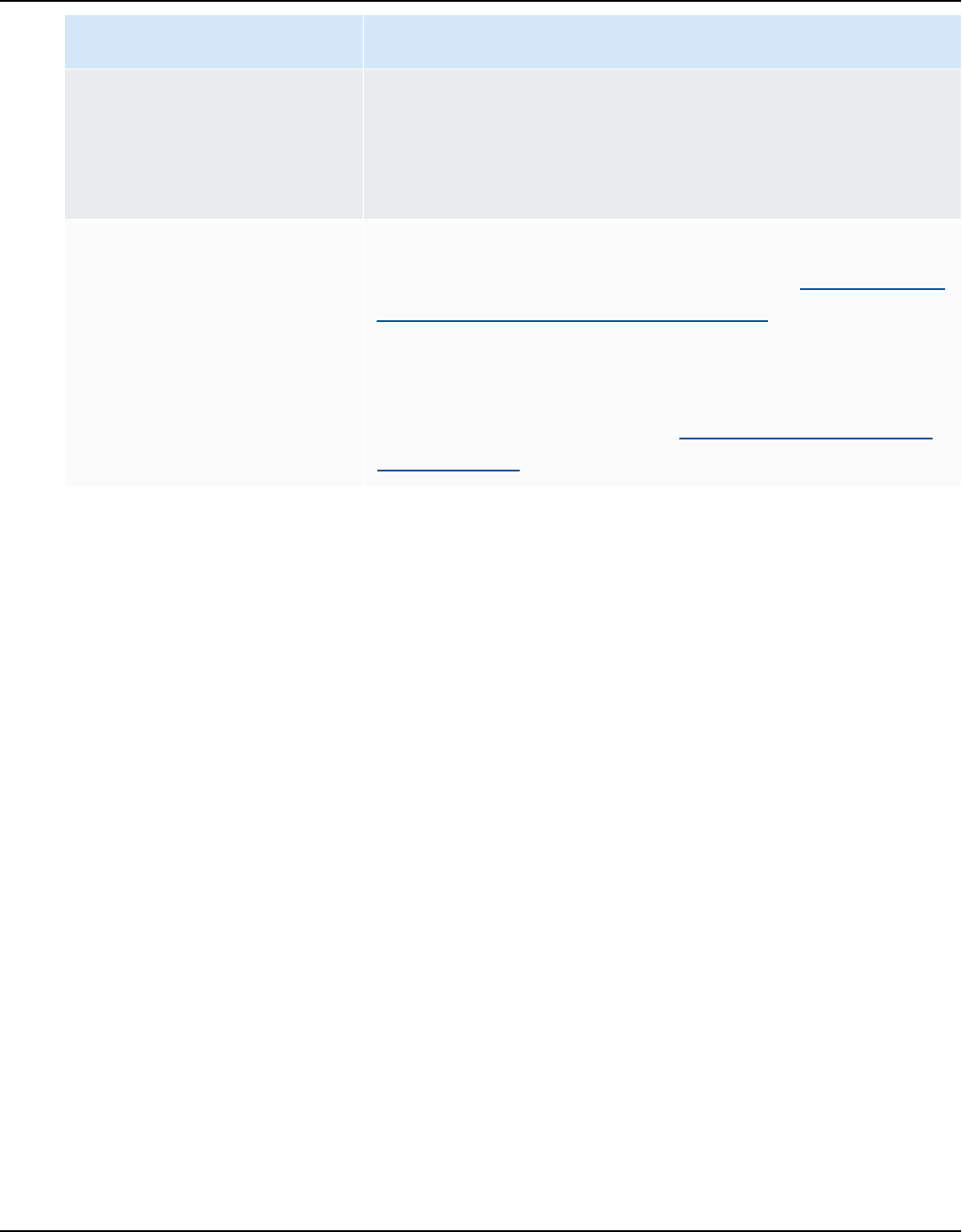

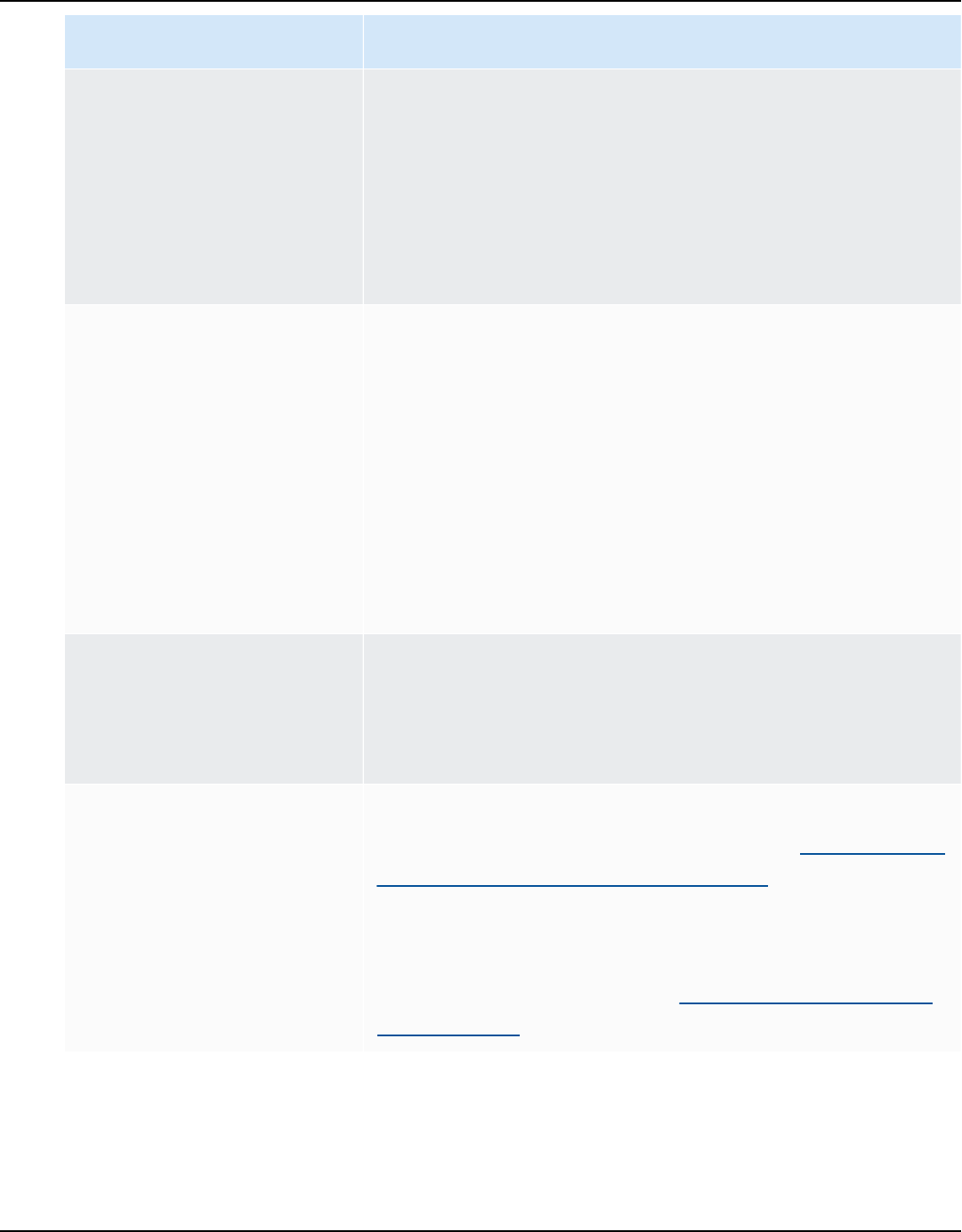

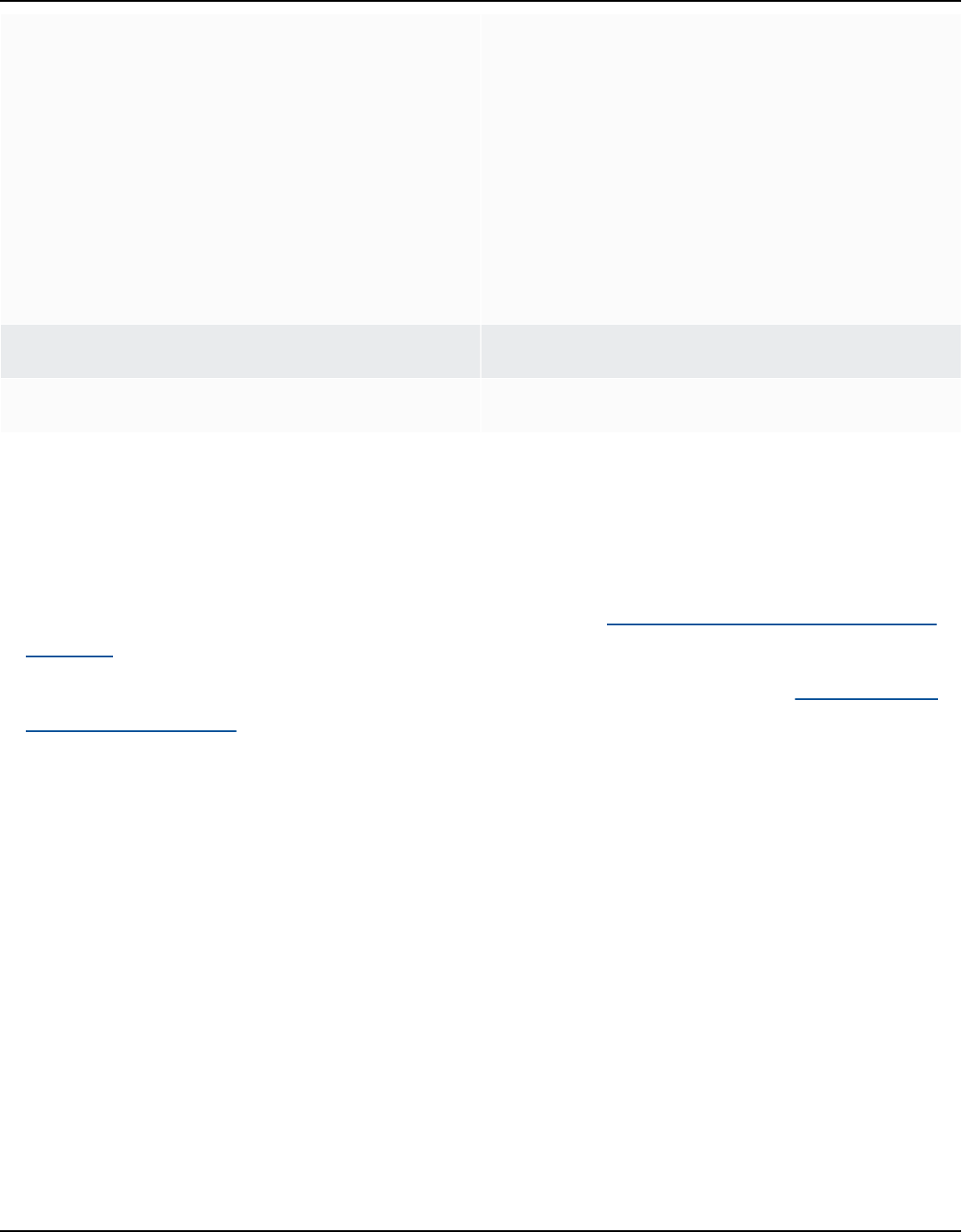

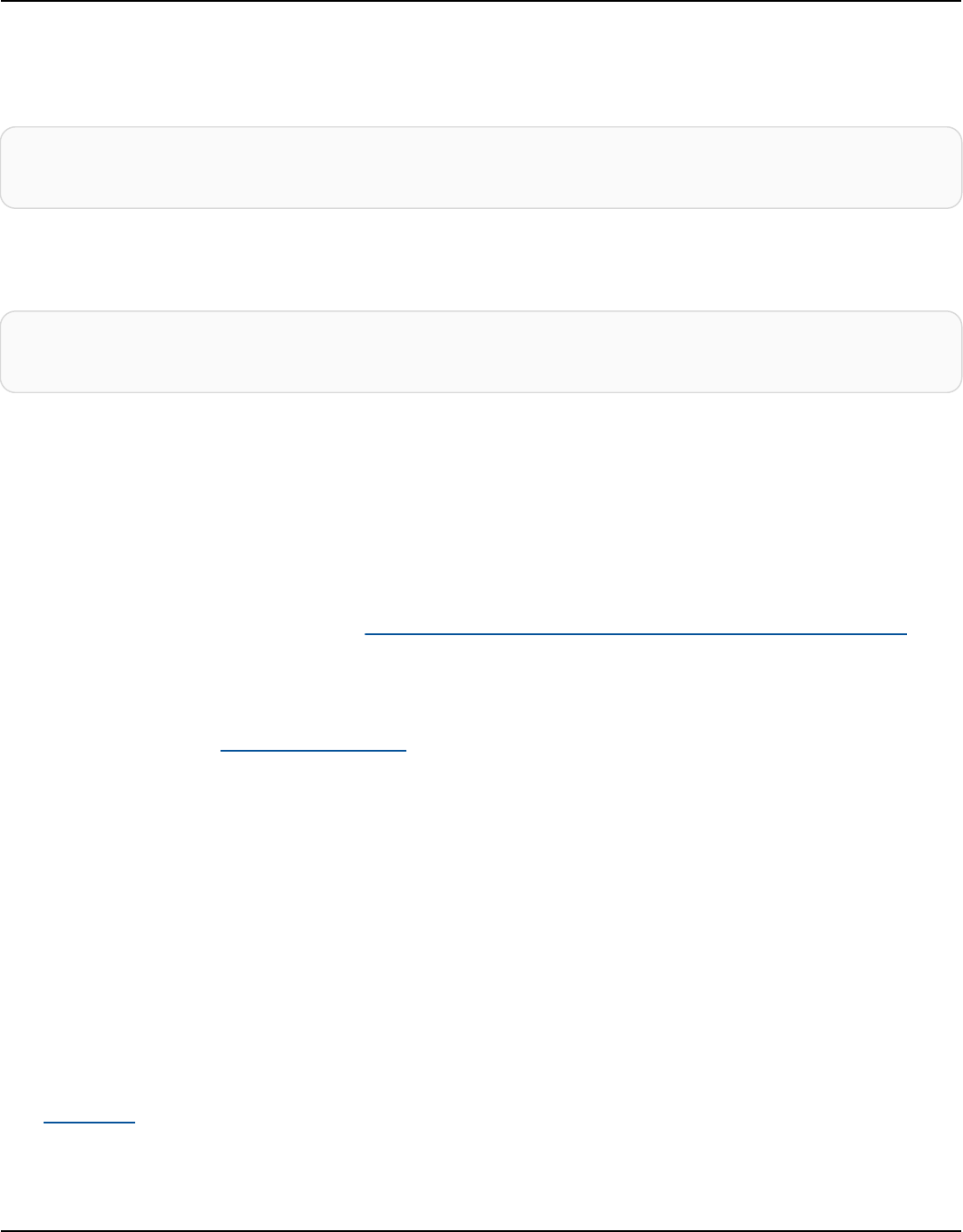

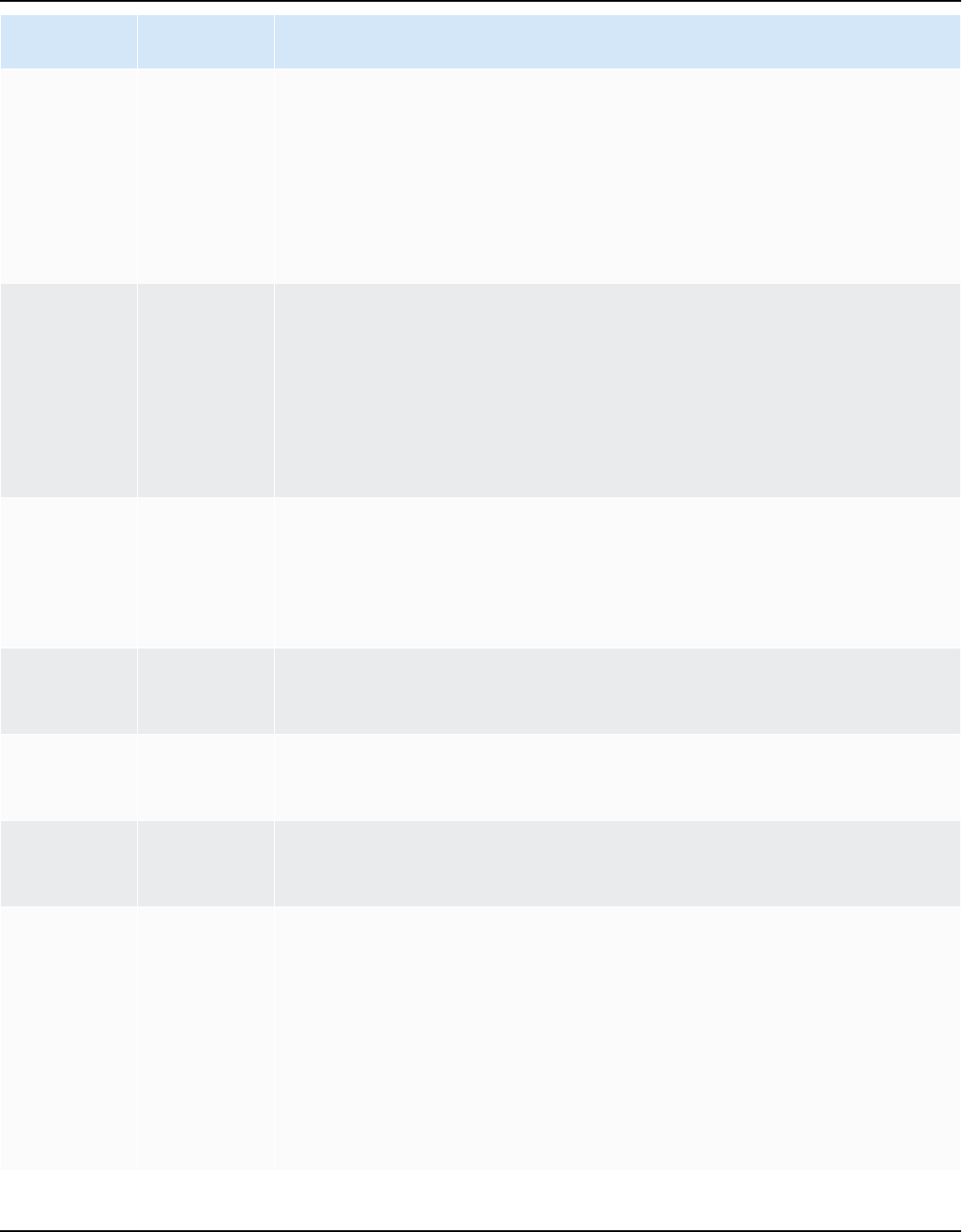

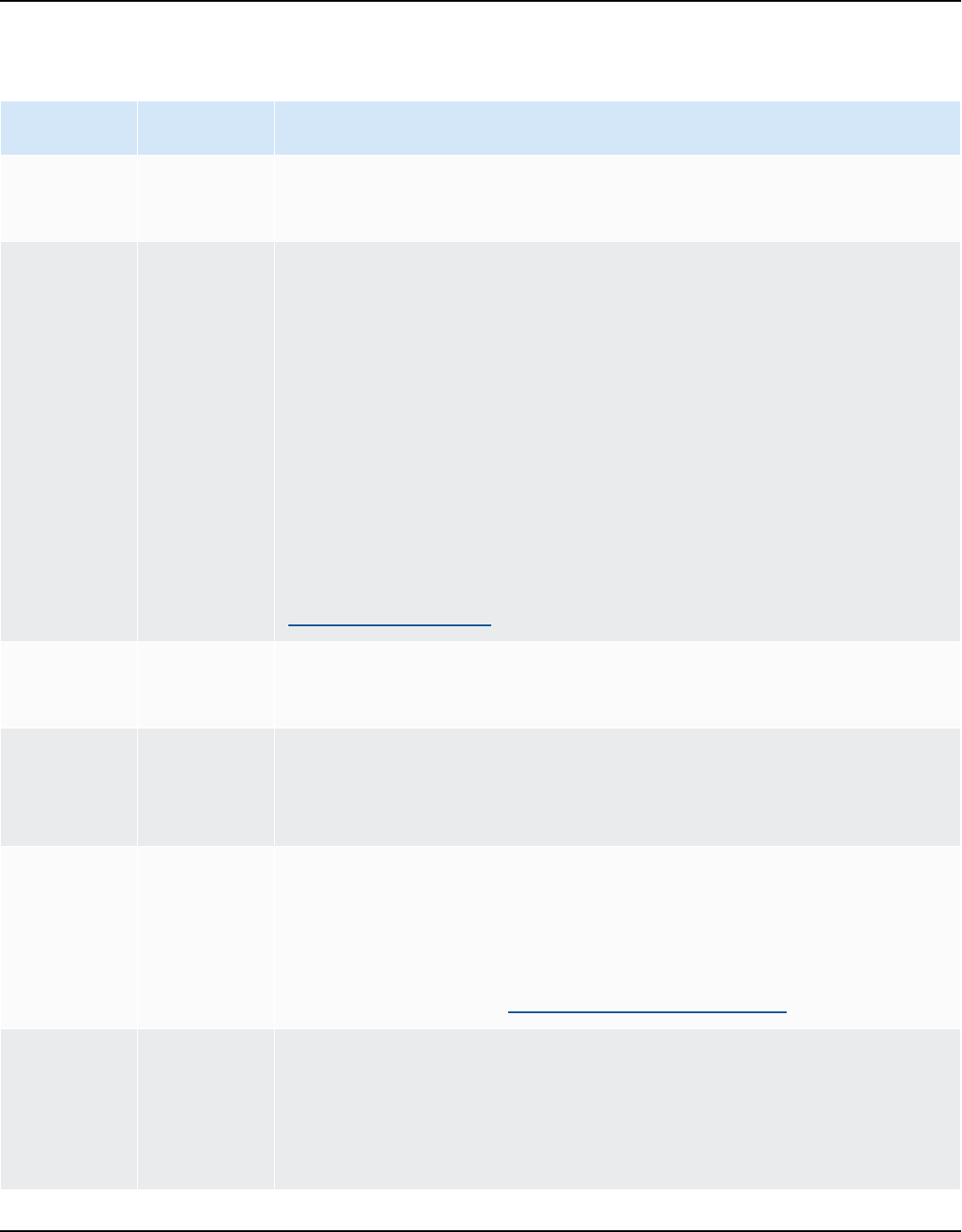

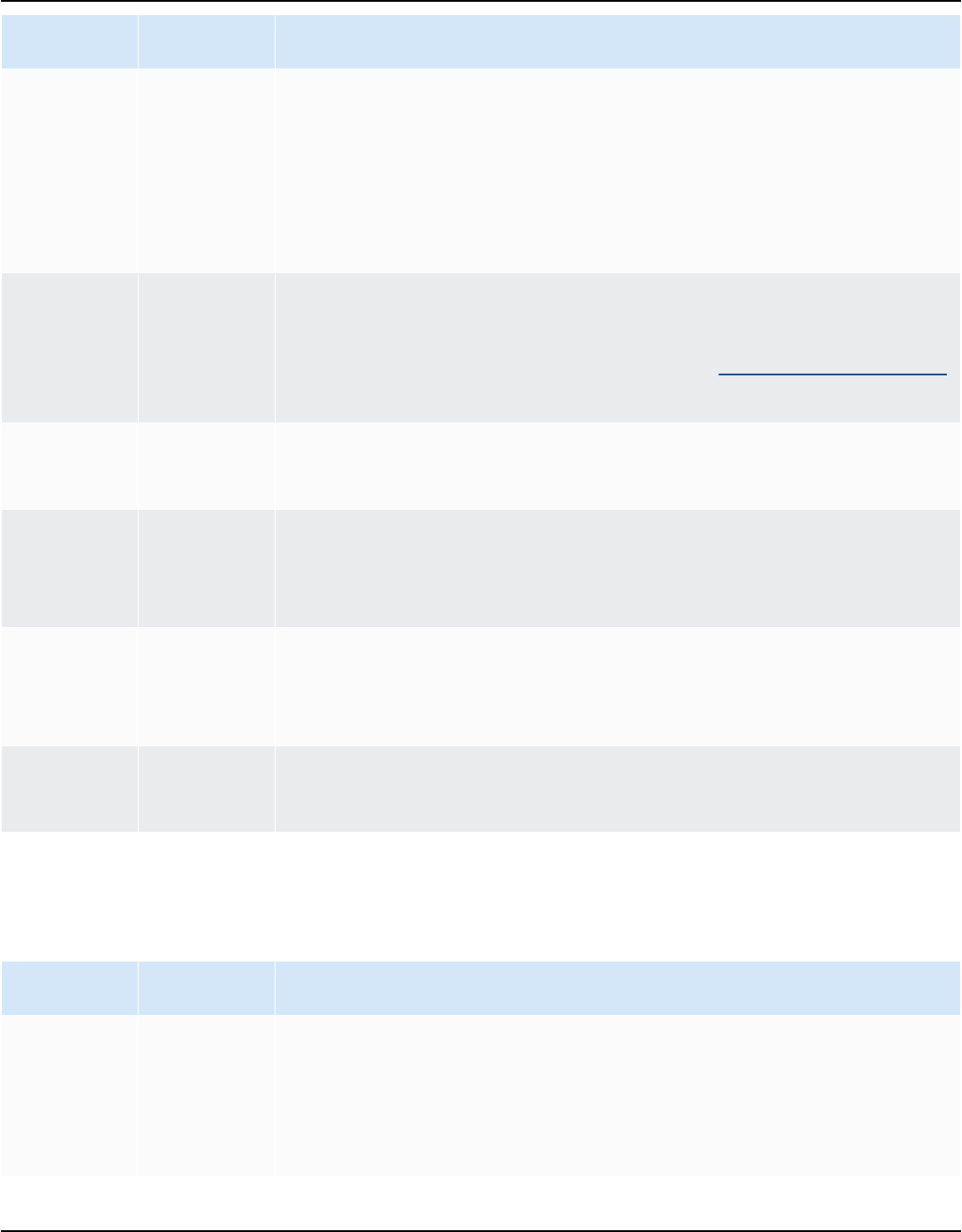

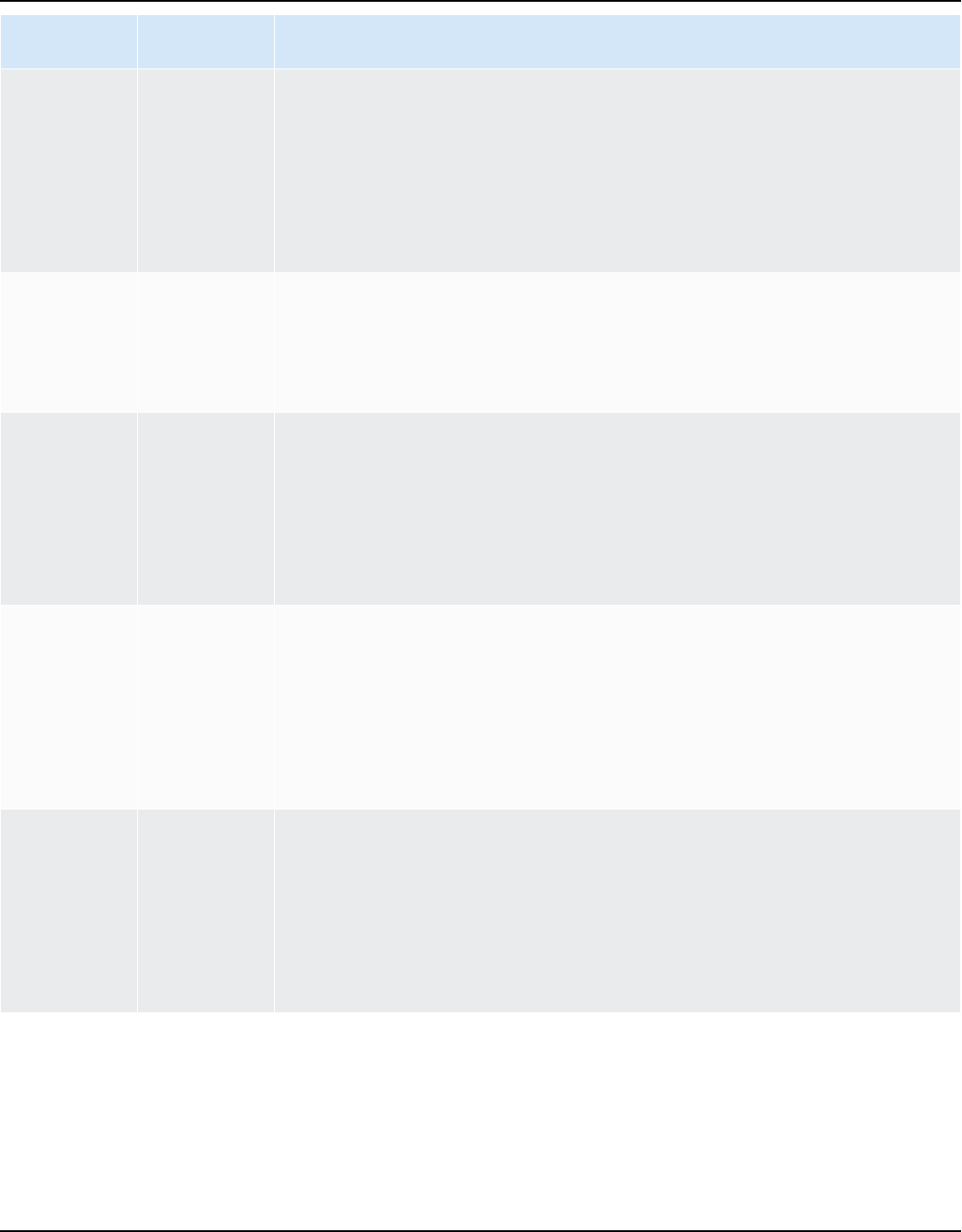

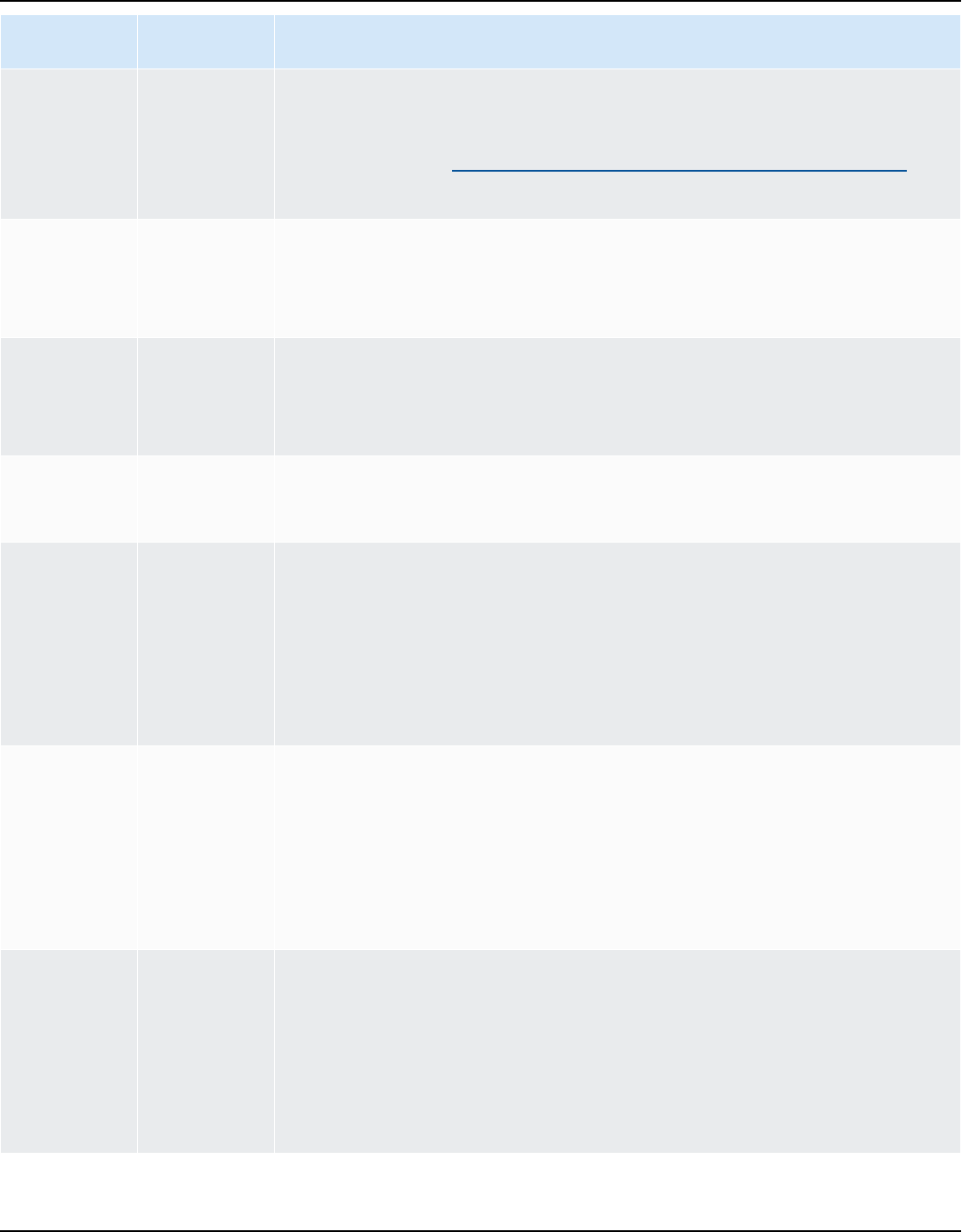

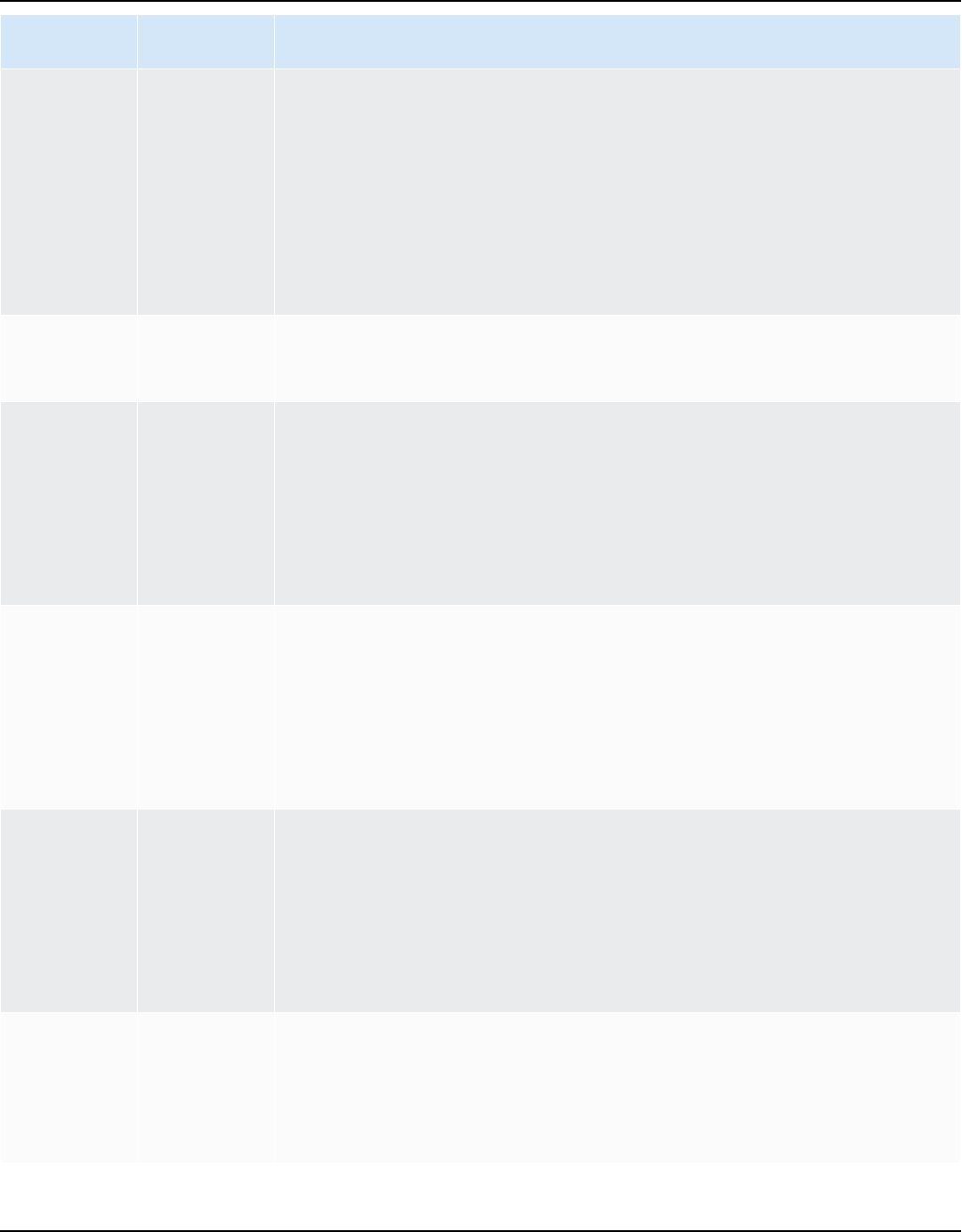

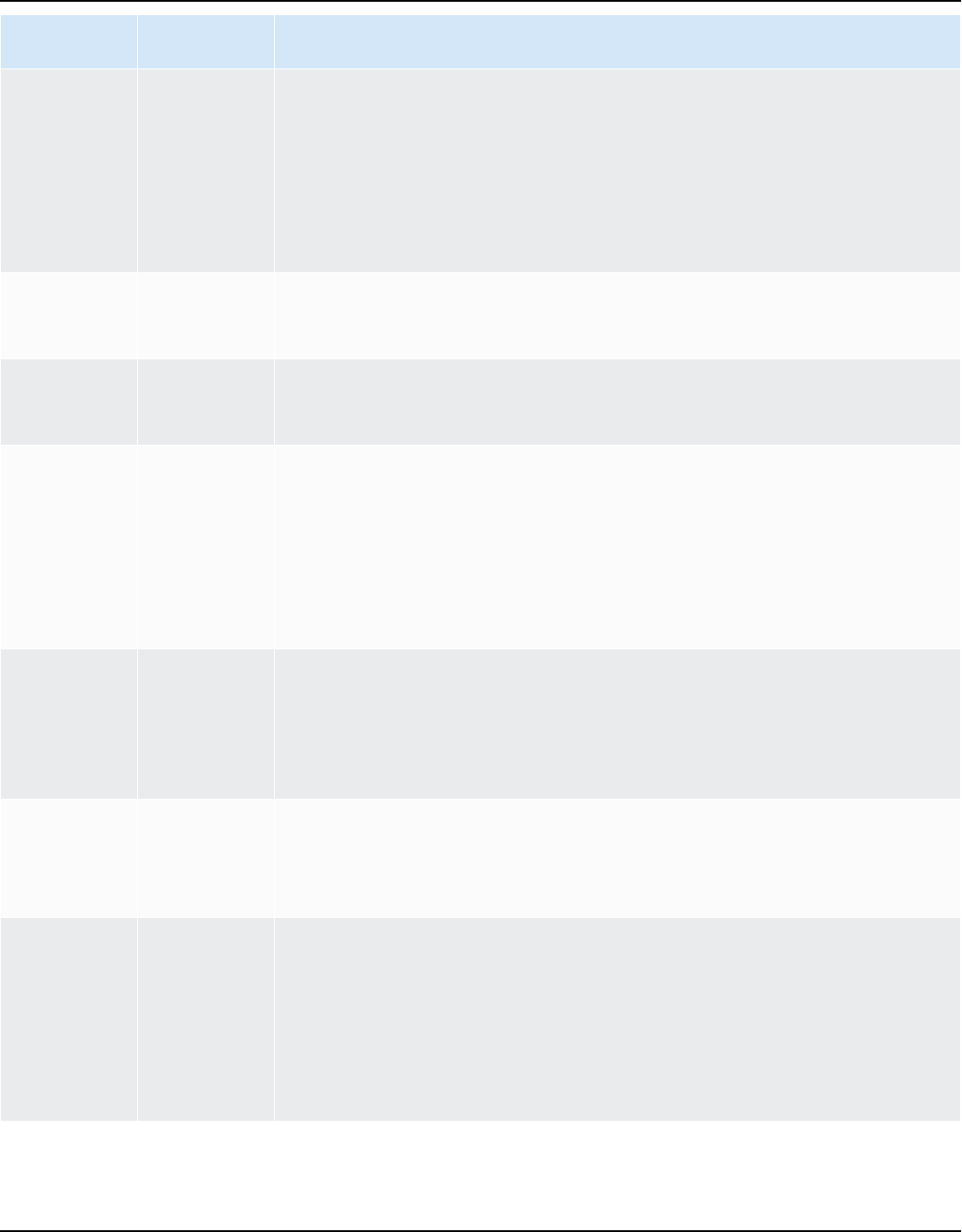

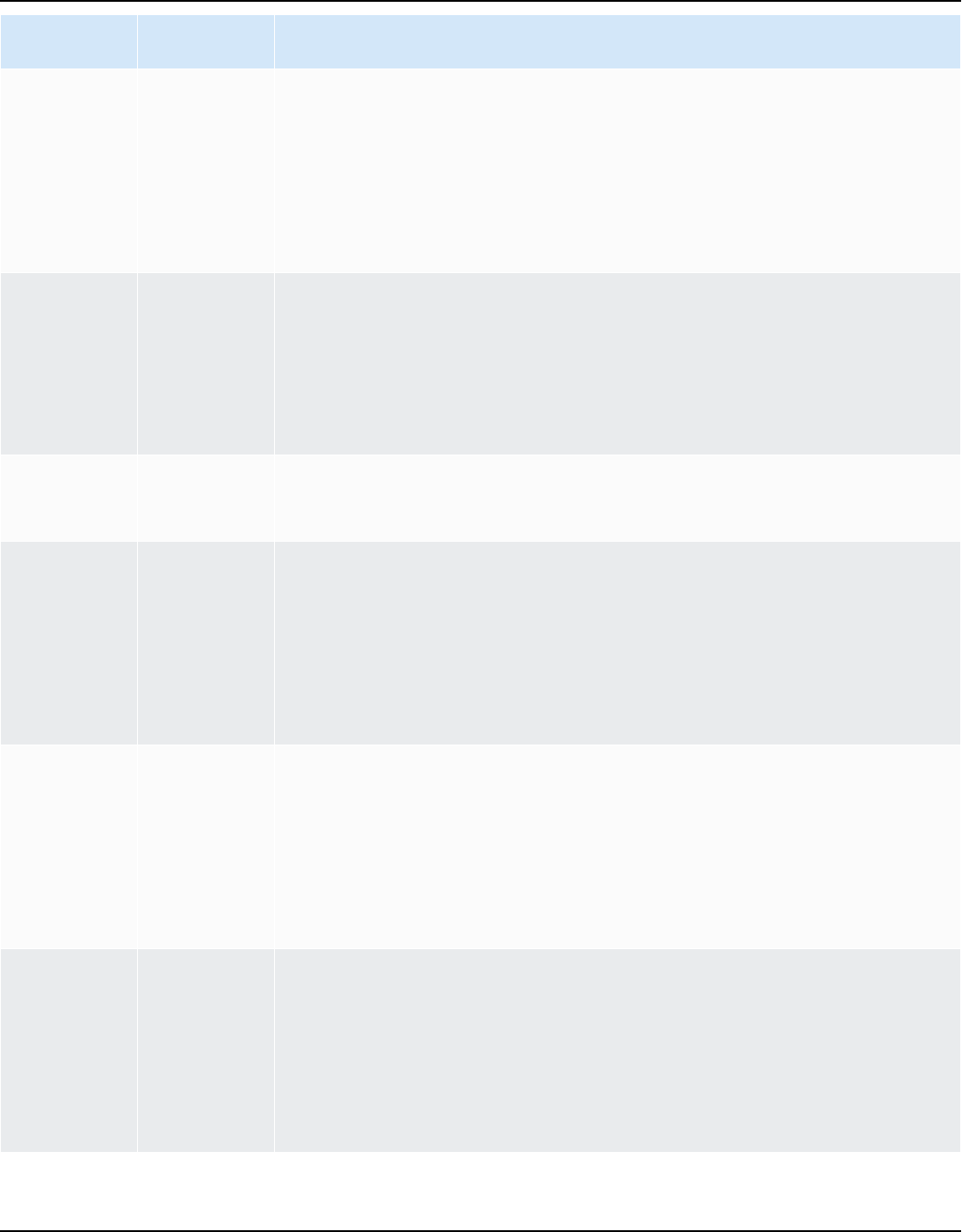

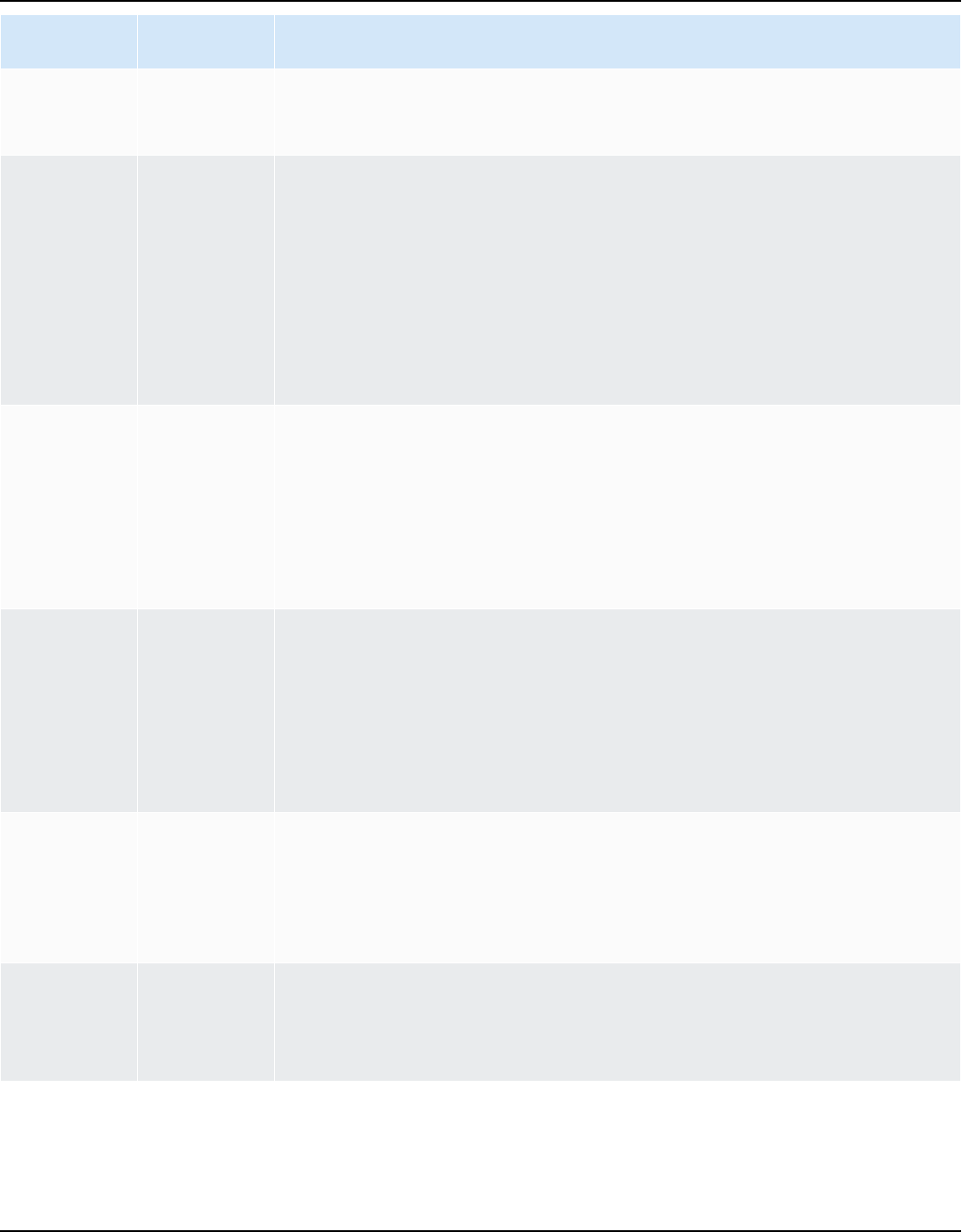

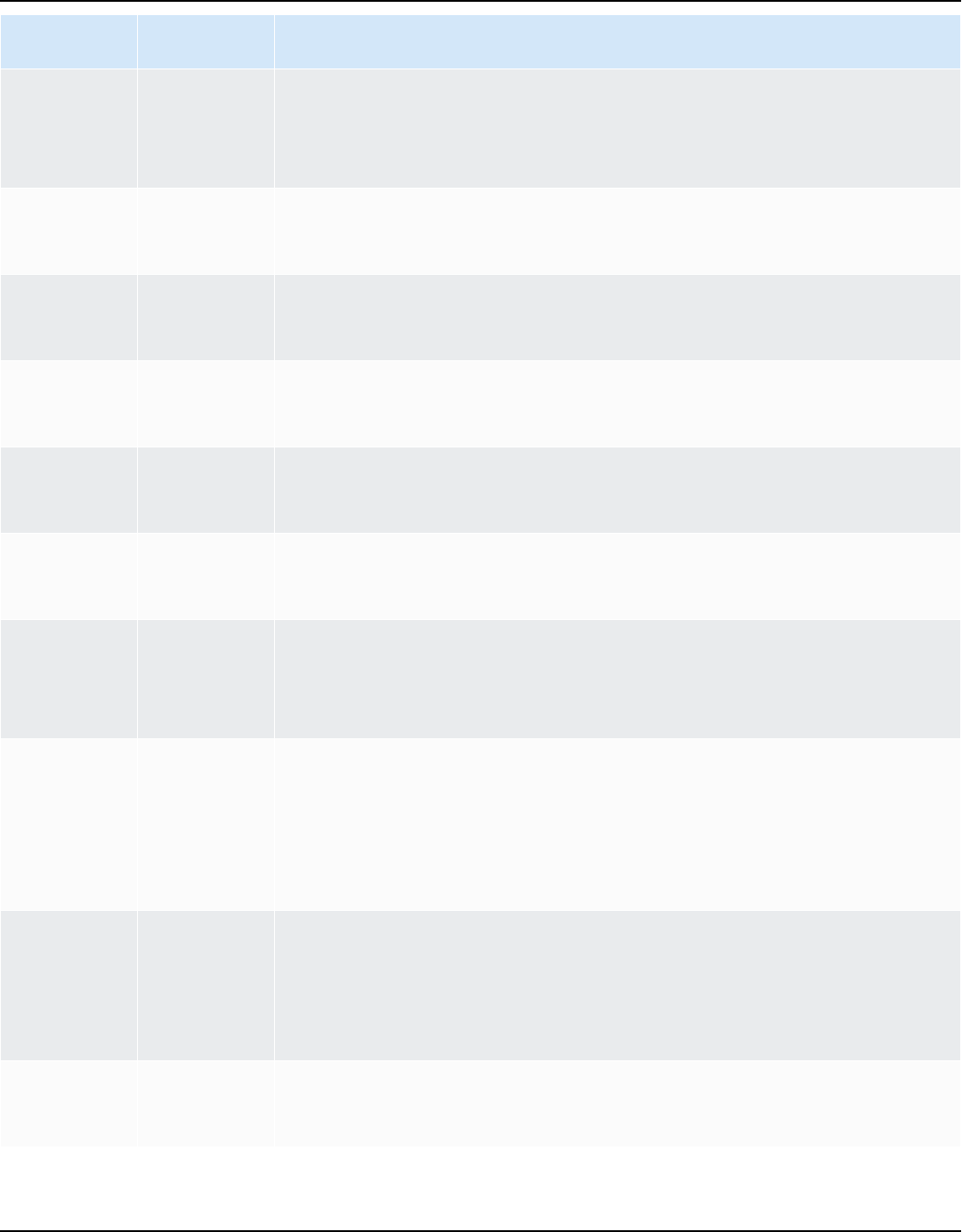

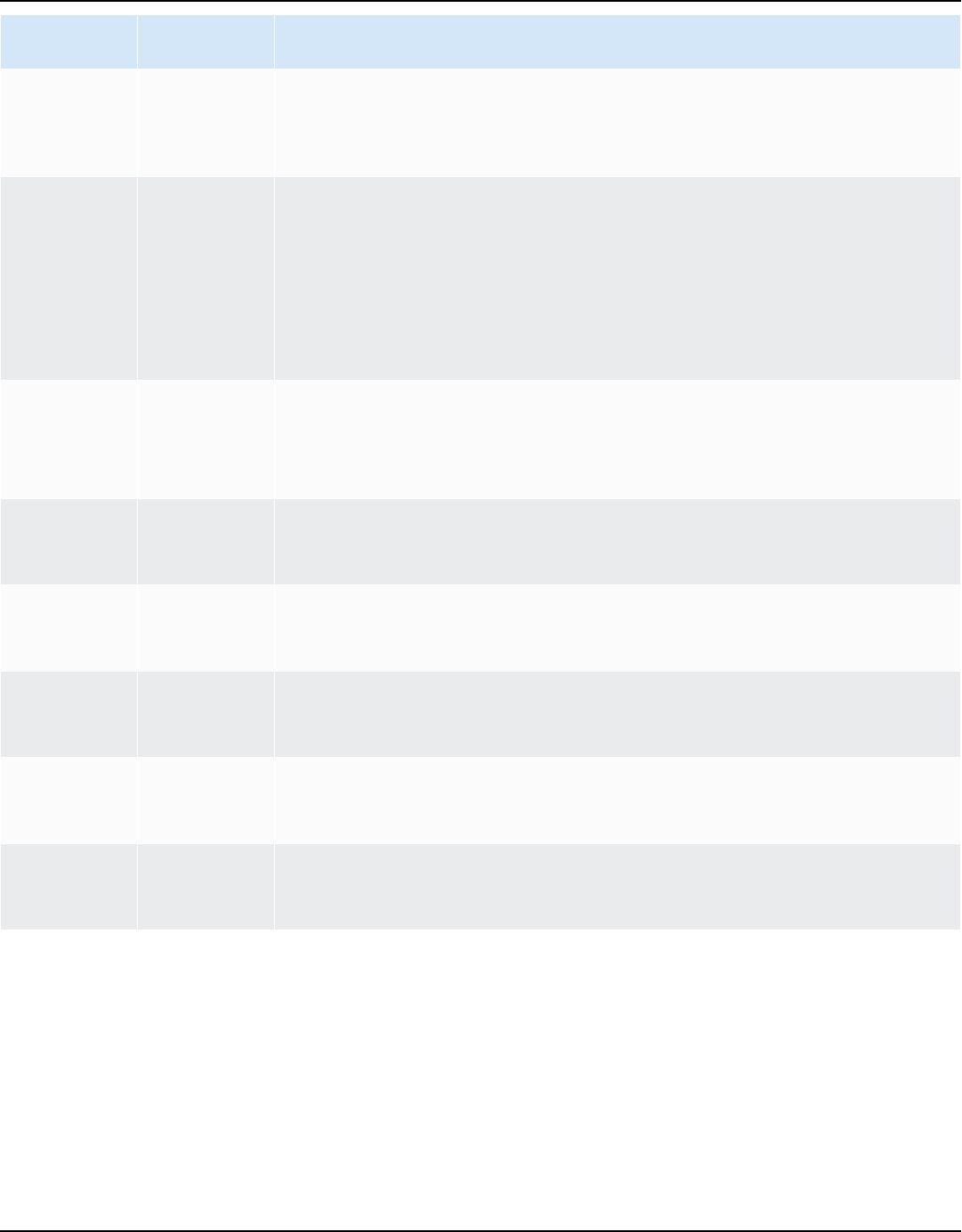

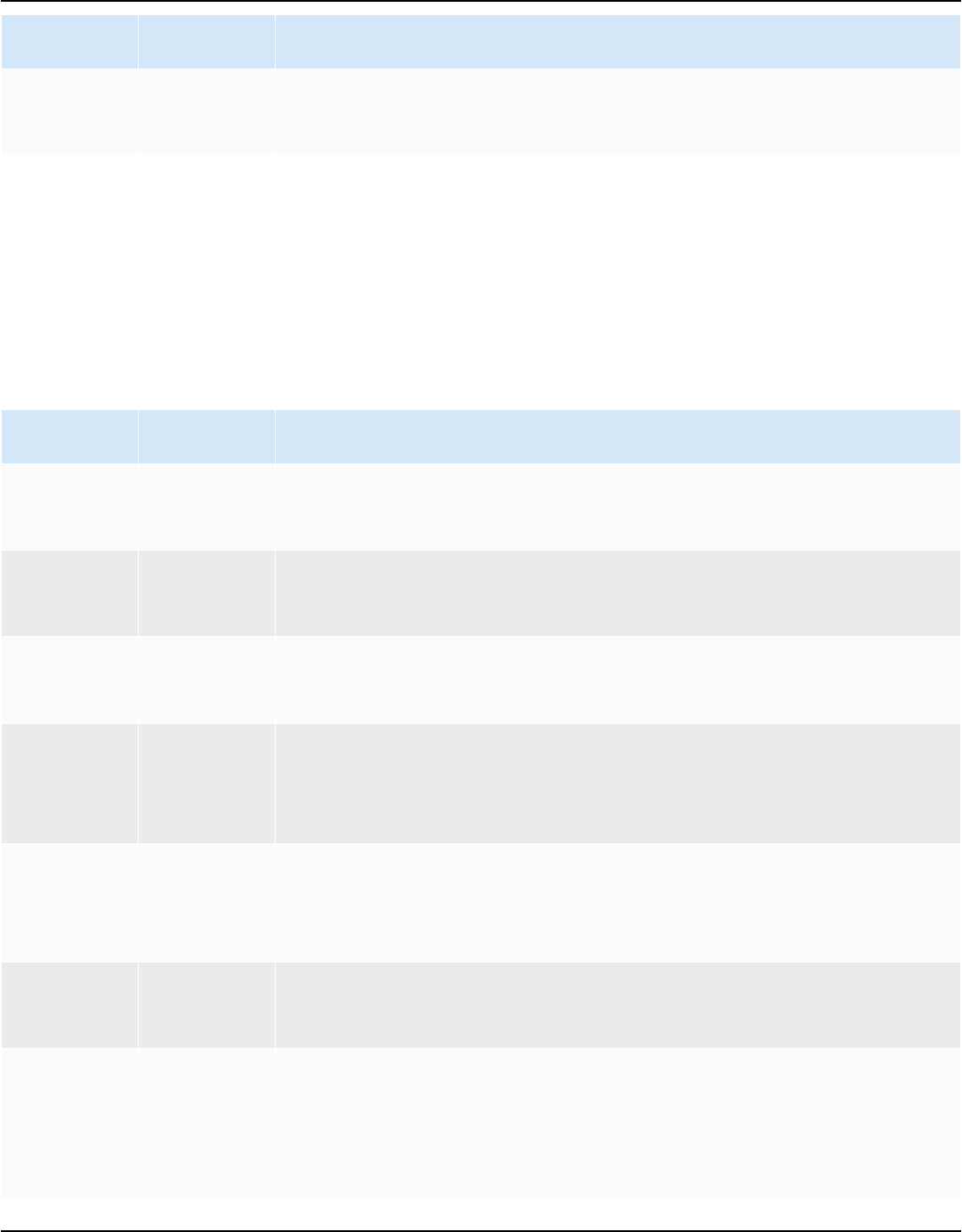

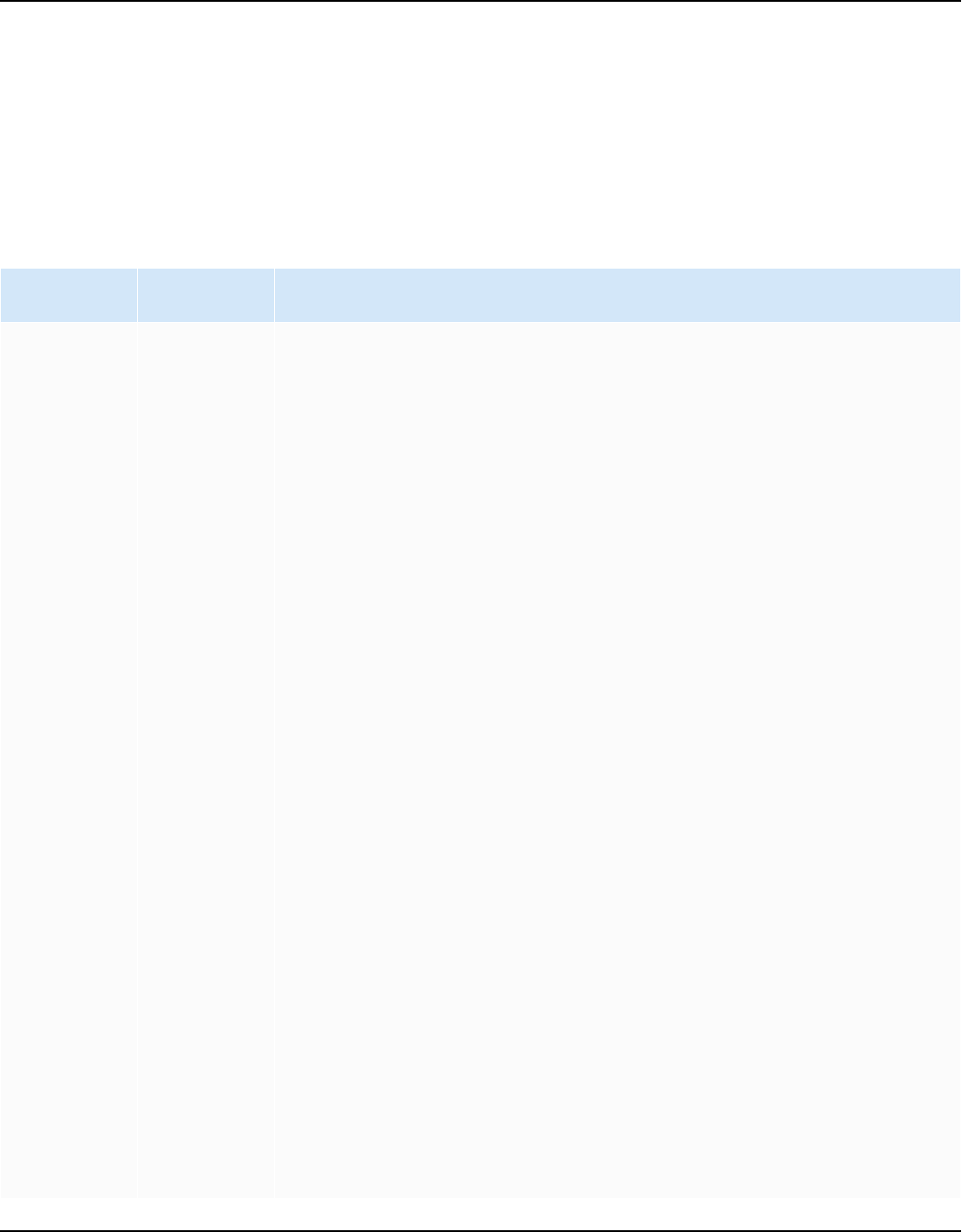

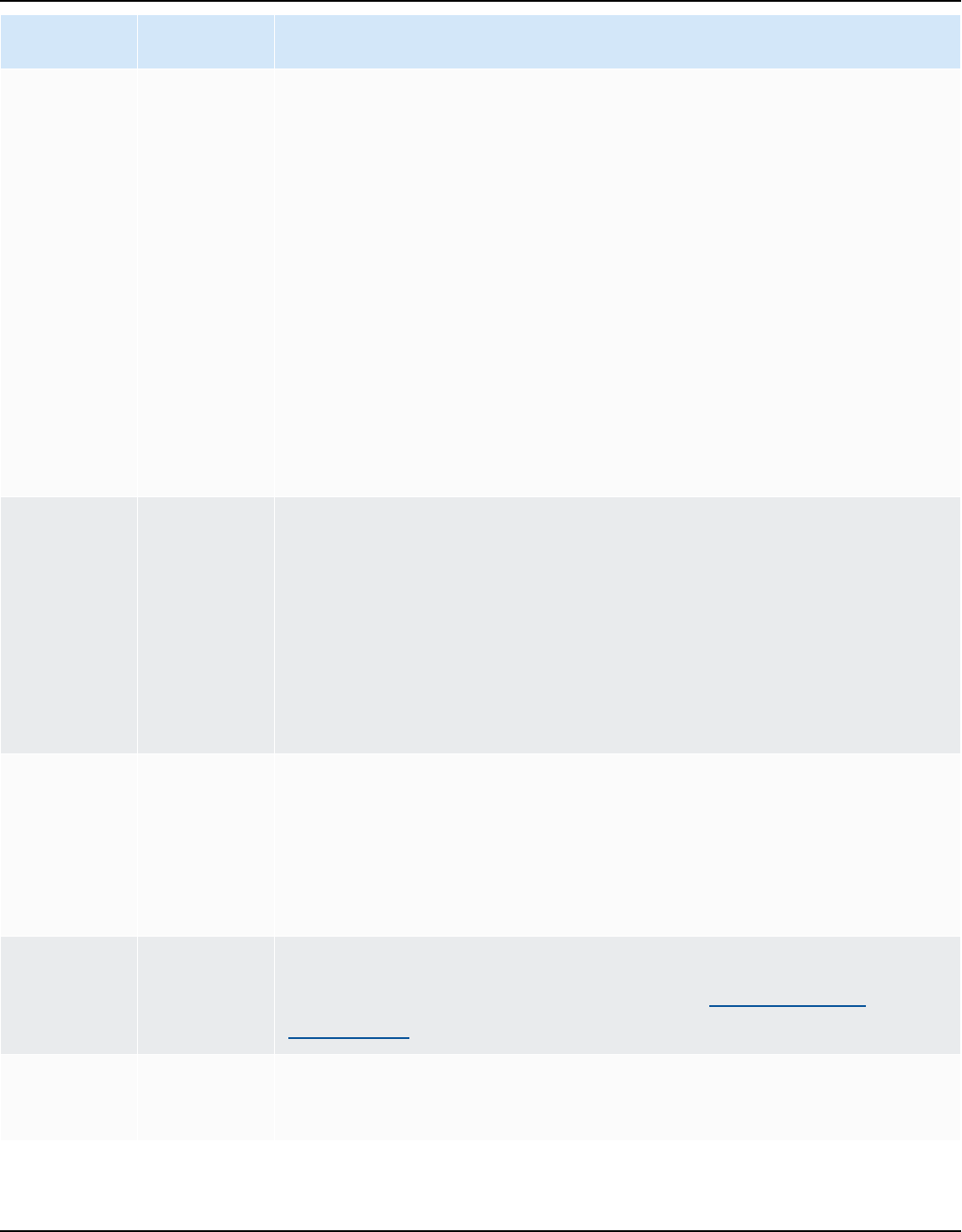

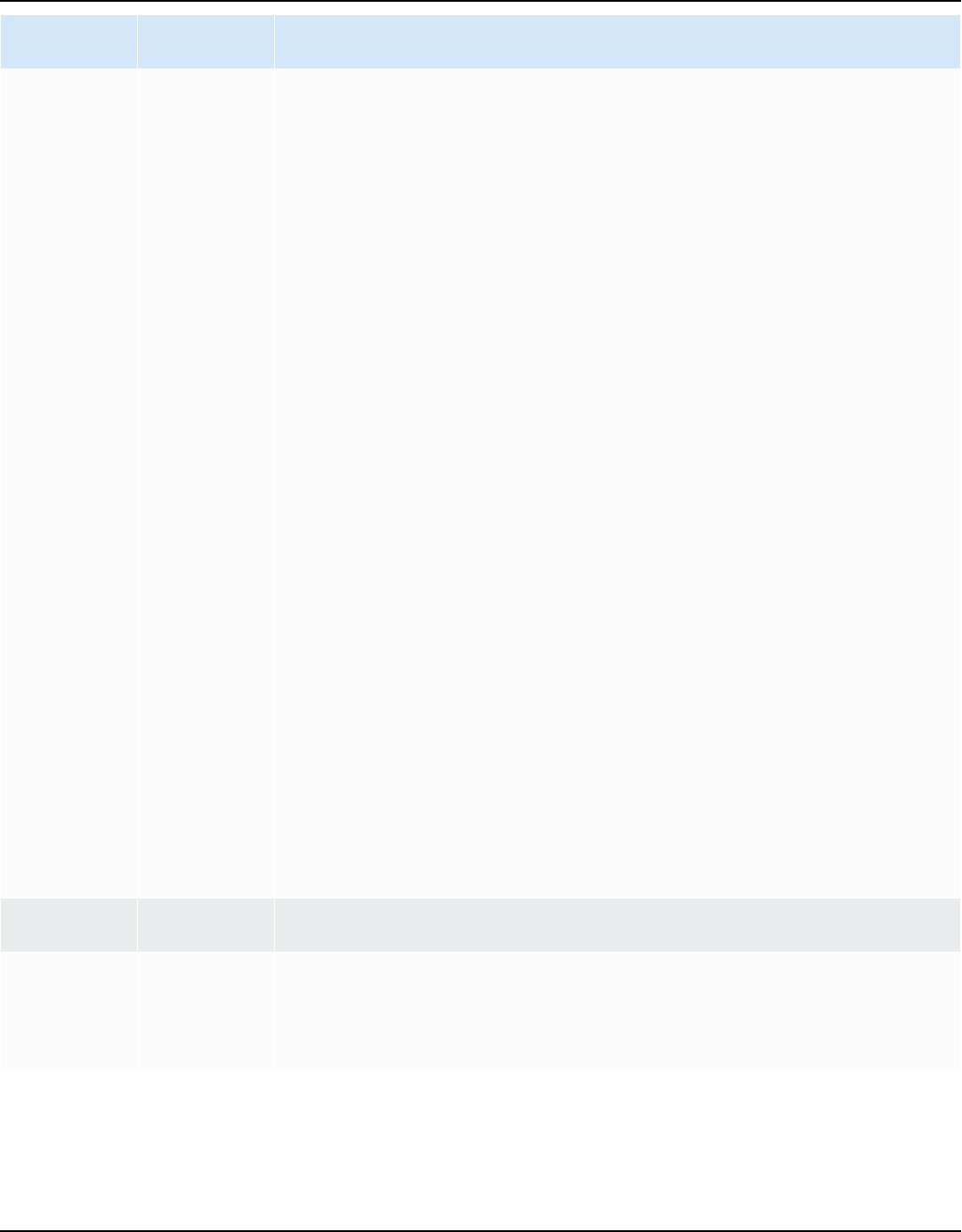

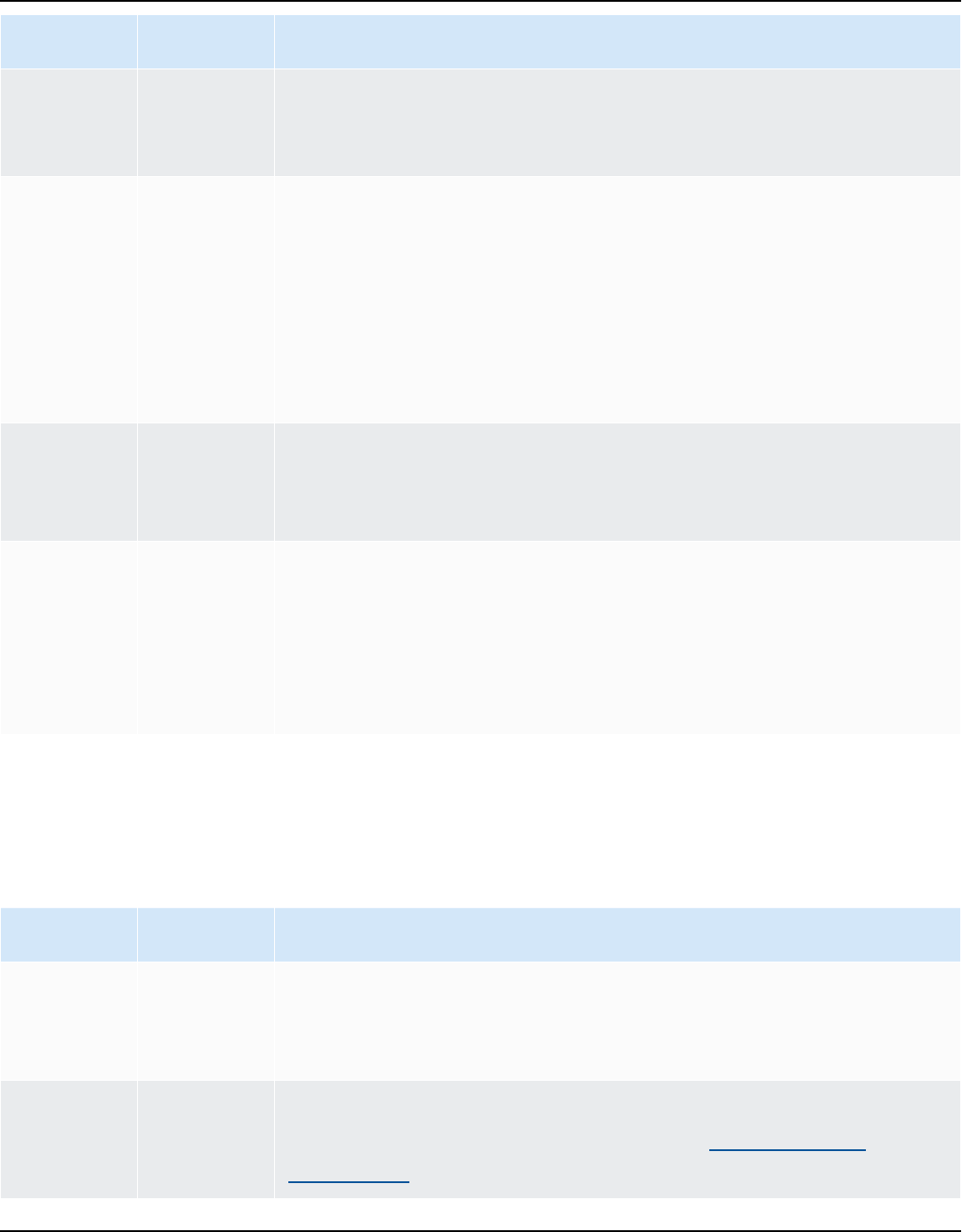

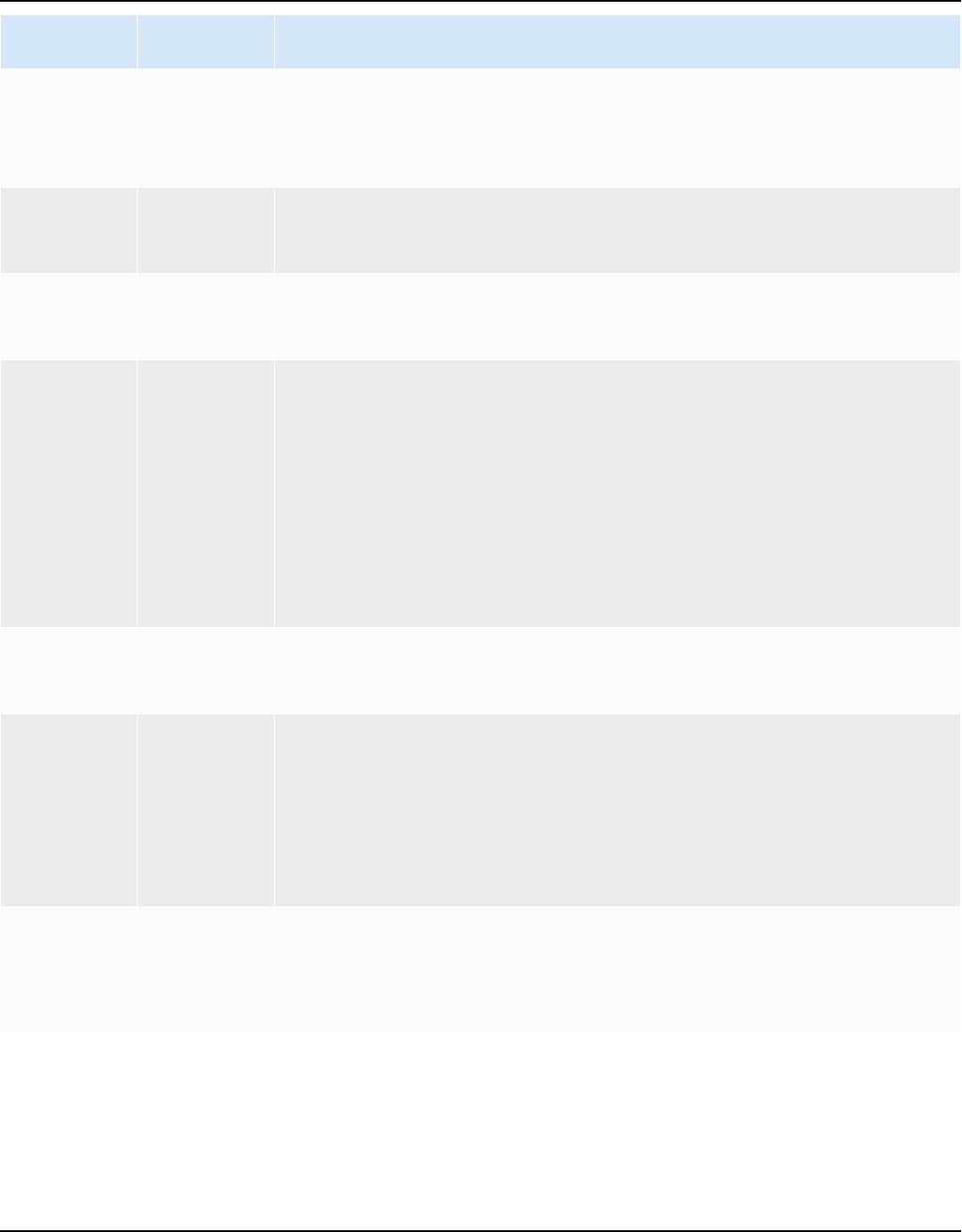

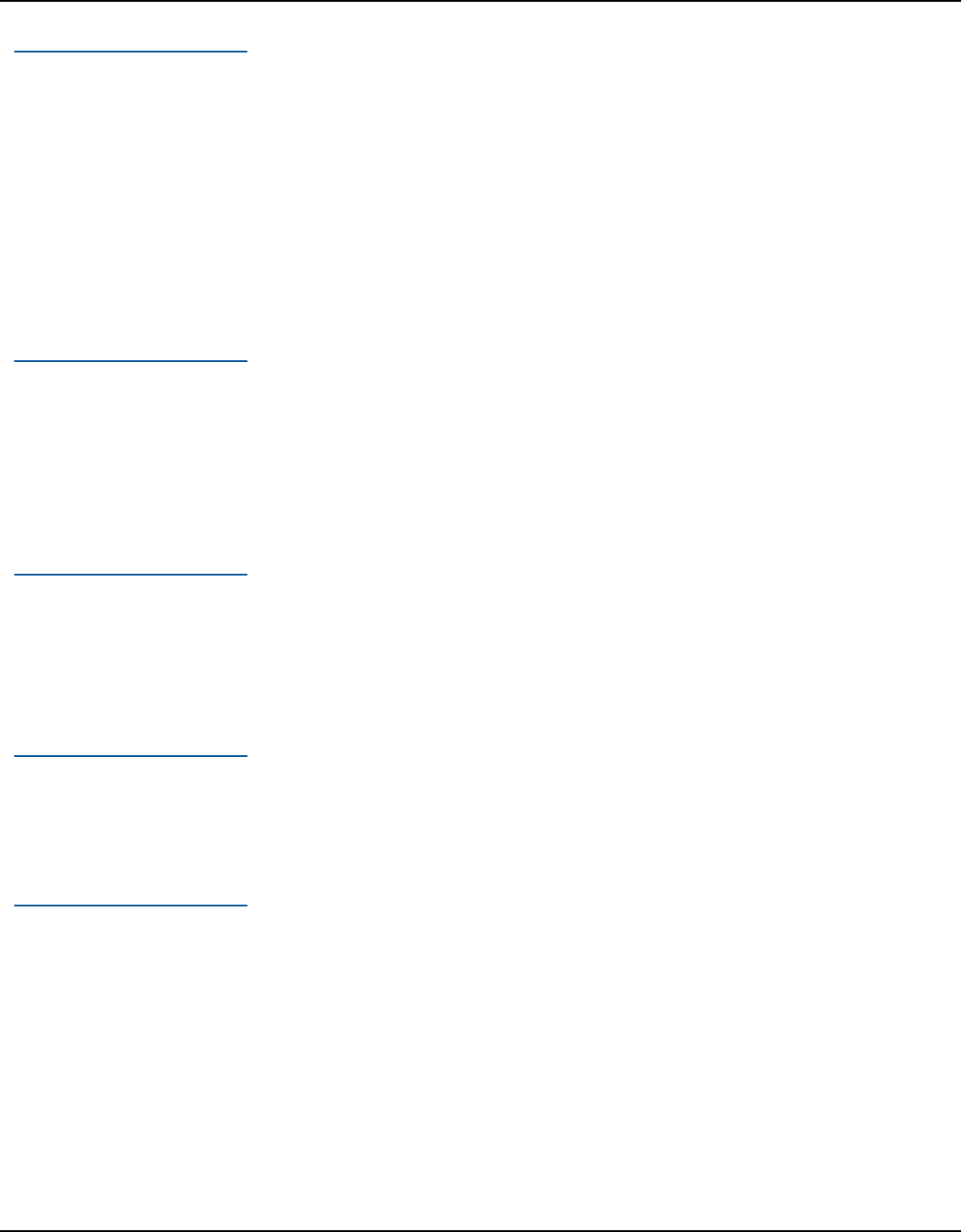

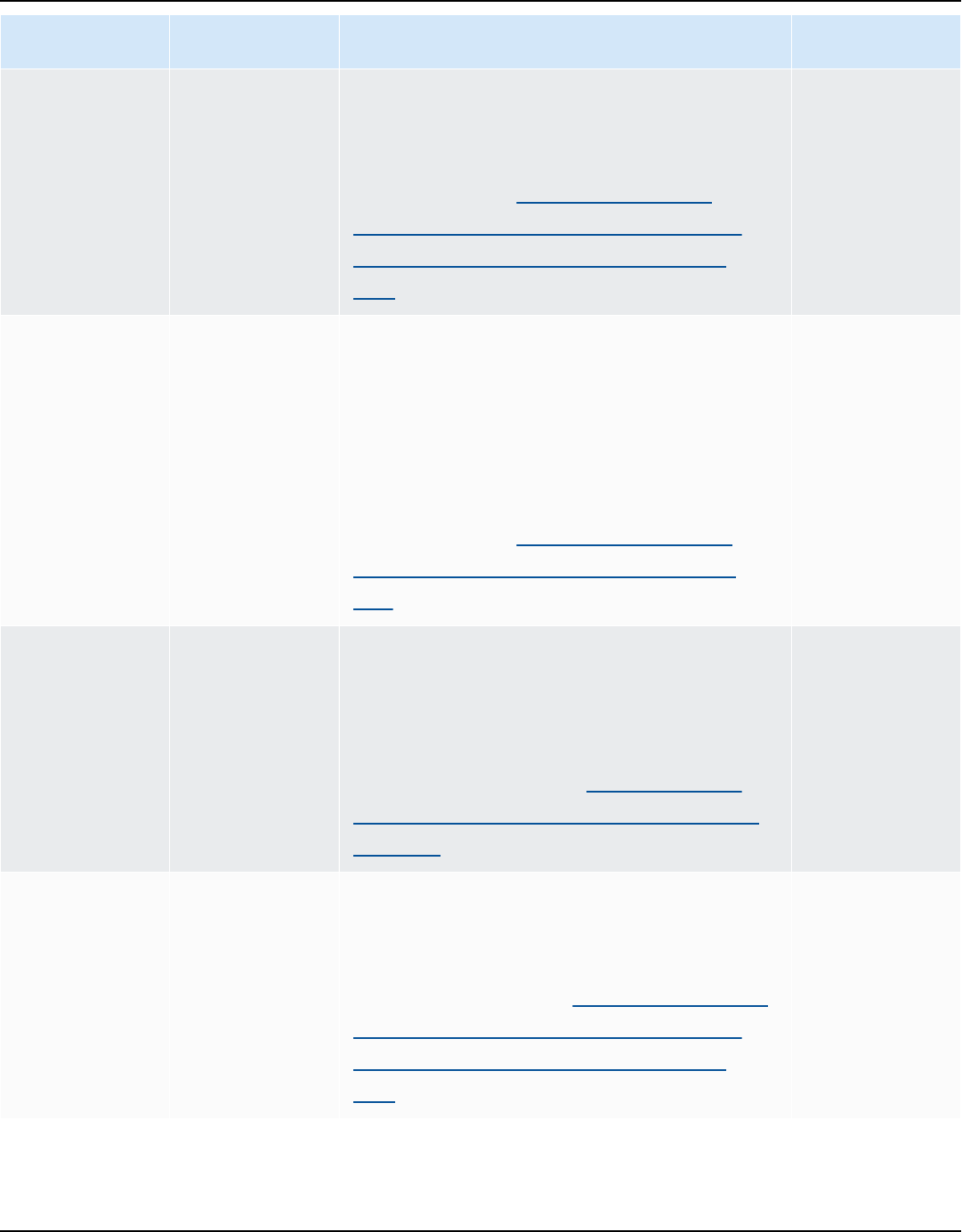

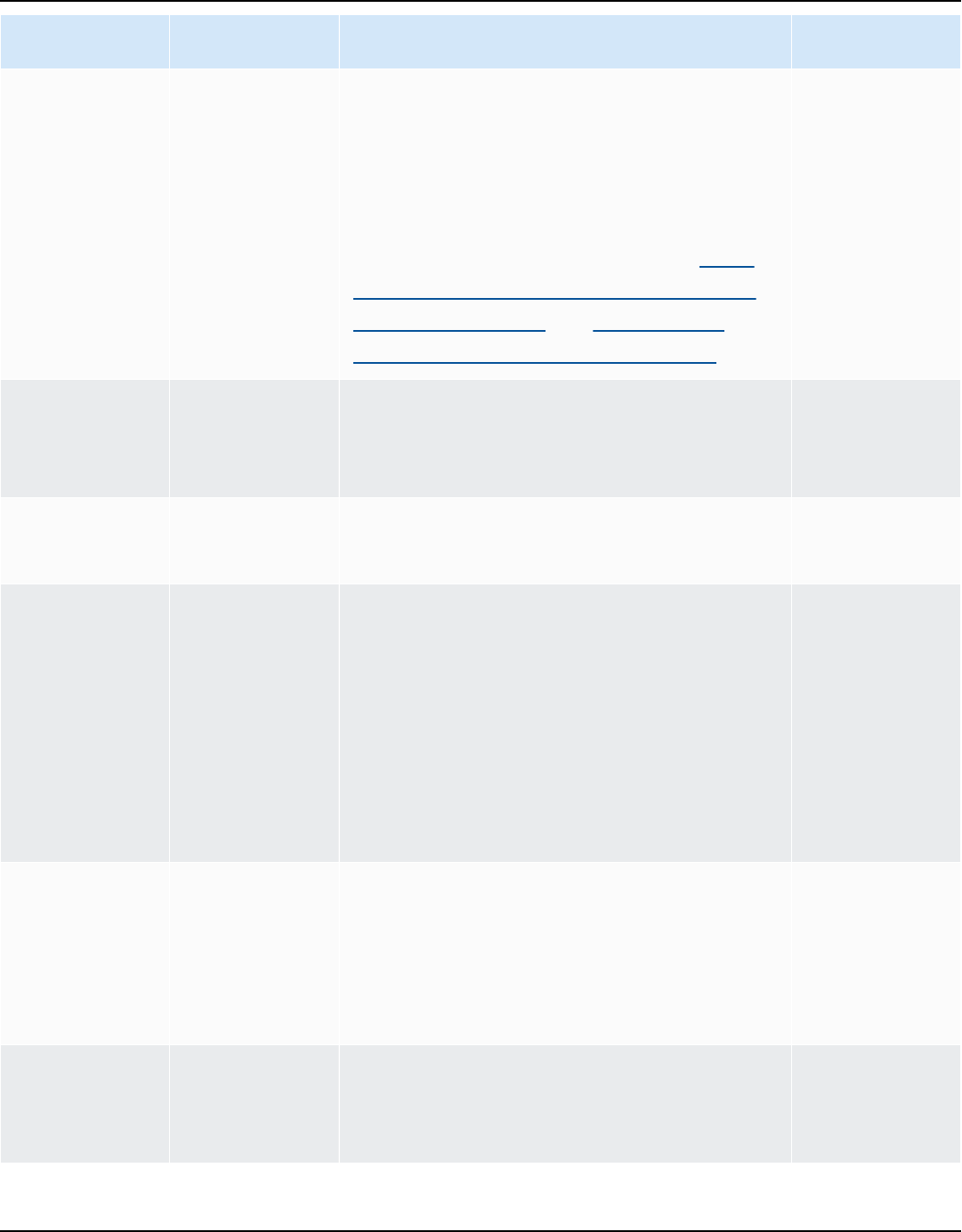

AWS SCT supports the following data warehouse conversions.

Version 1.0.672 2

AWS Schema Conversion Tool User Guide

Source data warehouse Target data warehouse

Amazon Redshift Amazon Redshift

For more information, see Amazon Redshift.

Azure Synapse Analytics Amazon Redshift

For more information, see Azure Synapse

Analytics as a source.

BigQuery Amazon Redshift

For more information, see BigQuery as a

source.

Greenplum Database (versions 4.3 and 6.21) Amazon Redshift

For more information, see Greenplum

databases.

Microsoft SQL Server (version 2008 and

higher)

Amazon Redshift

For more information, see SQL Server Data

Warehouses.

Netezza (version 7.0.3 and higher) Amazon Redshift

For more information, see Netezza databases.

Oracle (version 10.1 and higher) Amazon Redshift

For more information, see Oracle data

warehouse.

Snowflake (version 3) Amazon Redshift

For more information, see Snowflake.

Teradata (version 13 and higher) Amazon Redshift

Version 1.0.672 3

AWS Schema Conversion Tool User Guide

Source data warehouse Target data warehouse

For more information, see Teradata databases.

Vertica (version 7.2.2 and higher) Amazon Redshift

For more information, see Vertica databases.

AWS SCT supports the following data NoSQL database conversions.

Source database Target database

Apache Cassandra (versions 2.1.x, 2.2.16, and

3.11.x)

Amazon DynamoDB

For more information, see Connecting to

Apache Cassandra.

AWS SCT supports conversions of the following extract, transform, and load (ETL) processes. For

more information, see Converting Data Using ETL.

Source Target

Informatica ETL scripts Informatica

Microsoft SQL Server Integration Services

(SSIS) ETL packages

AWS Glue or AWS Glue Studio

Shell scripts with embedded commands from

Teradata Basic Teradata Query (BTEQ)

Amazon Redshift RSQL

Teradata BTEQ ETL scripts AWS Glue or Amazon Redshift RSQL

Teradata FastExport job scripts Amazon Redshift RSQL

Teradata FastLoad job scripts Amazon Redshift RSQL

Teradata MultiLoad job scripts Amazon Redshift RSQL

Version 1.0.672 4

AWS Schema Conversion Tool User Guide

AWS SCT supports the following big data framework migrations. For more information, see

Migrating big data frameworks.

Source Target

Apache Hive (version 0.13.0 and higher) Hive on Amazon EMR

Apache HDFS Amazon S3 or HDFS on Amazon EMR

Apache Oozie AWS Step Functions

Schema conversion overview

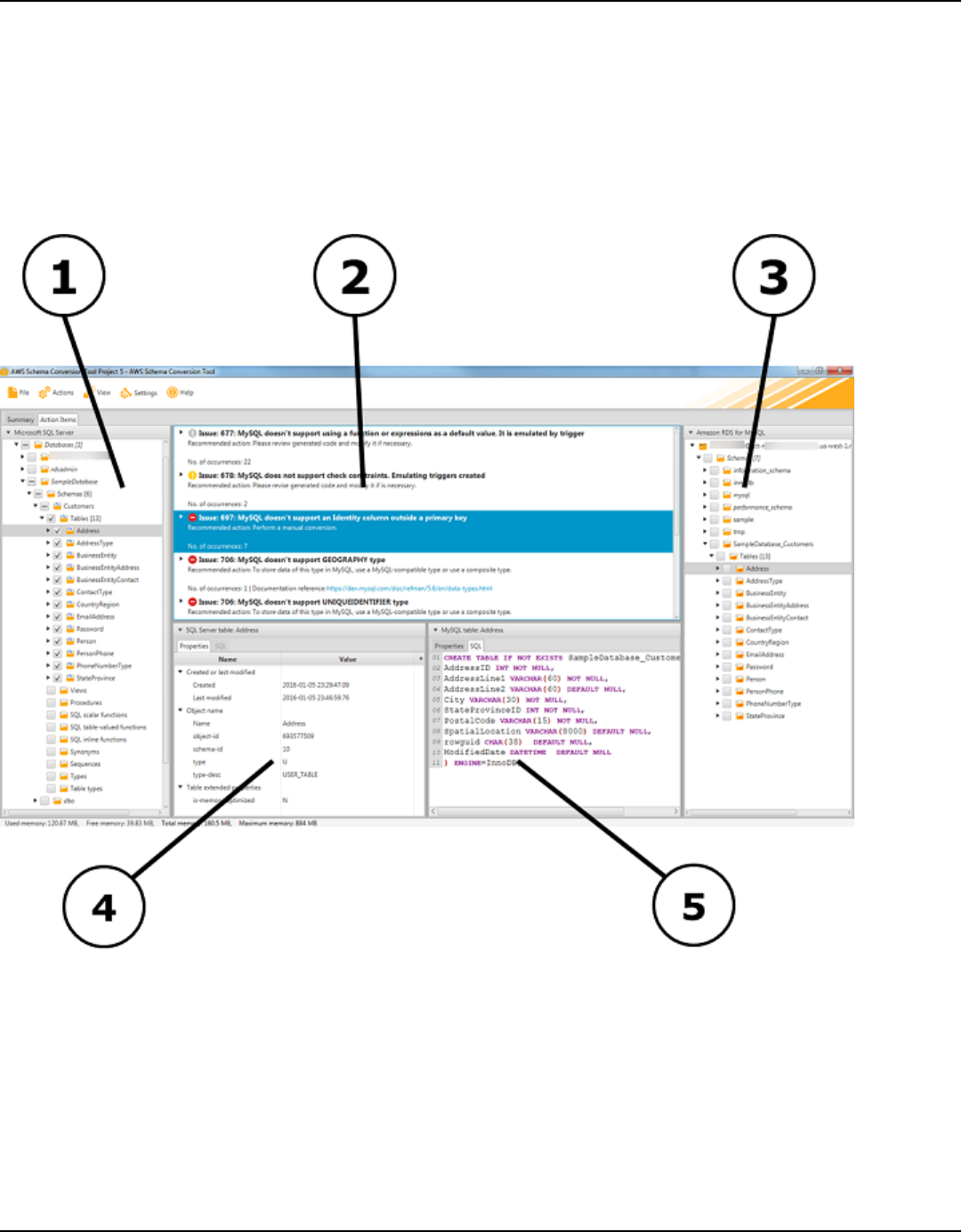

AWS SCT provides a project-based user interface to automatically convert the database schema of

your source database into a format compatible with your target Amazon RDS instance. If schema

from your source database can't be converted automatically, AWS SCT provides guidance on how

you can create equivalent schema in your target Amazon RDS database.

For information about how to install AWS SCT, see Installing and Configuring AWS Schema

Conversion Tool.

For an introduction to the AWS SCT user interface, see Navigating the user interface of the AWS

SCT.

For information on the conversion process, see Converting database schemas in AWS Schema

Conversion Tool.

In addition to converting your existing database schema from one database engine to another,

AWS SCT has some additional features that help you move your data and applications to the AWS

Cloud:

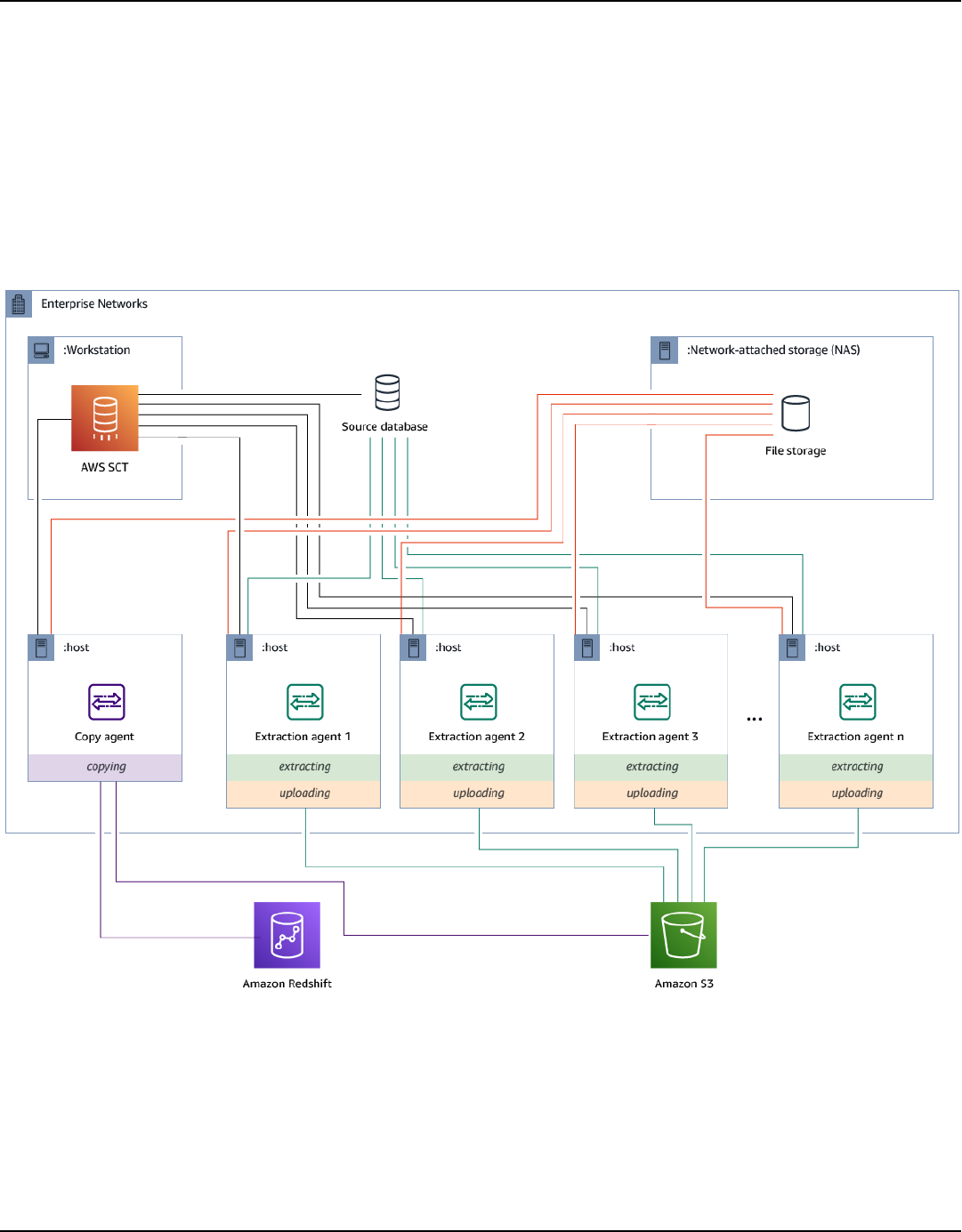

• You can use data extraction agents to extract data from your data warehouse to prepare to

migrate it to Amazon Redshift. To manage the data extraction agents, you can use AWS SCT. For

more information, see Migrating data from on-premises data warehouse to Amazon Redshift

with AWS Schema Conversion Tool.

• You can use AWS SCT to create AWS DMS endpoints and tasks. You can run and monitor these

tasks from AWS SCT. For more information, see Integrating AWS Database Migration Service with

AWS Schema Conversion Tool.

Schema conversion overview Version 1.0.672 5

AWS Schema Conversion Tool User Guide

• In some cases, database features can't be converted to equivalent Amazon RDS or Amazon

Redshift features. The AWS SCT extension pack wizard can help you install AWS Lambda

functions and Python libraries to emulate the features that can't be converted. For more

information, see Using extension packs with AWS Schema Conversion Tool.

• You can use AWS SCT to optimize your existing Amazon Redshift database. AWS SCT

recommends sort keys and distribution keys to optimize your database. For more information,

see Converting data from Amazon Redshift using AWS Schema Conversion Tool.

• You can use AWS SCT to copy your existing on-premises database schema to an Amazon RDS DB

instance running the same engine. You can use this feature to analyze potential cost savings of

moving to the cloud and of changing your license type.

• You can use AWS SCT to convert SQL in your C++, C#, Java, or other application code. You can

view, analyze, edit, and save the converted SQL code. For more information, see Converting

application SQL using AWS SCT.

• You can use AWS SCT to migrate extraction, transformation, and load (ETL) processes. For more

information, see Converting Data Using ETL Processes in AWS Schema Conversion Tool.

Providing feedback

You can provide feedback about AWS SCT. You can file a bug report, submit a feature request, or

provide general information.

To provide feedback about AWS SCT

1. Start the AWS Schema Conversion Tool.

2. Open the Help menu and then choose Leave Feedback. The Leave Feedback dialog box

appears.

3. For Area, choose Information, Bug report, or Feature request.

4. For Source database, choose your source database. Choose Any if your feedback is not specific

to a particular database.

5. For Target database, choose your target database. Choose Any if your feedback is not specific

to a particular database.

6. For Title, type a title for your feedback.

7. For Message, type your feedback.

8. Choose Send to submit your feedback.

Giving feedback Version 1.0.672 6

AWS Schema Conversion Tool User Guide

Installing and Configuring AWS Schema Conversion Tool

The AWS Schema Conversion Tool (AWS SCT) is a standalone application that provides a project-

based user interface. AWS SCT is available for Microsoft Windows, Fedora Linux, and Ubuntu Linux.

AWS SCT is supported only on 64-bit operating systems.

To ensure that you get the correct version of the AWS SCT distribution file we provide verification

steps after you download the compressed file. You can verify the file using the steps provided.

AWS SCT is available as both a standalone application and a command-line tool. For information

about the command line tool, see AWS Schema Conversion Tool CLI.

Topics

• Installing AWS Schema Conversion Tool

• Validating the AWS Schema Conversion Tool insttallation

• Installing JDBC drivers for AWS Schema Conversion Tool

• Updating AWS Schema Conversion Tool

• AWS Schema Conversion Tool CLI

Installing AWS Schema Conversion Tool

You can install AWS SCT on the following operating systems:

• Microsoft Windows 10

• Fedora Linux 36 and higher

• Ubuntu Linux 18 and higher

To install AWS SCT

1. Download the compressed file that contains the AWS SCT installer, using the link for your

operating system. All compressed files have a .zip extension. When you extract the AWS SCT

installer file, it will be in the appropriate format for your operating system.

• Microsoft Windows

• Ubuntu Linux (.deb)

Installing AWS SCT Version 1.0.672 7

AWS Schema Conversion Tool User Guide

• Fedora Linux (.rpm)

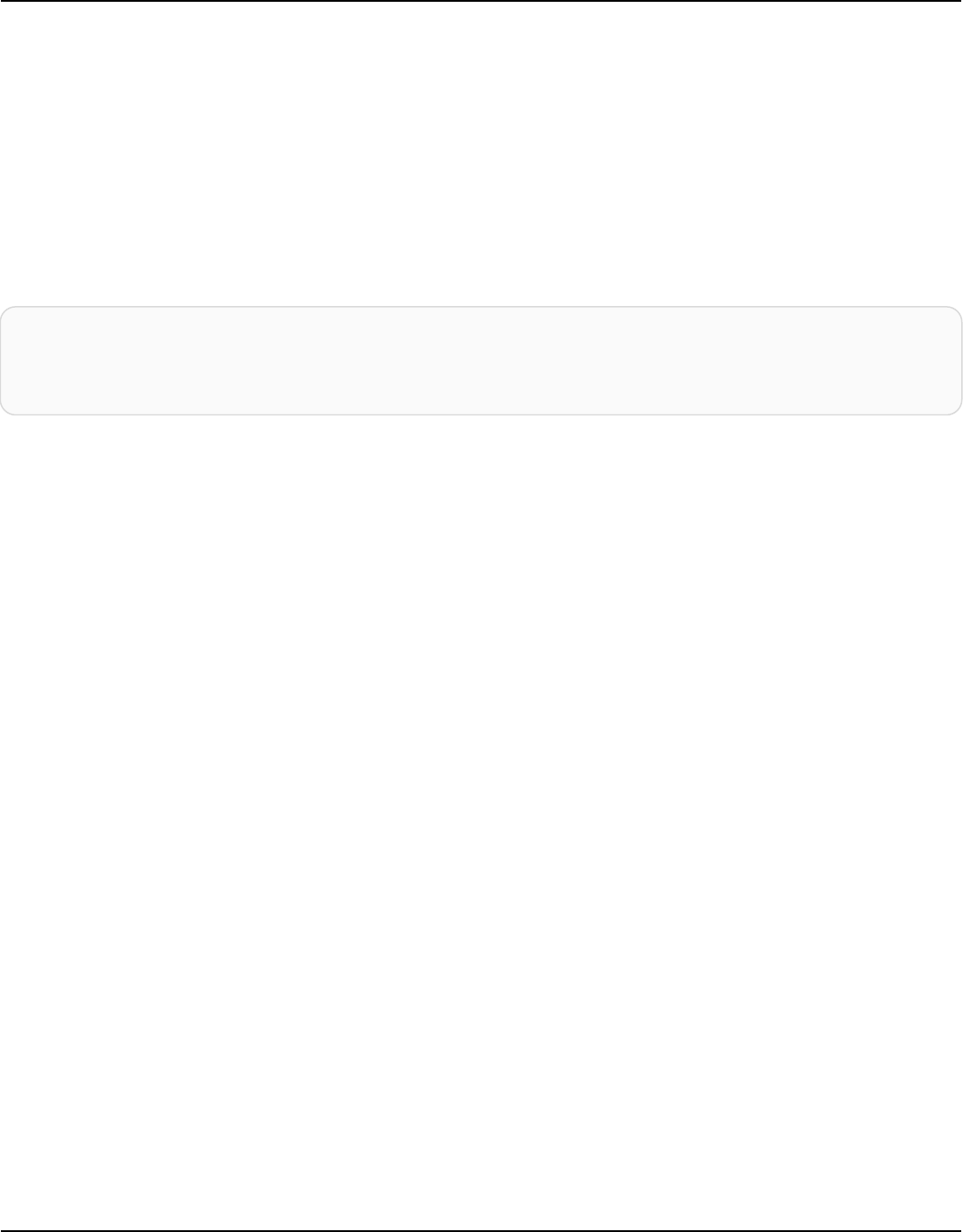

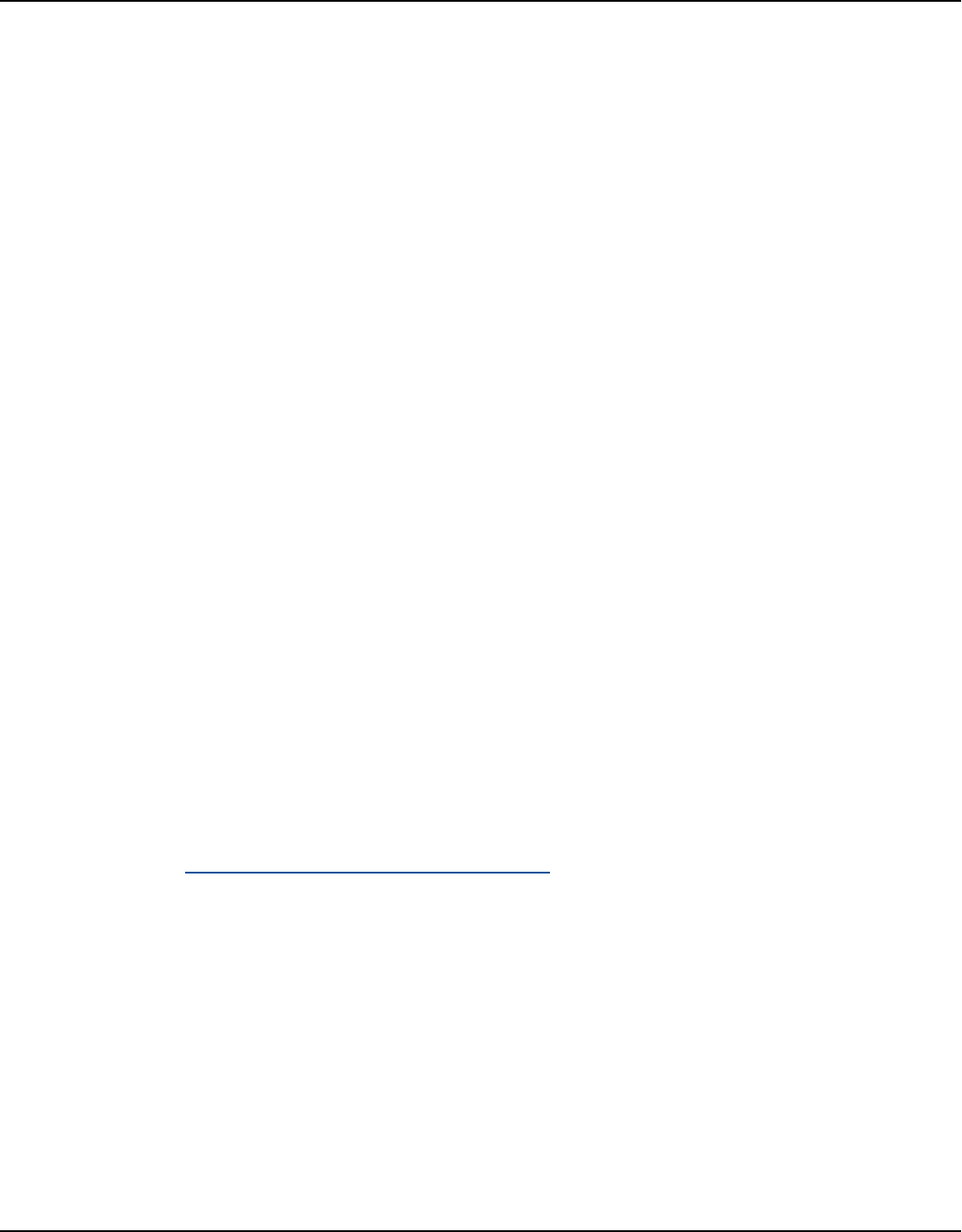

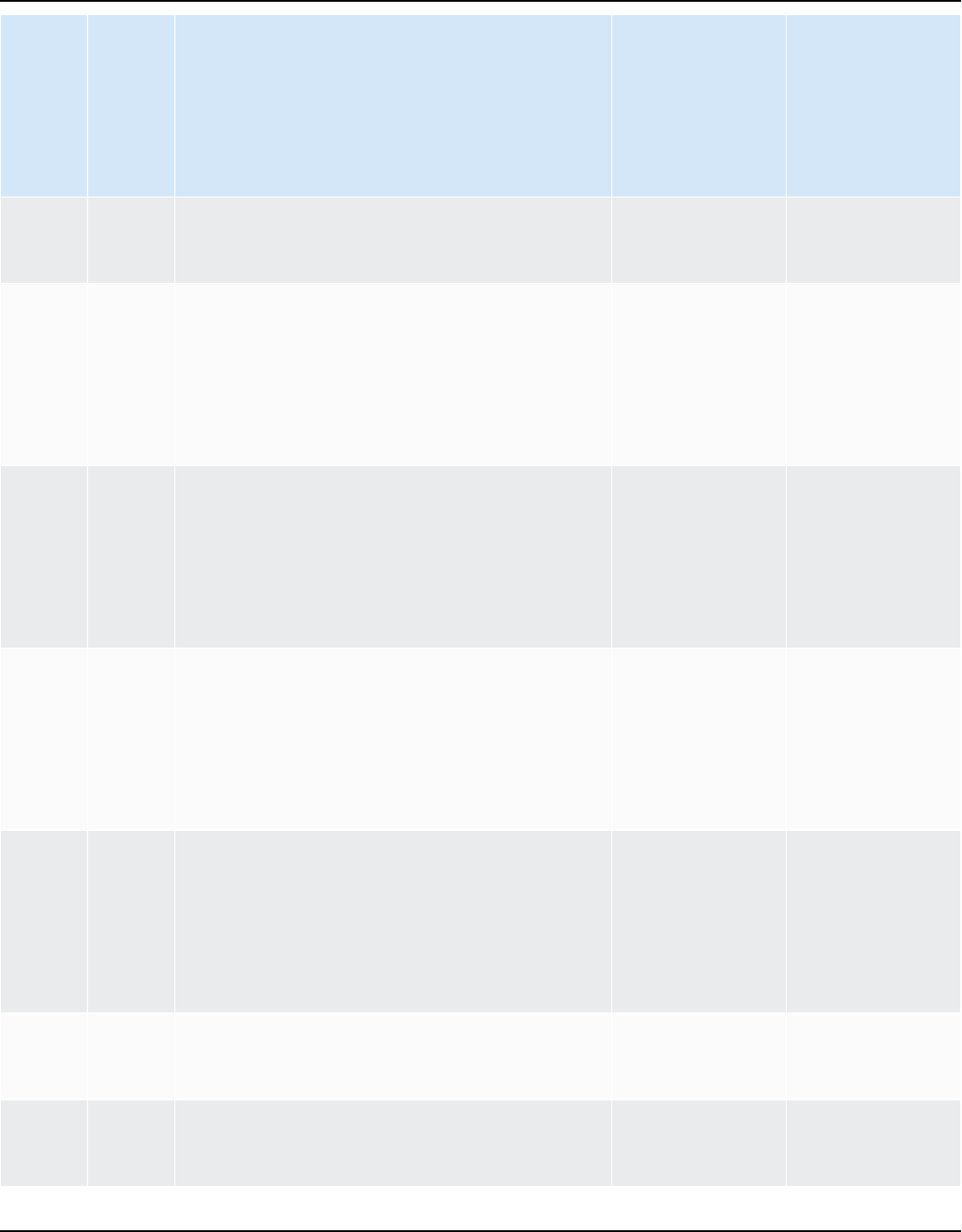

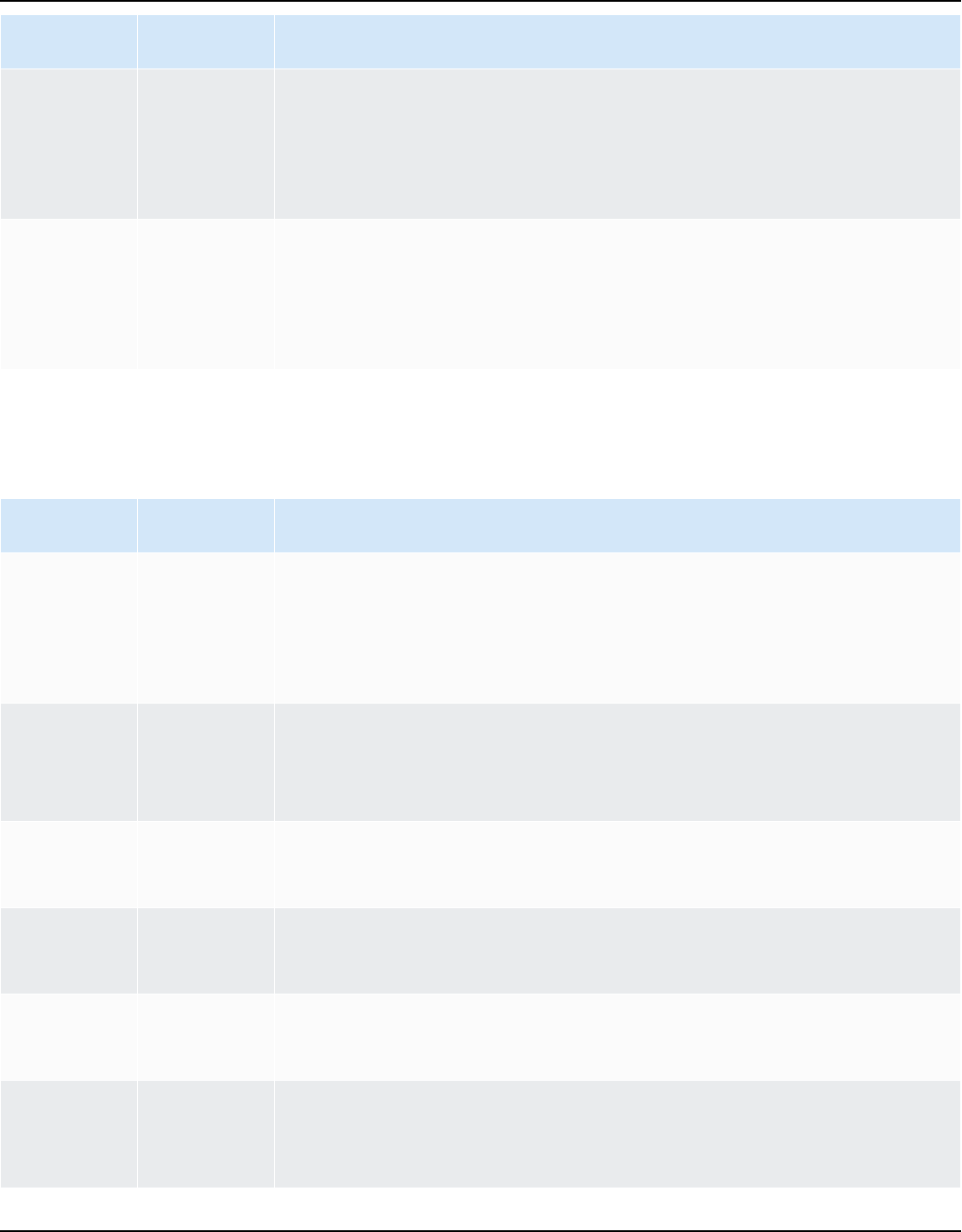

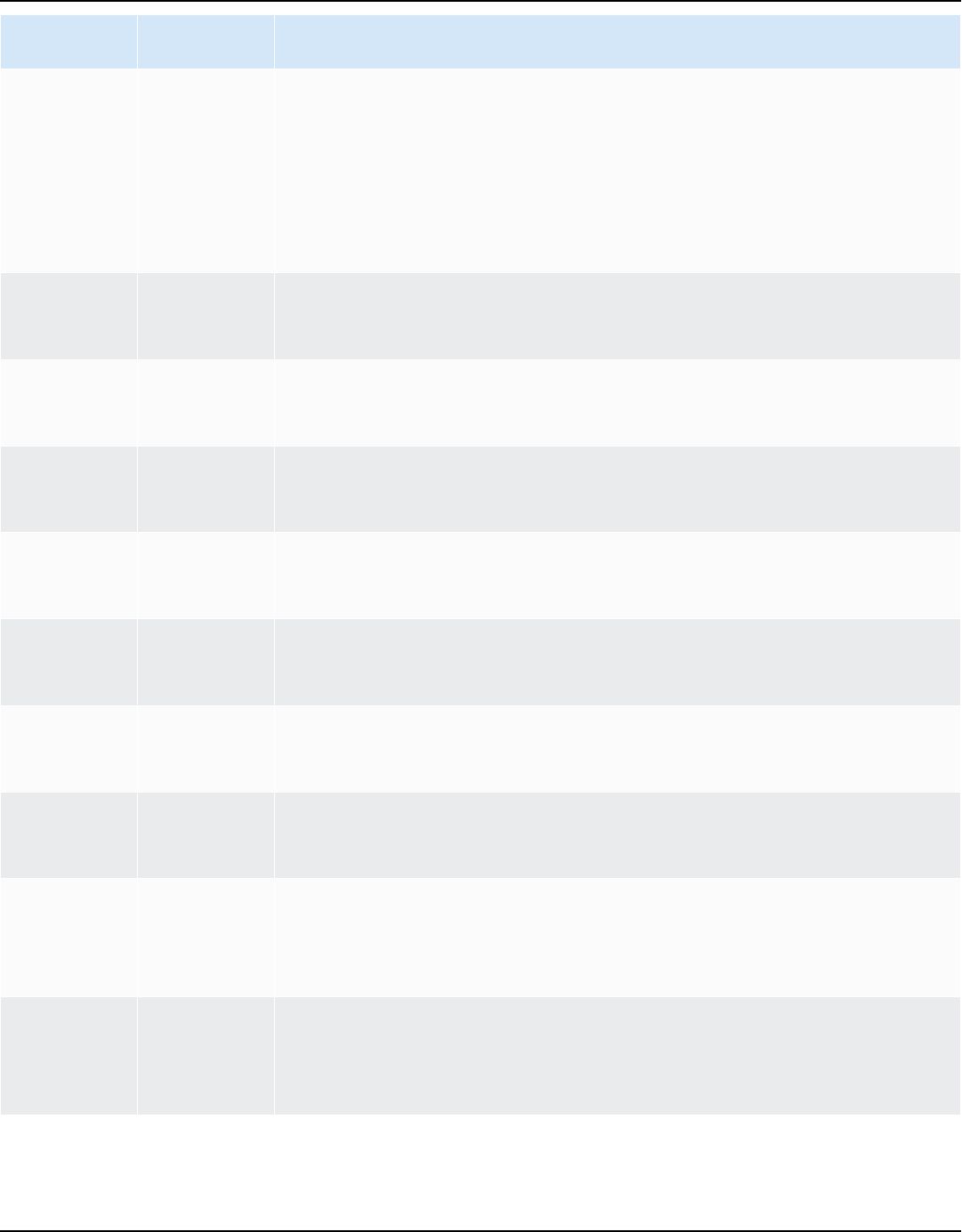

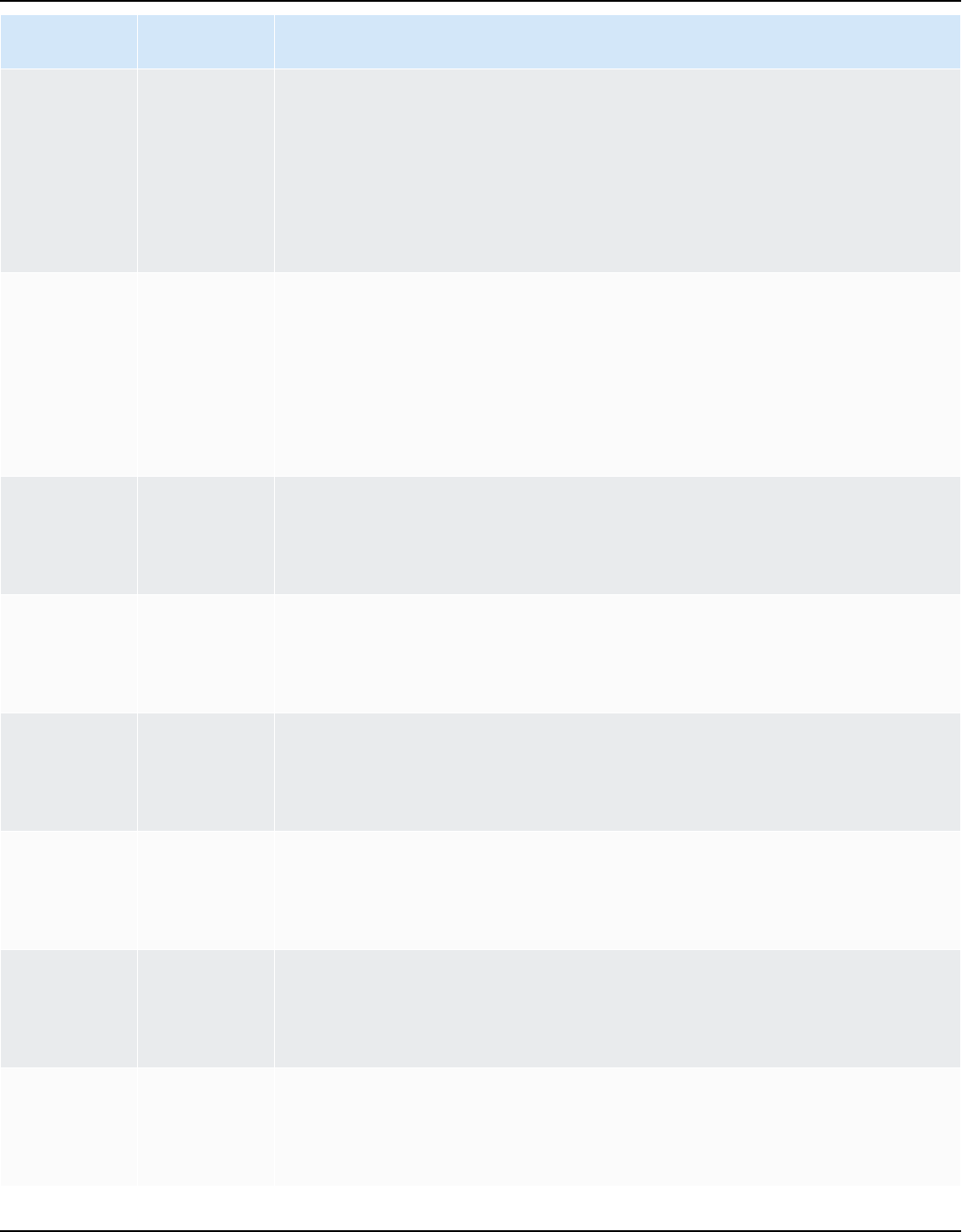

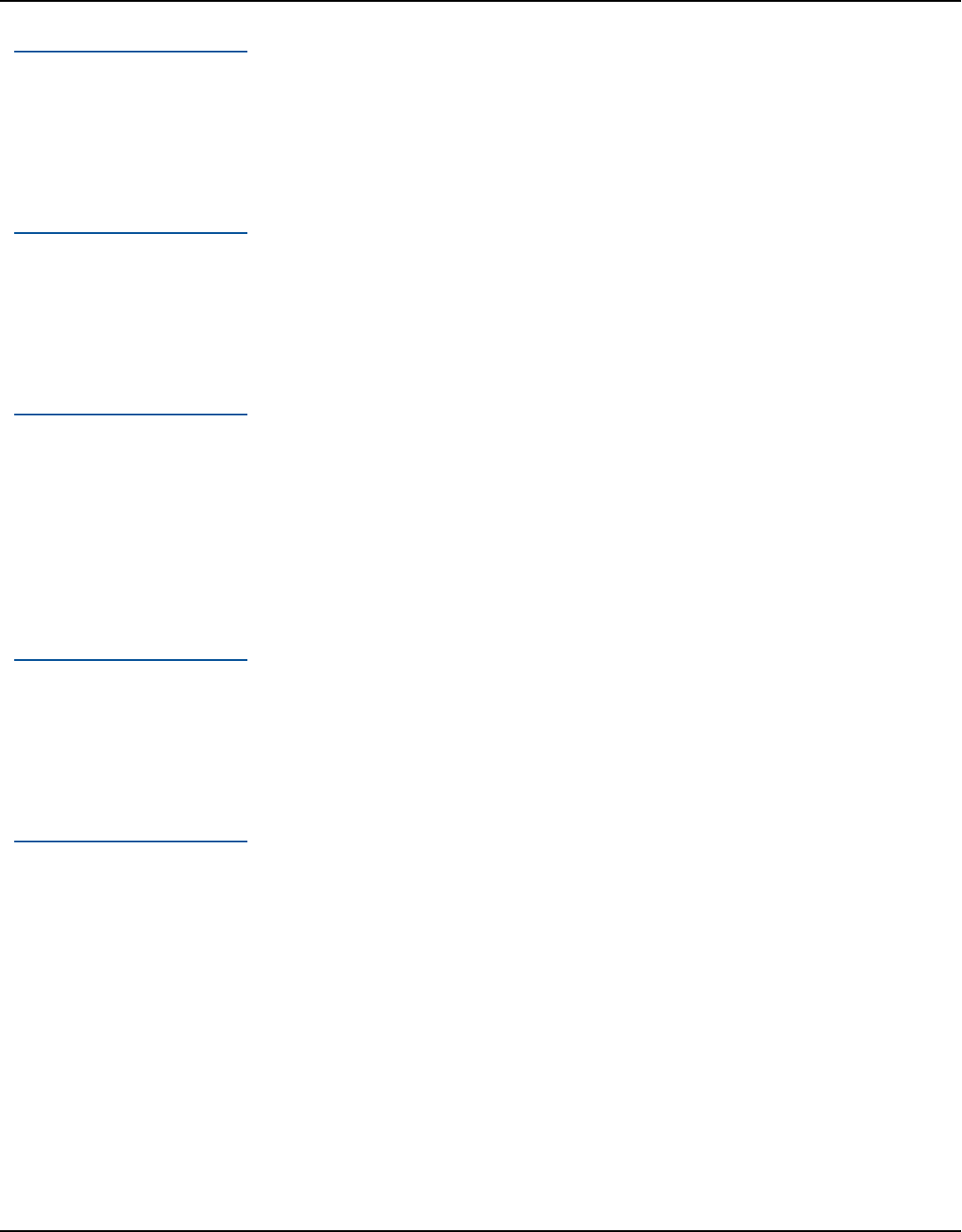

2. Extract the AWS SCT installer file for your operating system, shown following.

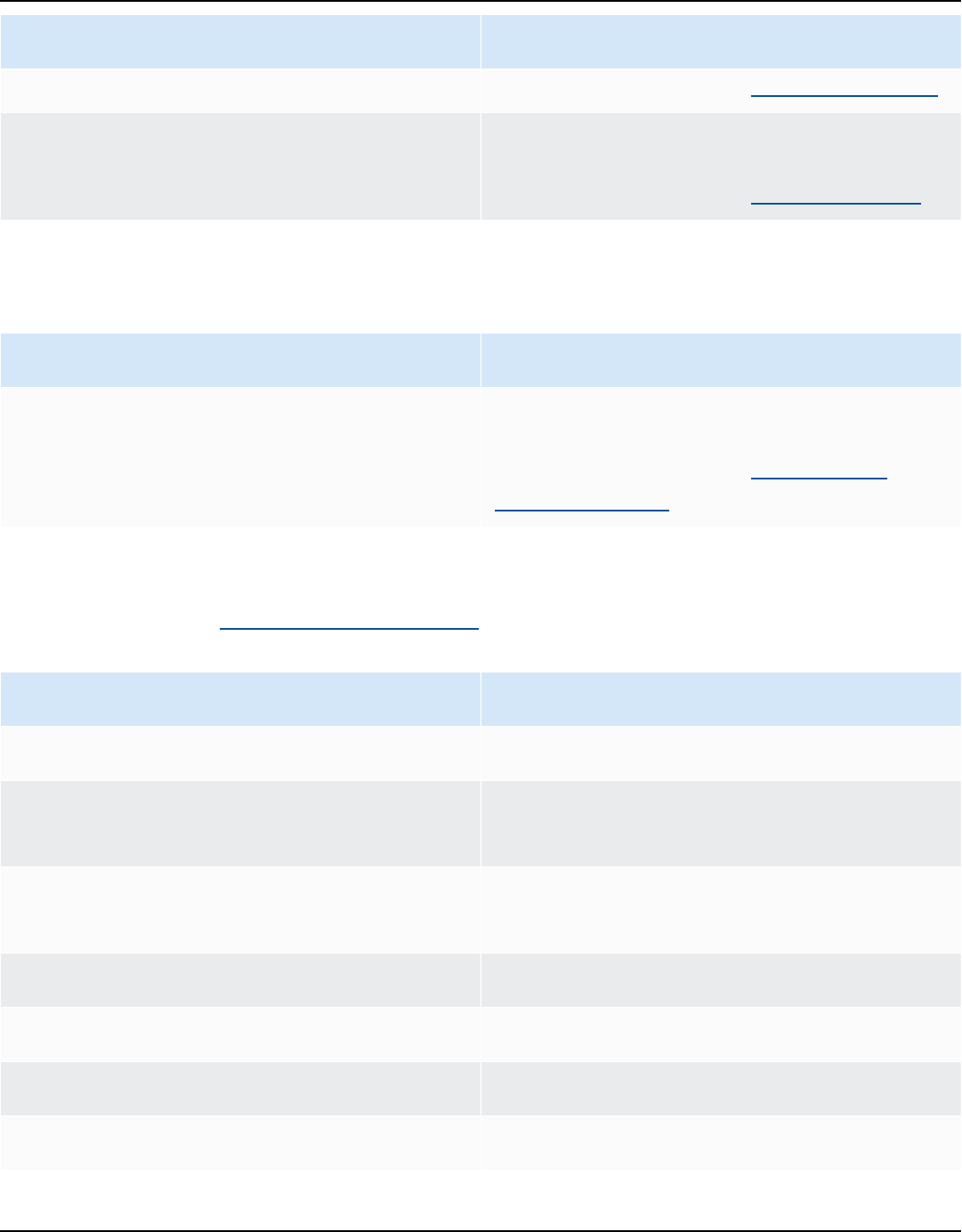

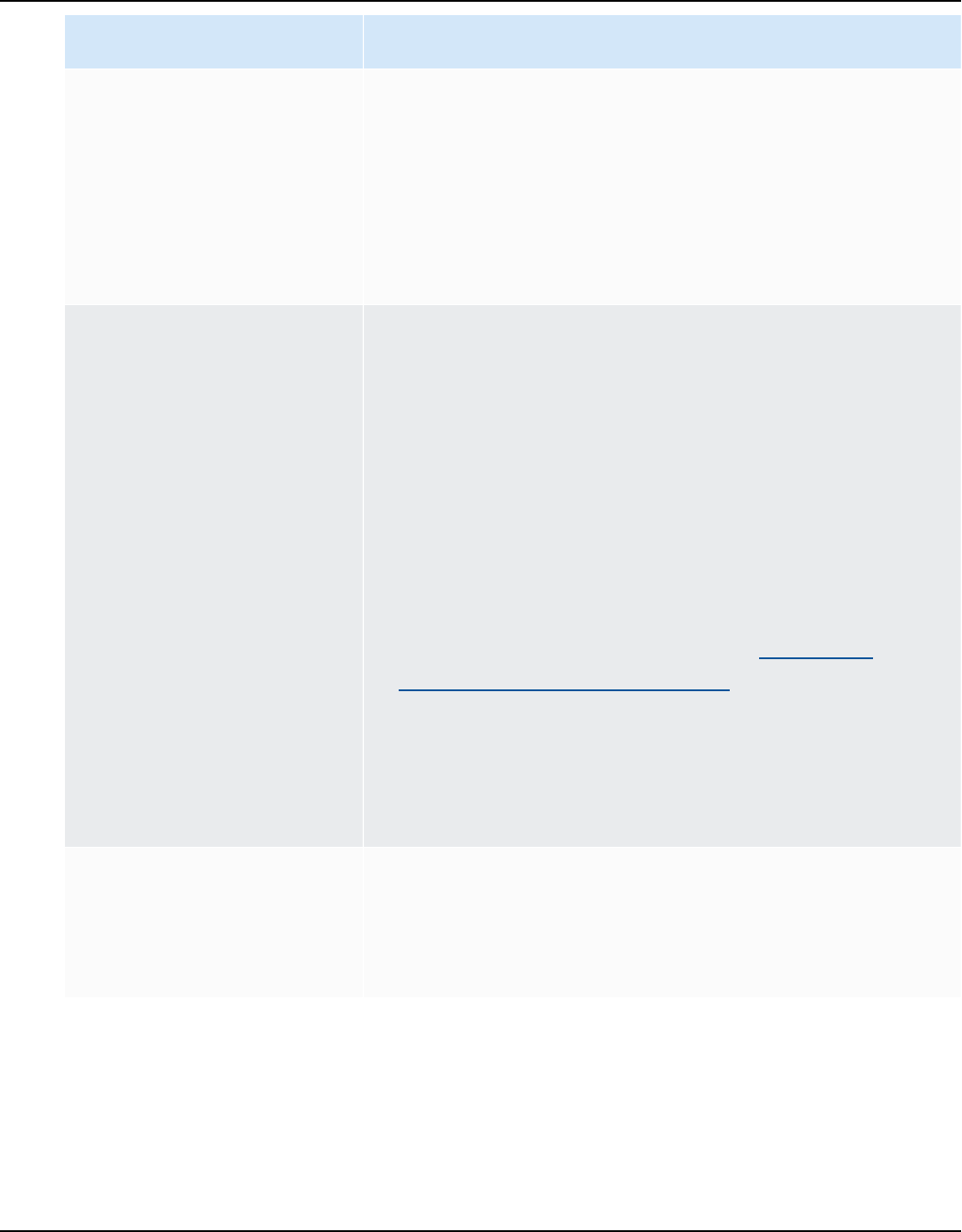

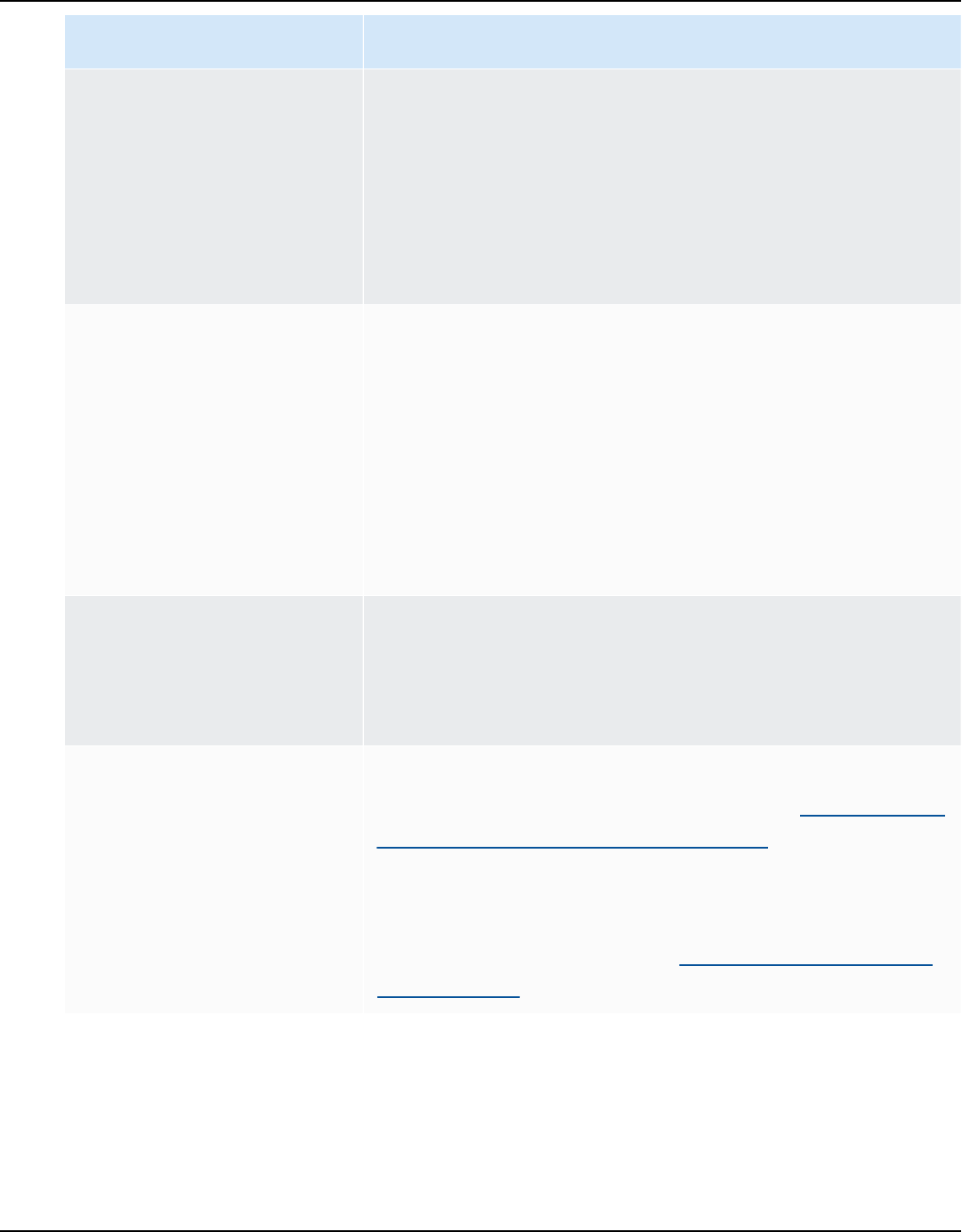

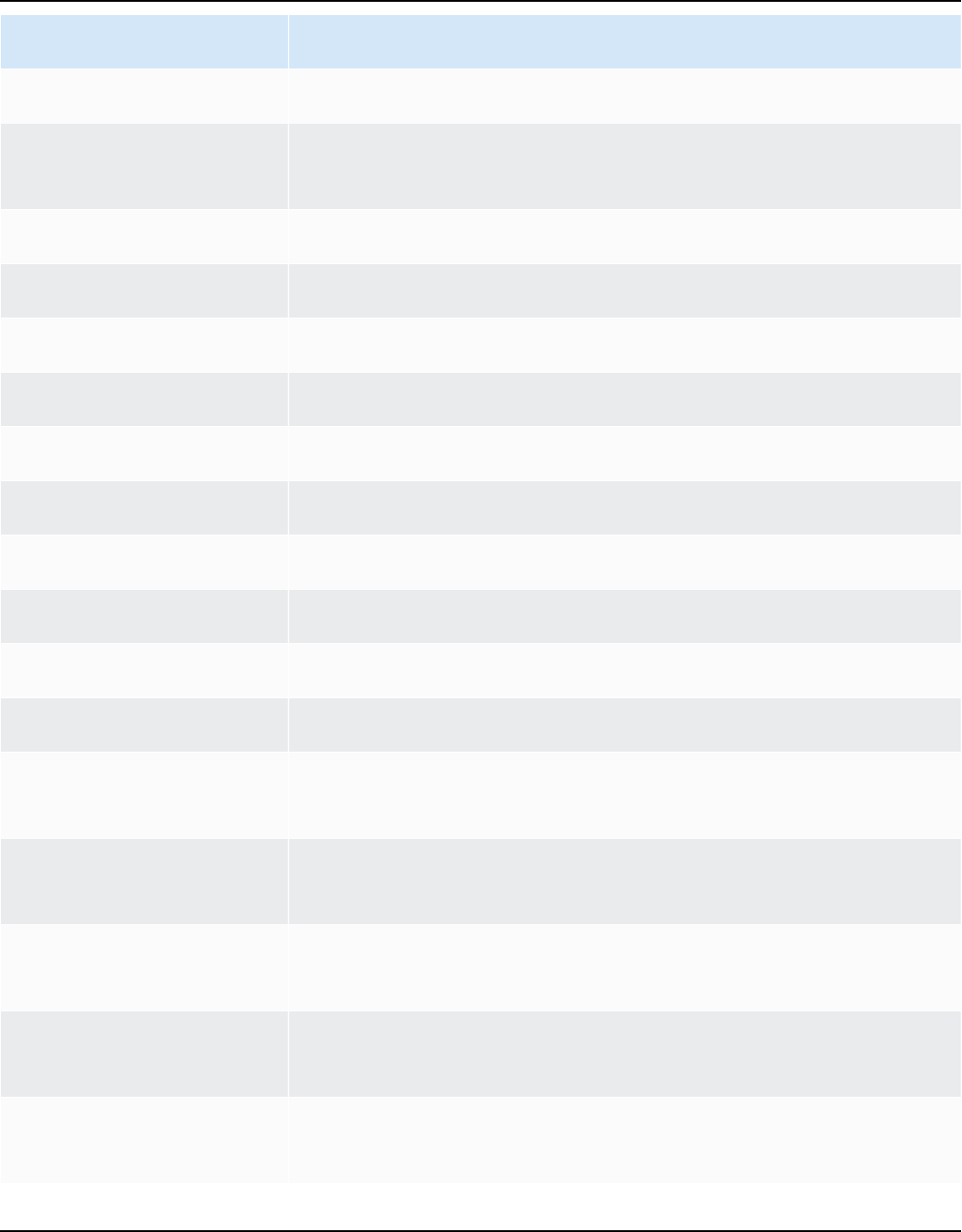

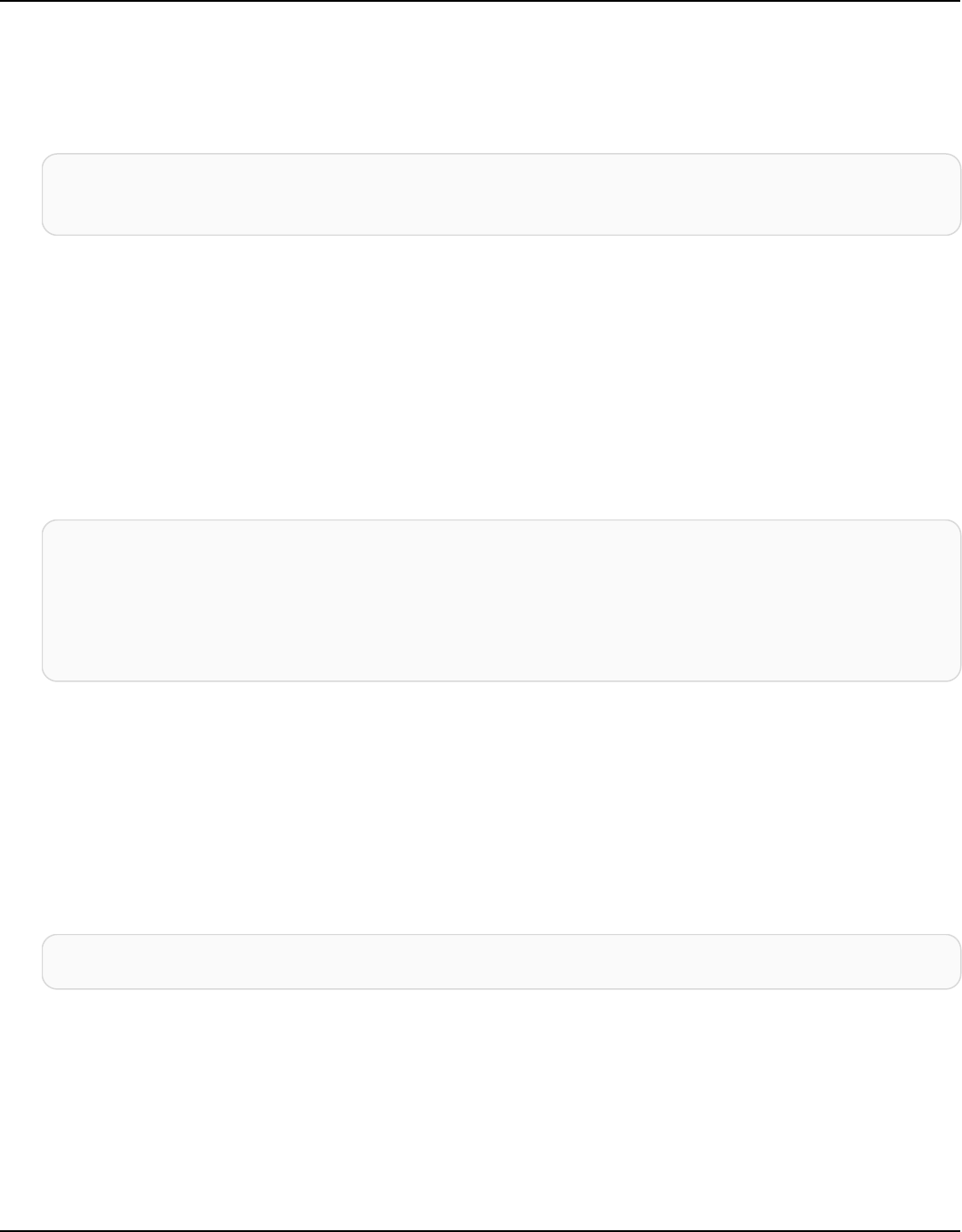

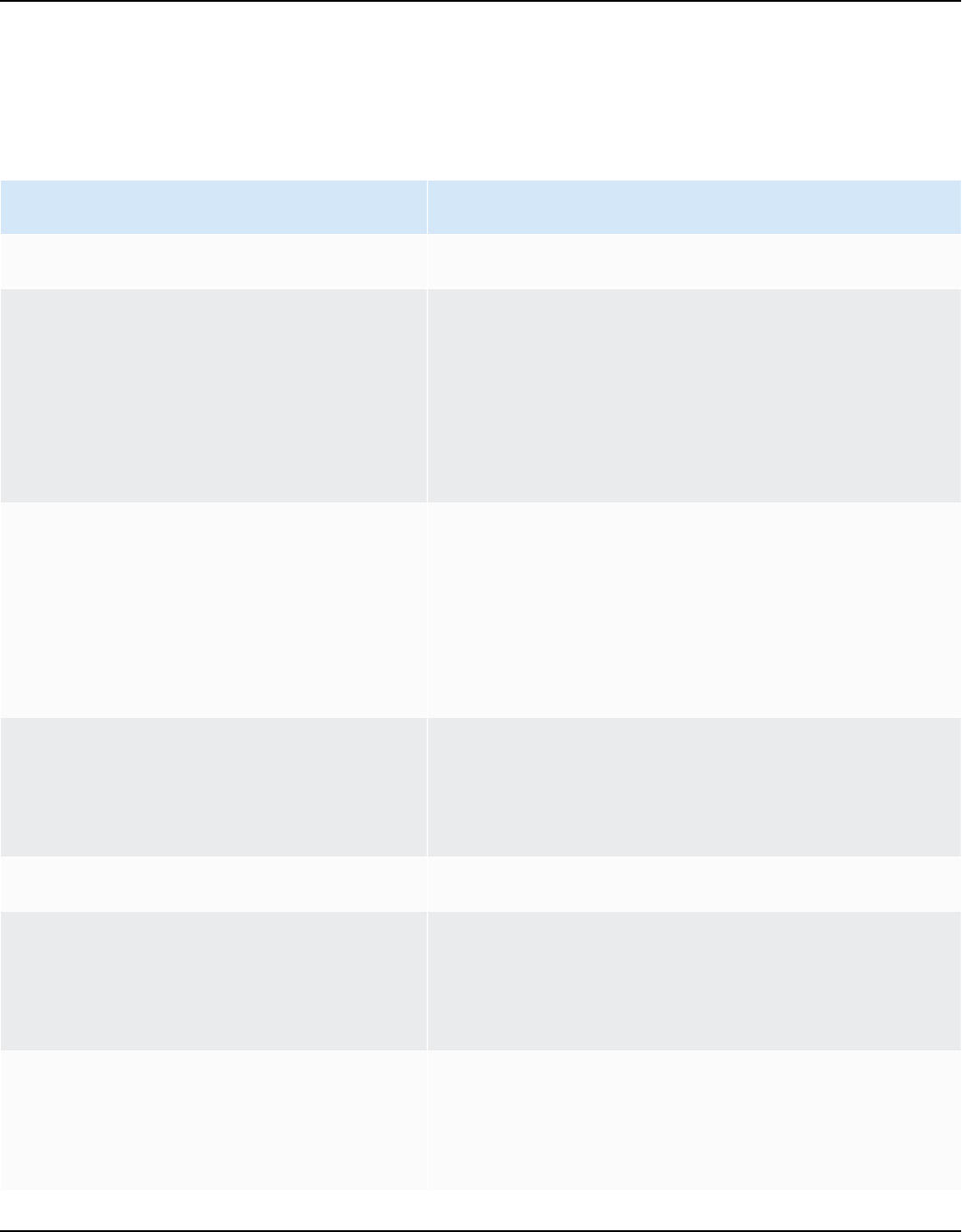

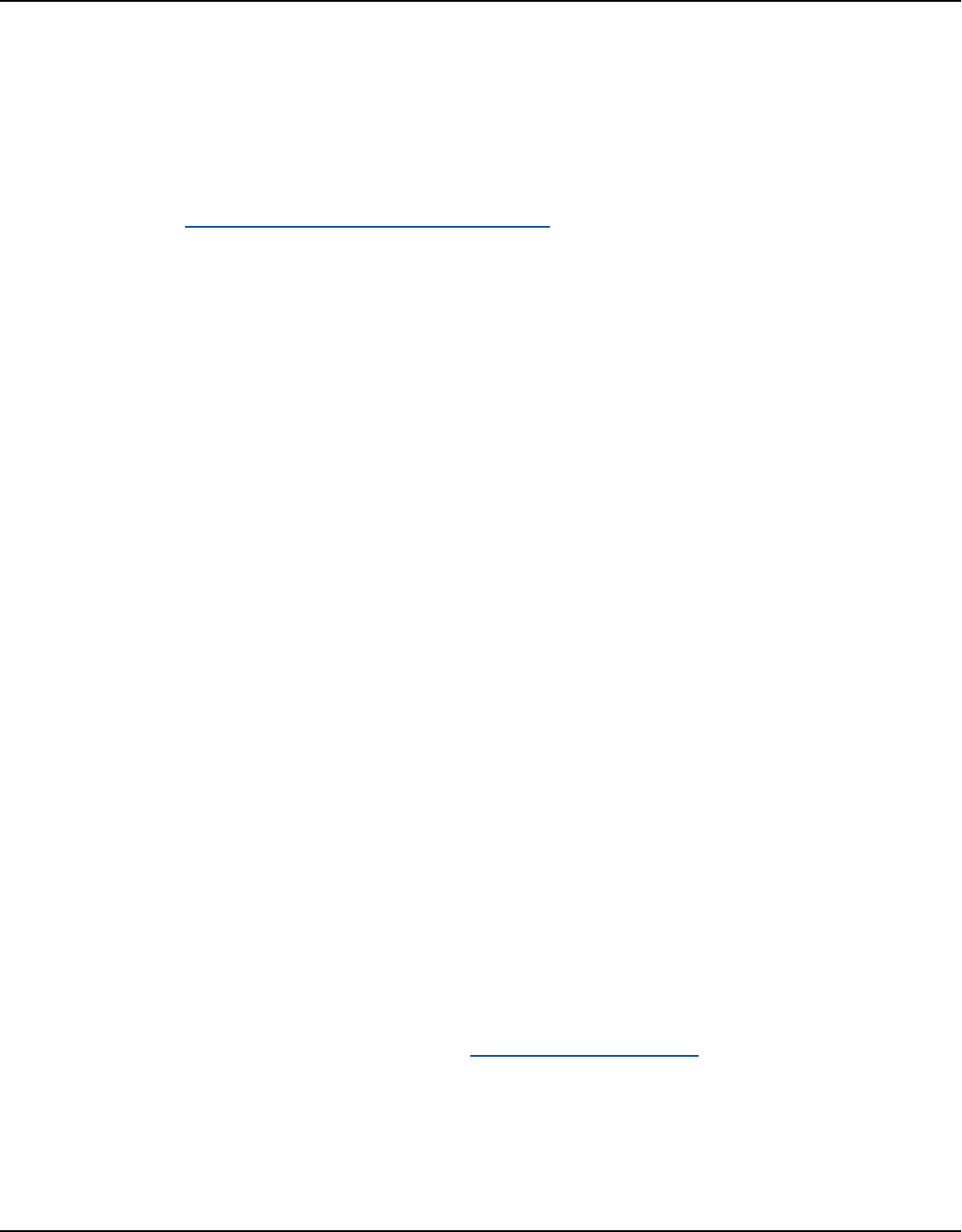

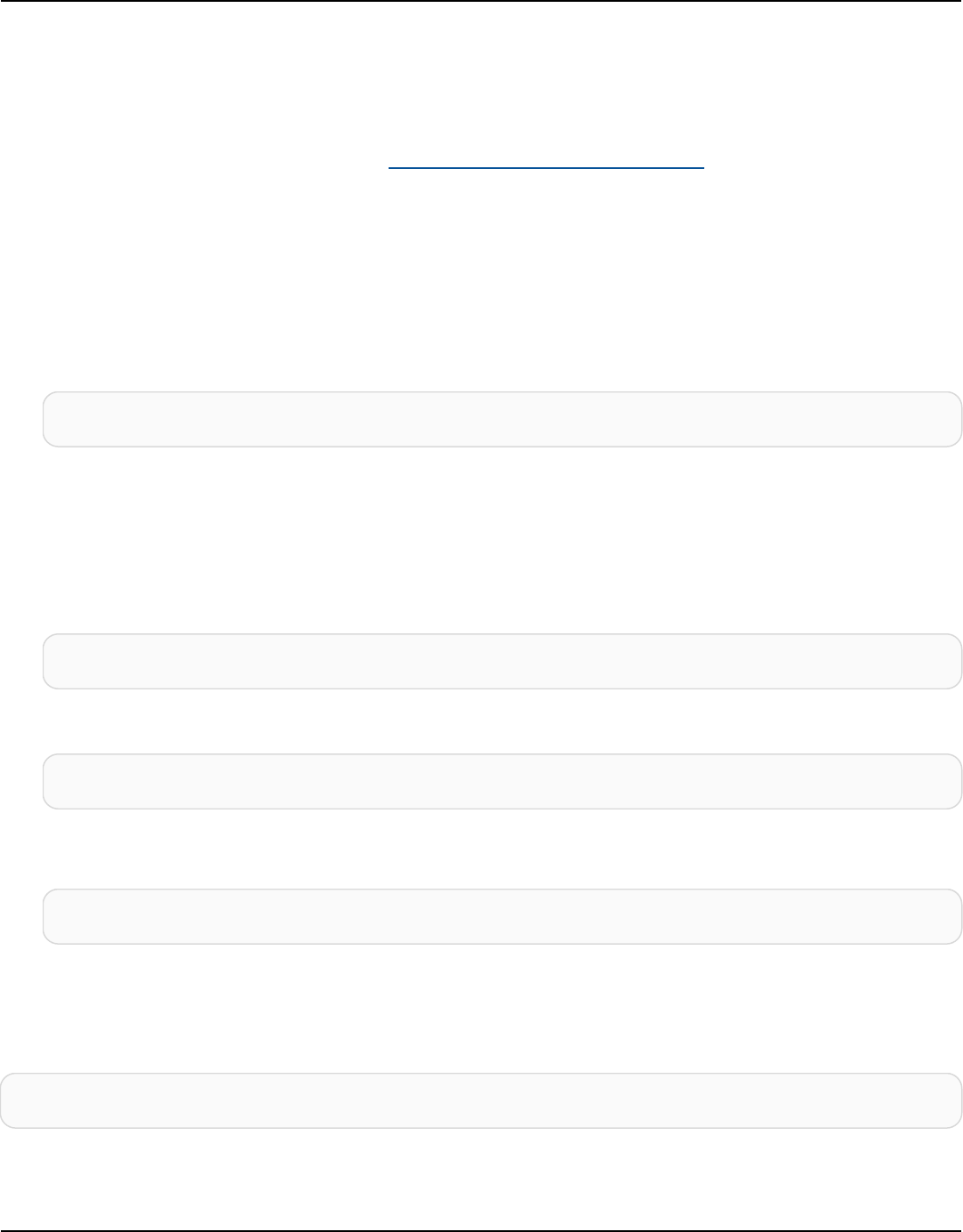

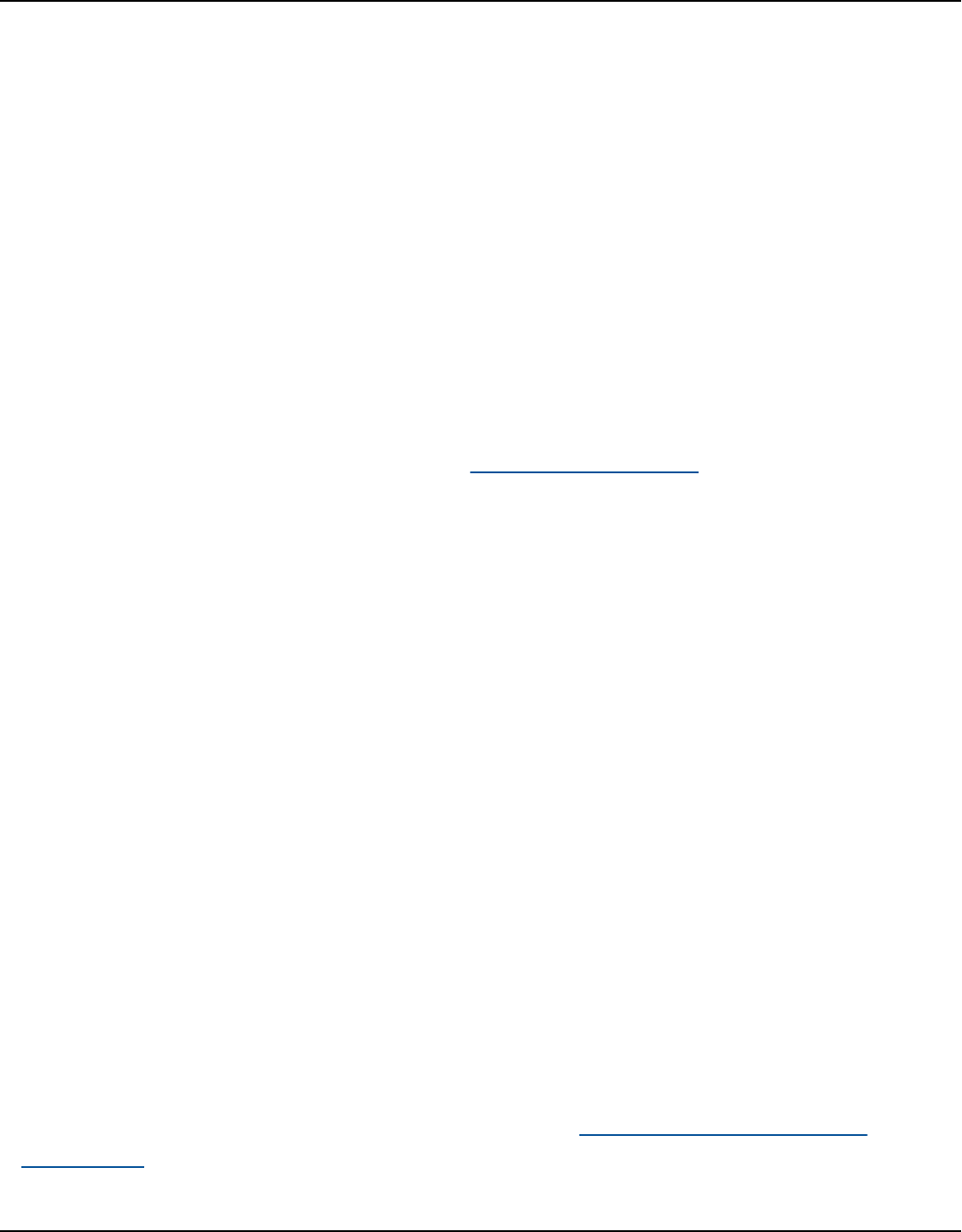

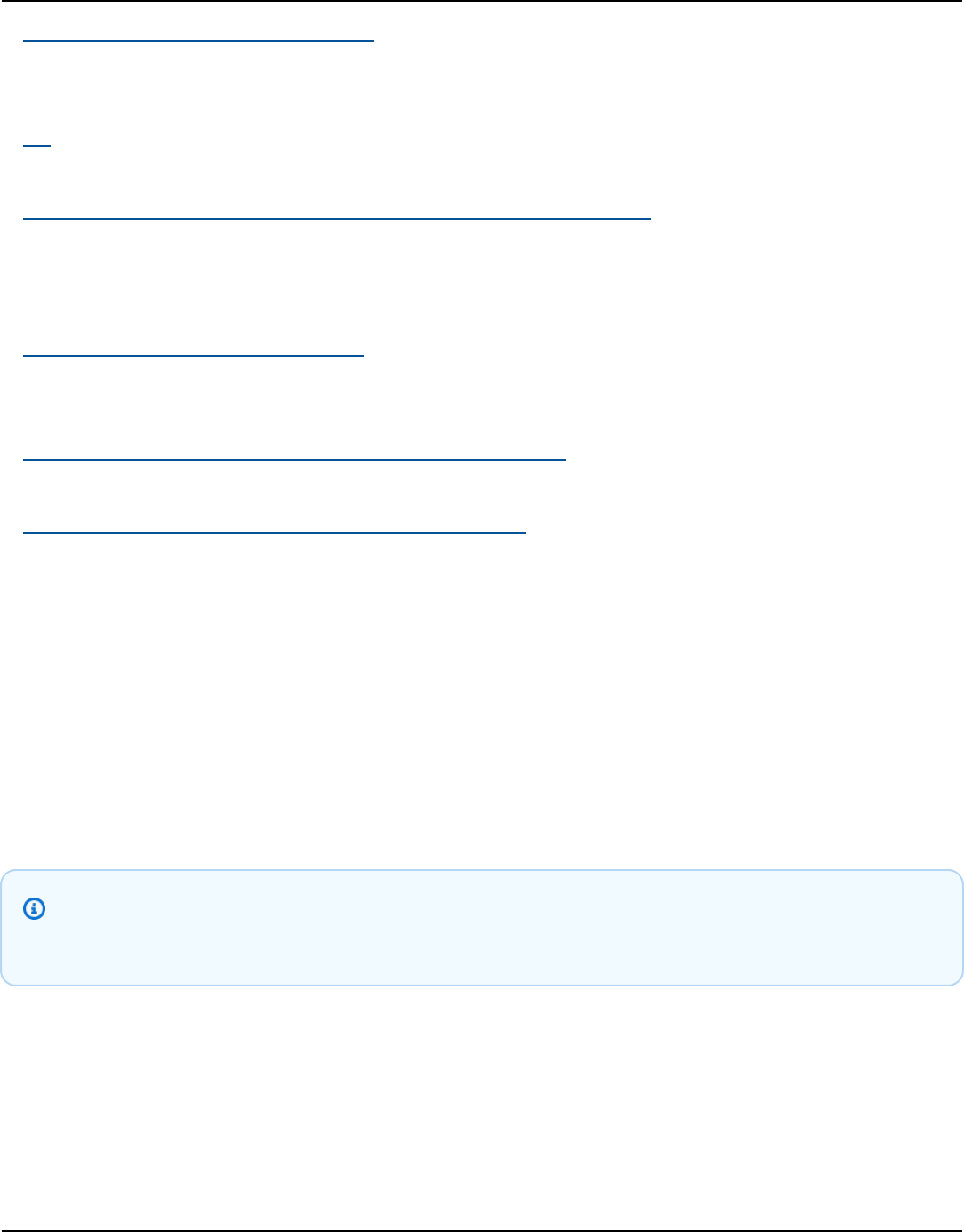

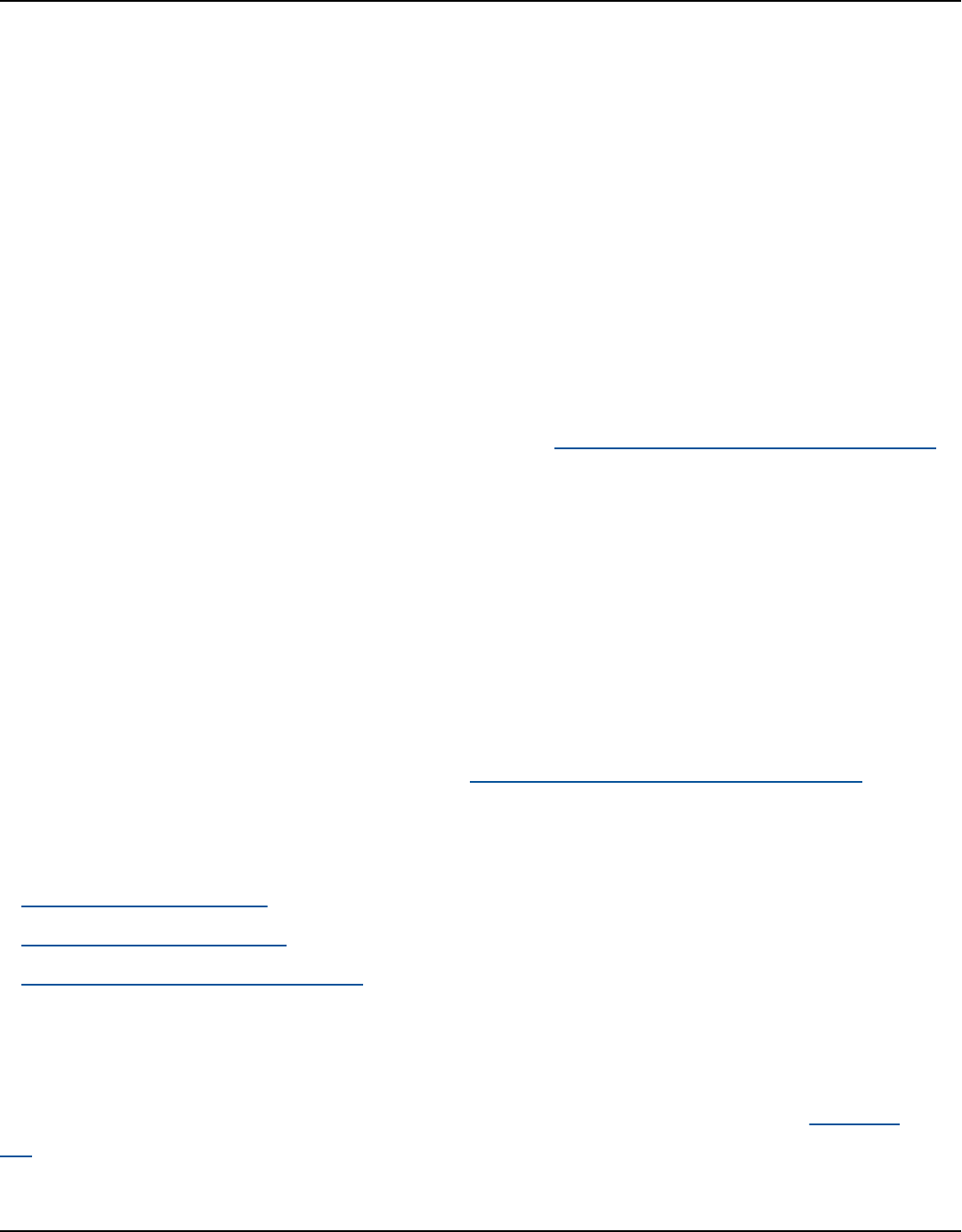

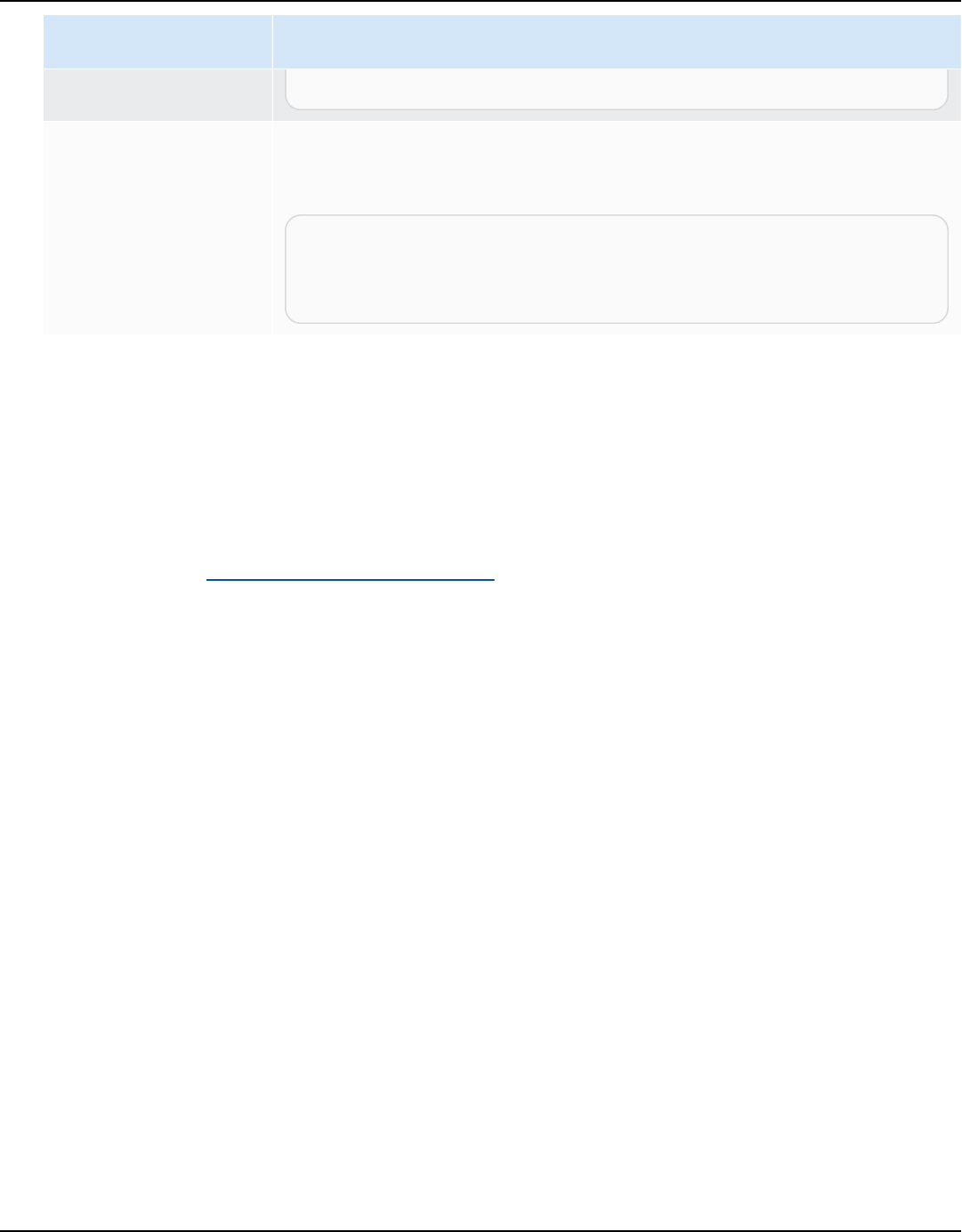

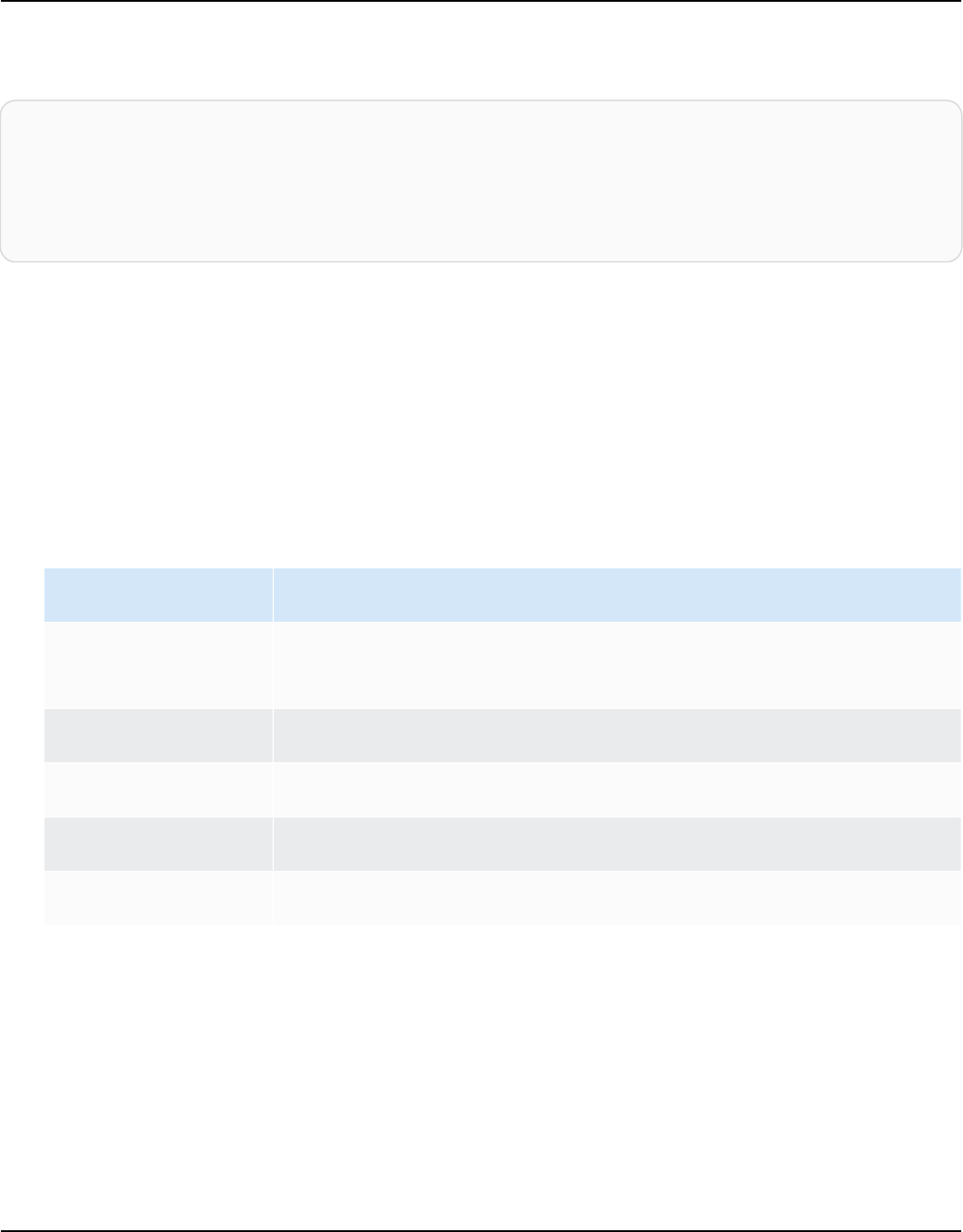

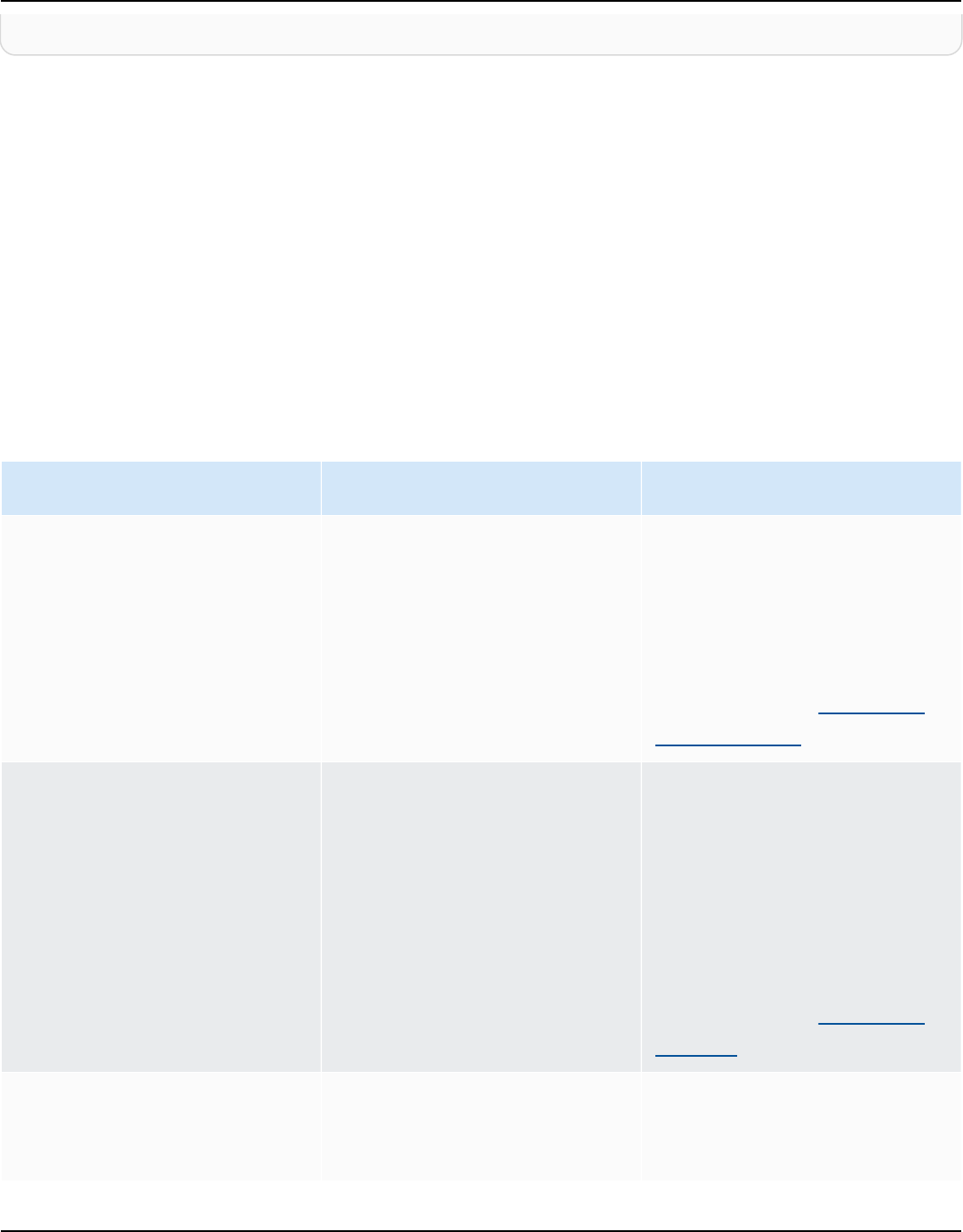

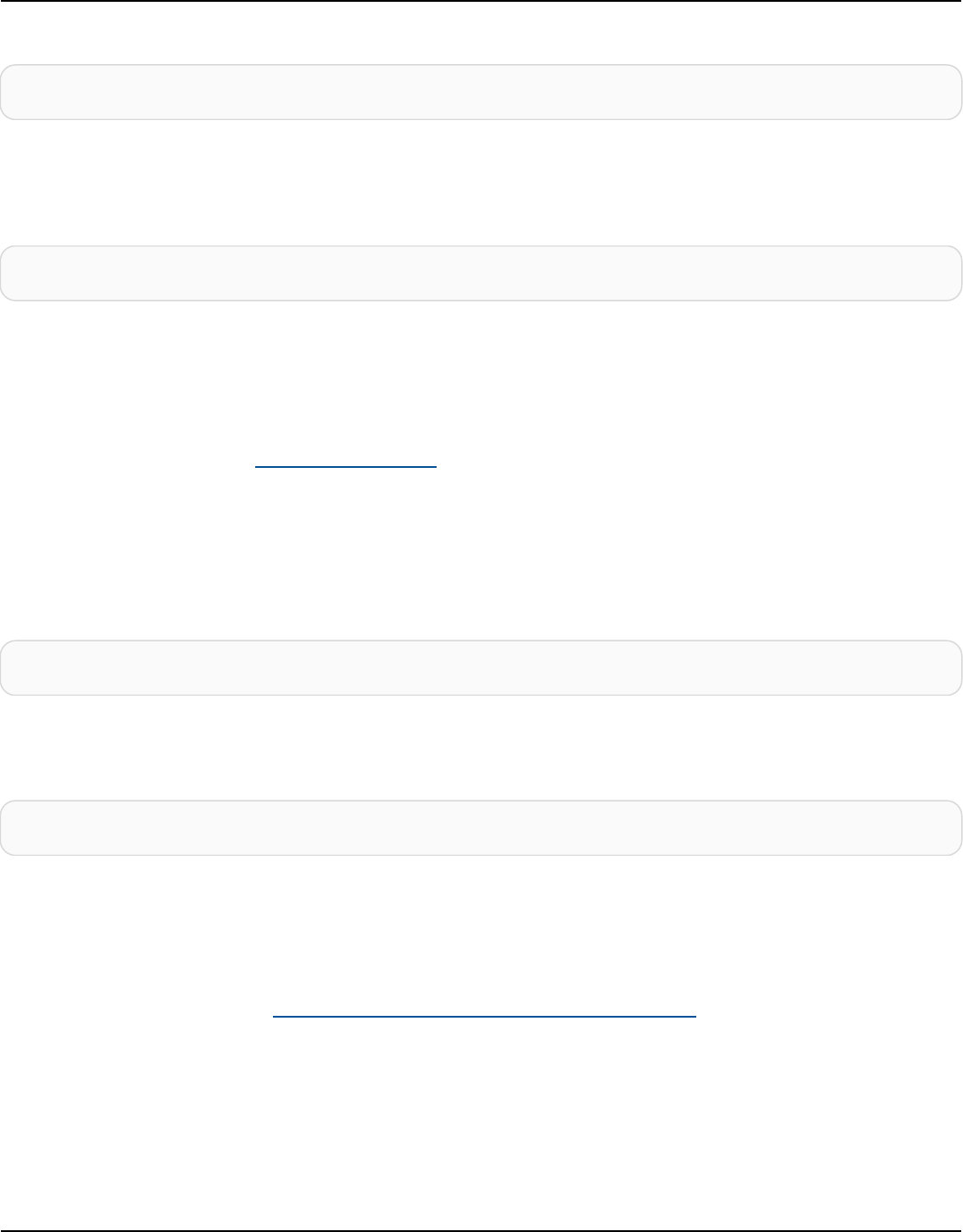

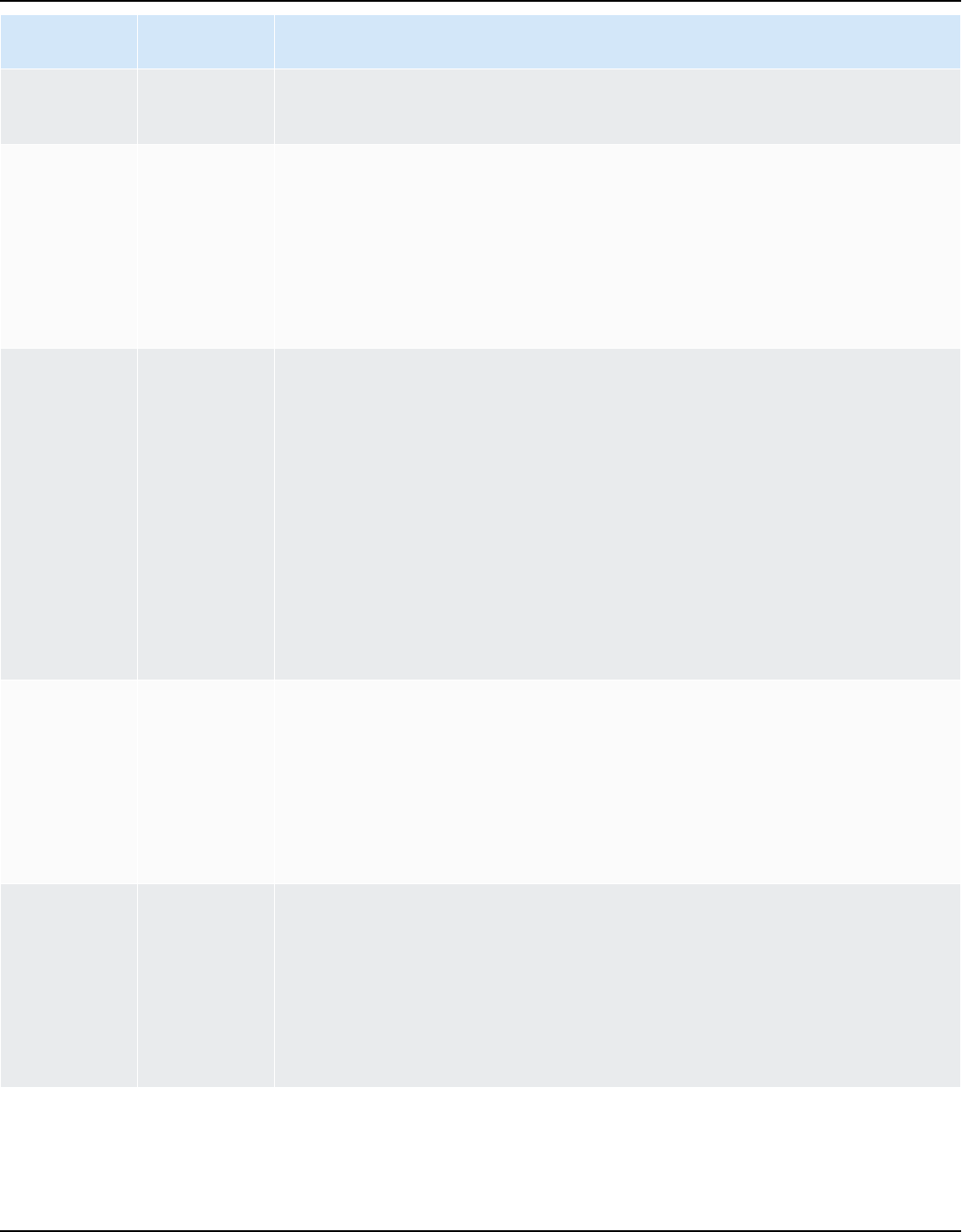

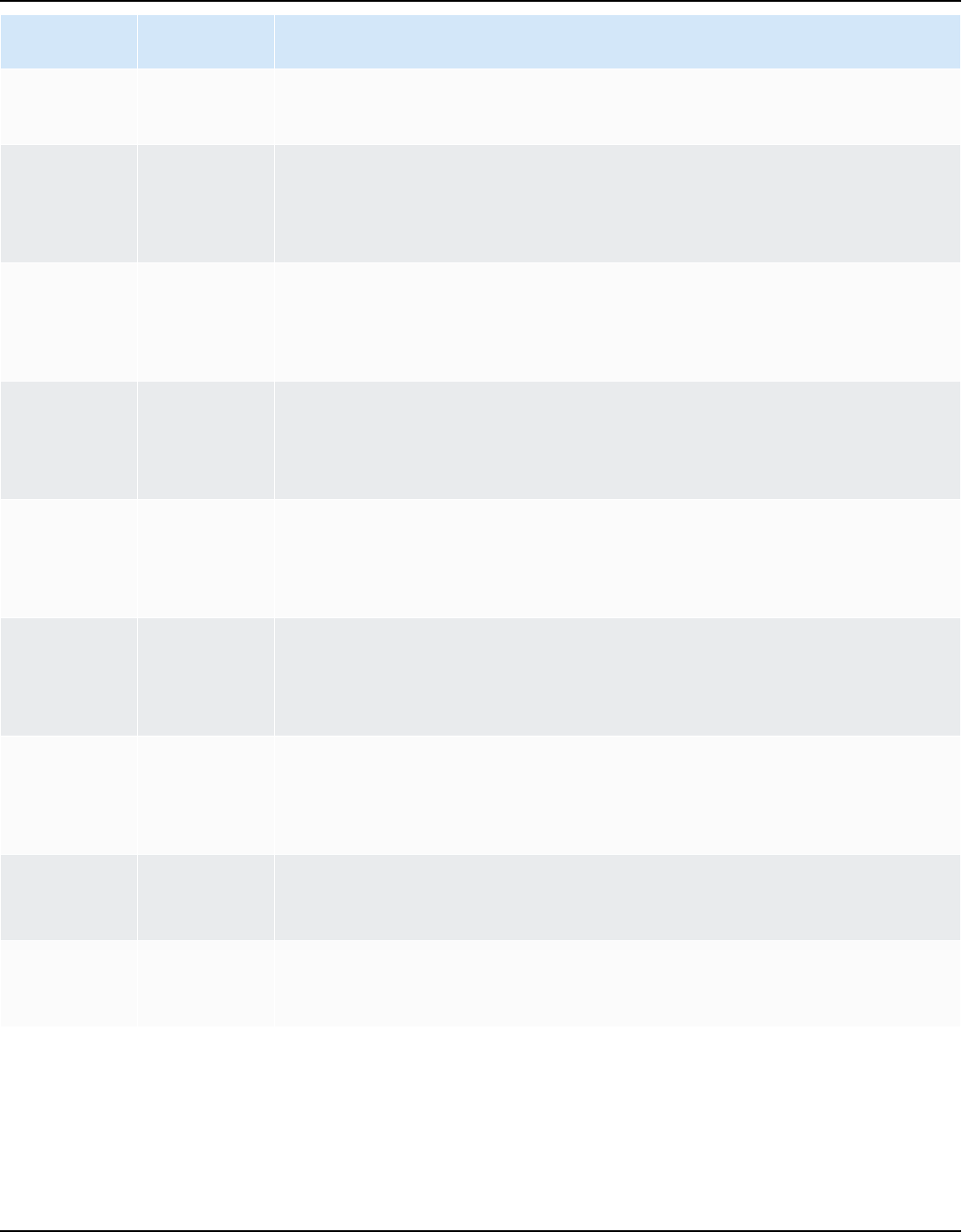

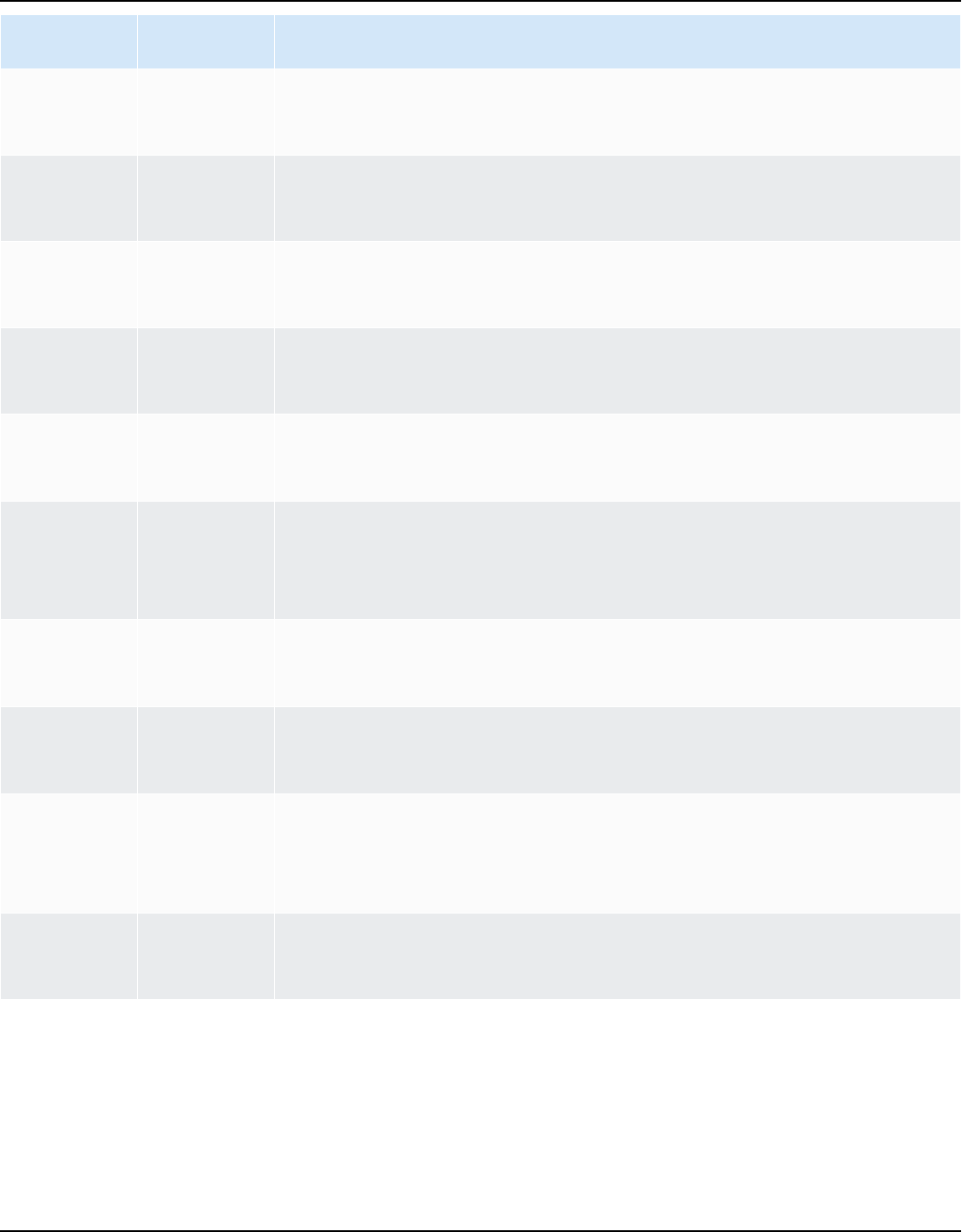

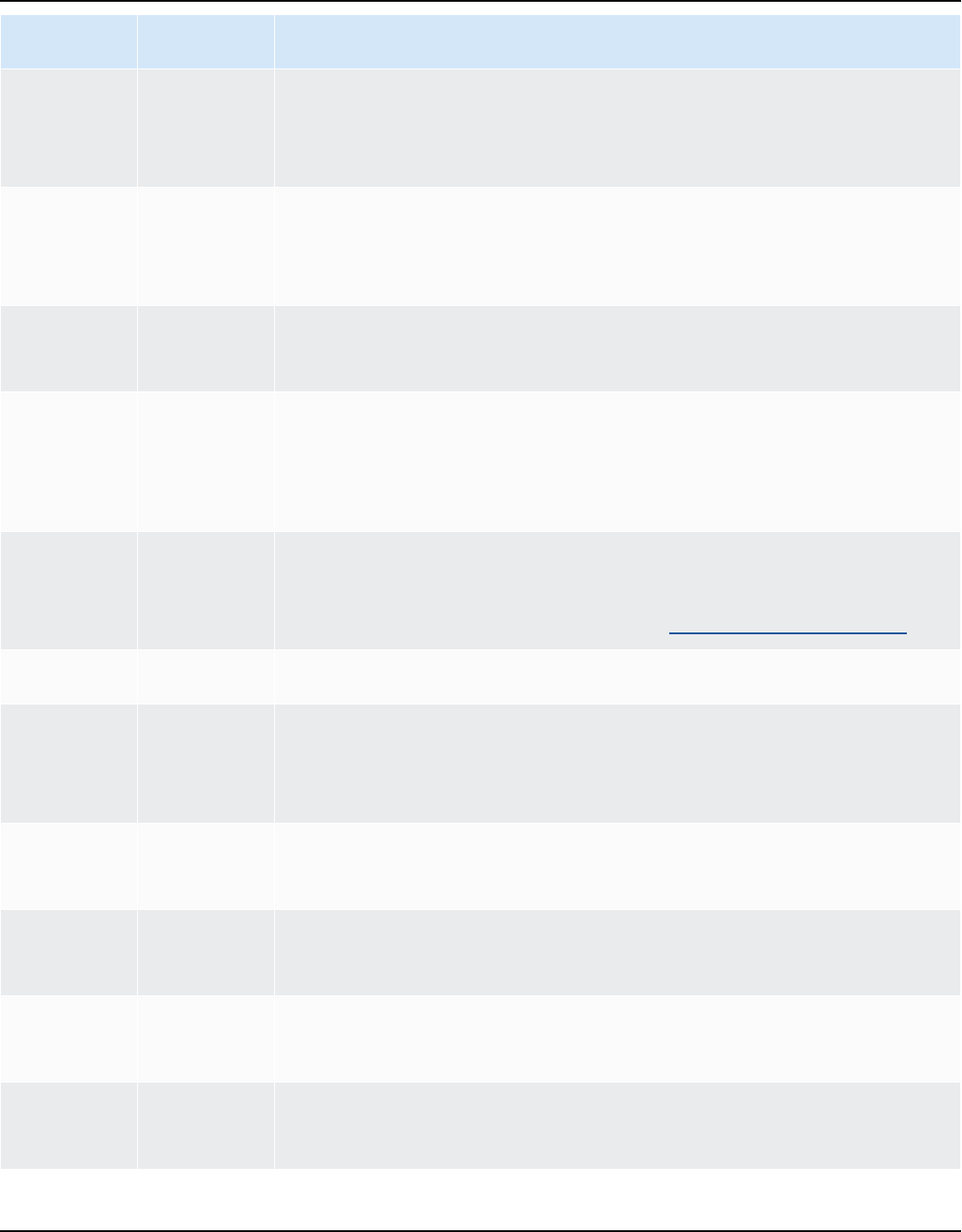

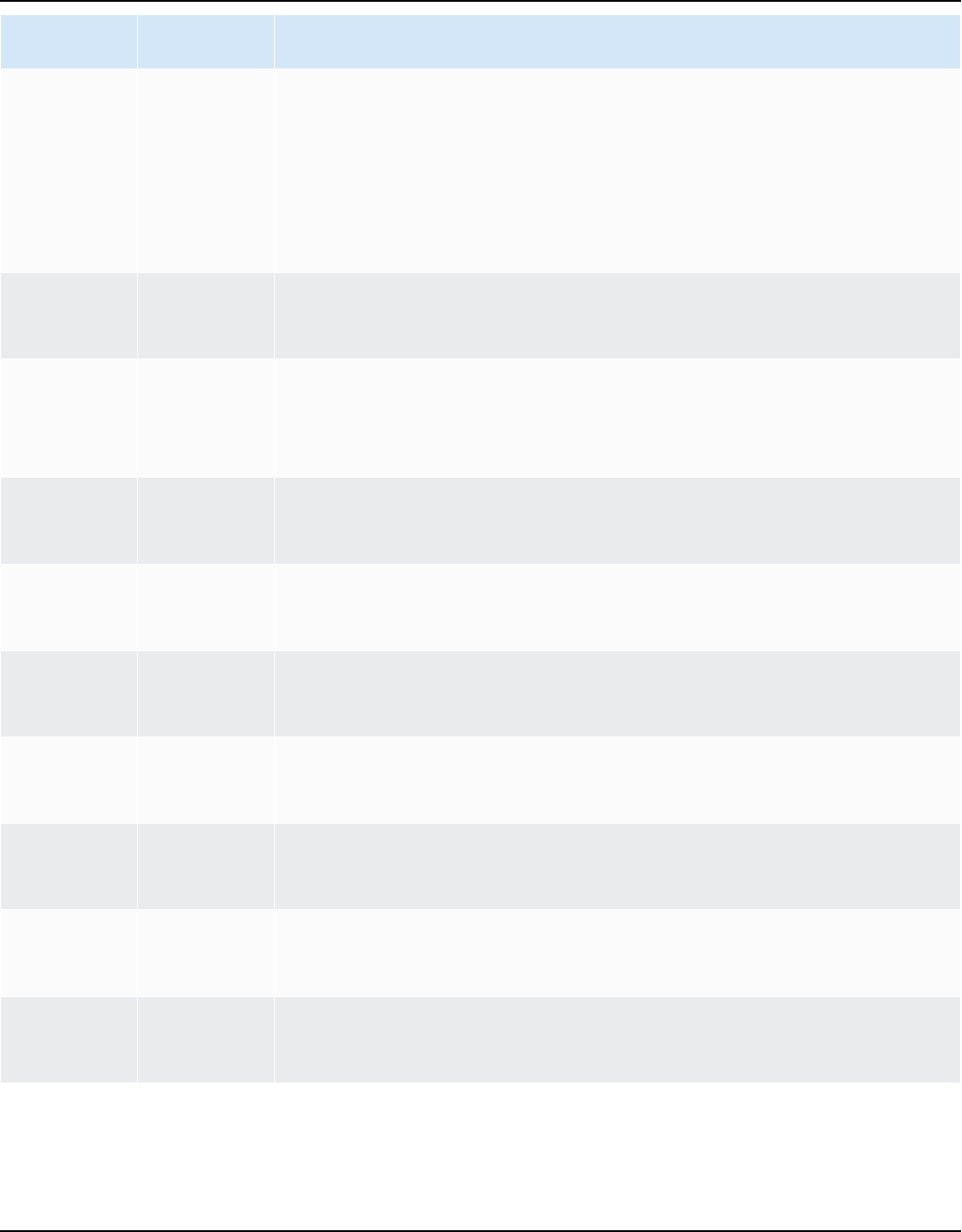

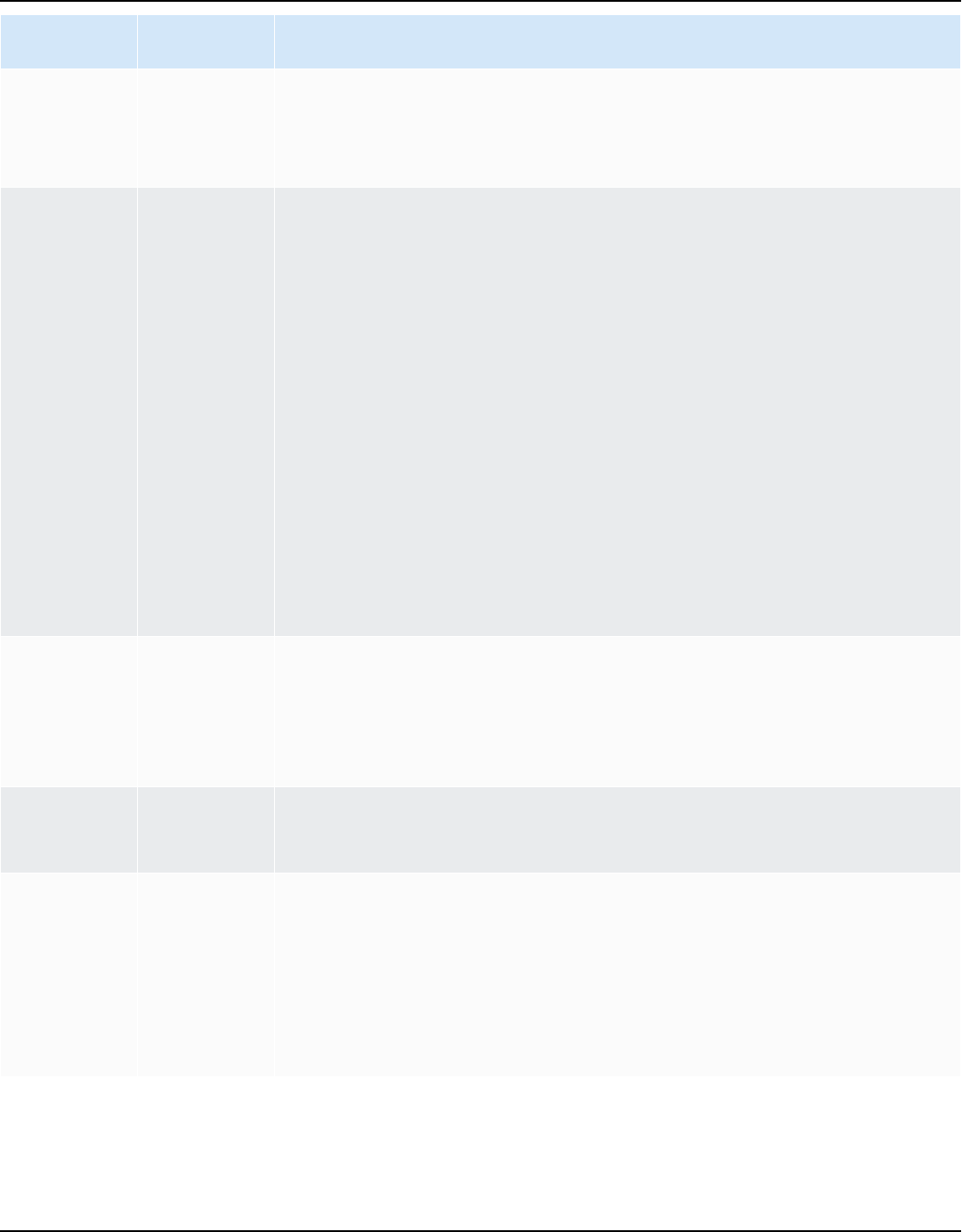

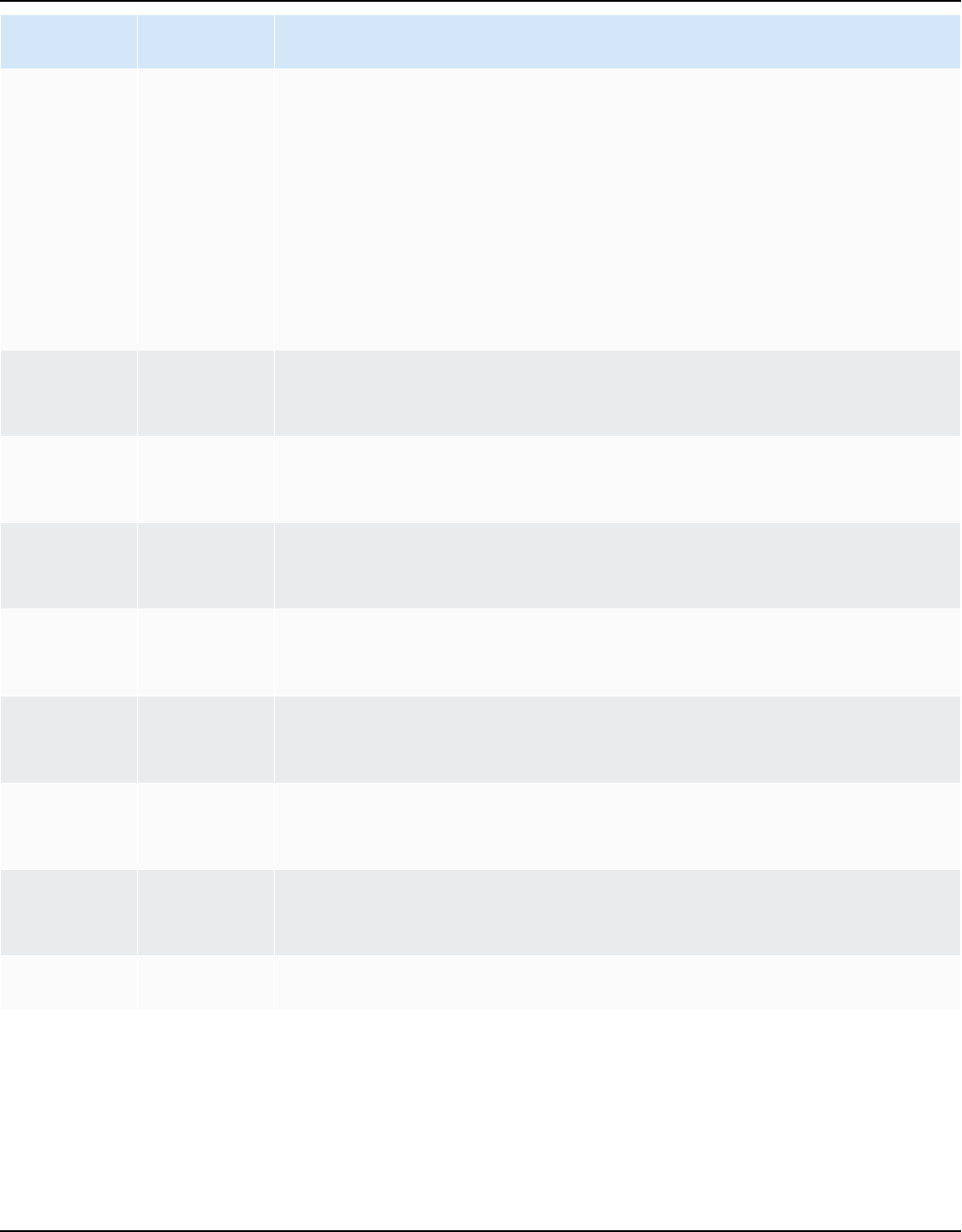

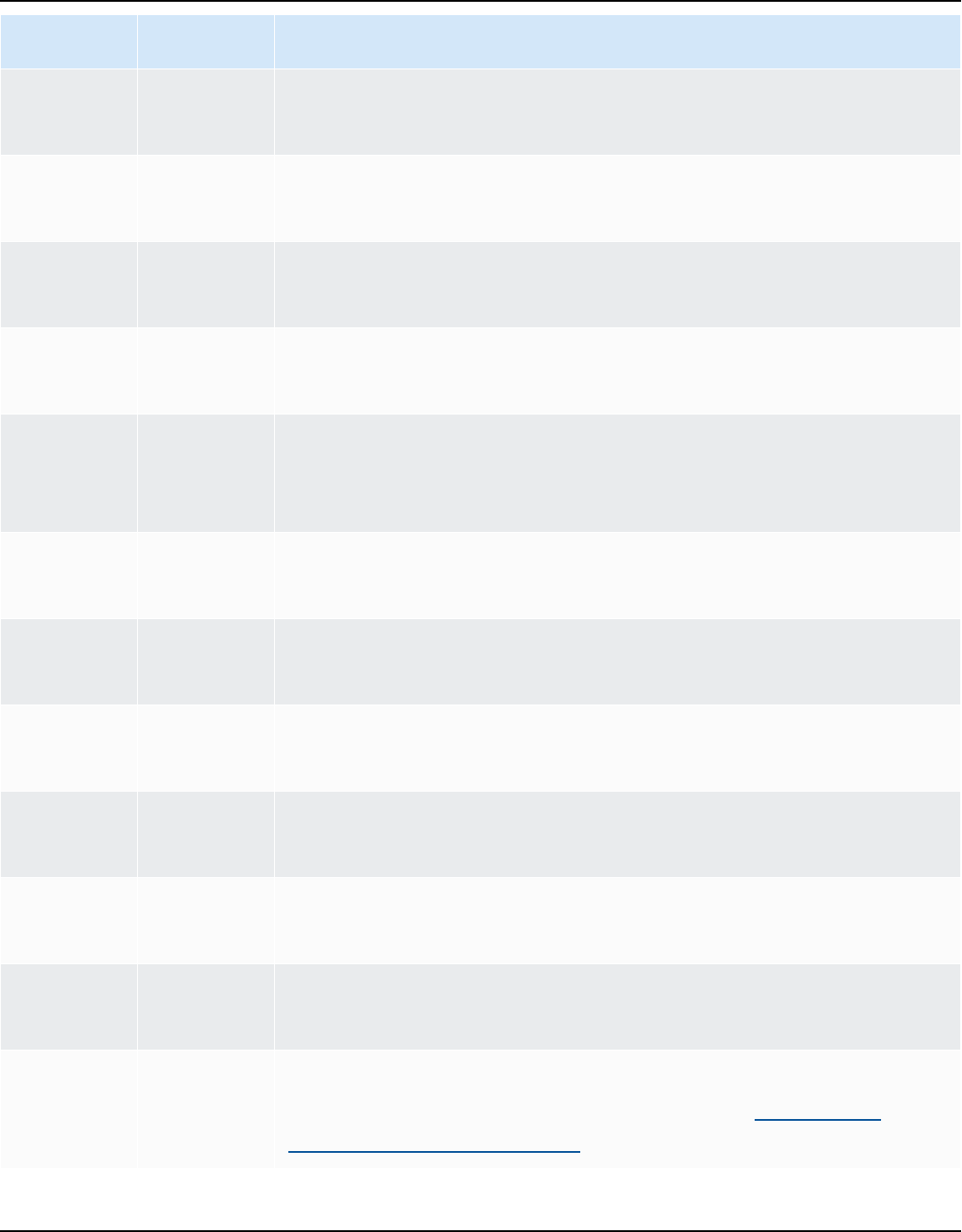

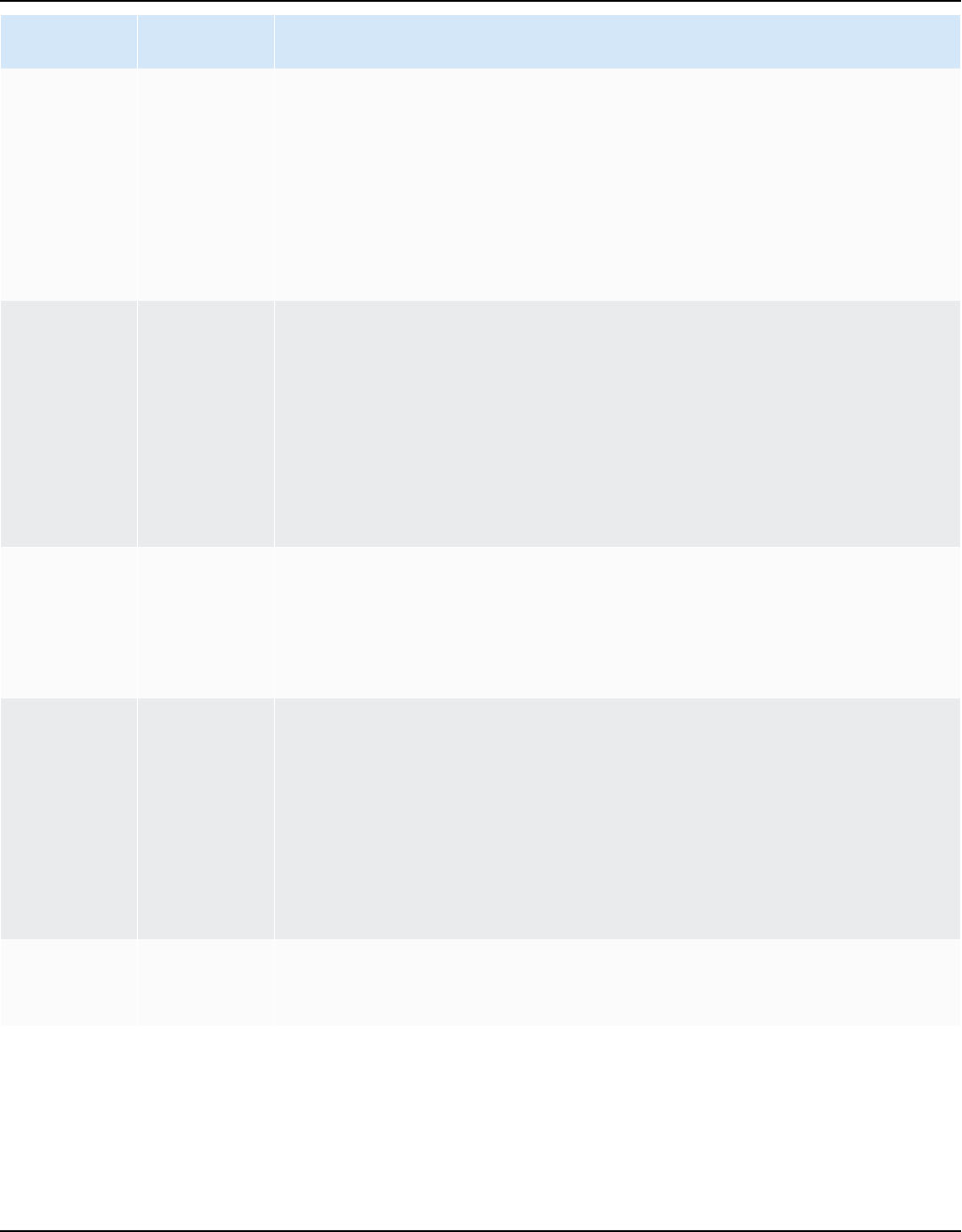

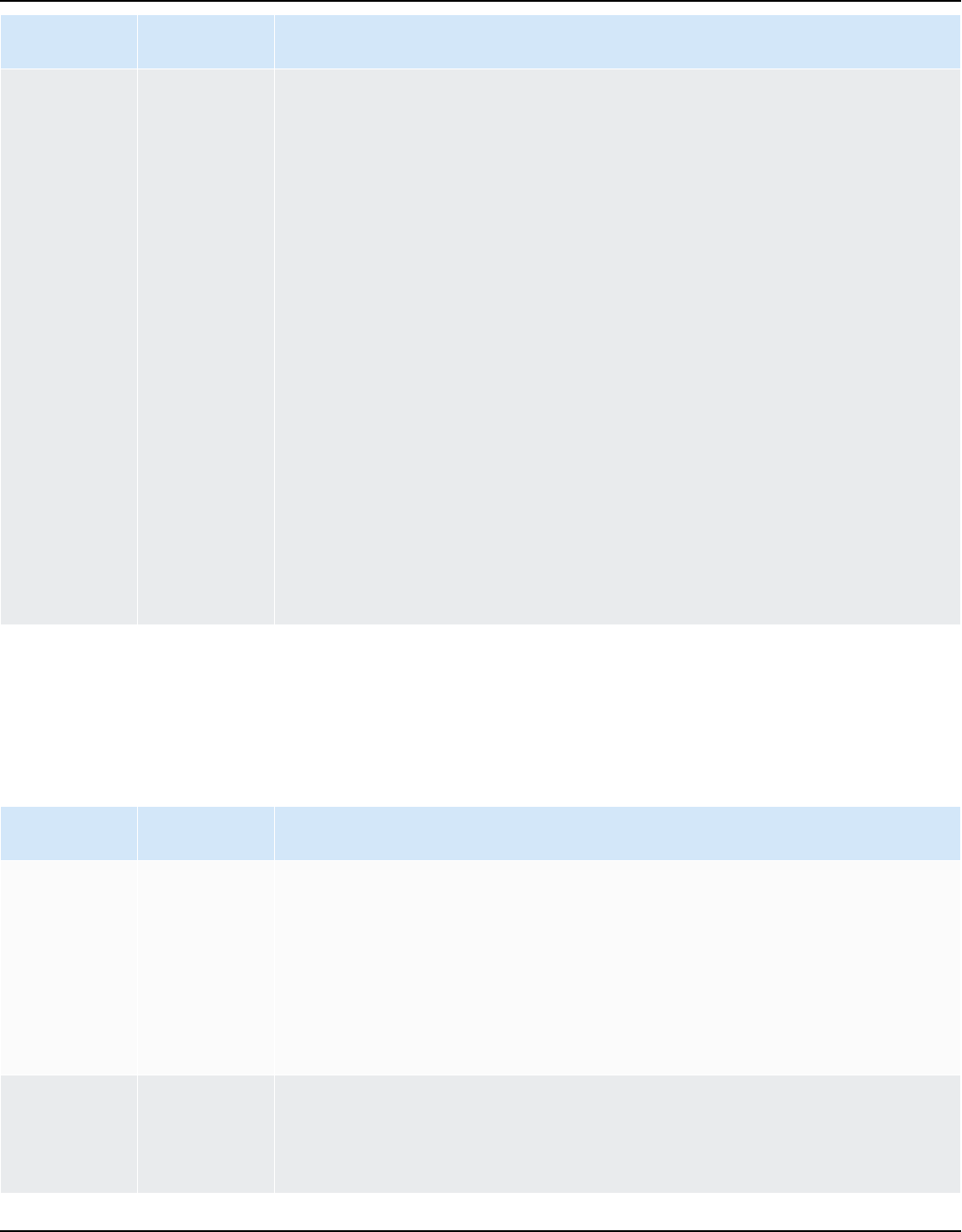

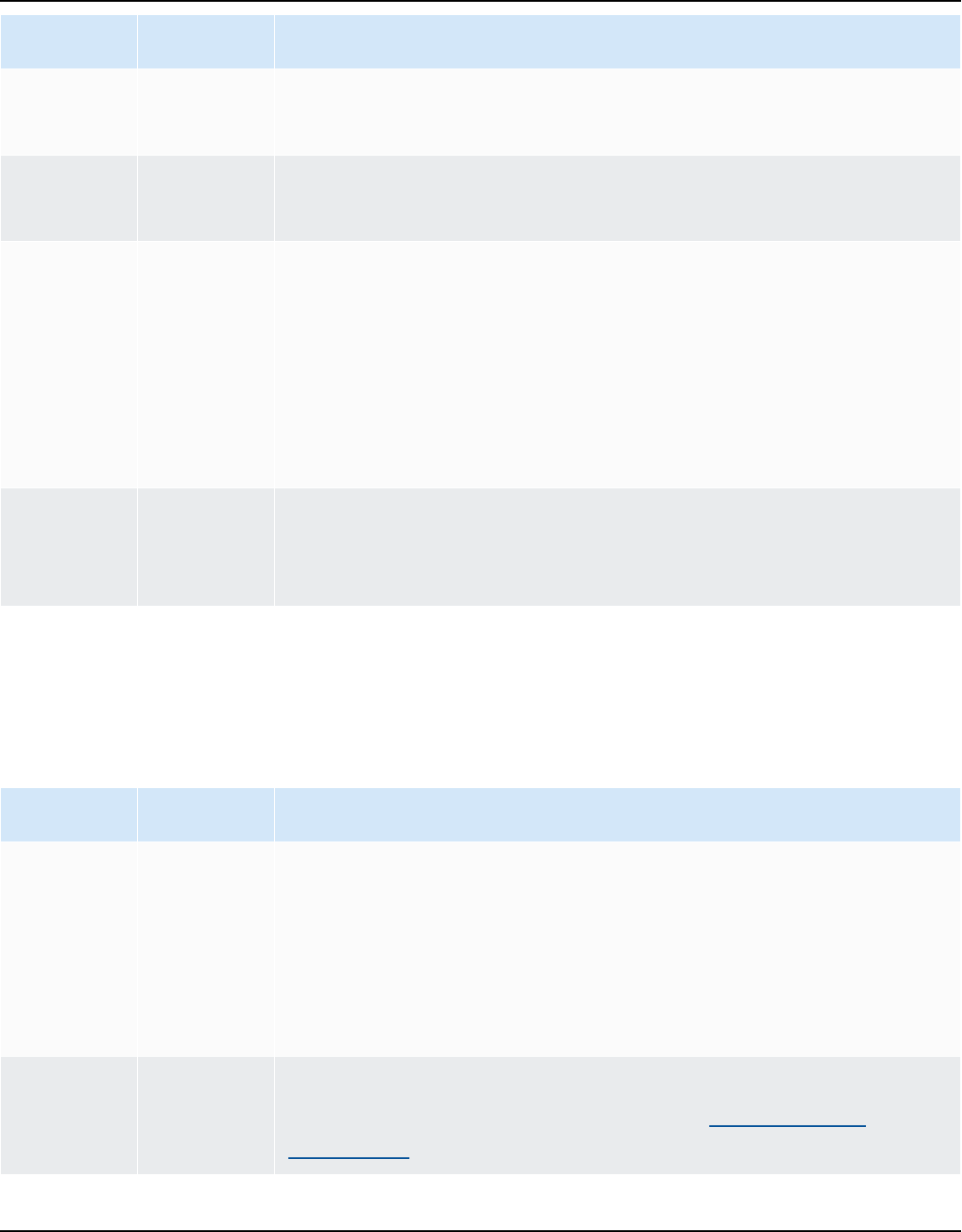

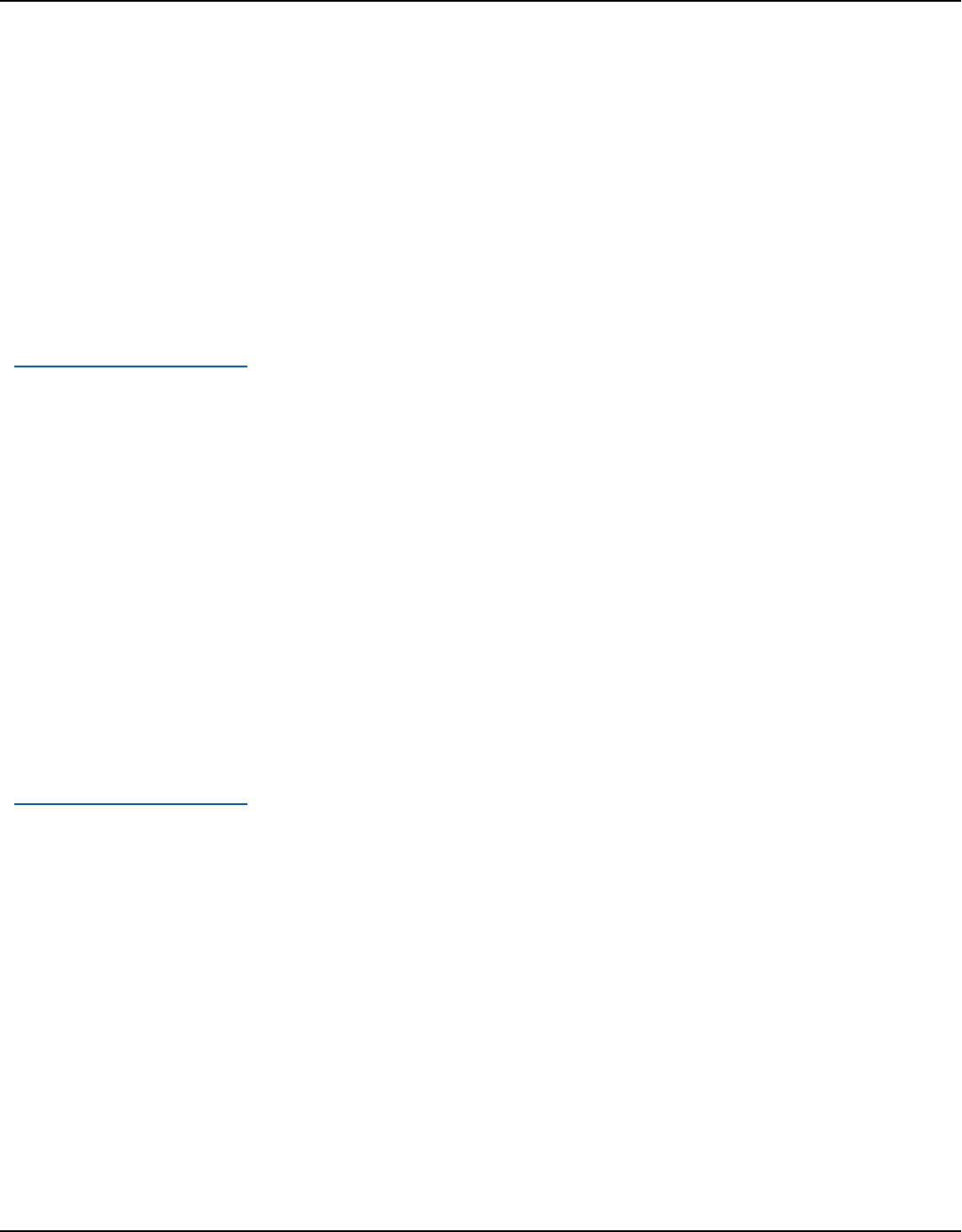

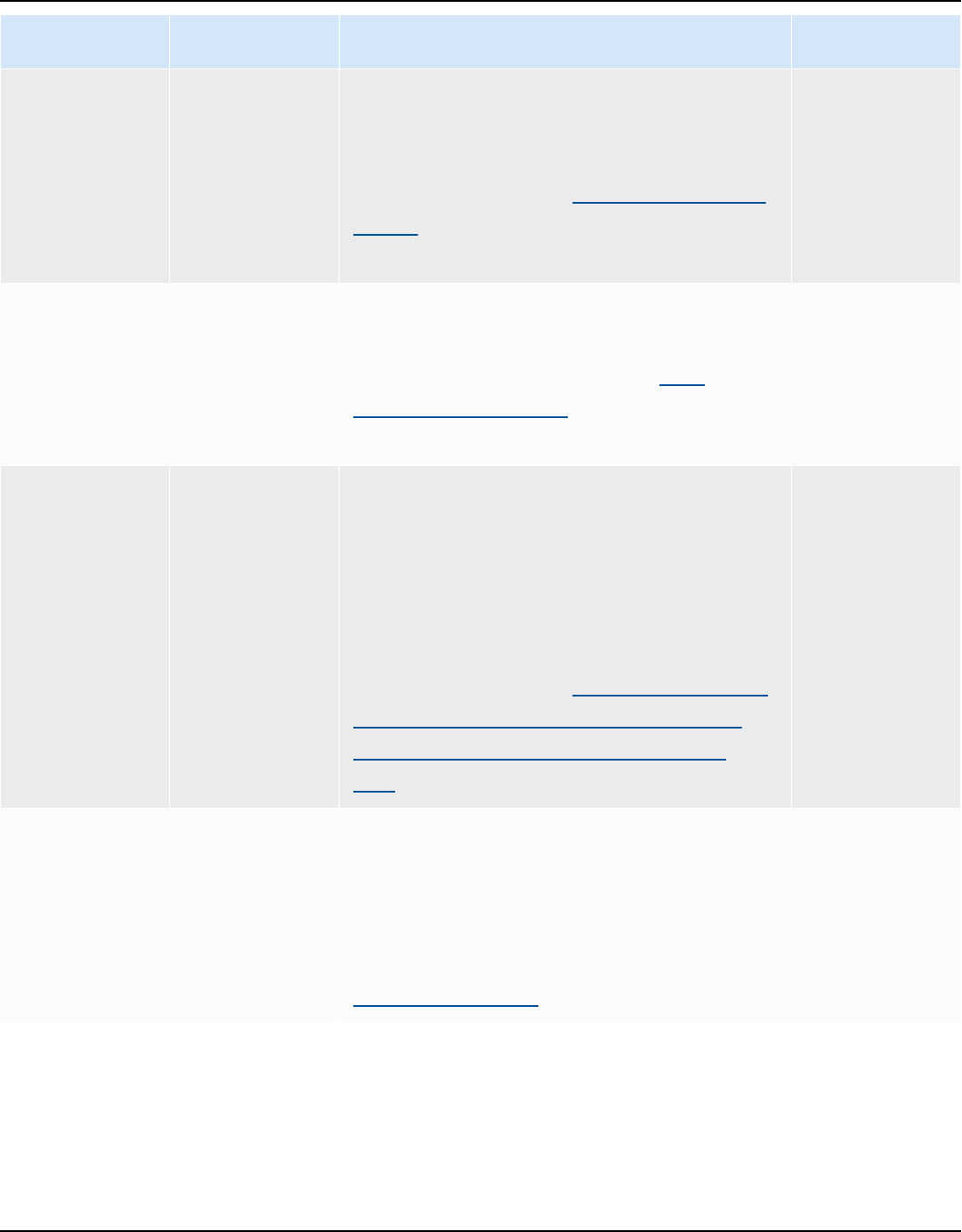

Operating system File name

Fedora Linux

aws-schema-conversion-tool-1.0. build-num

ber .x86_64.rpm

Microsoft Windows

AWS Schema Conversion Tool-1.0. build-number .msi

Ubuntu Linux

aws-schema-conversion-tool-1.0. build-number .deb

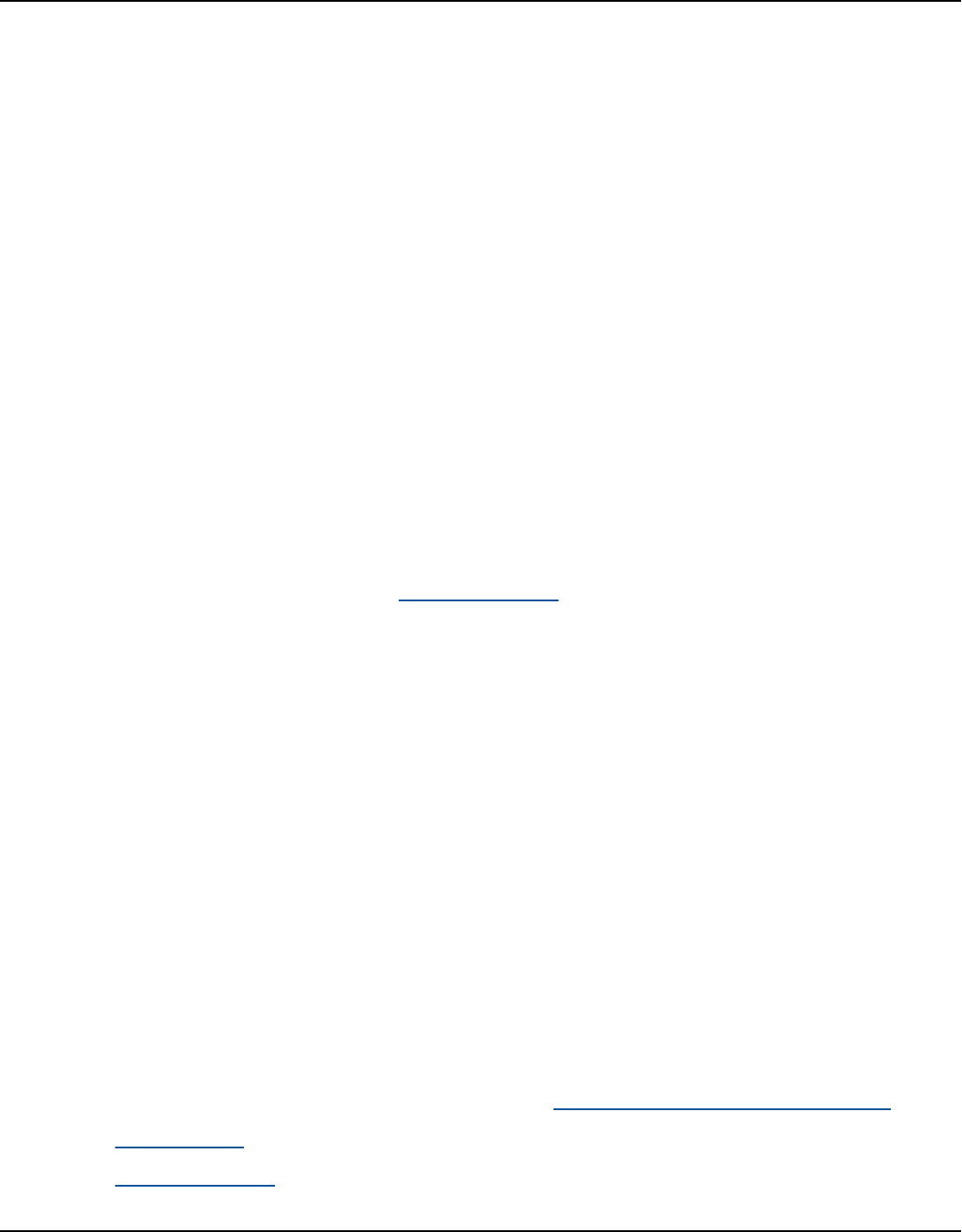

3. Run the AWS SCT installer file extracted in the previous step. Use the instructions for your

operating system, shown following.

Operating system Install instructions

Fedora Linux Run the following command in the folder that you downloaded the

file to:

sudo yum install aws-schema-conversion-tool-

1.0. build-number .x86_64.rpm

Microsoft Windows Double-click the file to run the installer.

Ubuntu Linux Run the following command in the folder that you downloaded the

file to:

sudo dpkg -i aws-schema-conversion-tool-

1.0. build-number .deb

4. Download the Java Database Connectivity (JDBC) drivers for your source and target database

engines. For instructions and download links, see Installing JDBC drivers for AWS Schema

Conversion Tool.

Now, you have completed the setup of the AWS SCT application. Double-click the application icon

to run AWS SCT.

Installing AWS SCT Version 1.0.672 8

AWS Schema Conversion Tool User Guide

Validating the AWS Schema Conversion Tool insttallation

There are several ways you can verify the distribution file of AWS SCT. The simplest is to compare

the checksum of the file with the published checksum from AWS. As an additional level of security,

you can use the procedures following to verify the distribution file, based on the operating system

where you installed the file.

This section includes the following topics.

Topics

• Verifying the checksum of the AWS SCT file

• Verifying the AWS SCT RPM files on Fedora

• Verifying the AWS SCT DEB files on Ubuntu

• Verifying the AWS SCT MSI file on Microsoft Windows

Verifying the checksum of the AWS SCT file

In order to detect any errors that could have been introduced when downloading or storing the

AWS SCT compressed file, you can compare the file checksum with a value provided by AWS. AWS

uses the SHA256 algorithm for the checksum.

To verify the AWS SCT distribution file using a checksum

1. Download the AWS SCT distribution file using the links in the Installing section. For more

information, see Installing AWS Schema Conversion Tool.

2. Download the latest checksum file, called sha256Check.txt. This file includes the checksums

for the latest AWS SCT version. For example, the file can appear as follows:

Fedora b4f5f66f91bfcc1b312e2827e960691c269a9002cd1371cf1841593f88cbb5e6

Ubuntu 4315eb666449d4fcd95932351f00399adb6c6cf64b9f30adda2eec903c54eca4

Windows 6e29679a3c53c5396a06d8d50f308981e4ec34bd0acd608874470700a0ae9a23

3. Run the SHA256 validation command for your operating system in the directory that contains

the distribution file. For example, run the following command in Linux.

Validating installation Version 1.0.672 9

AWS Schema Conversion Tool User Guide

shasum -a 256 aws-schema-conversion-tool-1.0.latest.zip

4. Compare the results of the command with the value shown in the sha256Check.txt file. If the

checksums match, then it is safe to run the distribution file. If the checksums don't match, then

don't run the distribution file, and contact AWS Support.

Verifying the AWS SCT RPM files on Fedora

AWS provides another level of validation in addition to the distribution file checksum. All RPM

files in the distribution file are signed by an AWS private key. The public GPG key can be viewed at

amazon.com.public.gpg-key.

To verify the AWS SCT RPM files on Fedora

1. Download the AWS SCT distribution file using the links in the Installing section.

2. Verify the checksum of the AWS SCT distribution file.

3. Extract the contents of the distribution file. Locate the RPM file you want to verify.

4. Download GPG public key from amazon.com.public.gpg-key

5. Import the public key to your RPM DB (make sure you have the appropriate permissions) by

using the following command:

sudo rpm --import [email protected]

6. Check that the import was successful by running the following command:

rpm -q --qf "%{NAME}-%{VERSION}-%{RELEASE} \n %{SUMMARY} \n" gpg-pubkey-

ea22abf4-5a21d30c

7. Check the RPM signature by running the following command:

rpm --checksig -v aws-schema-conversion-tool-1.0.build number-1.x86_64.rpm

Verifying the AWS SCT DEB files on Ubuntu

AWS provides another level of validation in addition to the distribution file checksum. All DEB files

in the distribution file are signed by a GPG detached signature.

Verifying the AWS SCT RPM files on Fedora Version 1.0.672 10

AWS Schema Conversion Tool User Guide

To verify the AWS SCT DEB files on Ubuntu

1. Download the AWS SCT distribution file using the links in the Installing section.

2. Verifying the checksum of the AWS SCT distribution file.

3. Extract the contents of the distribution file. Locate the DEB file you want to verify.

4. Download the detached signature from aws-schema-conversion-tool-1.0.latest.deb.asc.

5. Download the GPG public key from amazon.com.public.gpg-key.

6. Import the GPG public key by running the following command:

gpg --import [email protected]

7. Verify the signature by running the following command:

gpg --verify aws-schema-conversion-tool-1.0.latest.deb.asc aws-schema-conversion-

tool-1.0.build number.deb

Verifying the AWS SCT MSI file on Microsoft Windows

AWS provides another level of validation in addition to the distribution file checksum. The MSI file

has a digital signature you can check to ensure it was signed by AWS.

To verify the AWS SCT MSI file on Windows

1. Download the AWS SCT distribution file using the links in the Installing section.

2. Verifying the checksum of the AWS SCT distribution file.

3. Extract the contents of the distribution file. Locate the MSI file you want to verify.

4. In Windows Explorer, right-click the MSI file and select Properties.

5. Choose the Digital Signatures tab.

6. Verify that the digital signature is from Amazon Services LLC.

Installing JDBC drivers for AWS Schema Conversion Tool

For AWS SCT to work correctly, download the JDBC drivers for your source and target database

engines. If you use a virtual target database platform, you don't need to download the JDBC driver

Verifying the AWS SCT MSI file on Microsoft Windows Version 1.0.672 11

AWS Schema Conversion Tool User Guide

for your target database engine. For more information, see Mapping to virtual targets in the AWS

Schema Conversion Tool.

After you download the drivers, you give the location of the driver files. For more information, see

Storing driver paths in the global settings.

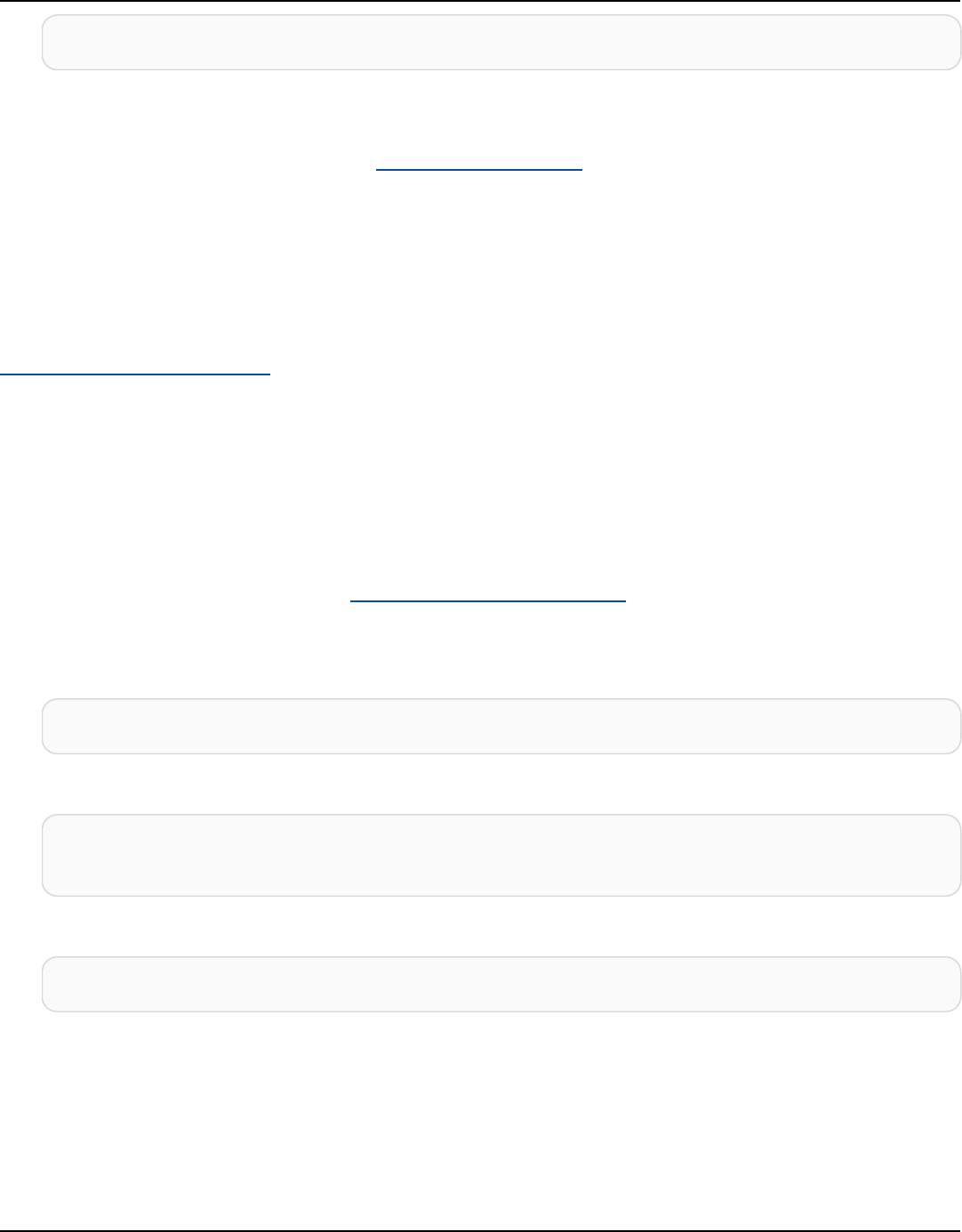

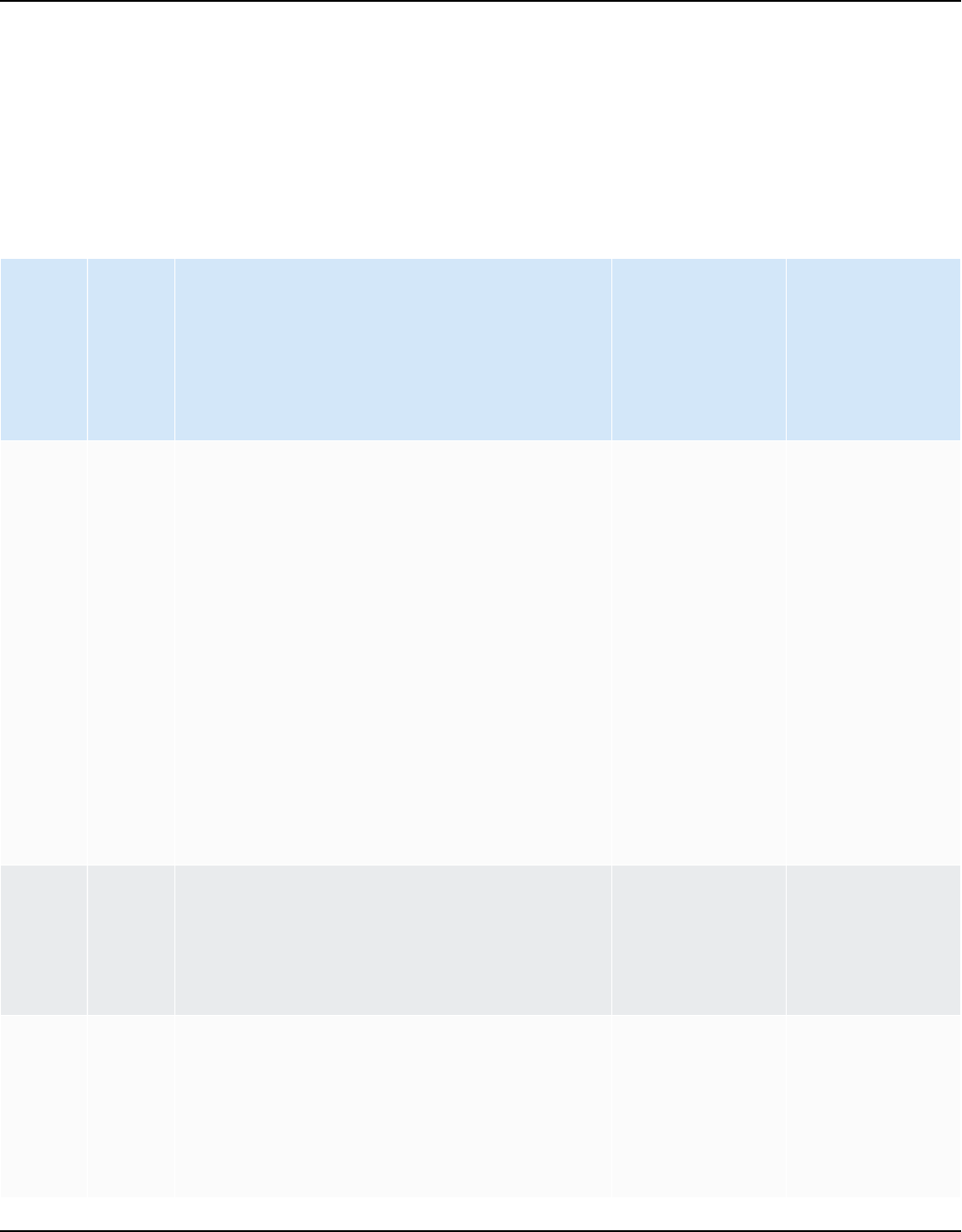

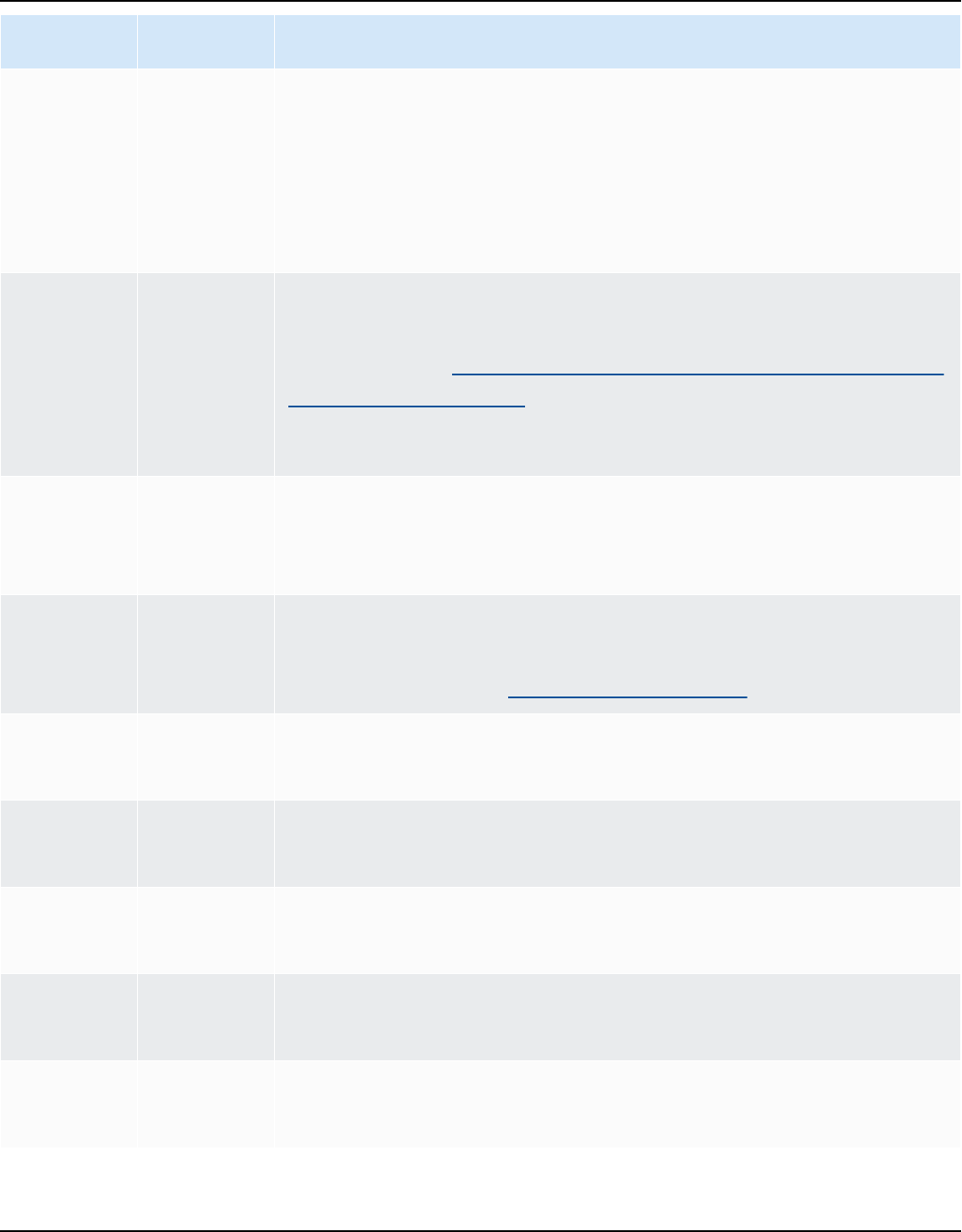

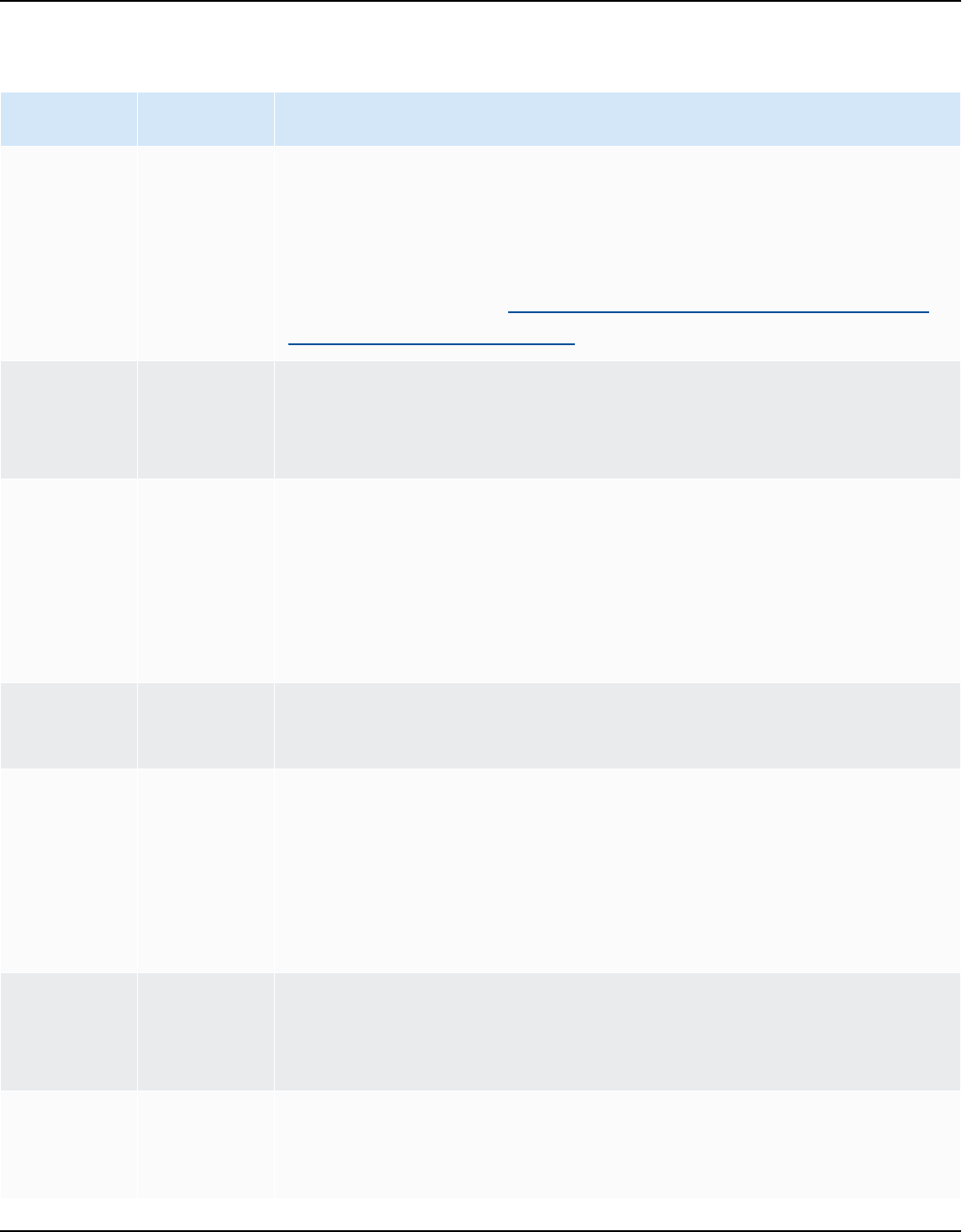

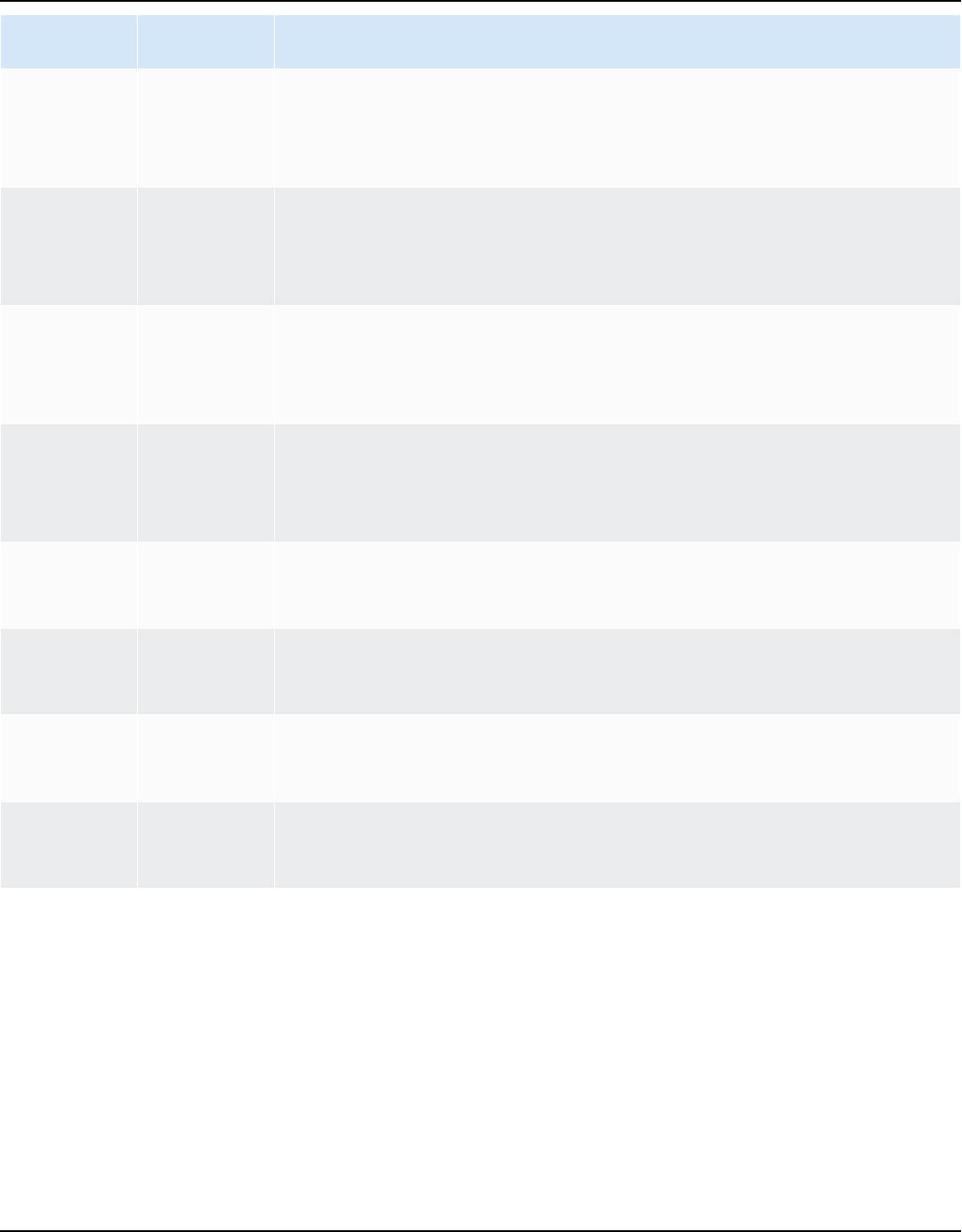

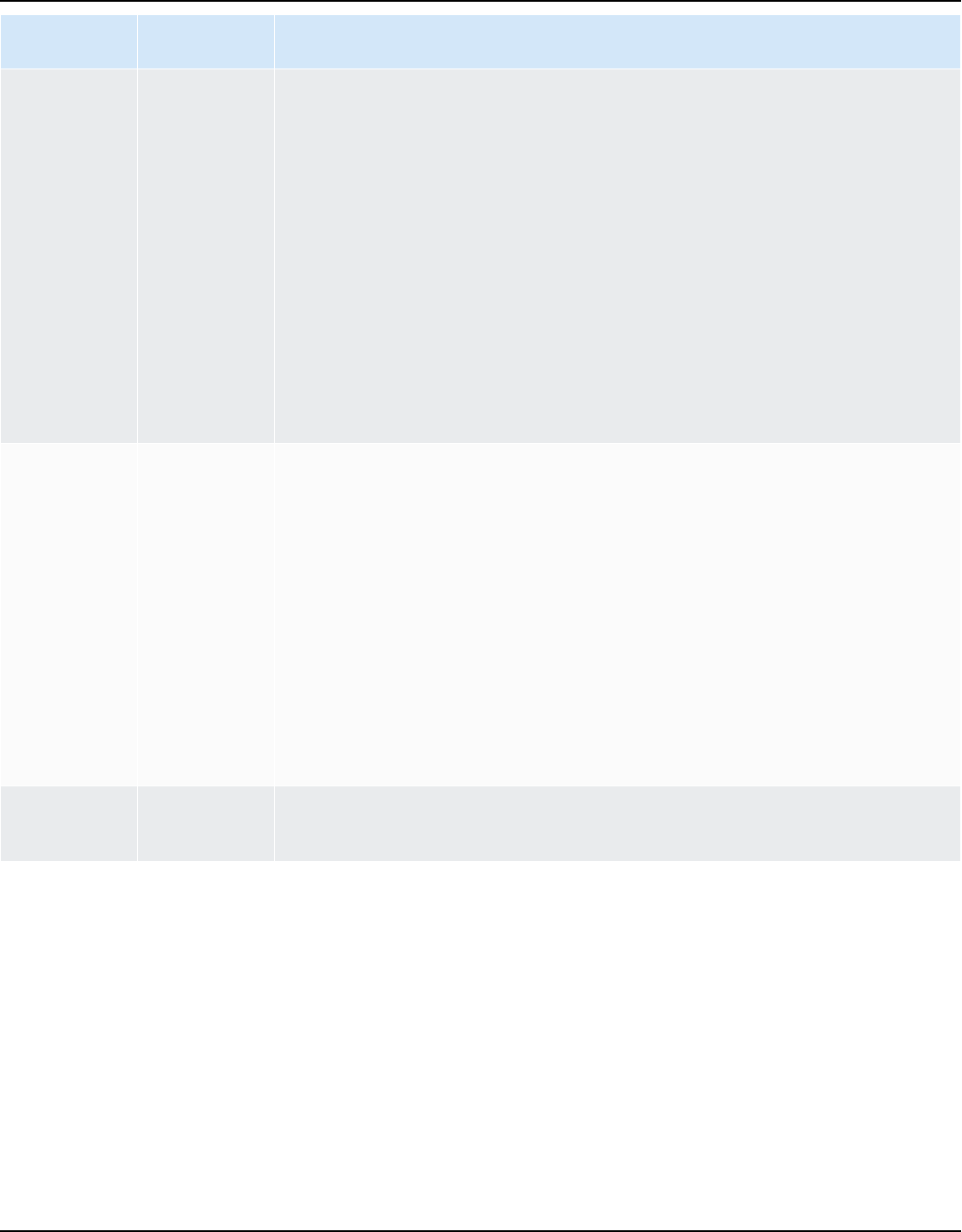

You can download the database drivers from the following locations.

Important

Download the latest version of the driver available. The following table includes the lowest

version of database driver supported by AWS SCT.

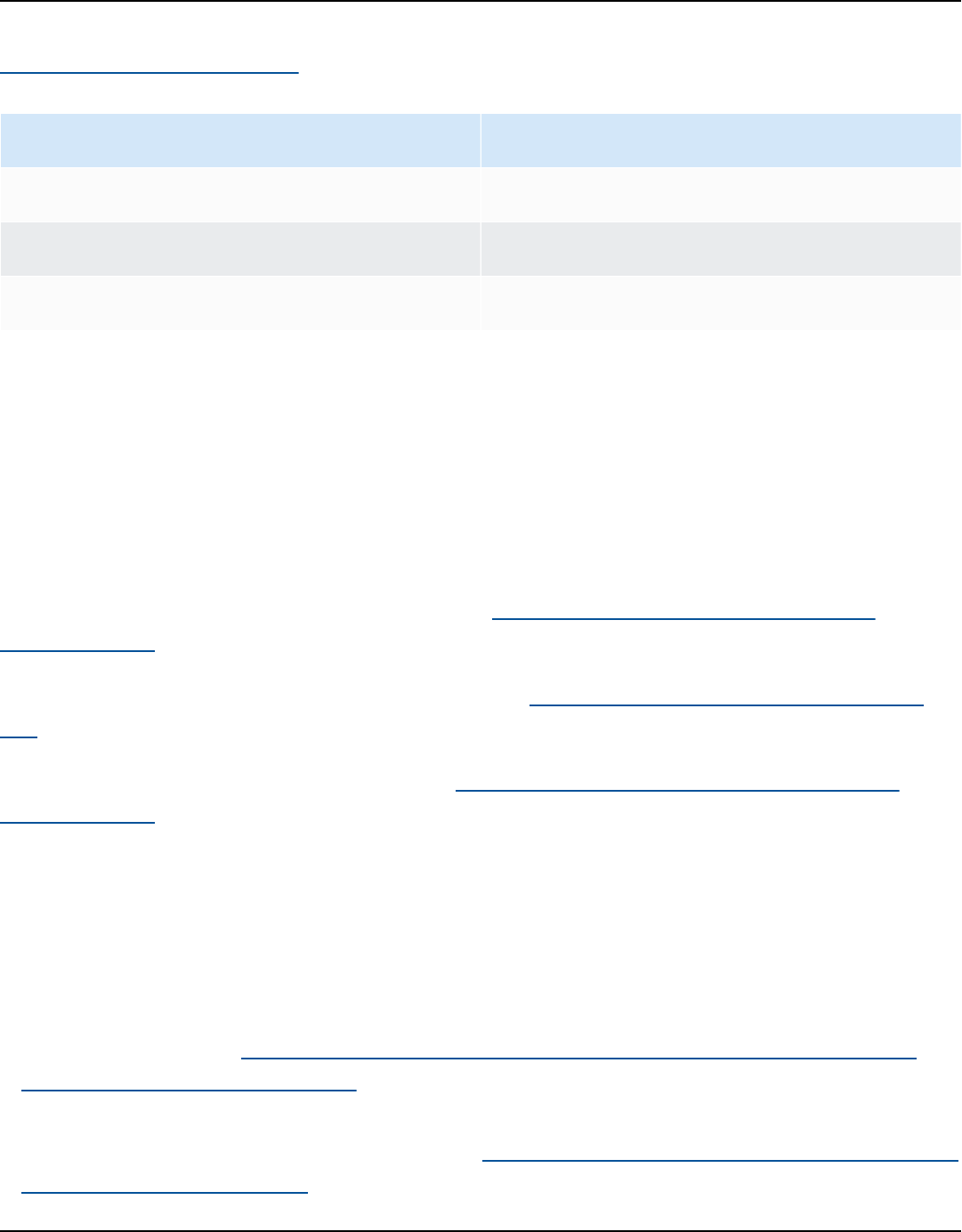

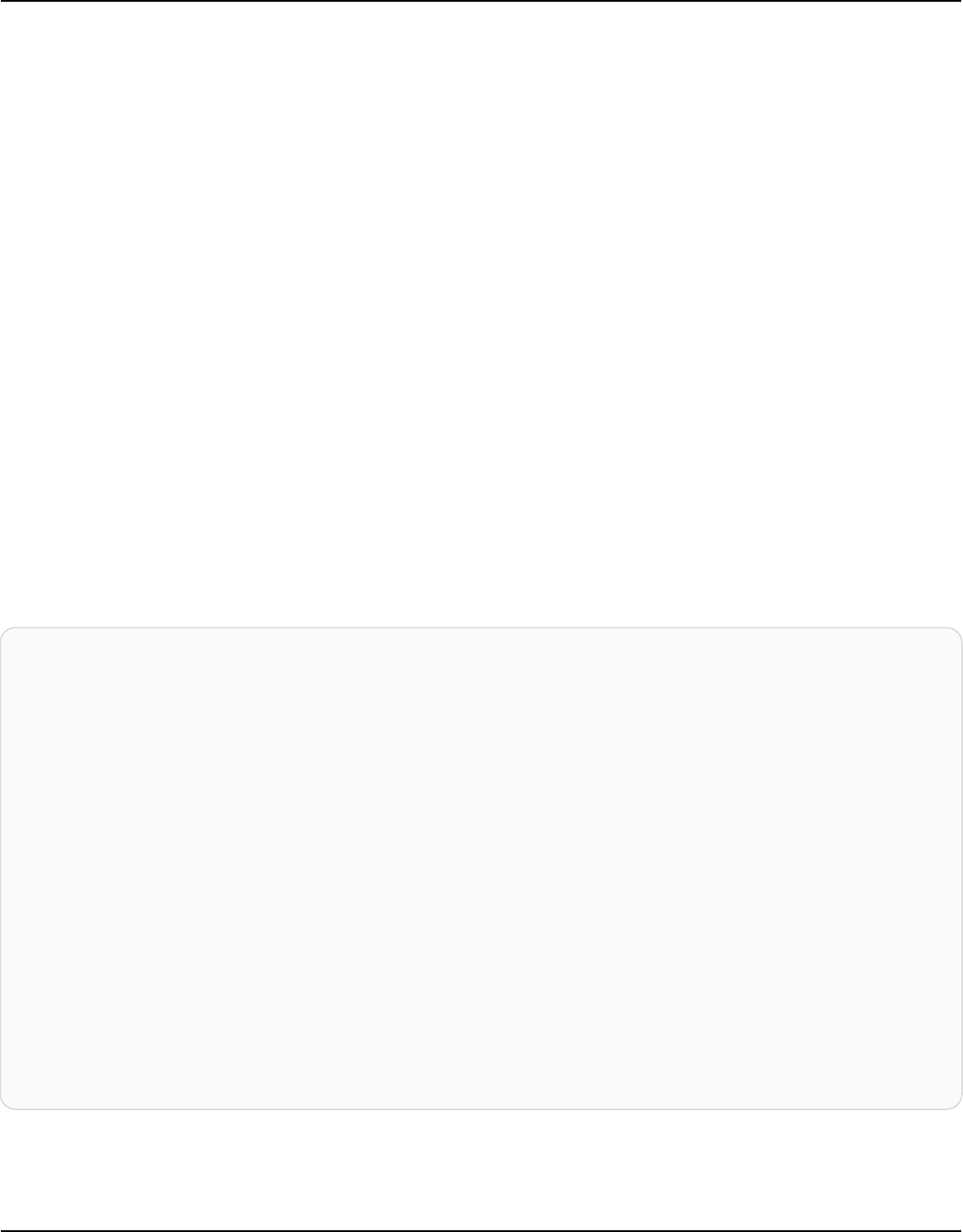

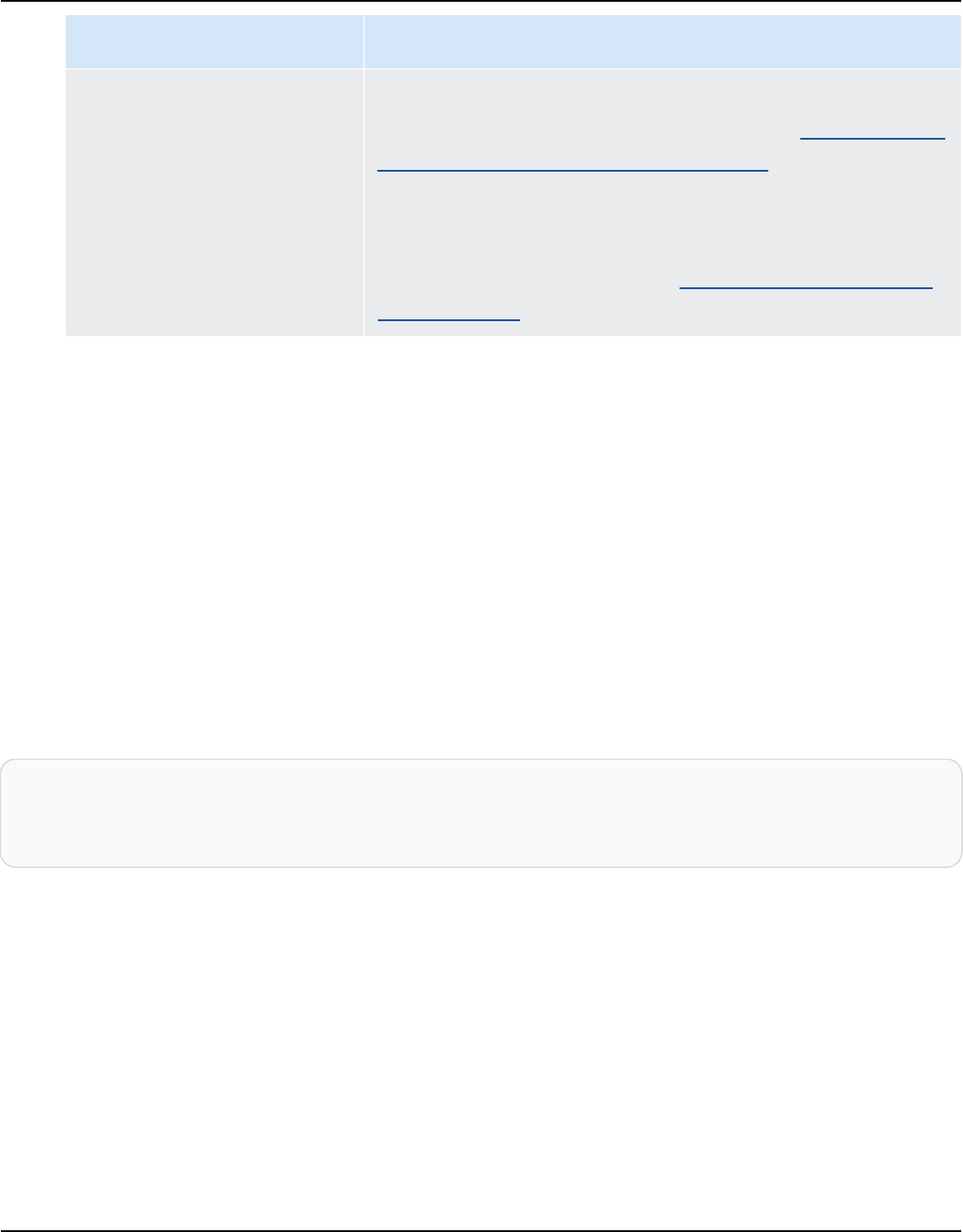

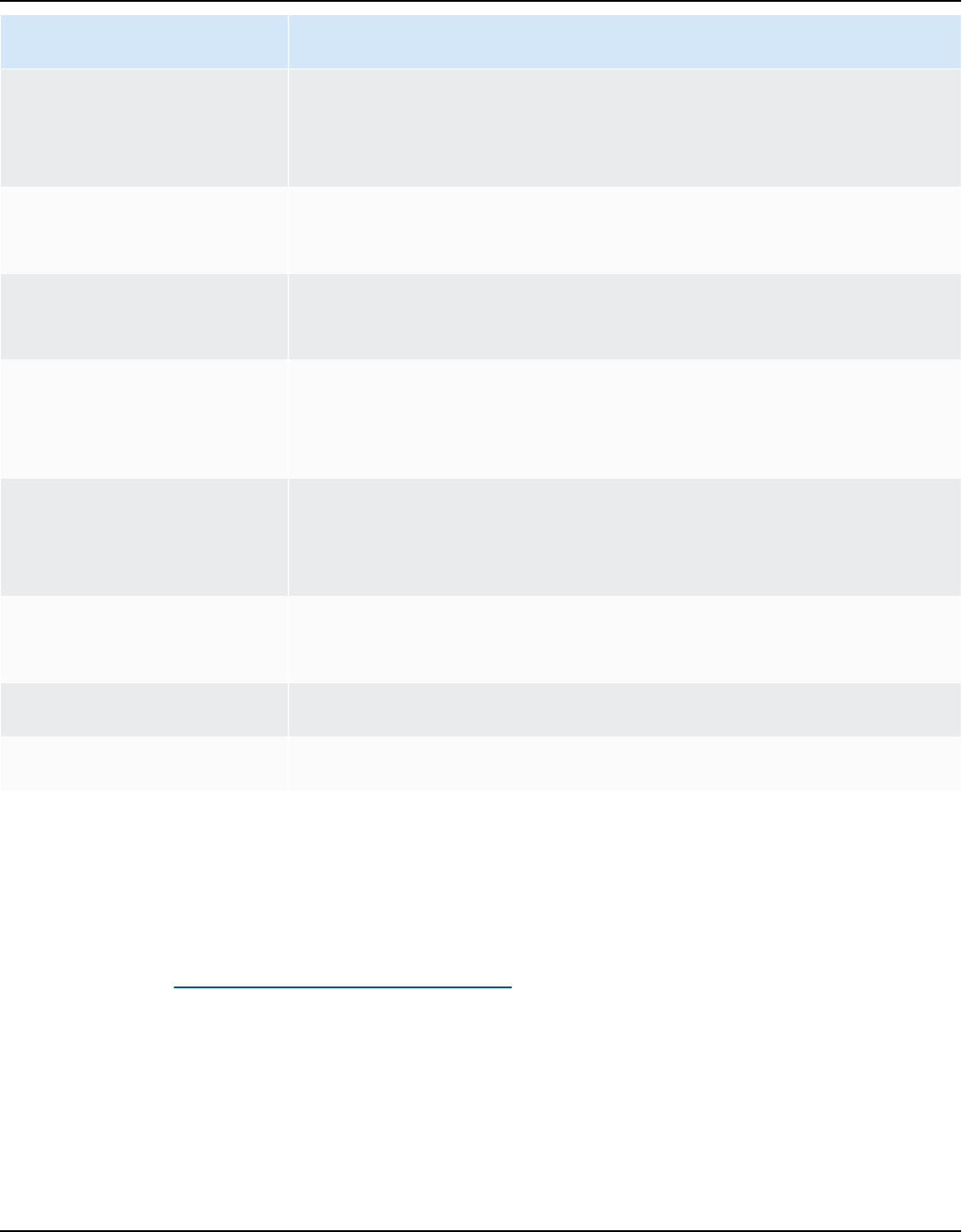

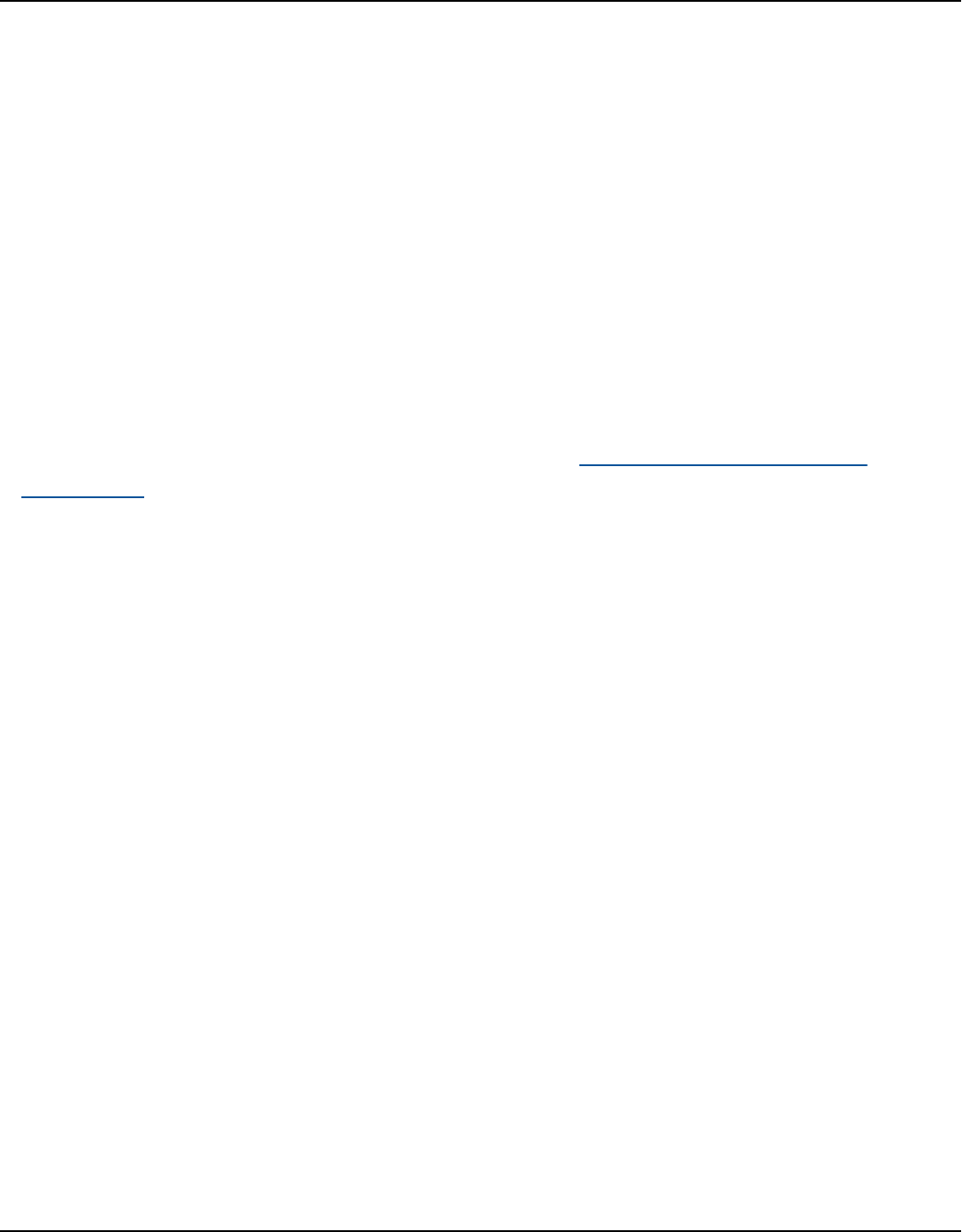

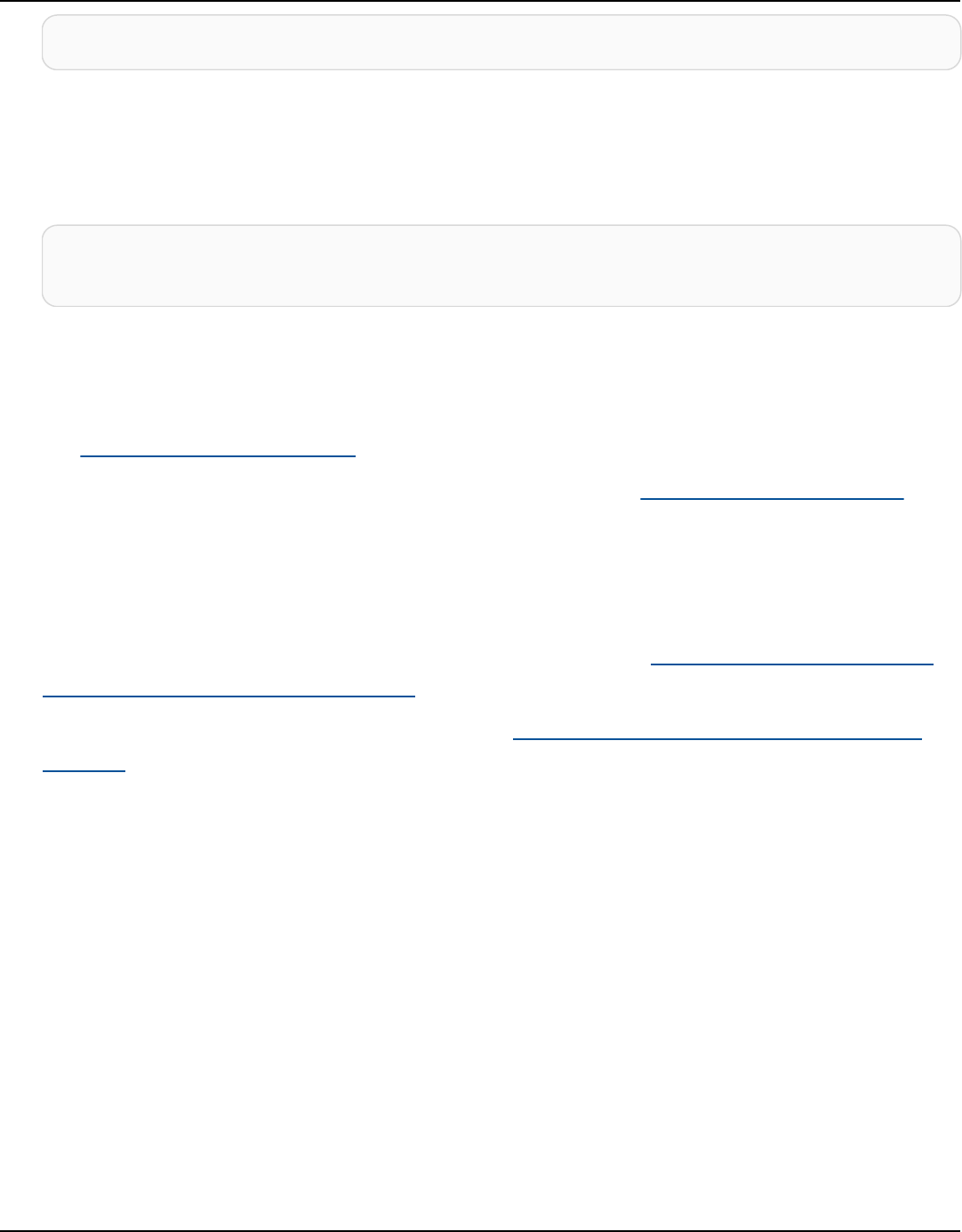

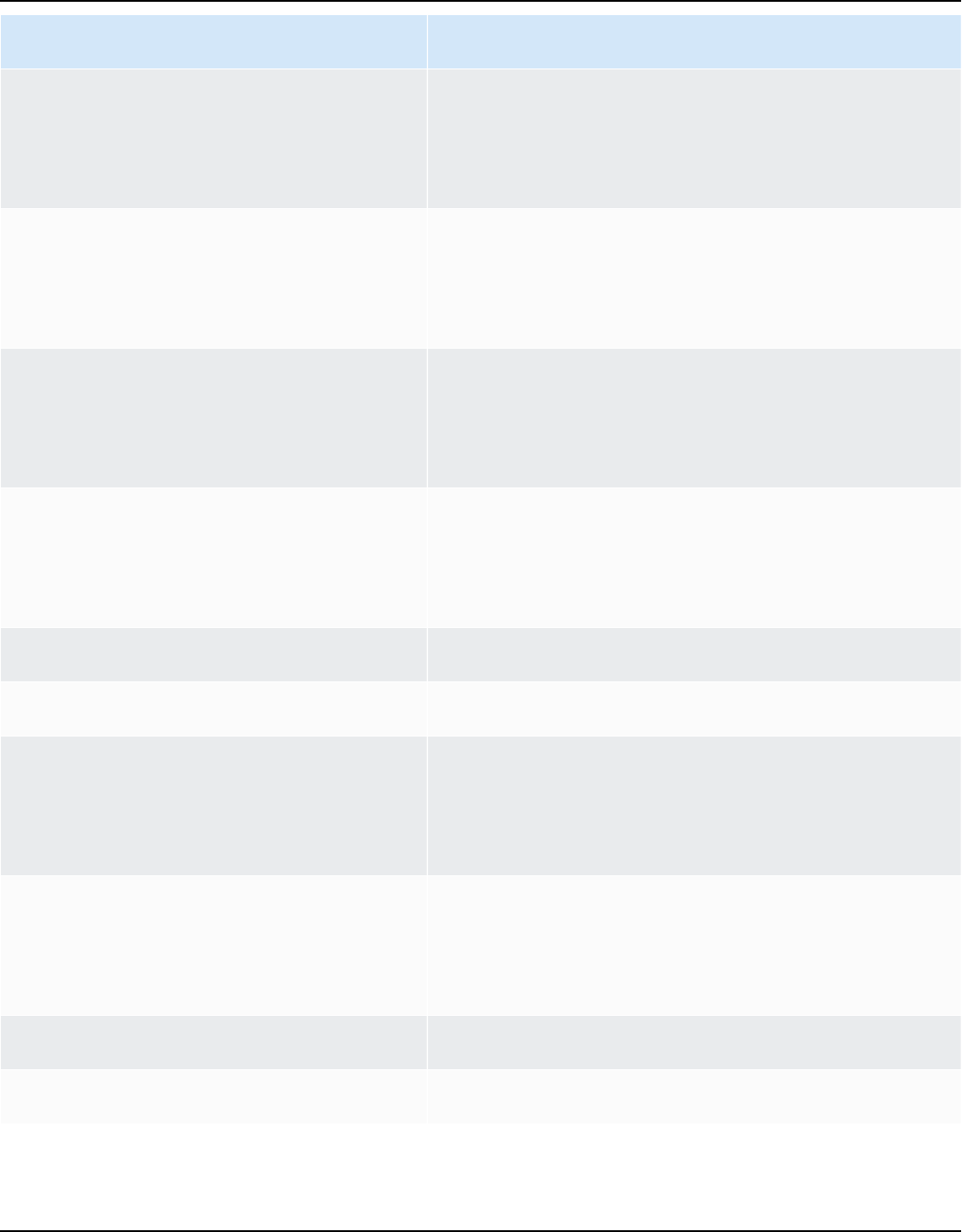

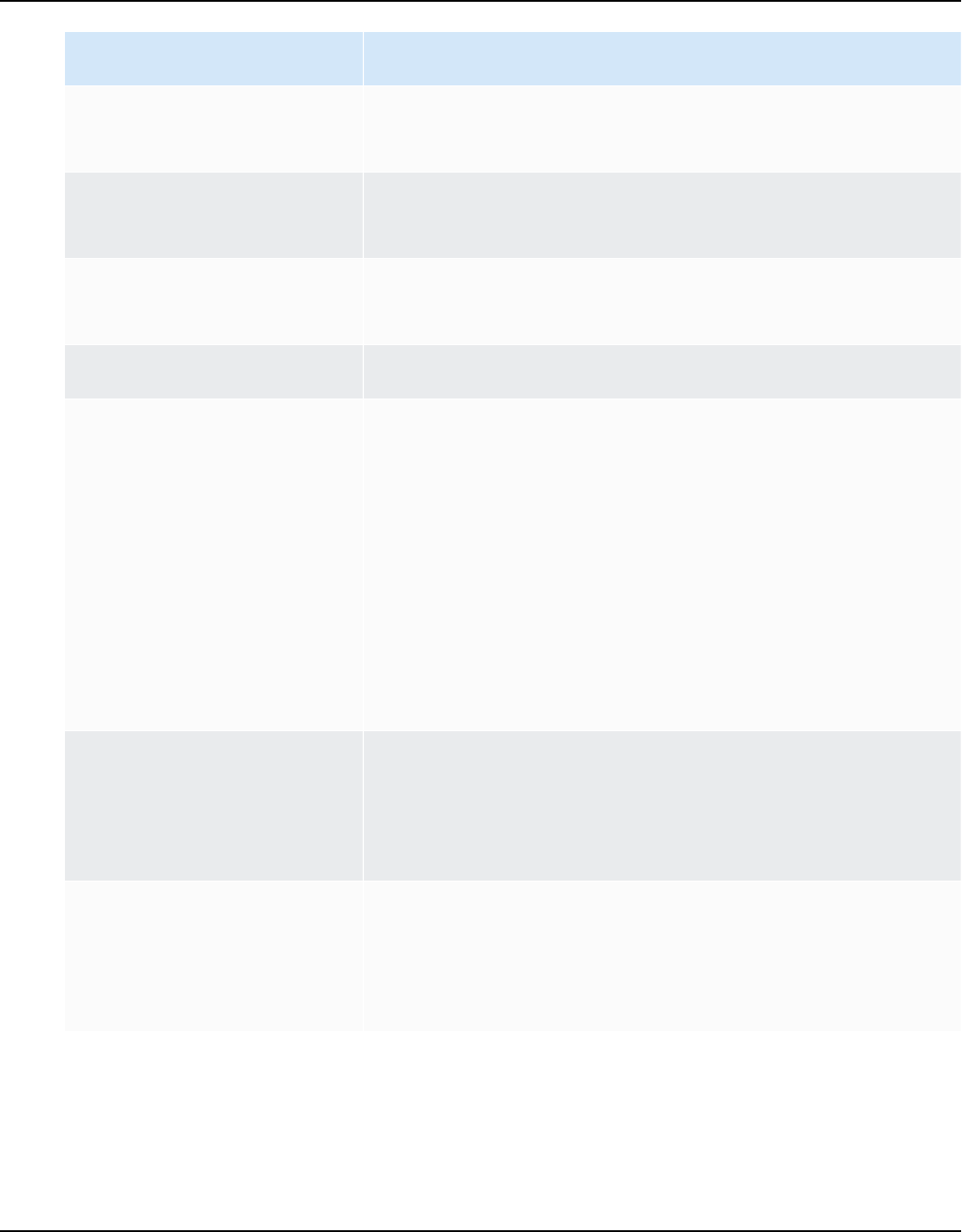

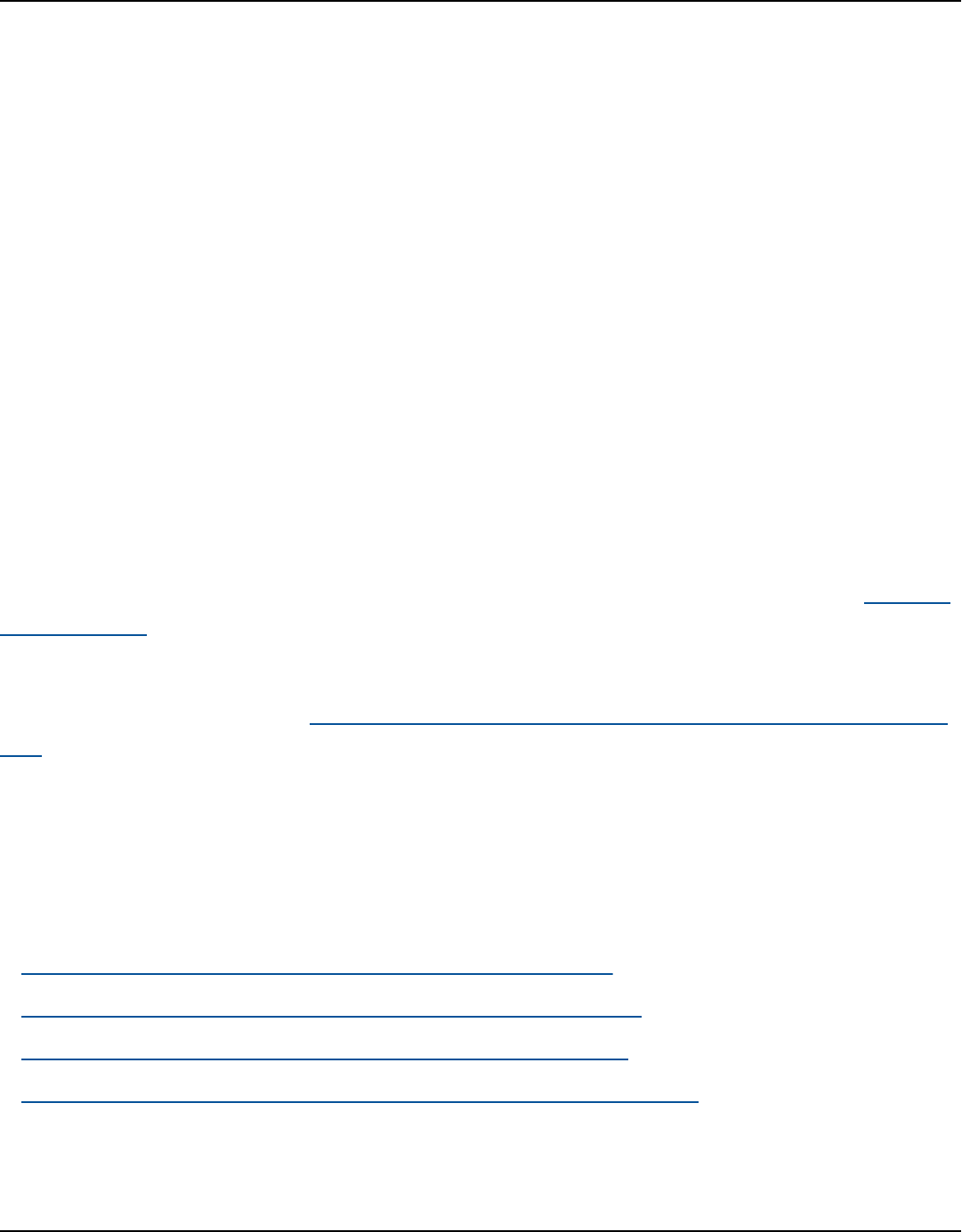

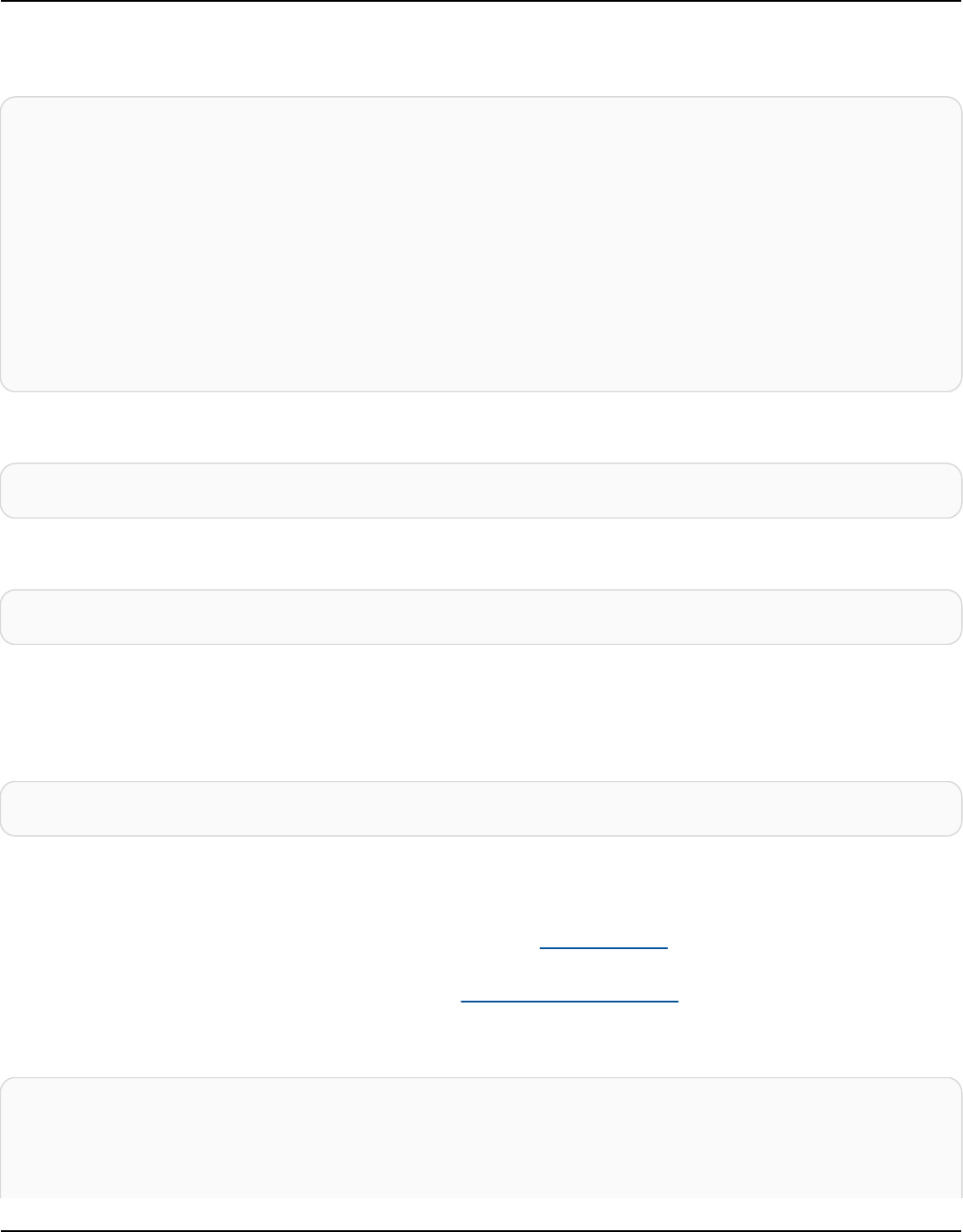

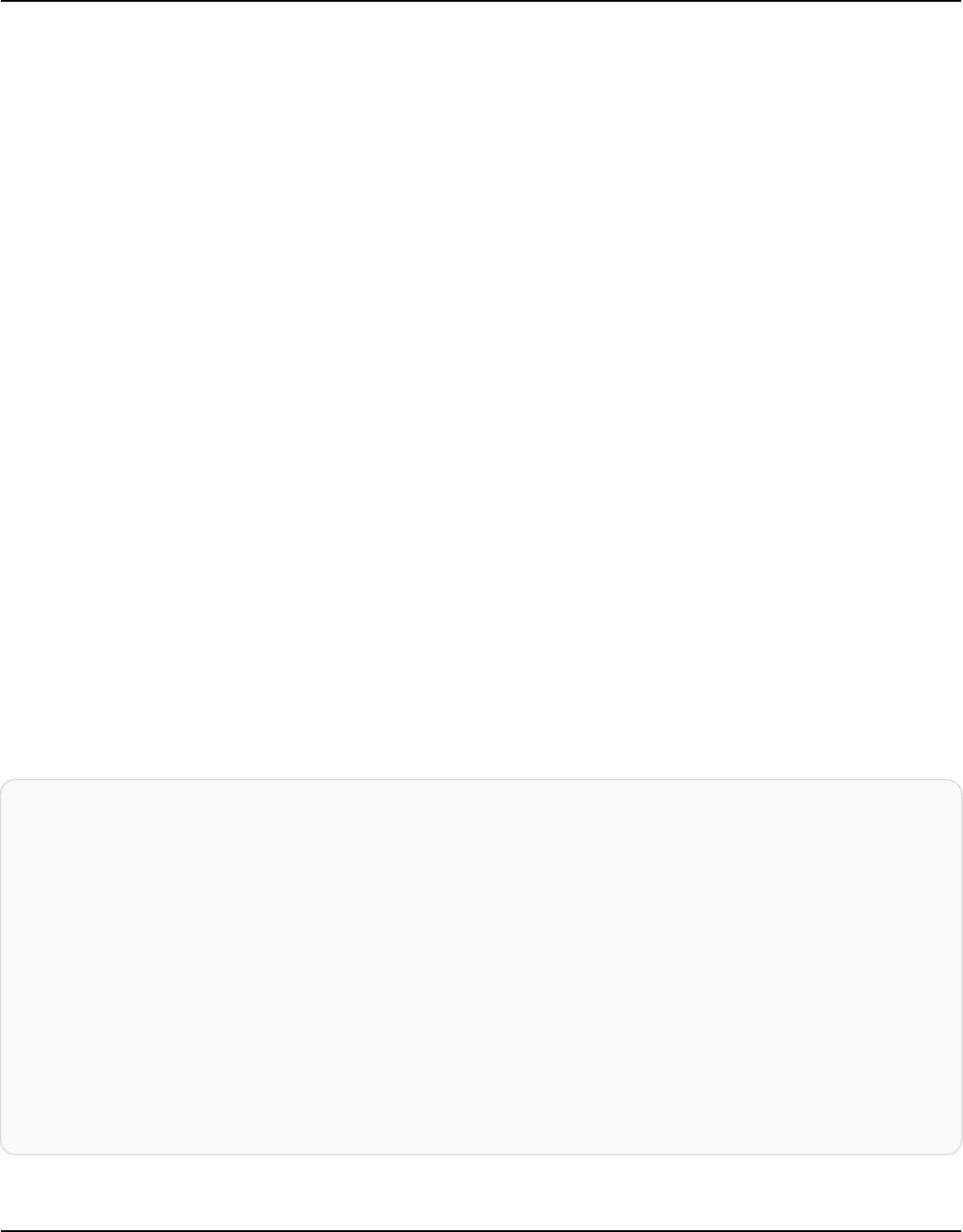

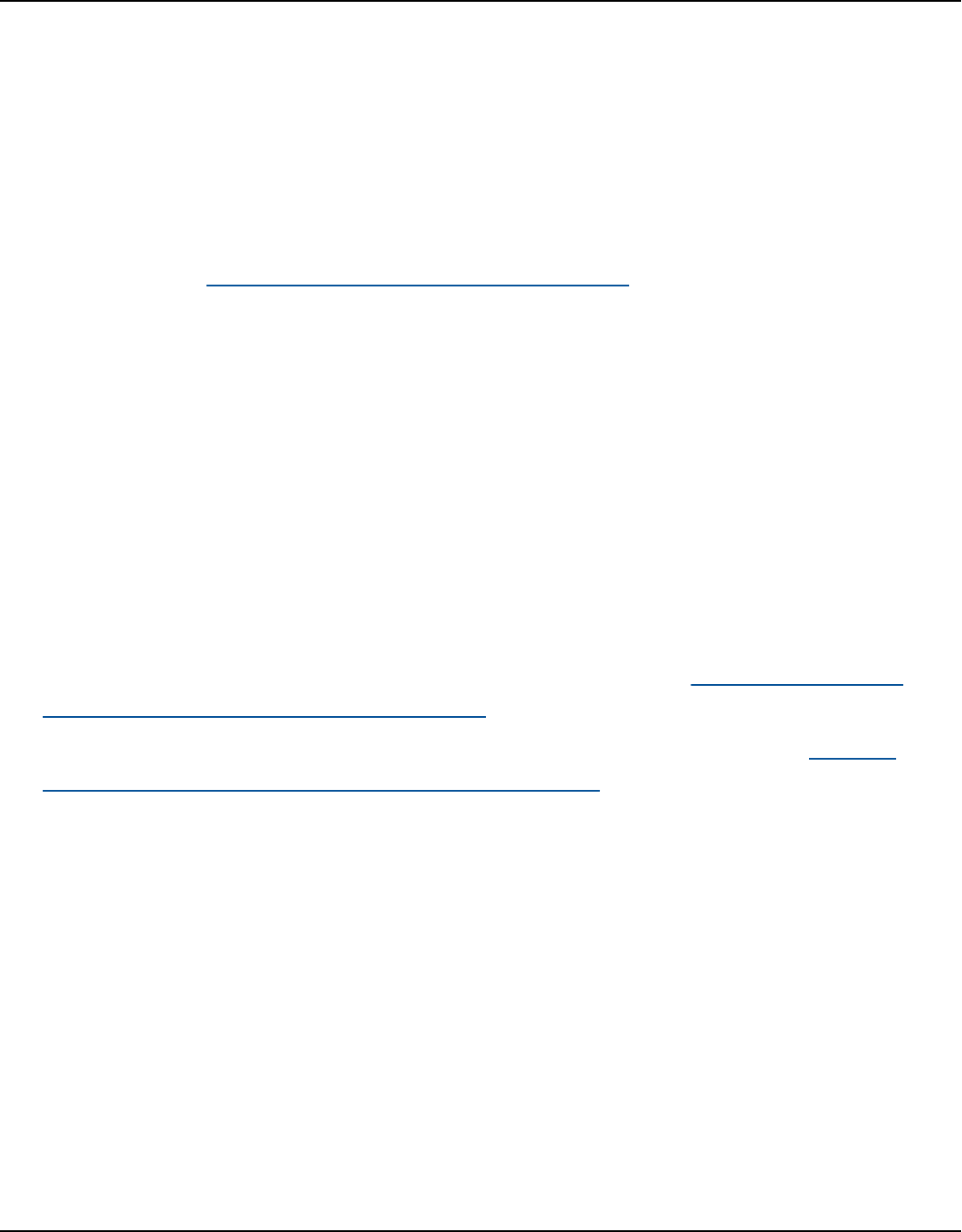

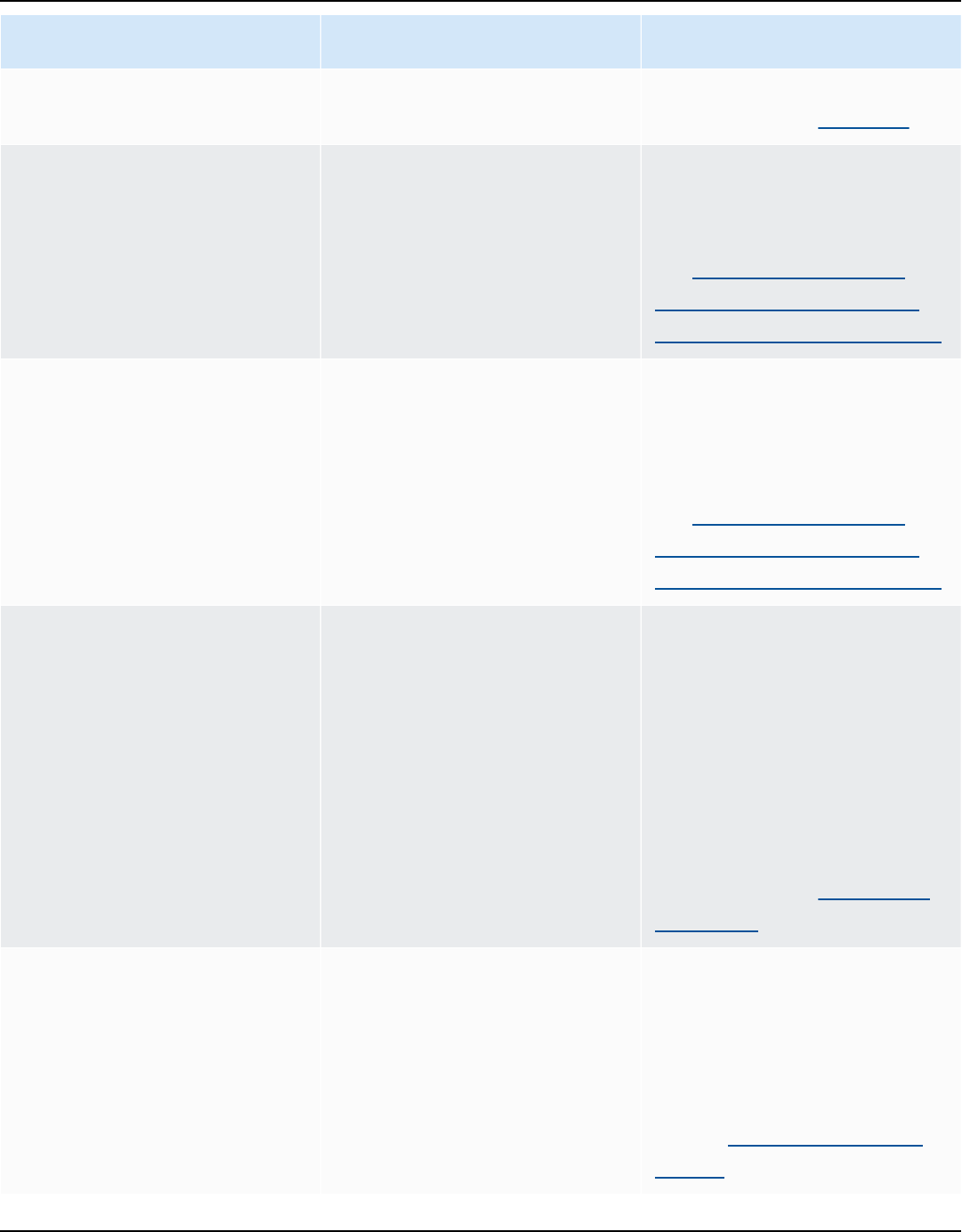

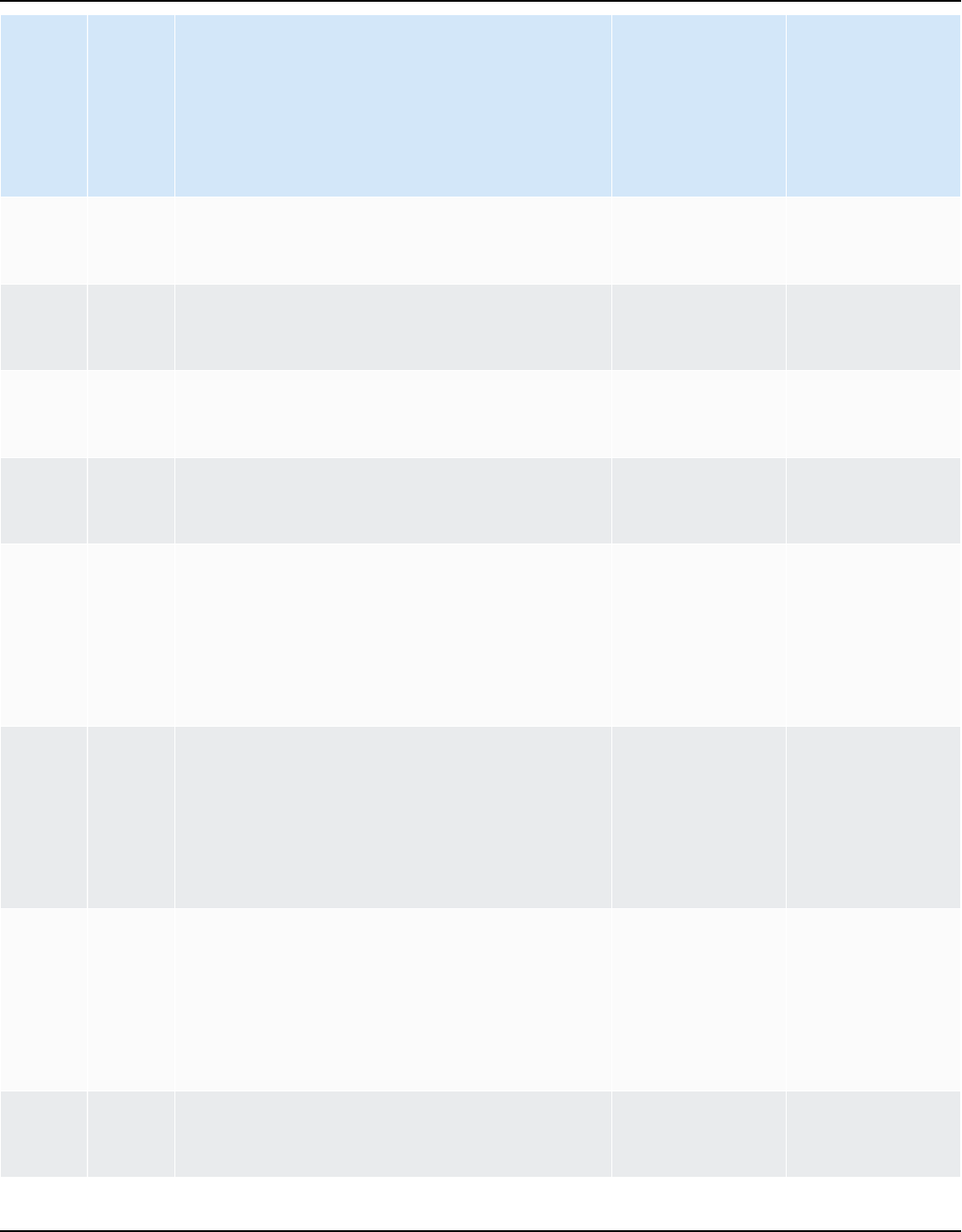

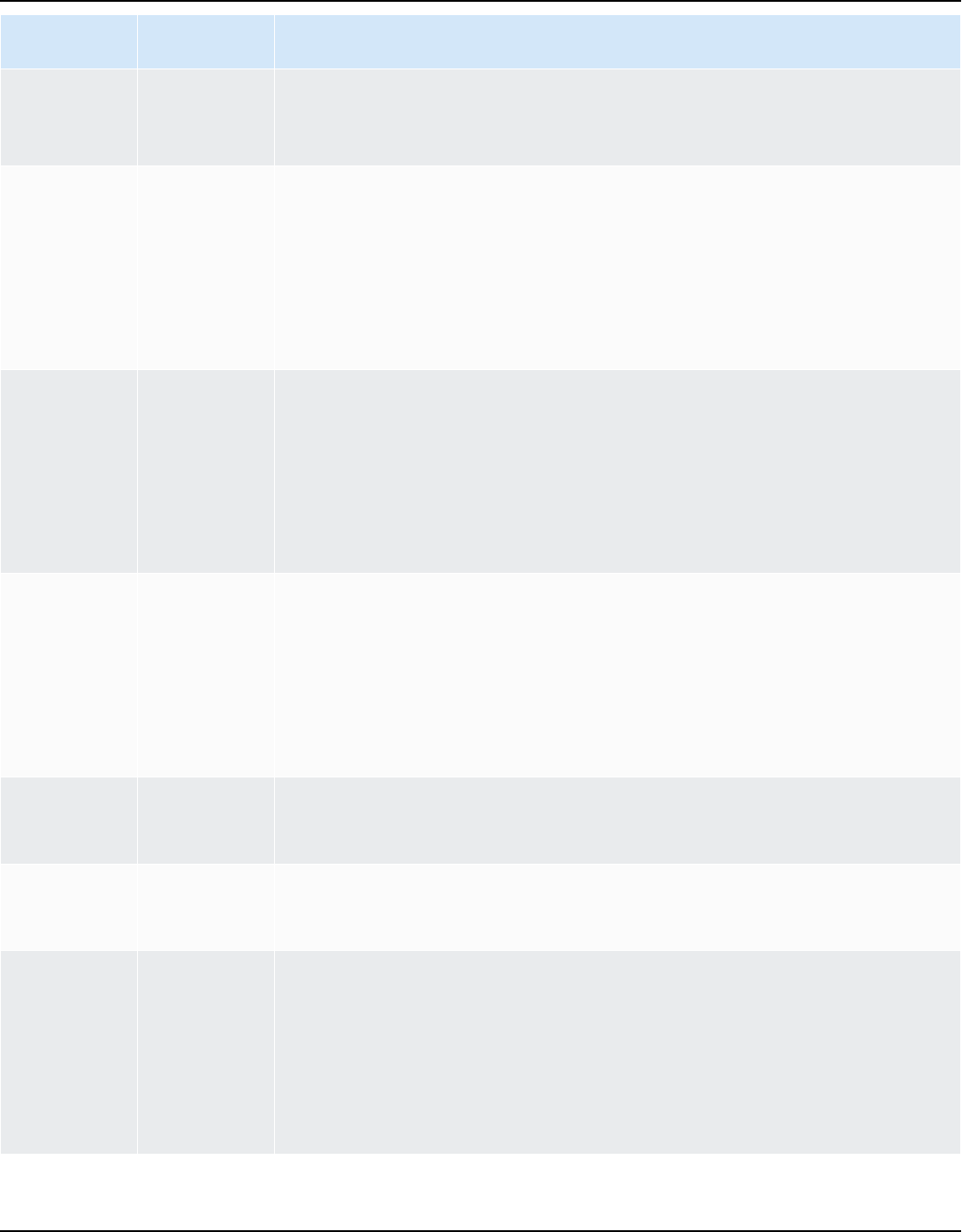

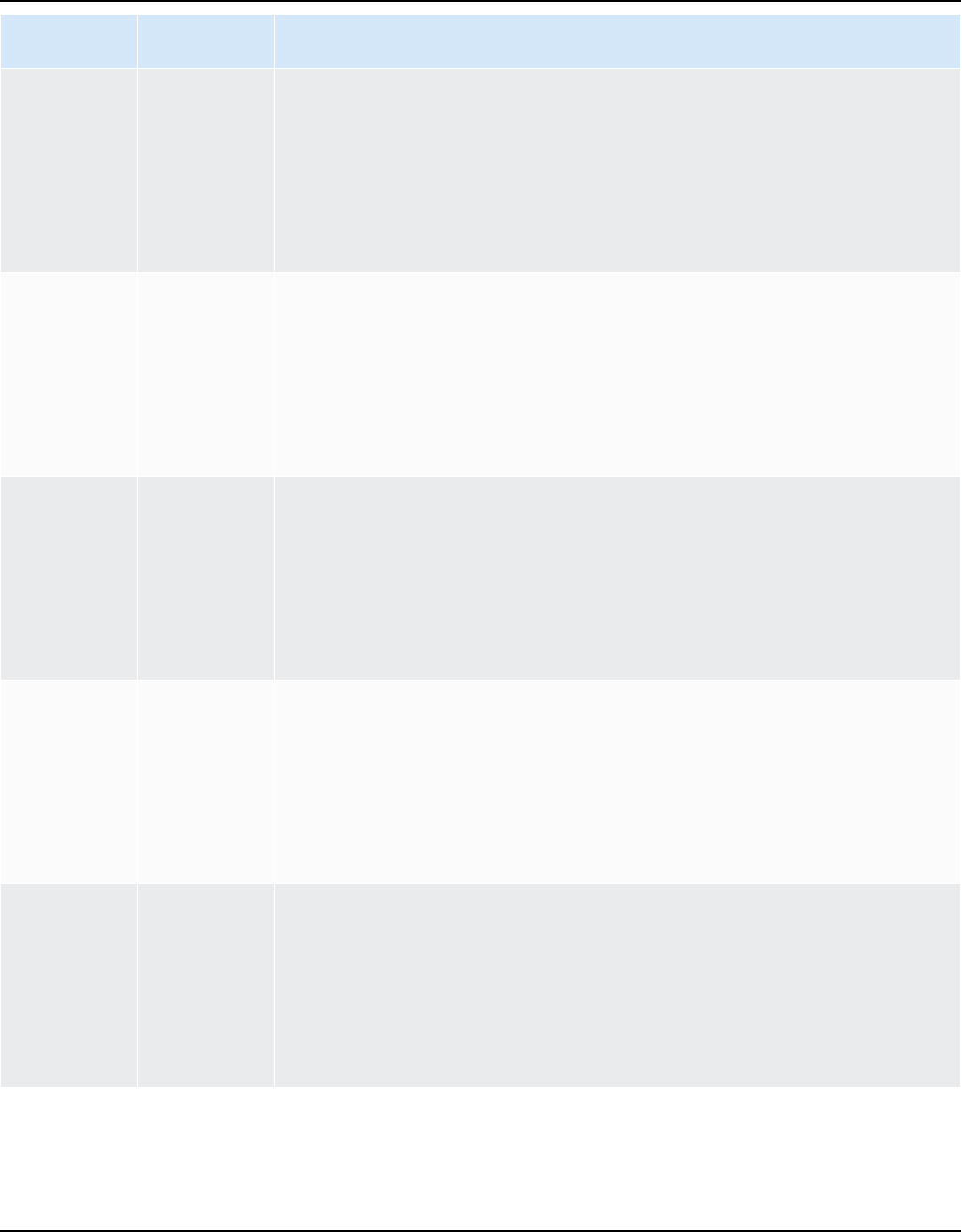

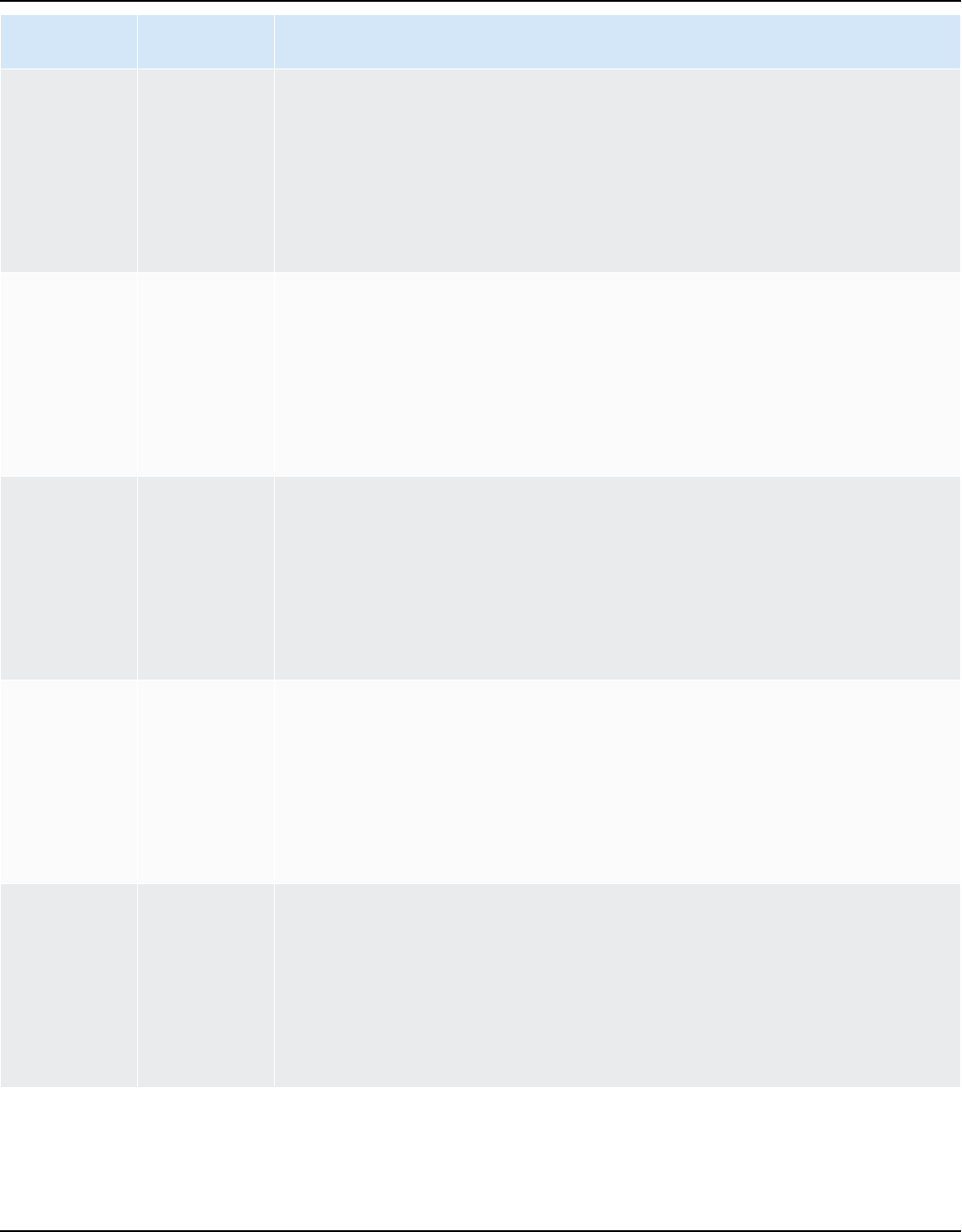

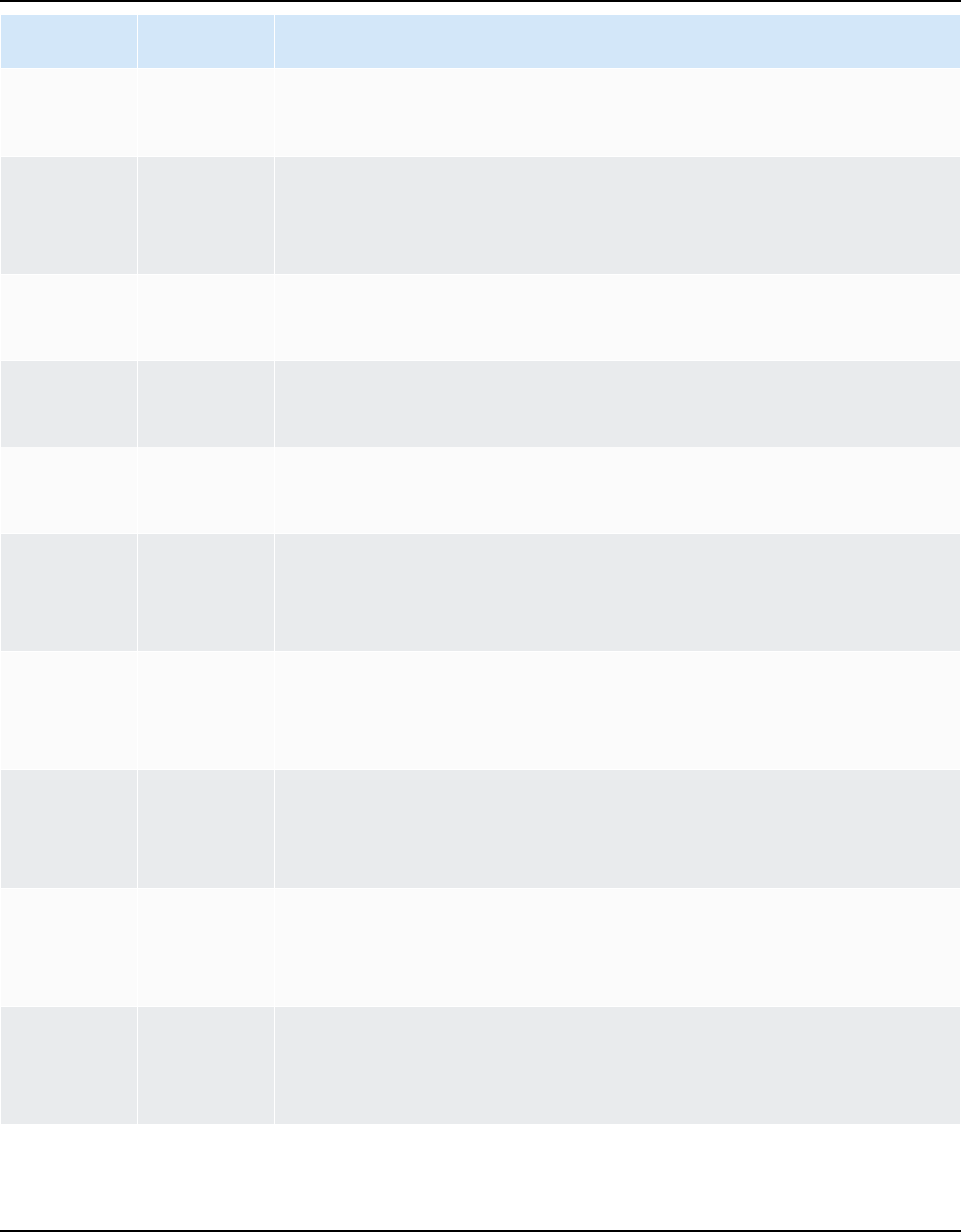

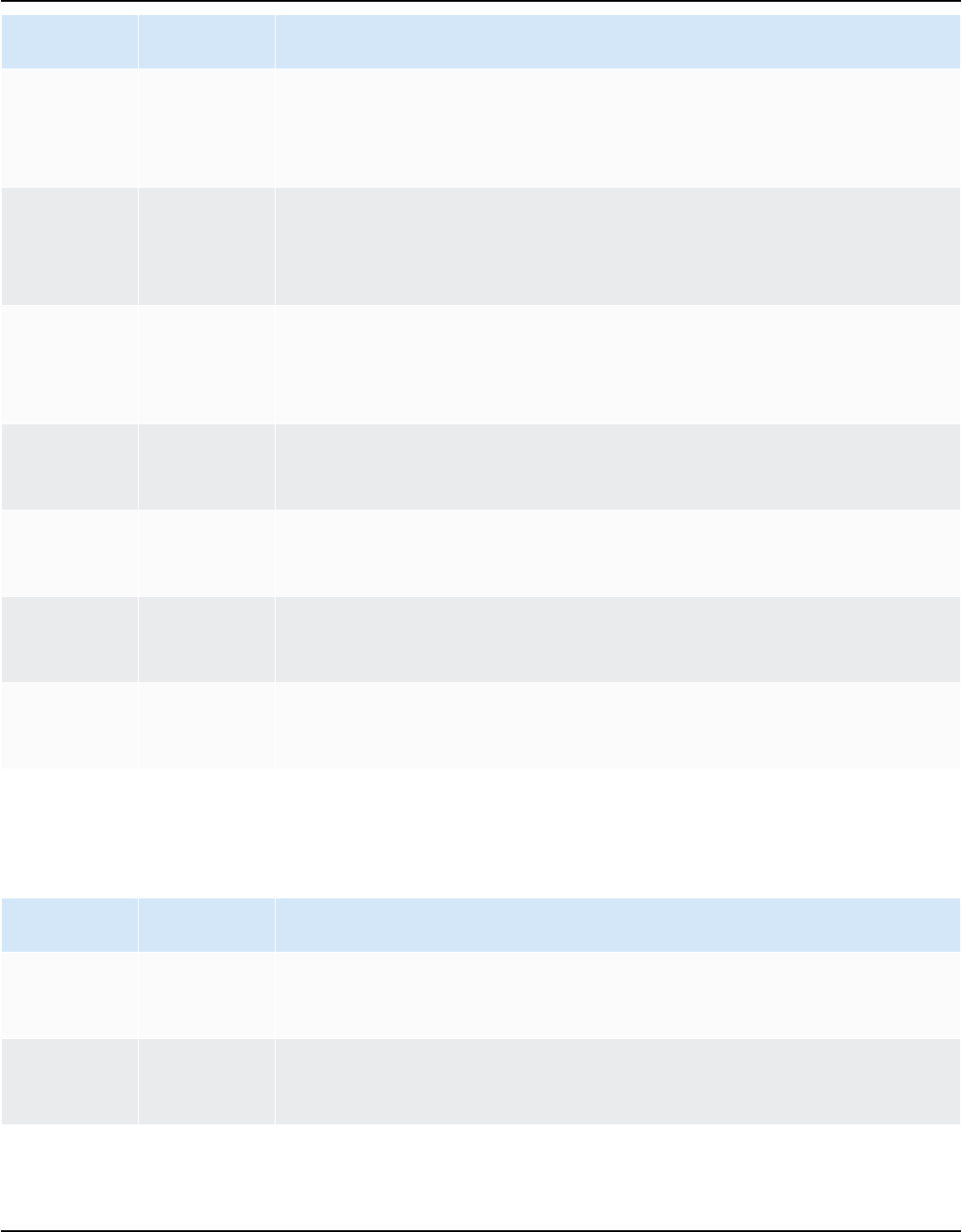

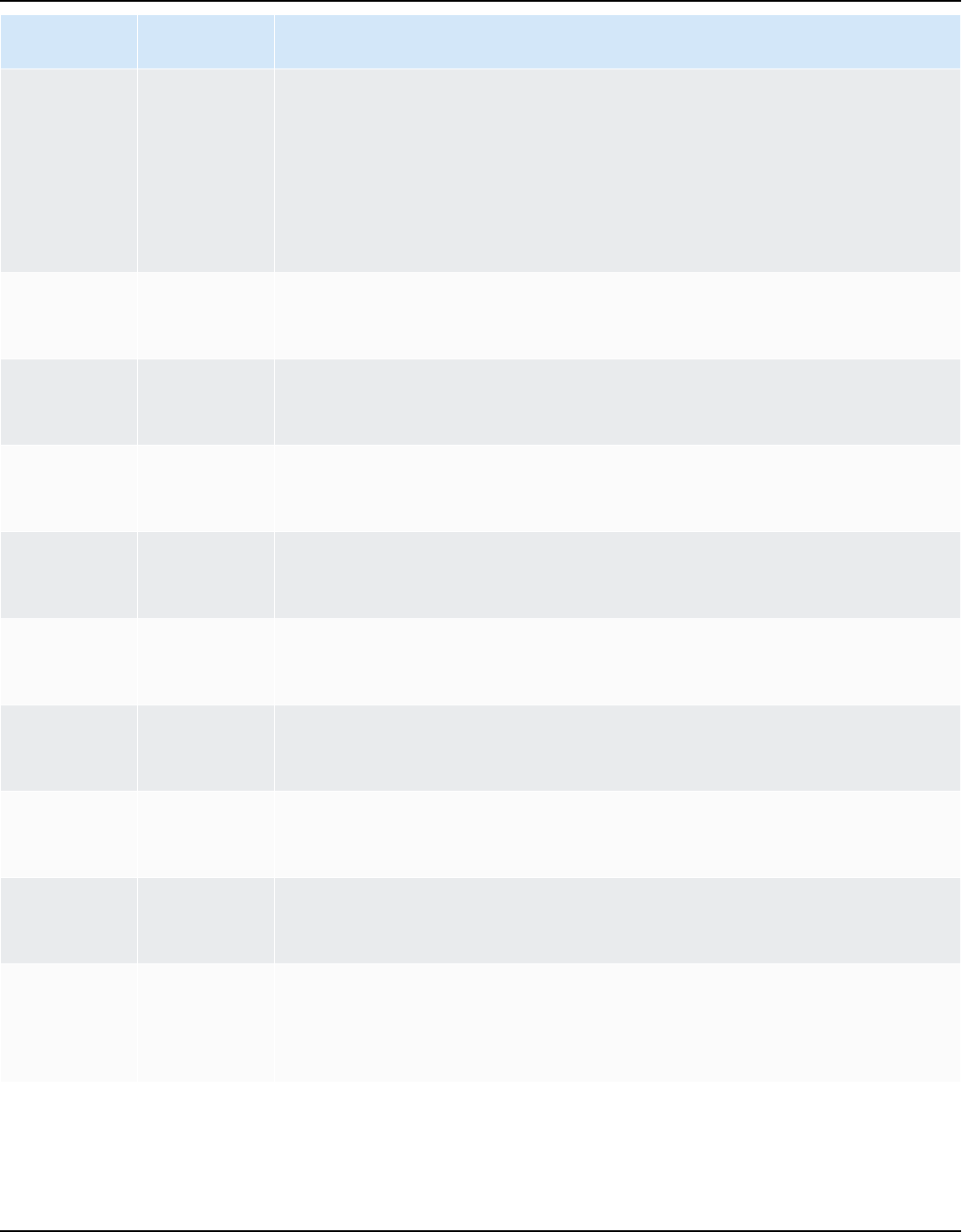

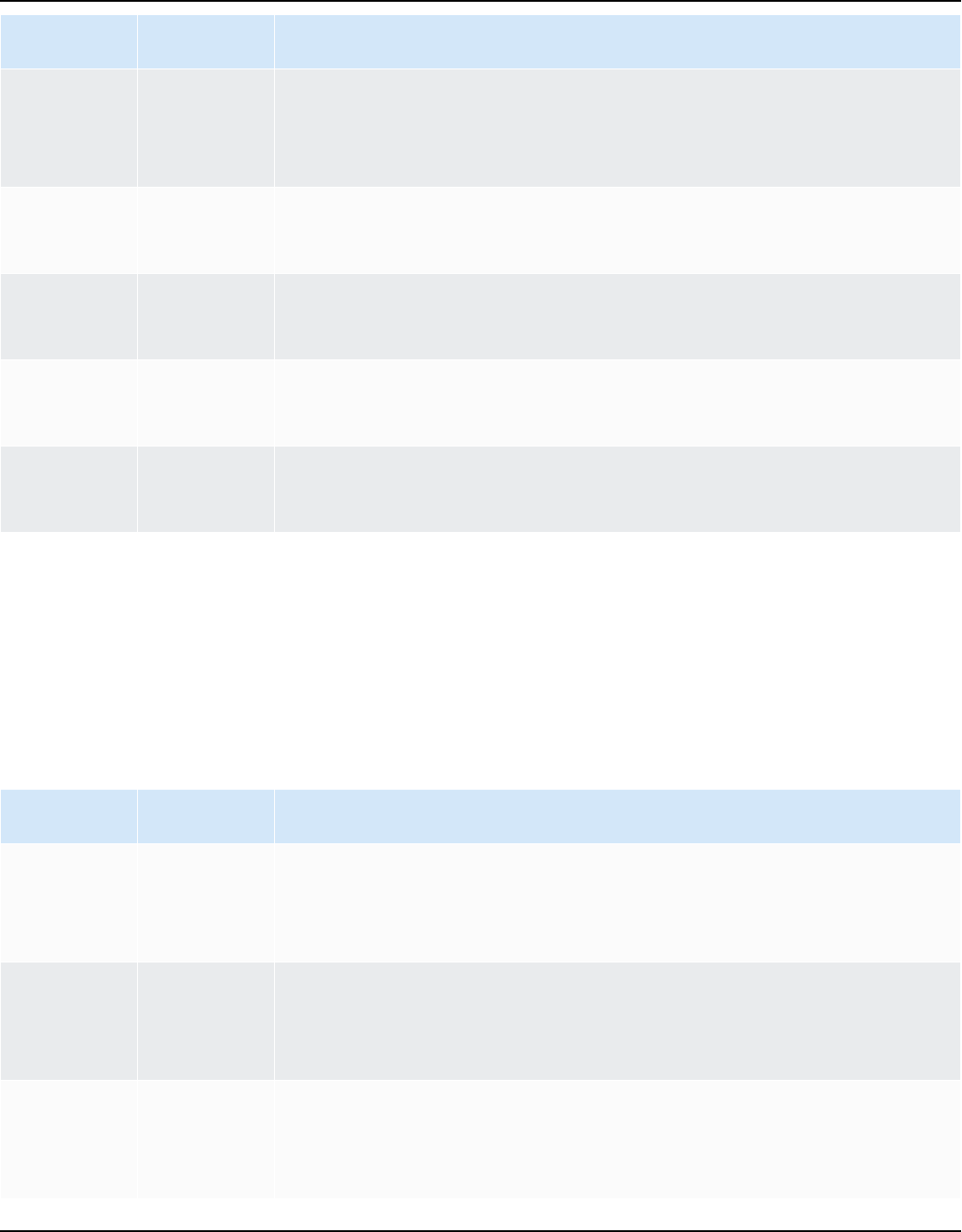

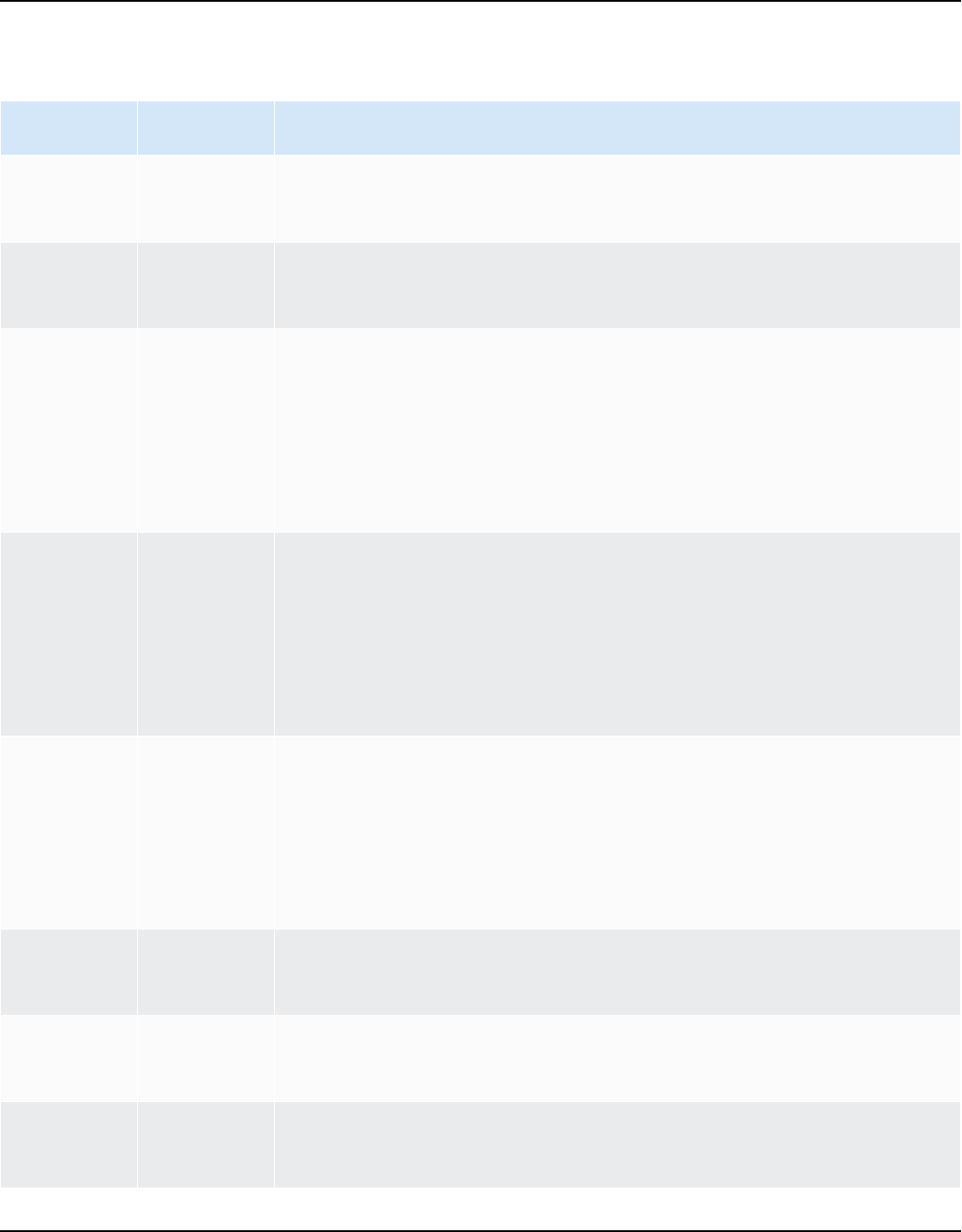

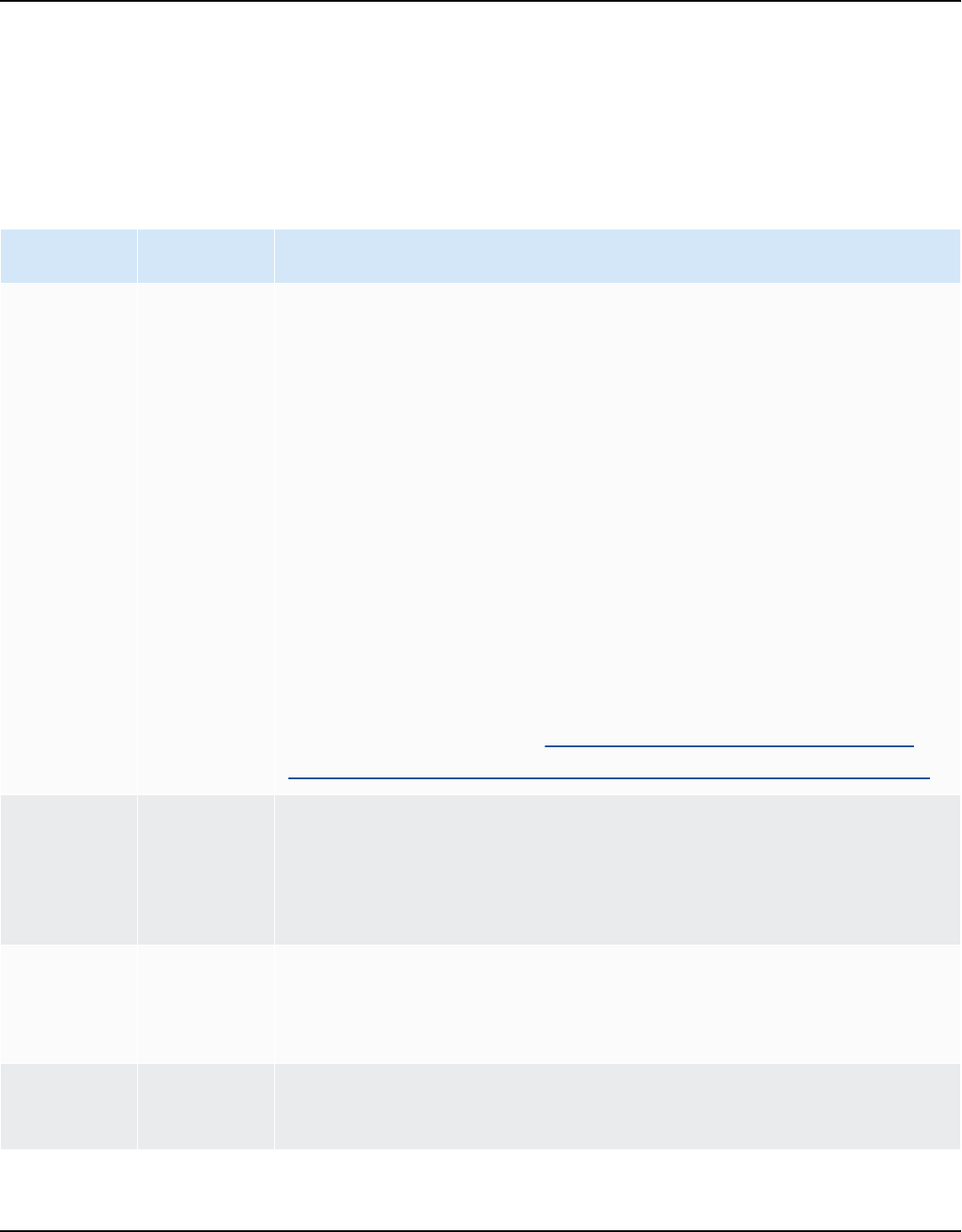

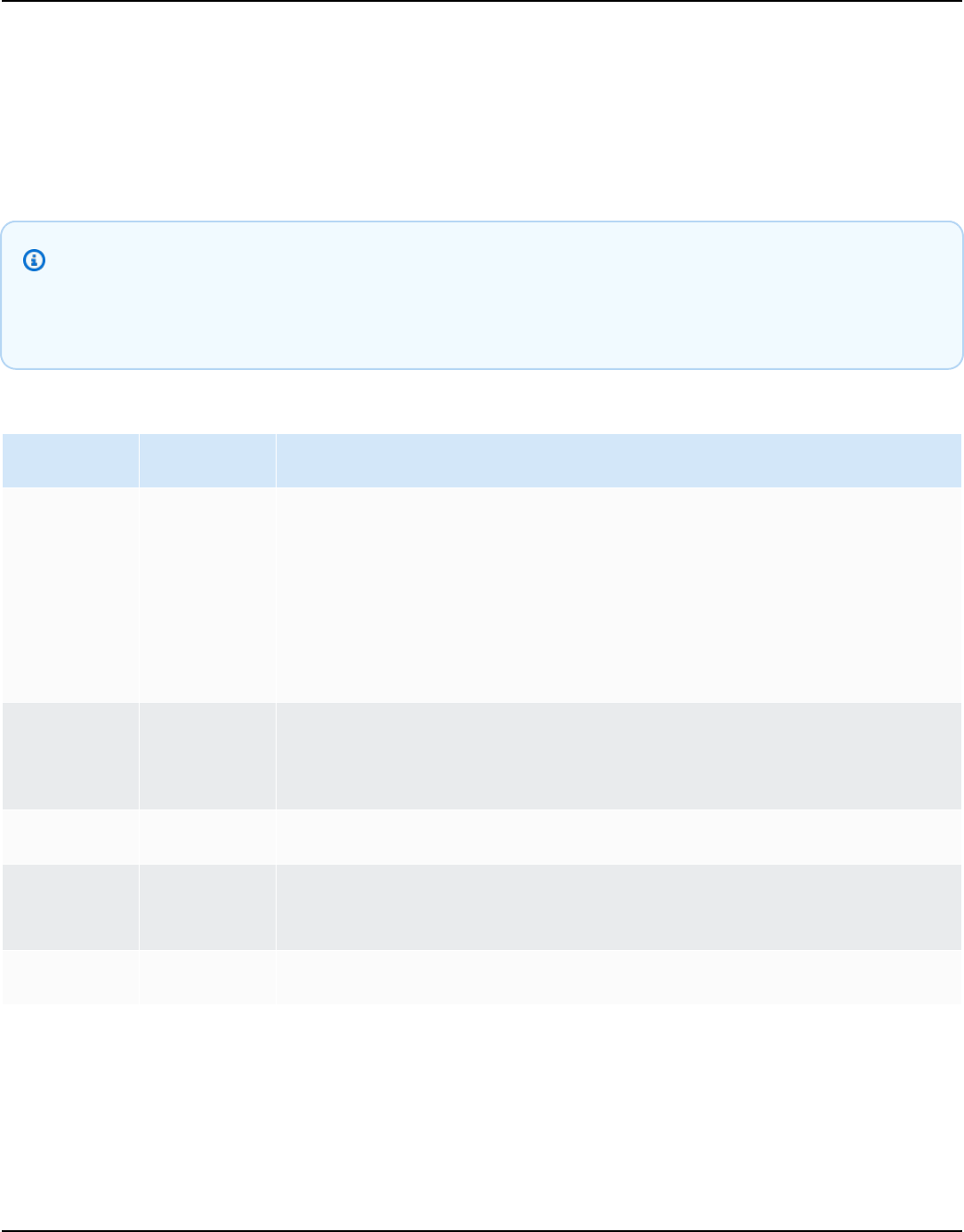

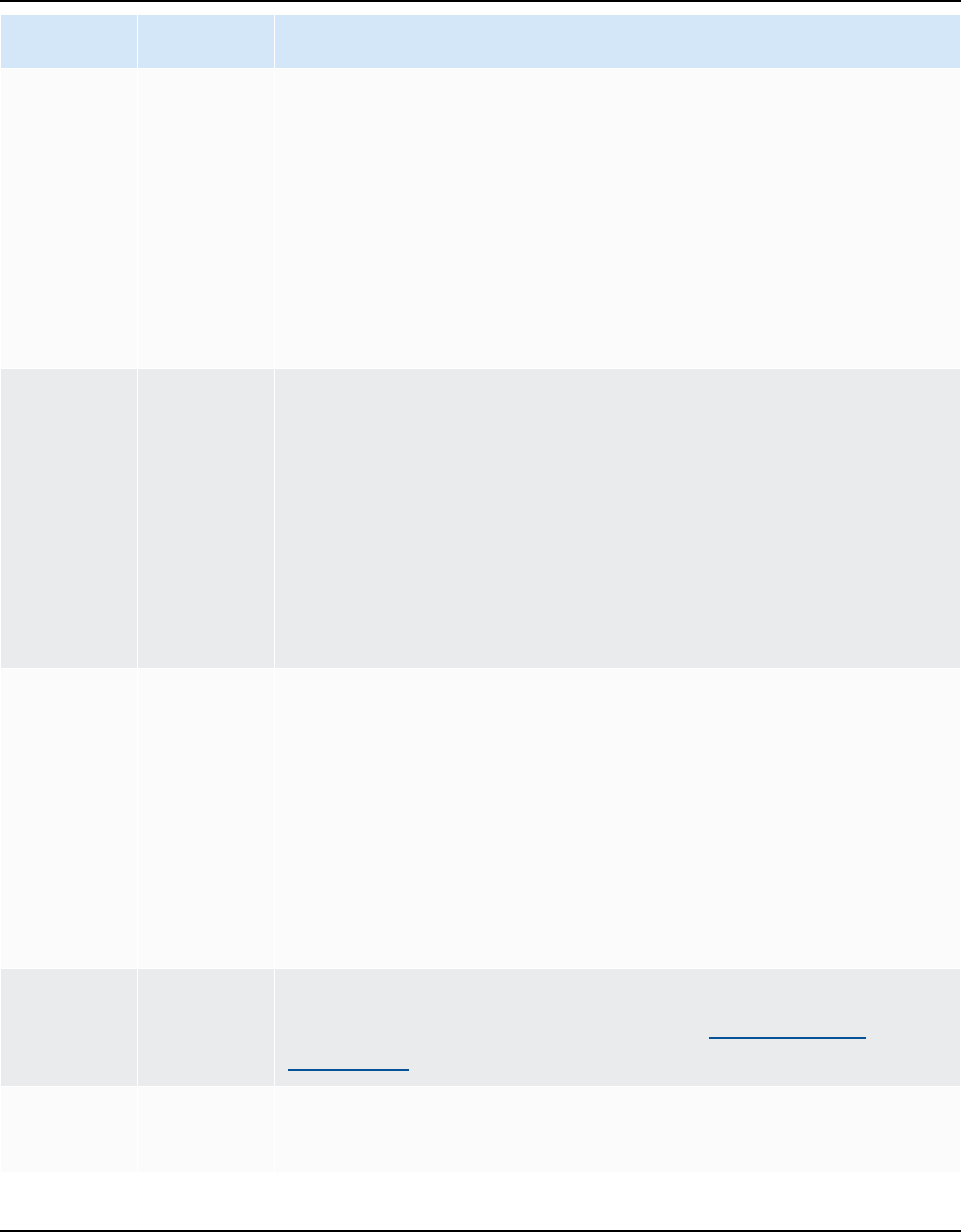

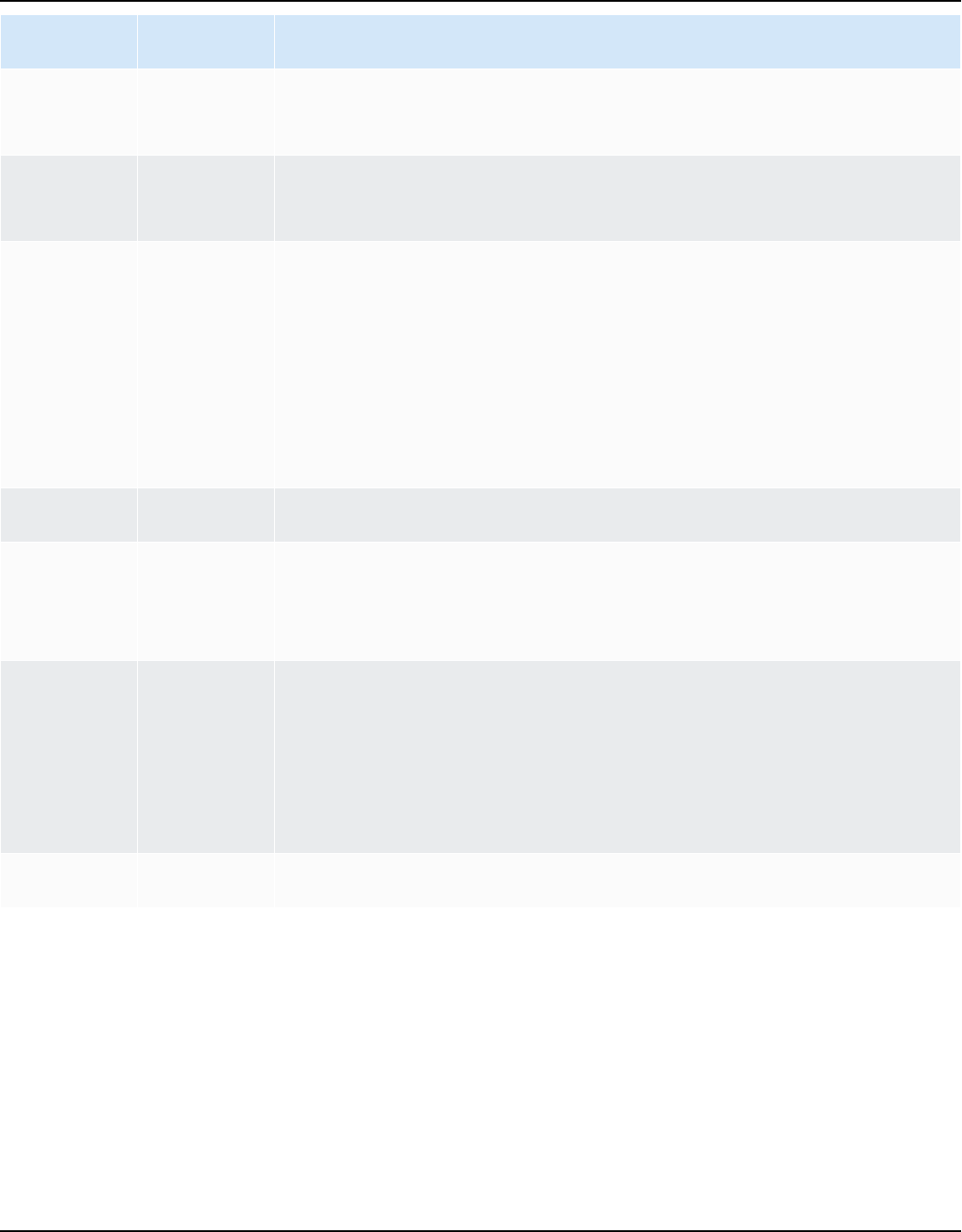

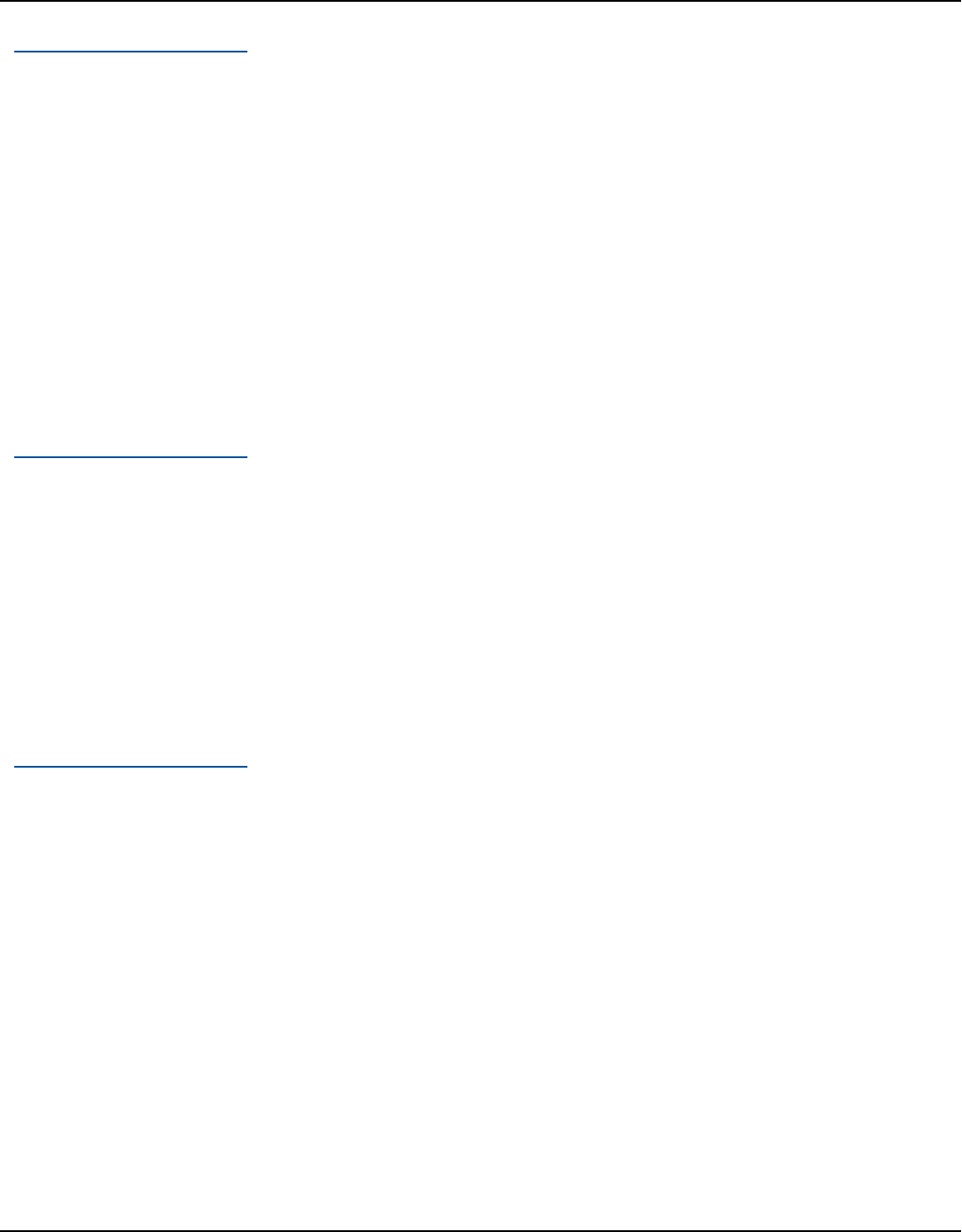

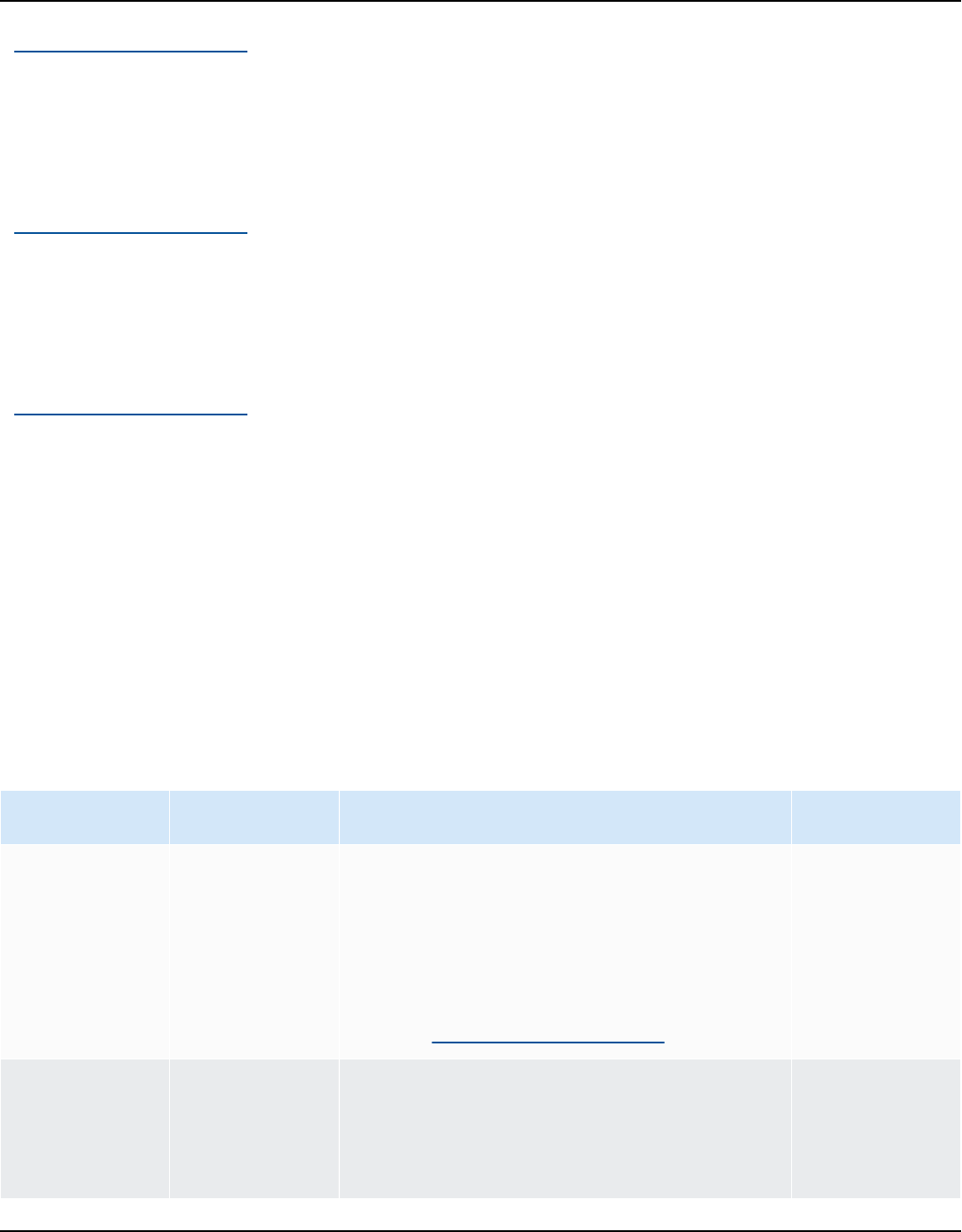

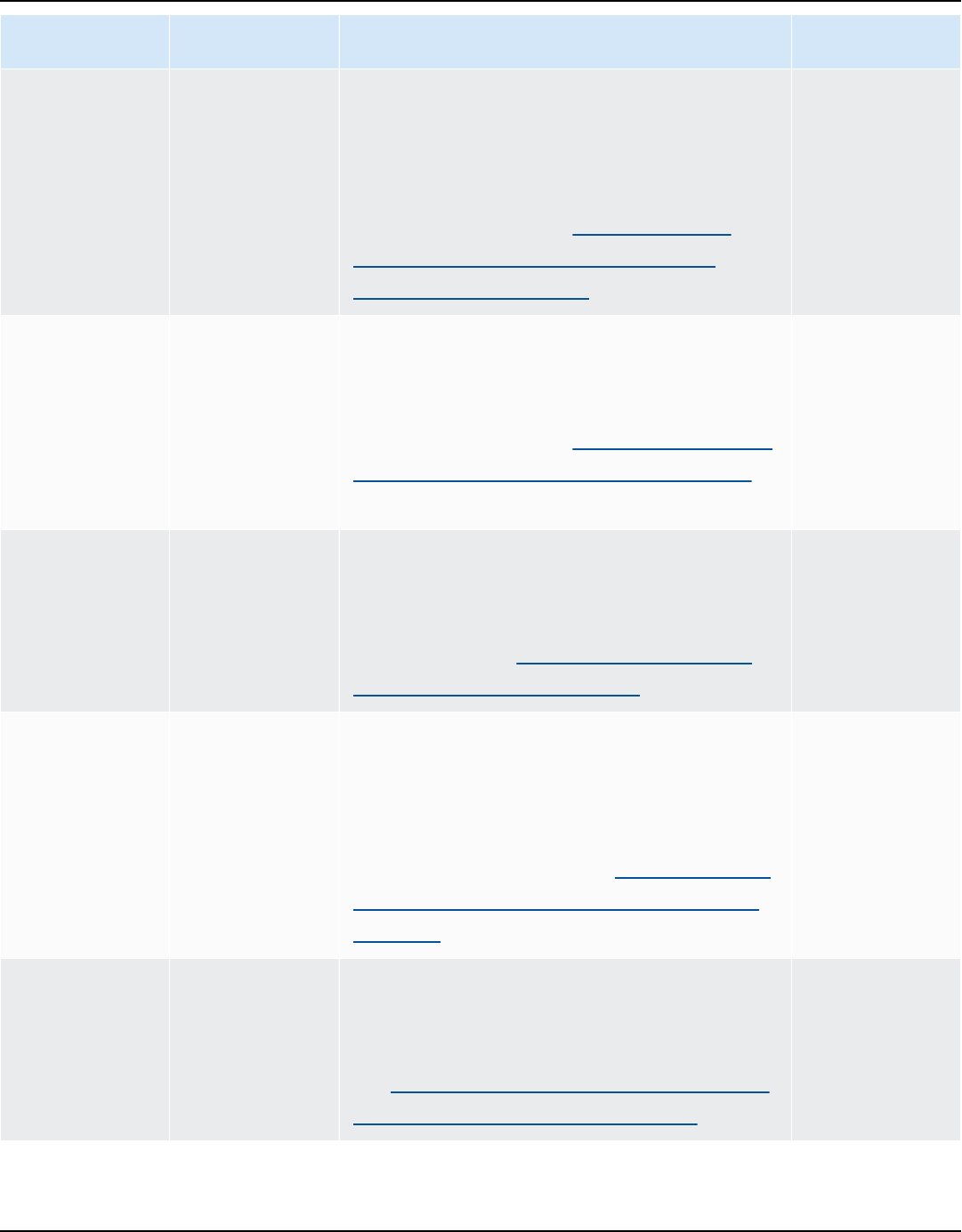

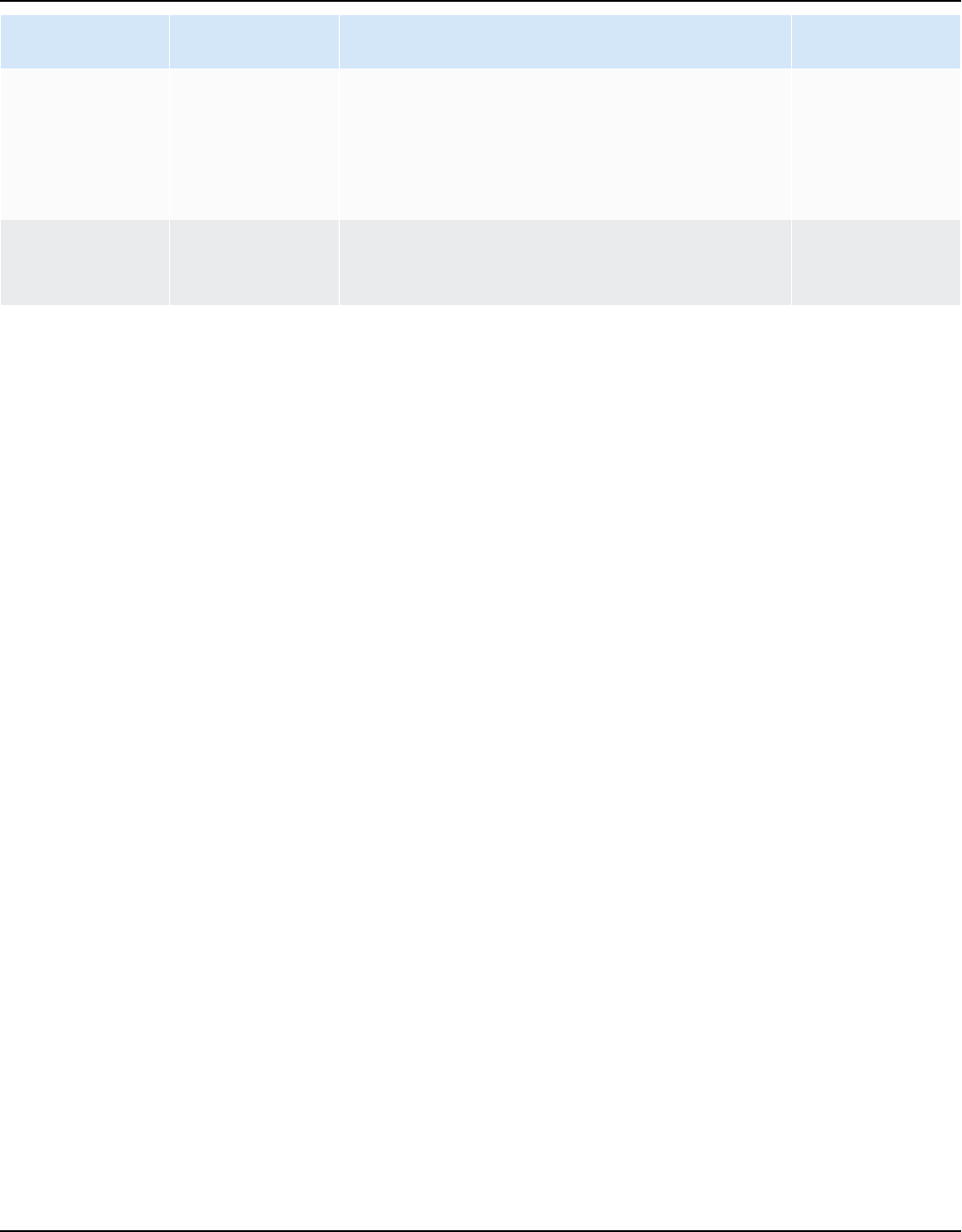

Database

engine

Drivers Download location

Amazon

Aurora

MySQL-

Compatible

Edition

mysql-connector-ja

va-5.1.6.jar

https://www.mysql.com/products/connector/

Amazon

Aurora

PostgreSQL-

Compatible

Edition

postgresql-42.2.19

.jar

https://jdbc.postgresql.org/download/postg

resql-42.2.19.jar

Amazon EMR

HiveJDBC42.jar

http://awssupportdatasvcs.com/bootstrap-act

ions/Simba/latest/

Amazon

Redshift

redshift-jdbc42-2.

1.0.9.jar

https://s3.amazonaws.com/redshift-

downloads/drivers/jdbc/2.1.0.9/redshift-j

dbc42-2.1.0.9.zip

Amazon

Redshift

Serverless

redshift-jdbc42-2.

1.0.9.jar

https://s3.amazonaws.com/redshift-

downloads/drivers/jdbc/2.1.0.9/redshift-j

dbc42-2.1.0.9.zip

Installing JDBC drivers Version 1.0.672 12

AWS Schema Conversion Tool User Guide

Database

engine

Drivers Download location

Apache Hive

hive-jdbc-2.3.4-st

andalone.jar

https://repo1.maven.org/maven2/org/apache

/hive/hive-jdbc/2.3.4/hive-jdbc-2.3.4-sta

ndalone.jar

Azure SQL

Database

mssql-jdbc-7.2.2.j

re11.jar

https://docs.microsoft.com/en-us/sql/conn

ect/jdbc/release-notes-for-the-jdbc-driver?

view=sql-server-ver15#72

Azure

Synapse

Analytics

mssql-jdbc-7.2.2.j

re11.jar

https://docs.microsoft.com/en-us/sql/conn

ect/jdbc/release-notes-for-the-jdbc-driver?

view=sql-server-ver15#72

Greenplum

Database

postgresql-42.2.19

.jar

https://jdbc.postgresql.org/download/postg

resql-42.2.19.jar

IBM Db2 for

z/OS

db2jcc-db2jcc4.jar

https://www.ibm.com/support/pages/db2-

jdbc-driver-versions-and-downloads-db2-zos

IBM Db2

LUW

db2jcc-db2jcc4.jar

https://www.ibm.com/support/pages/node/3

82667

MariaDB

mariadb-java-clien

t-2.4.1.jar

https://downloads.mariadb.com/Connectors/

java/connector-java-2.4.1/mariadb-java-clien

t-2.4.1.jar

Microsoft

SQL Server

mssql-jdbc-10.2.jar

https://docs.microsoft.com/en-us/sql/conn

ect/jdbc/download-microsoft-jdbc-driver-for-

sql-server?view=sql-server-ver15

MySQL

mysql-connector-ja

va-8.0.15.jar

https://dev.mysql.com/downloads/connector/

j/

Installing JDBC drivers Version 1.0.672 13

AWS Schema Conversion Tool User Guide

Database

engine

Drivers Download location

Netezza

nzjdbc.jar

Use the client tools software.

Download driver version

7.2.1, which is backwards

compatible with data

warehouse version 7.2.0.

http://www.ibm.com/support/knowle

dgecenter/SSULQD_7.2.1/com.ibm.nz.

datacon.doc/c_datacon_plg_overview.html

Oracle

ojdbc8.jar

Driver versions 8 and higher

are supported.

https://www.oracle.com/database/technologi

es/jdbc-ucp-122-downloads.html

PostgreSQL

postgresql-42.2.19

.jar

https://jdbc.postgresql.org/download/postg

resql-42.2.19.jar

SAP ASE

(Sybase ASE)

jconn4.jar

The jConnect JDBC driver

Snowflake

snowflake-jdbc-3.9

.2.jar

For more information, see

Downloading / Integrating the

JDBC Driver.

https://repo1.maven.org/maven2/net/

snowflake/snowflake-jdbc/3.9.2/snowflake-

jdbc-3.9.2.jar

Teradata

terajdbc4.jar

tdgssconfig.jar

For Teradata JDBC driver

version 16.20.00.11 and

higher, you don't need the

tdgssconfig.jar file.

https://downloads.teradata.com/download/

connectivity/jdbc-driver

Installing JDBC drivers Version 1.0.672 14

AWS Schema Conversion Tool User Guide

Database

engine

Drivers Download location

Vertica

vertica-jdbc-9.1.1

-0.jar