
December 8, 2022

Private Passenger Auto

Artificial Intelligence/Machine Learning

Survey Results

NAIC Staff Report

NAIC SURVEY TECHNICAL TEAM

Kris DeFrain, Director of Research and Actuarial Services

Dorothy L. Andrews, Senior Behavioral Data Scientist and Actuary

Roberto Perez Santiago, Property/Casualty Rate Modeling Actuary and Data Scientist

Justin Cox, Data Analyst III

Paula D. Harms, Center for Insurance Policy and Research (CIPR) Senior Research Analyst


Table of Contents

INTRODUCTION

BACKGROUND

GENERAL SECTION OF THE SURVEY

COMPANY OPERATION: CLAIMS

COMPANY OPERATION: FRAUD DETECTION

COMPANY OPERATION: MARKETING

COMPANY OPERATION: RATING

COMPANY OPERATION: UNDERWRITING

COMPANY OPERATION: LOSS PREVENTION

CUSTOMER DATA CORRECTION

GOVERNANCE

THIRD-PARTY DATA SOURCES AND MODELS

REGULATORS’ ACCESS TO DATA: DASHBOARD

CONCLUSION/NEXT STEPS

APPENDIX A: Guidance for Questions in Each Operational Area: Rating, Underwriting, Claims, Fraud Detection, Marketing, Loss Prevention

APPENDIX B: Definitions Specific to Claims

APPENDIX C: Definitions Specific to Fraud Detection

APPENDIX D: Definitions Specific to Marketing

APPENDIX E: Definitions Specific to Rating

APPENDIX F: Definitions Specific to Underwriting

APPENDIX G: Definitions Specific to Loss Prevention

APPENDIX H: Data Use Table (“Data Elements”) Definitions

APPENDIX I: Model Governance Definitions


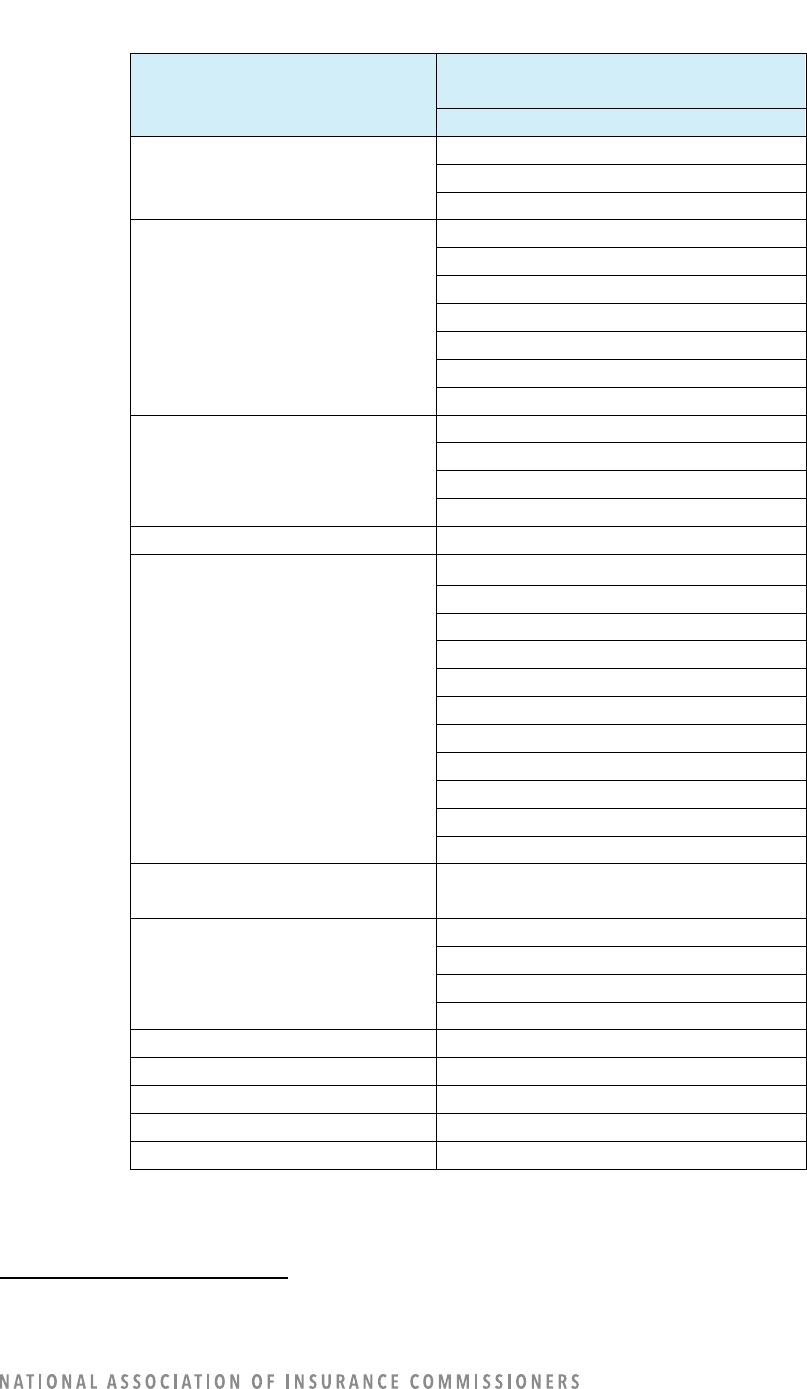

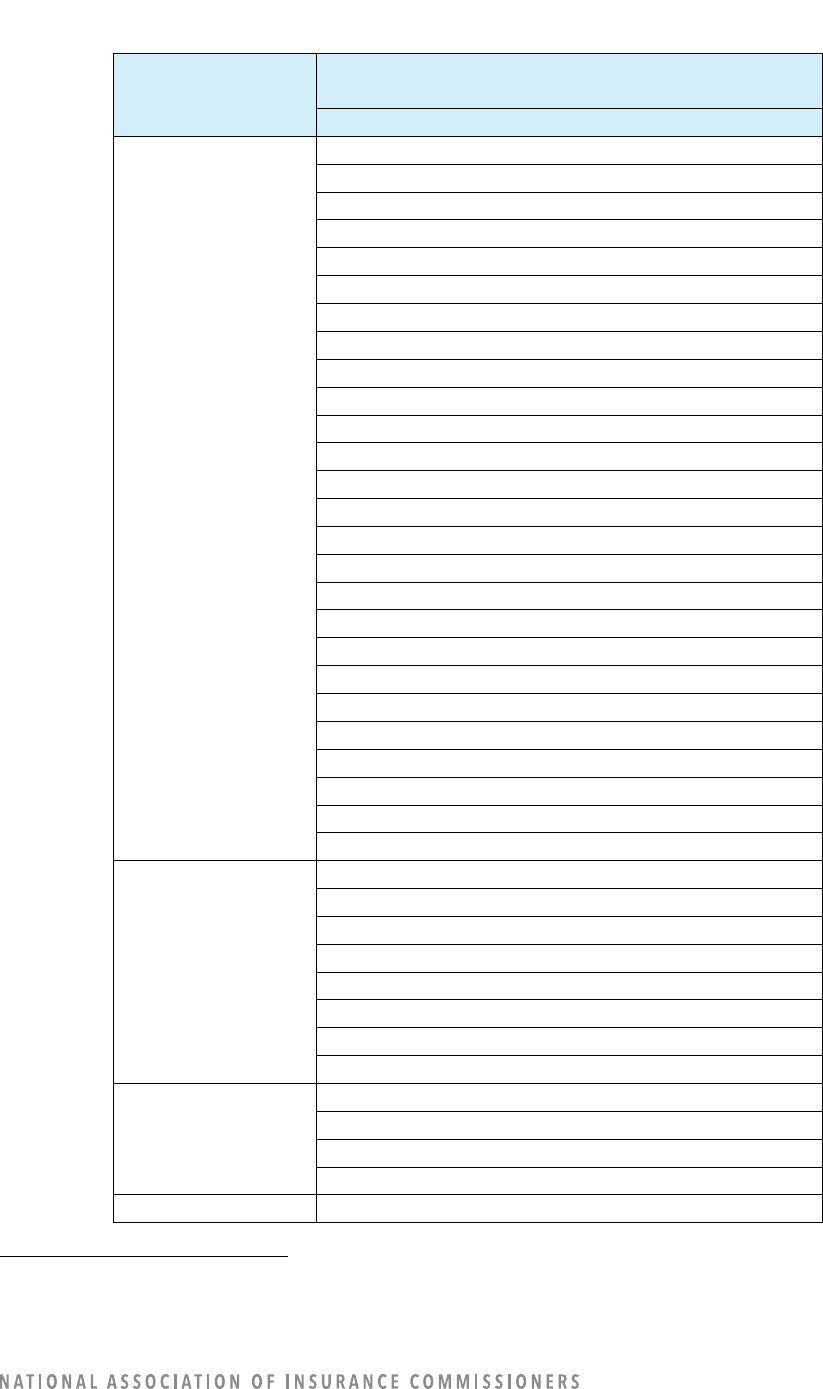

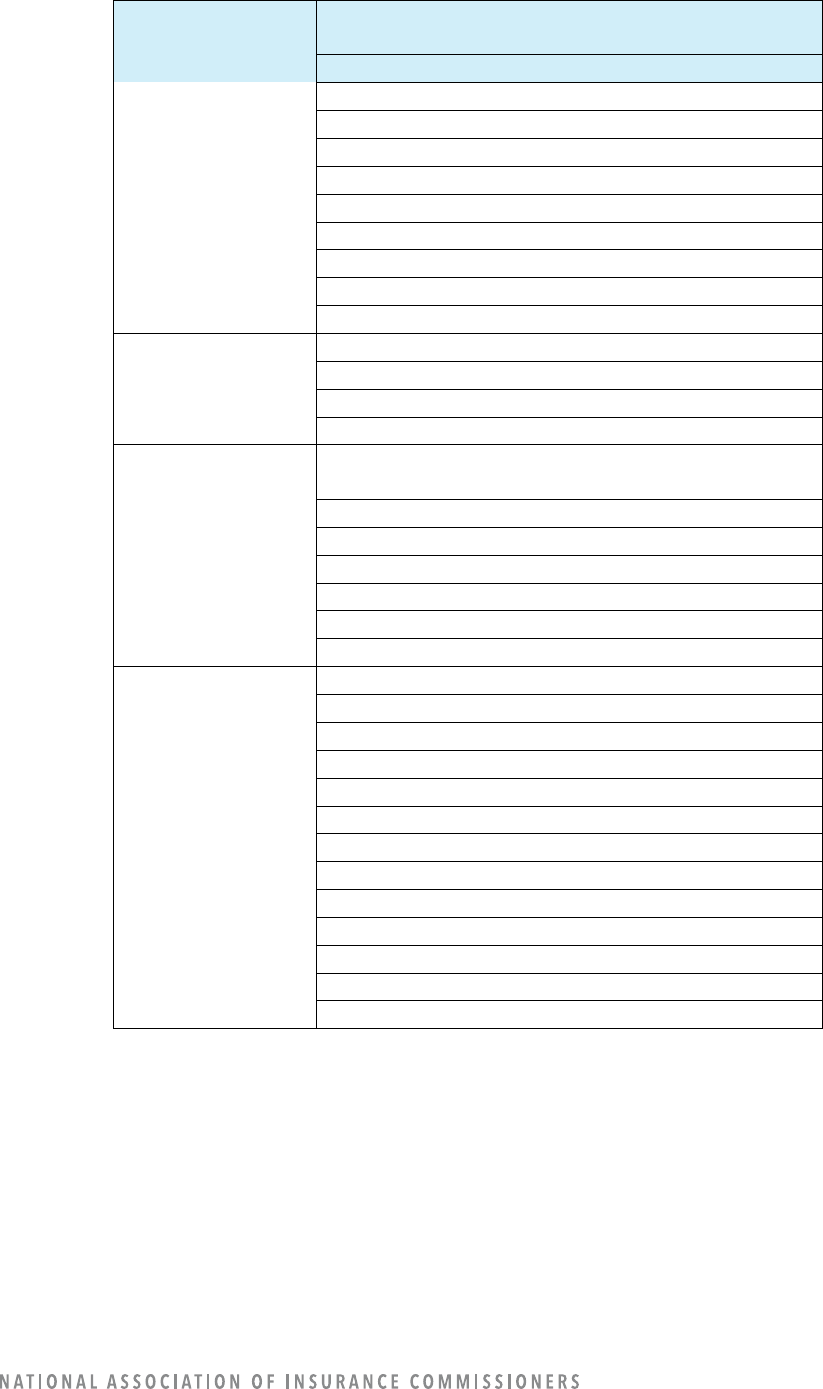

Index of Tables

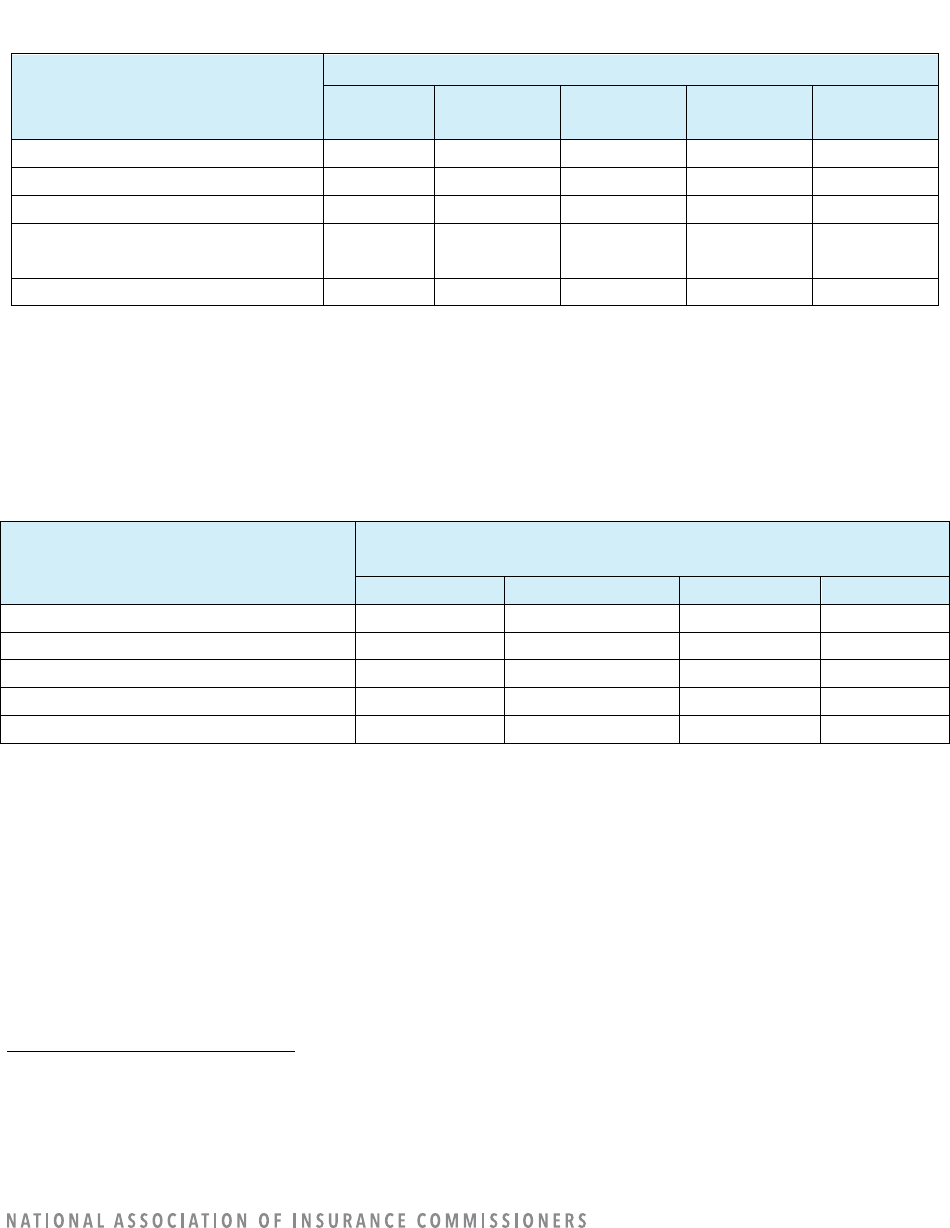

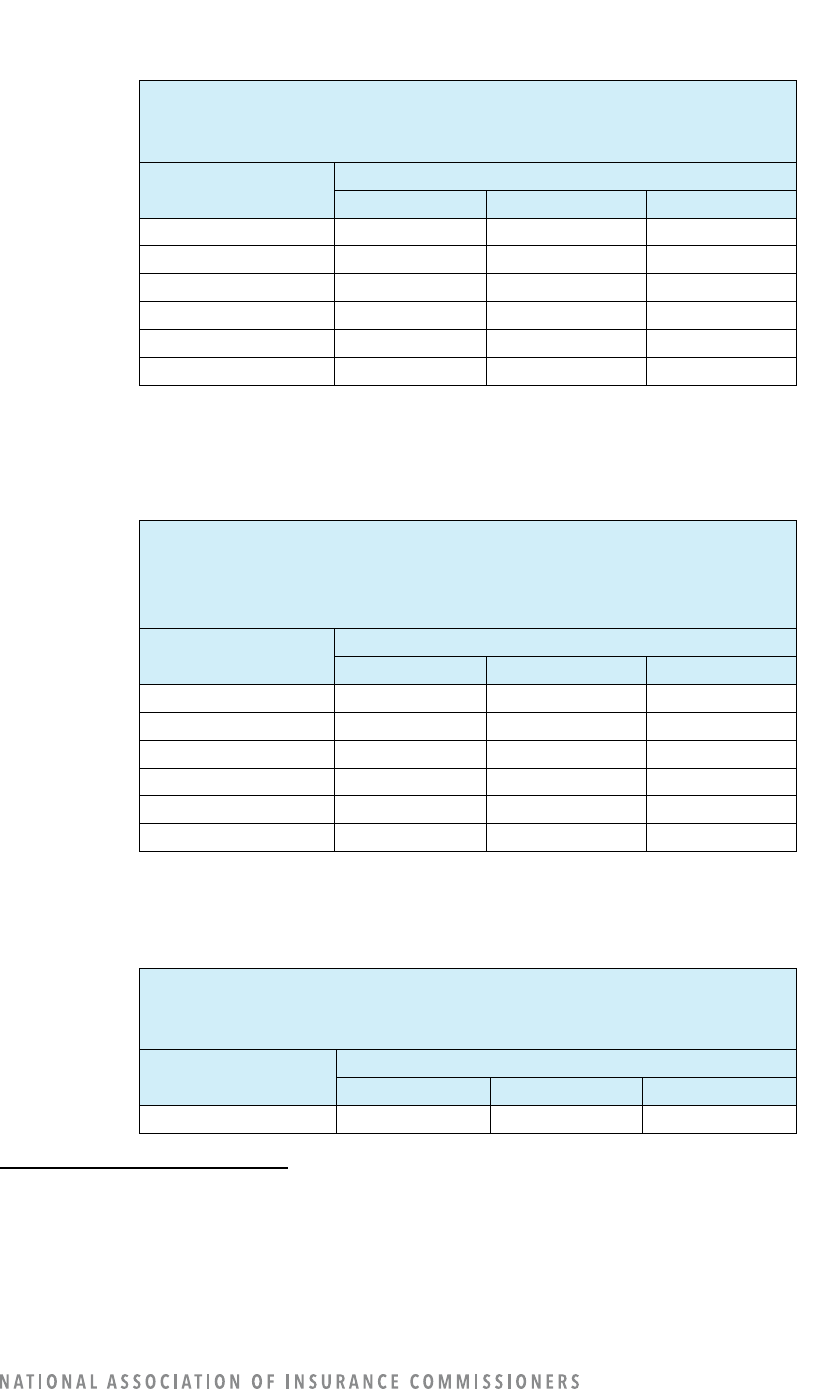

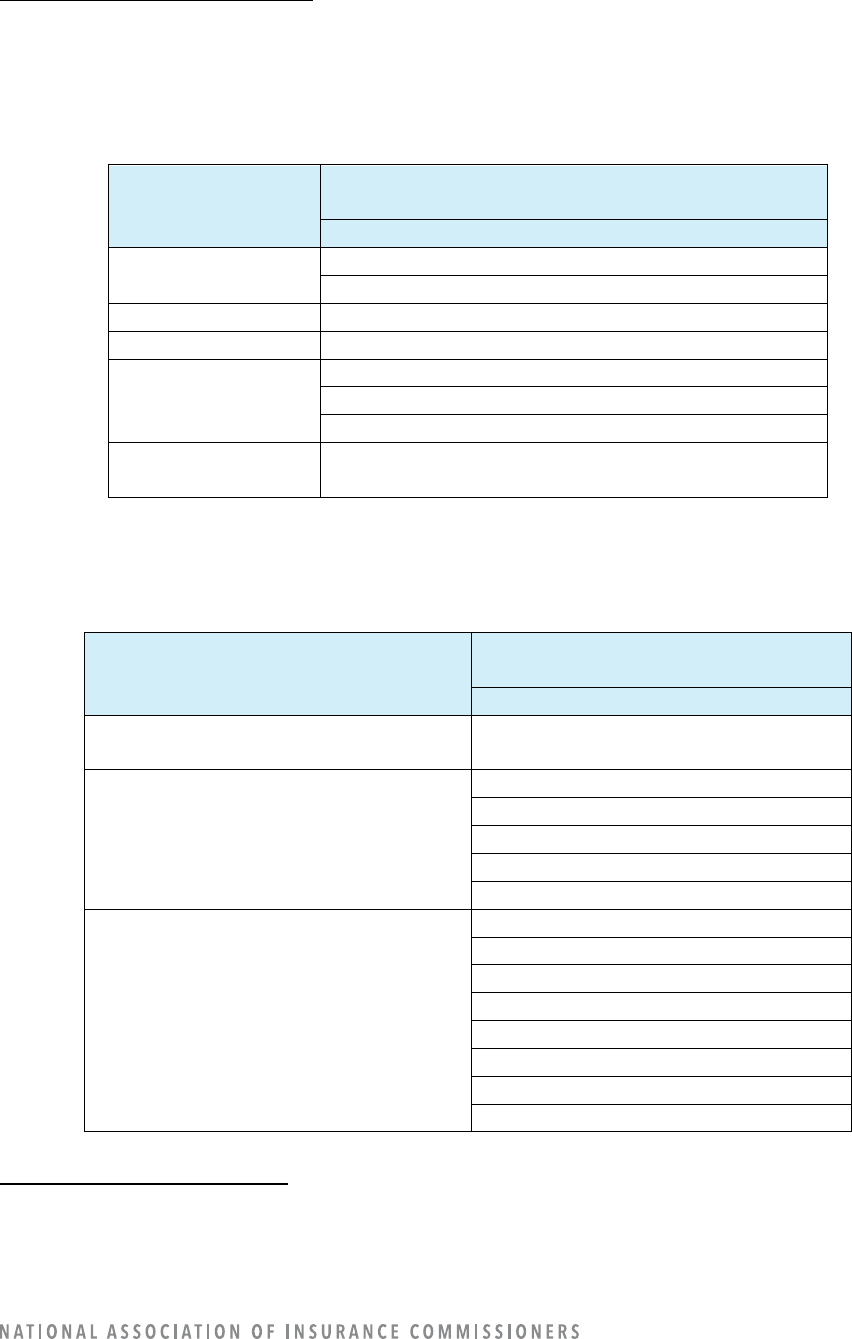

Table 1: Companies Using or Exploring the Use of AI/ML

Table 2: Companies’ Reasons for Not Using AI/ML

Table 3: Companies with Models in Use or Under Construction by Company Operation Area

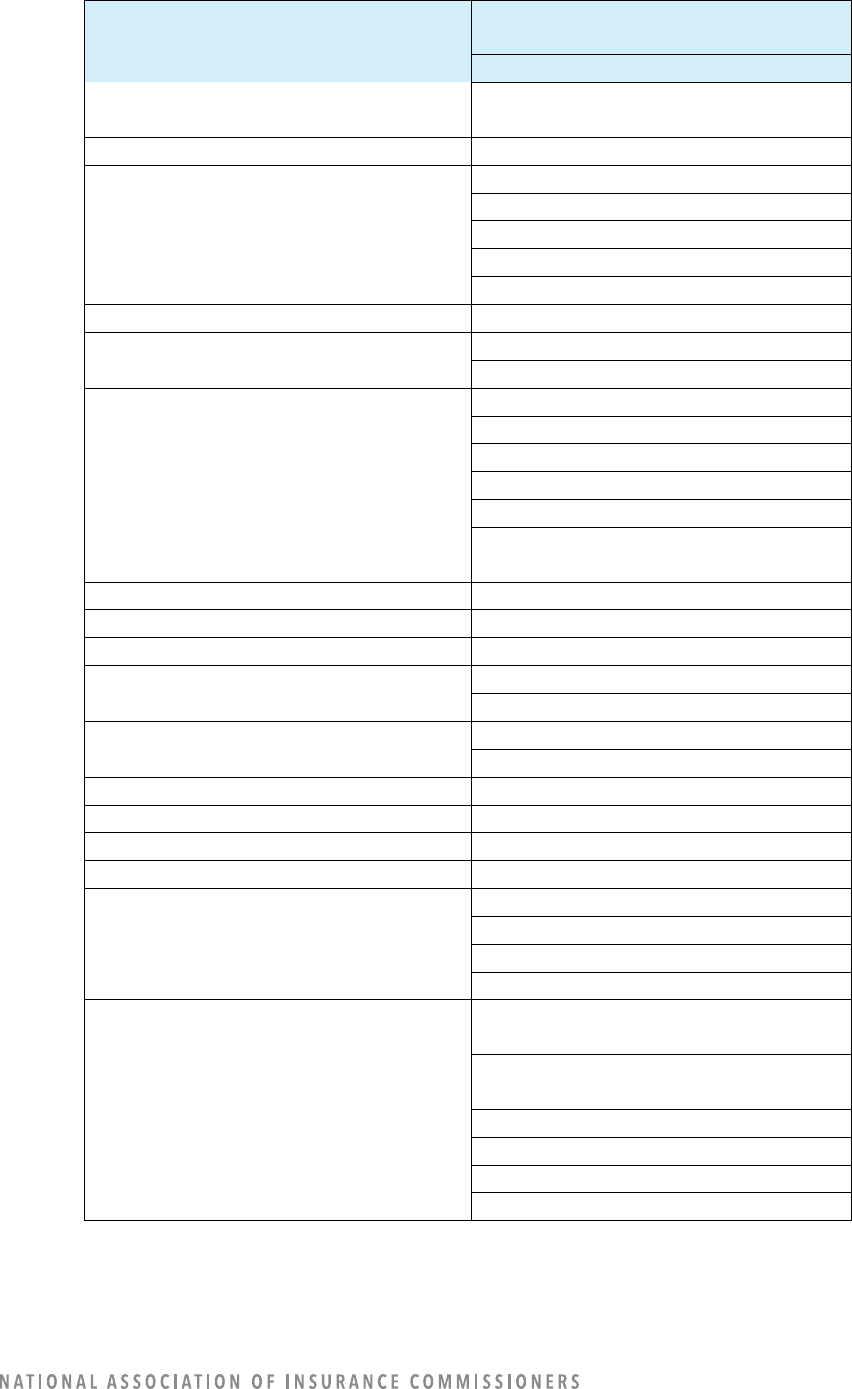

Table 4: Companies’ Use of Claims Models

Table 5: Level of Decision-Making by Use of Claims Models

Table 6: Claims Model Sources by Model Use

Table 7: Companies’ Use of Claims Data Elements

Table 8: Claims Model Sources (Internal vs. Third Party) by Data Elements

Table 9: Companies’ Use of Consumer or Other Type of Score as an Input for Claims Data Elements

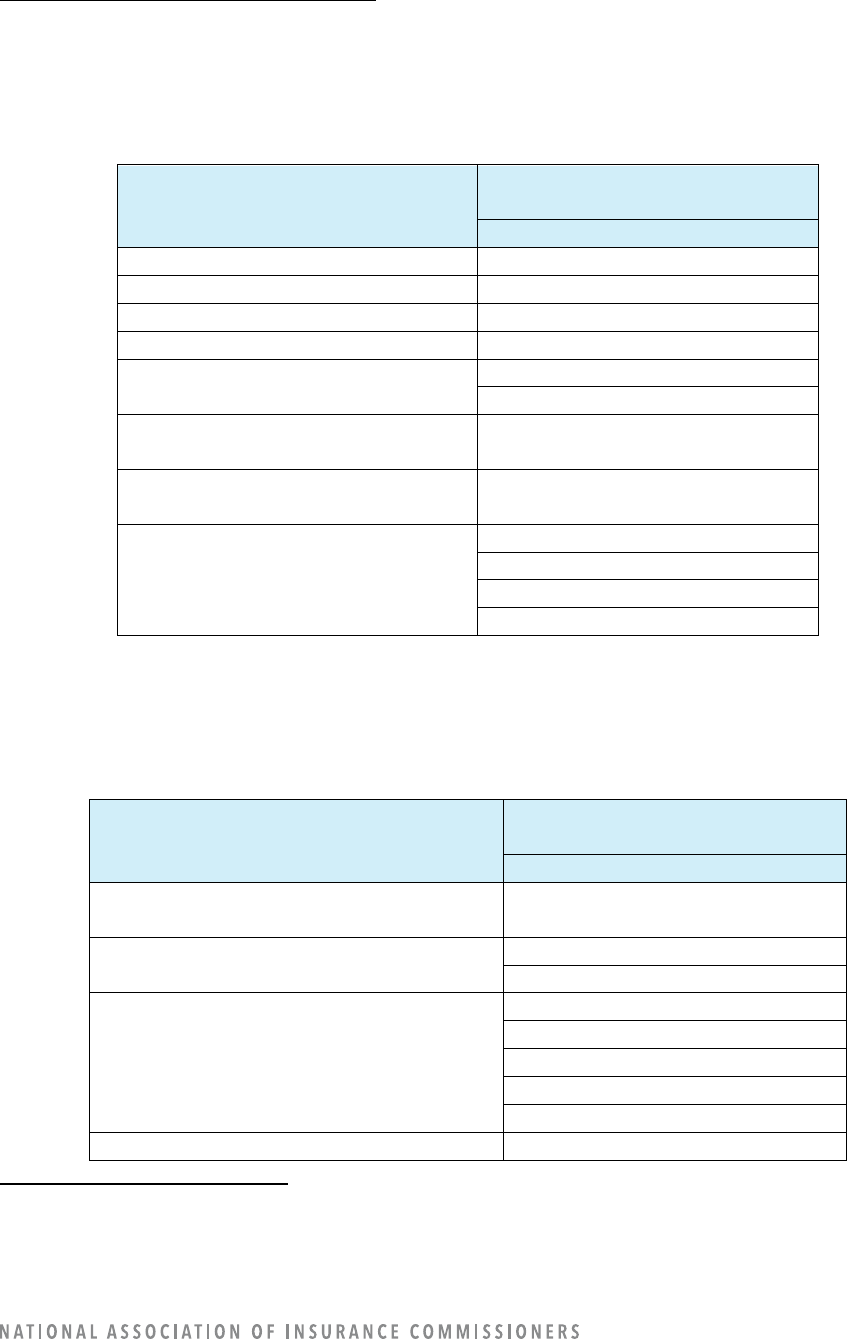

Table 10: Companies’ Use of Fraud Detection Models

Table 11: Level of Decision-Making by Use of Fraud Detection Models

Table 12: Fraud Detection Model Sources by Model Use

Table 13: Companies’ Use of Fraud Detection Data Elements

Table 14: Fraud Detection Model Sources (Internal vs. Third Party) by Data Elements

Table 15: Companies’ Use of Consumer or Other Type of Score as an Input for Fraud Detection Data Elements

Table 16: Companies’ Use of Marketing Models

Table 17: Level of Decision-Making by Use of Marketing Models

Table 18: Marketing Model Sources by Model Use

Table 19: Companies’ Use of Marketing Data Elements

Table 20: Marketing Model Sources (Internal vs. Third Party) by Data Elements

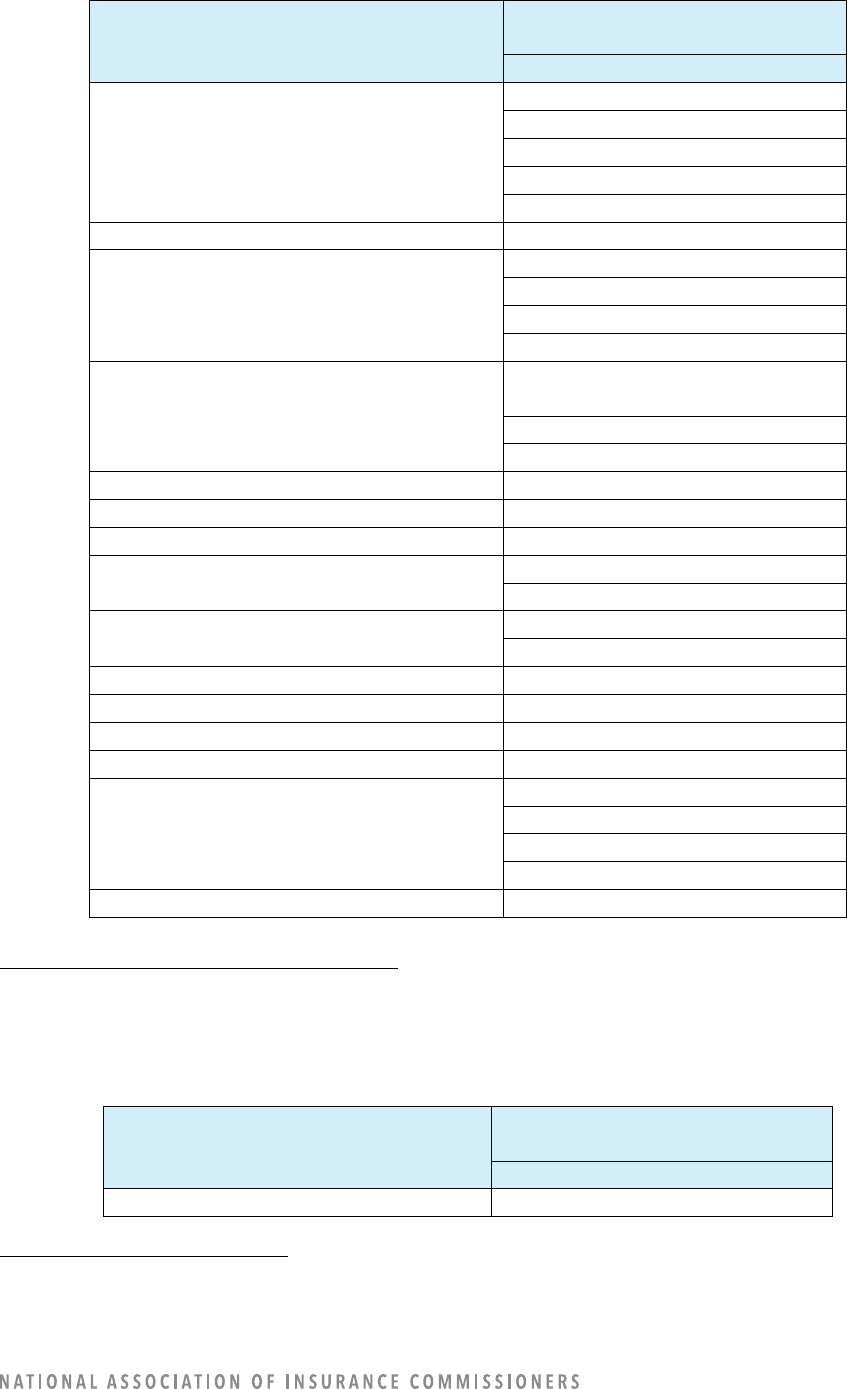

Table 22: Companies’ Use of Rating Models

Table 23: Level of Decision-Making by Use of Rating Models

Table 24: Rating Model Sources by Model Use

Table 25: Companies’ Use of Rating Data Elements

Table 26: Rating Model Sources (Internal vs. Third Party) by Data Elements

Table 27: Companies’ Use of Consumer or Other Type of Score as an Input for Rating Data Elements

Table 28: Companies’ Use of Underwriting Models

Table 29: Level of Decision-Making by Use of Underwriting Models

Table 30: Underwriting Model Sources by Model Use

Table 31: Companies’ Use of Underwriting Data Elements

Table 32: Underwriting Model Sources (Internal vs. Third Party) by Data Elements

Table 33: Companies’ Use of Consumer or Other Type of Score as an Input for Underwriting Data Elements

Table 34: Companies’ Use of Claims Models

Table 35: Level of Decision-Making by Use of Loss Prevention Models

Table 36: Loss Prevention Model Sources by Model Use

Table 37: Companies’ Use of Loss Prevention Data Elements

Table 38: Loss Prevention Model Sources (Internal vs. Third Party) by Data Elements

Table 39: Companies’ Use of Consumer or Other Type of Score as an Input for Loss Prevention Data Elements

Table 40: Companies’ Disclosure to Consumers about the Data Elements by Insurer Operation Area

Table 41: Companies’ Disclosure to Consumers about the Purposes of Data Elements by Insurer Operation Area

Table 42: Consumers’ Ability to Correct Data by Insurer Operation Area

Table 43: Governance Documentation of NAIC AI Principle: Fairness and Ethics Considerations

Table 44: Governance Documentation of NAIC AI Principle: Accountability for Data Algorithms’ Compliance with Laws as well as Intended and Unintended Impacts

Table 45: Governance Documentation of NAIC AI Principle: Appropriate Resources and Knowledge Involved to Ensure Compliance with Laws Including those Related to Unfair Discrimination

Table 46: Governance Documentation of NAIC AI Principle: Ensure Transparency with Appropriate Disclosures Including Notice to Consumers Specific to Data Being Used and Methods for Appeal and Recourse Related to Inaccurate Data

Table 47: Governance Documentation of NAIC AI Principle: AI Systems are Secure, Safe and Robust including Decision Traceability and Security and Privacy Risk Protections

Table 48: Companies Following Other Existing Standards or Guidance in Regard to a Governance Framework

Table 49: Source (Internal or External) of Other Existing Standards or Guidance in Regard to a Governance Framework

Table 50: Existing Other Standards or Guidance in Regard to a Governance Framework

Table 51: Third Parties’ Claims Models Used by Insurers

Table 52: Third Parties’ Claims Data Element Sources Used by Insurers

Table 53: Third Parties’ Fraud Detection Models Used by Insurers

Table 54: Third Party Fraud Detection Data Element Sources Used by Insurers

Table 55: Third Parties’ Marketing Models Used by Insurers

Table 56: Third Party Marketing Data Element Sources Used by Insurers

Table 57: Third Parties’ Rating Models Used by Insurers

Table 58: Third Party Rating Data Element Sources Used by Insurers

Table 59: Third Parties’ Underwriting Models Used by Insurers

Table 60: Third Party Underwriting Data Element Sources Used by Insurers

Table 61: Third Parties’ Loss Prevention Models Used by Insurers

Table 62: Third Party Loss Prevention Data Element Sources Used by Insurers


INTRODUCTION

Purpose of the Survey

At the outset of the Artificial Intelligence (AI)/Machine Learning (ML) surveys, the predecessor to the Big

Data and Artificial Intelligence (H) Working Group defined five key objectives. Regulators want to: 1) learn

directly from the industry about what is happening in this space; 2) get a sense of the current level of risk

and exposure and whether or how the industry is managing or mitigating that risk; 3) develop information

for trending, such as how the risk is evolving over time, and the industry’s responsive actions; 4) inform a

meaningful and useful regulatory approach, framework, and/or strategy for overseeing and monitoring

this activity; and 5) learn from prior surveys to inform and improve future surveys.

This Private Passenger Auto (PPA) survey is expected to help regulators with 1) consumer protections;

and 2) identifying areas where regulators might expect companies engaged in this type of activity to be

actively and intentionally putting processes and procedures in place to meet, or at least consider, the

expectations laid out in the NAIC’s AI Principles.

This initial survey was developed to document industry observations in the PPA insurance market

regarding use of data and AI/ML, gain insight from open-ended questions, get a good sense of the current

level of risk and exposure, and learn what companies are doing to mitigate and/or manage their risk and

exposure.

Purpose of This Report

With the tremendous amount of data submitted for this survey, the subject matter expert (SME) group

asked NAIC technical staff to assist in conducting a thorough analysis. The survey analysis team was asked

to evaluate the results, provide data analysis, and investigate potential inaccuracies in the data. The team

was specifically asked to investigate what types of data are being used by companies in their AI/ML

models; evaluate third-party AI/ML model and data use; explore levels of governance; and evaluate

transparency, consumer disclosures, and potential consumer actions to correct data.

Goals of the Private Passenger Auto Survey

1. Analyze industry use of artificial intelligence (AI)/machine learning (ML).

2. Identify industry’s risk and exposure and mitigation of model risk.

3. Calculate trends.

4. Gather background for regulatory approach/framework.

5. Inform/improve future surveys.


BACKGROUND

The PPA survey was conducted under market conduct examination authority of nine states: Connecticut,

Illinois, Iowa, Louisiana, Nevada, North Dakota, Pennsylvania, Rhode Island, and Wisconsin. SMEs from

these states opted to limit the survey request to only larger companies, defined as those PPA writers with

more than $75 million in 2020 direct written premium. The survey call letter was distributed on Sept. 28,

2021, and survey responses were requested by Oct. 28, 2021. A total of 193 responses were received, and

almost 90% of those indicated they are doing something pertaining to AI/ML.

Survey Web Page

The survey template, filing documentation, frequently asked questions (FAQ), and a link to the submission

application can be found on the PPA AI/ML survey web page.

Surveyed Companies and Requesting States

The PPA insurance companies with at least $75 million in 2020 direct written premium transacting ongoing

business in at least one of the following states were requested to provide survey responses within 30

days: Connecticut, Illinois, Iowa, Louisiana, Nevada, North Dakota, Pennsylvania, Rhode Island, or

Wisconsin (requesting states).

Nine states conducted a market conduct analysis of various property/casualty (P/C) companies to:

• Gain a better understanding of the insurance industry’s use and governance of big data and

AI/ML.

• Seek information that could aid in the development of guidance or a potential regulatory

framework to support the insurance industry’s use of big data and AI/ML.

• Inform as to the current and planned business practices of the company.

The requesting states agreed the collected data will not be used to evaluate or determine the company’s

compliance with applicable laws and regulations.

Regulatory Subject Matter Experts

For each of the requesting states, the following SMEs created the survey and will communicate the survey

responses to the Big Data and Artificial Intelligence (H) Working Group.

CT: George Bradner

IL: Erica Weyhenmeyer

IA: Andria Seip

LA: Nichole Torblaa

ND: Mike Andring and Chris Aufenthie

NV: Gennady Stolyarov

PA: Michael McKenney

RI: Matt Gendron

WI: Timothy Cornelius

The following NAIC staff assisted the SMEs with survey development, survey distribution, and data

collection: Denise Matthews, Tim Mullen, Teresa Cooper, Paula D. Harms, and Justin Cox.


Artificial Intelligence/Machine Learning Definition

The definition of AI/ML was provided on the PPA AI/ML survey web site with the following link:

PPA AI/ML Filing Guidance & Definitions (Version 2021.0.0).

“Definition of Artificial Intelligence / Machine Learning (AI/ML) for Survey – Applicable to All Sections

AI/ML describes an automated process in which a system begins recognizing patterns without being

specifically programmed to achieve a predetermined result. This is different from a standard

algorithm in that an algorithm is a process or set of rules executed to solve an equation or problem in

a predetermined fashion. Evolving algorithms are considered a subset of AI/ML.

Artificial Intelligence/Machine Learning Systems include:

• Systems that adapt and adjust to new data and experience without manual human

intervention.

• Systems that arrive at results for which the outcomes and the stepwise approach toward the

outcomes were not configured in advance by a human programmer.

• Systems that dynamically respond to conditions in the external environment without the

specific nature of such responses being known in advance to the designers of the systems.

• Systems that utilize neural networks and/or deep-learning algorithms, such as supervised,

semi-supervised, and unsupervised learning algorithms.

• Systems that engage in automatic speech recognition, facial recognition, image recognition,

text recognition, natural language processing, generation of customer-specific

recommendations, automated customer communications (e.g., chatbots with non-preprogrammed

prompts), autonomous or semi-autonomous vehicle operation or data

gathering, or any other approach that does not require either preprogramming or a manual

human intervention in every instance of an action or decision.

• Systems that automatically generate adaptive responses based on interactions with a

consumer or third party.

• Systems that determine which data elements to rely upon, in a non-preprogrammed fashion,

among a variety of possible alternatives.

Artificial Intelligence/Machine Learning Systems exclude:

• Static “scorecards” that deterministically map consumer or other risk characteristics to

treatments or decisions. (However, an AI/ML system may use the output of such static

“scorecards” as input data for the AI/ML system to consider.)

• Systems with solely preprogrammed decision rules. (e.g., “If A, then B” applied invariably in

all situations).

• Tables of point or factor assignments in rating plans.

• Static ratemaking and/or predictive-modeling methodologies, including linear regression,

generalized linear modeling (GLM), or generalized additive modeling (GAM). Purely

informational static databases, such as databases used to obtain reference amounts for claim

settlements, or static databases pertaining to consumer characteristics or experience,

regardless of the amount of information in the database. However, if AI/ML is used to create

a static predictive model, that AI/ML system is considered within the scope of this survey.


• Deterministic “phone trees” that navigate consumers through prerecorded voice prompts.

• Any approach that a company could have realistically utilized in the year 2000 or prior.”

A key decision affecting interpretation of results was the definition of AI/ML for purposes of the survey.

The SME group drafted the AI/ML definition to exclude some methods, such as linear regression,

commonly used models such as GLMs and GAMs, and any approach that a company could have realistically

used in the year 2000 or prior. The SMEs developed the AI/ML definition to focus on the “more advanced”

models. Regulators noted they have extensive experience reviewing the older models used for rating,

having completed the NAIC’s 2020 white paper Regulatory Review of Predictive Models and having

conducted numerous training and educational events.

This definition resulted in approximately 80% of the models used in rating, based on the types of models

submitted to the NAIC’s rate model review team, being excluded from the survey results. We have no

information about the impact of this definition on the reporting of models for companies’ non-rating

operations. While there is some possibility of a mixed bag of data due to using a definition of AI/ML that

is not academically accepted, the SME regulators experienced with rating models said the answers appear

to reflect the requested definition accordingly. However, after the survey results were partially revealed,

Hartford employees said they are aware of the state of the AI/ML usage in the insurance industry and

believe the number of models reported exceeds what would be expected had the survey’s AI/ML definition been

used by all reporting companies. This position is speculation and cannot be proven with the available data.

Regulators would need to delve deeper by asking the companies whether the definition was consistently

used across company operations.

Confidentiality

The individual company results are confidential. Some combined results have been publicly presented at

Big Data and Artificial Intelligence (H) Working Group meetings and are presented in this report.

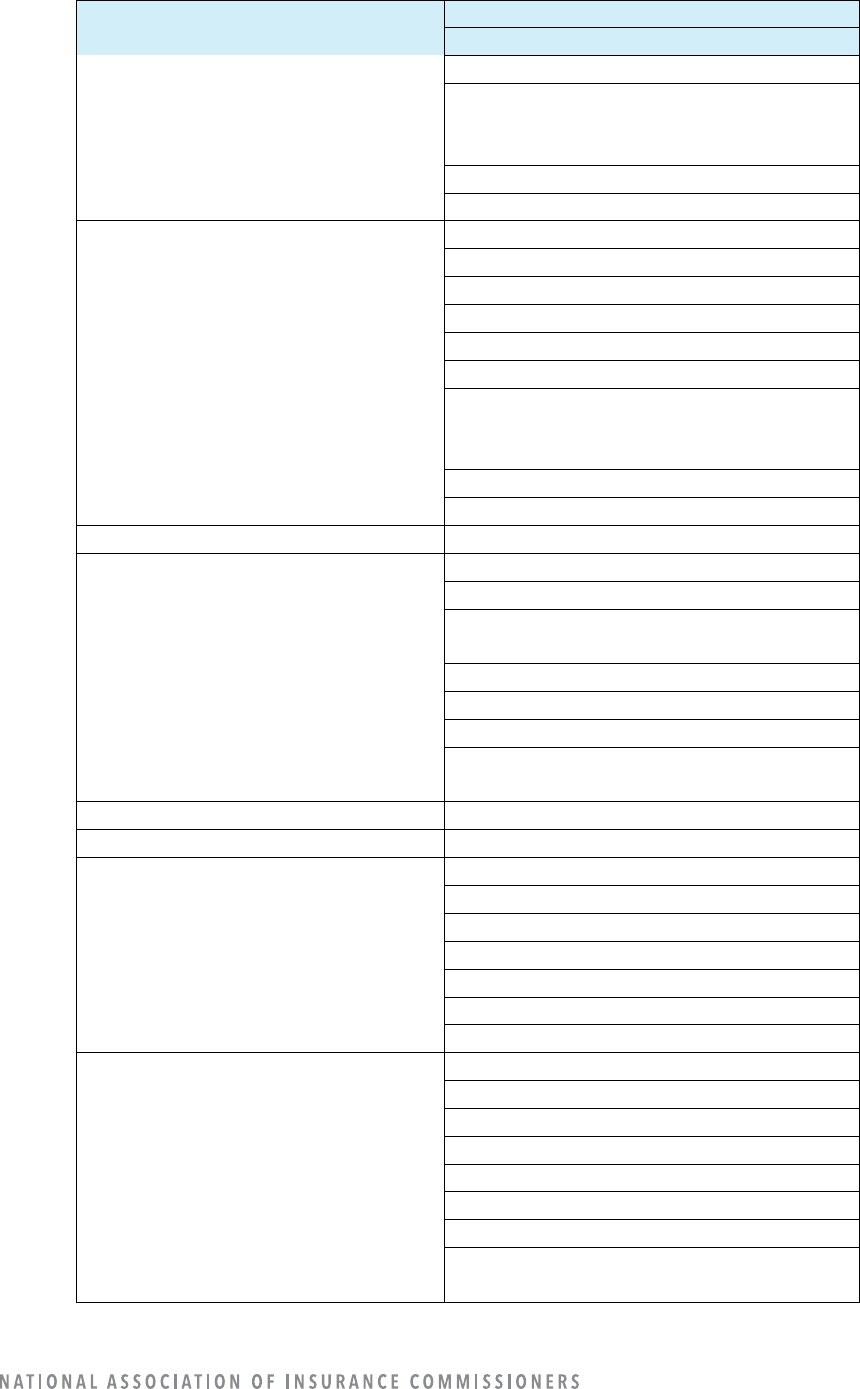

GENERAL SECTION OF THE SURVEY

Out of 193 companies that completed the survey, 169 companies currently use, plan to use, or plan to

explore using AI / ML as defined for this survey. This equates to 88.6% of reporting companies. (Refer to

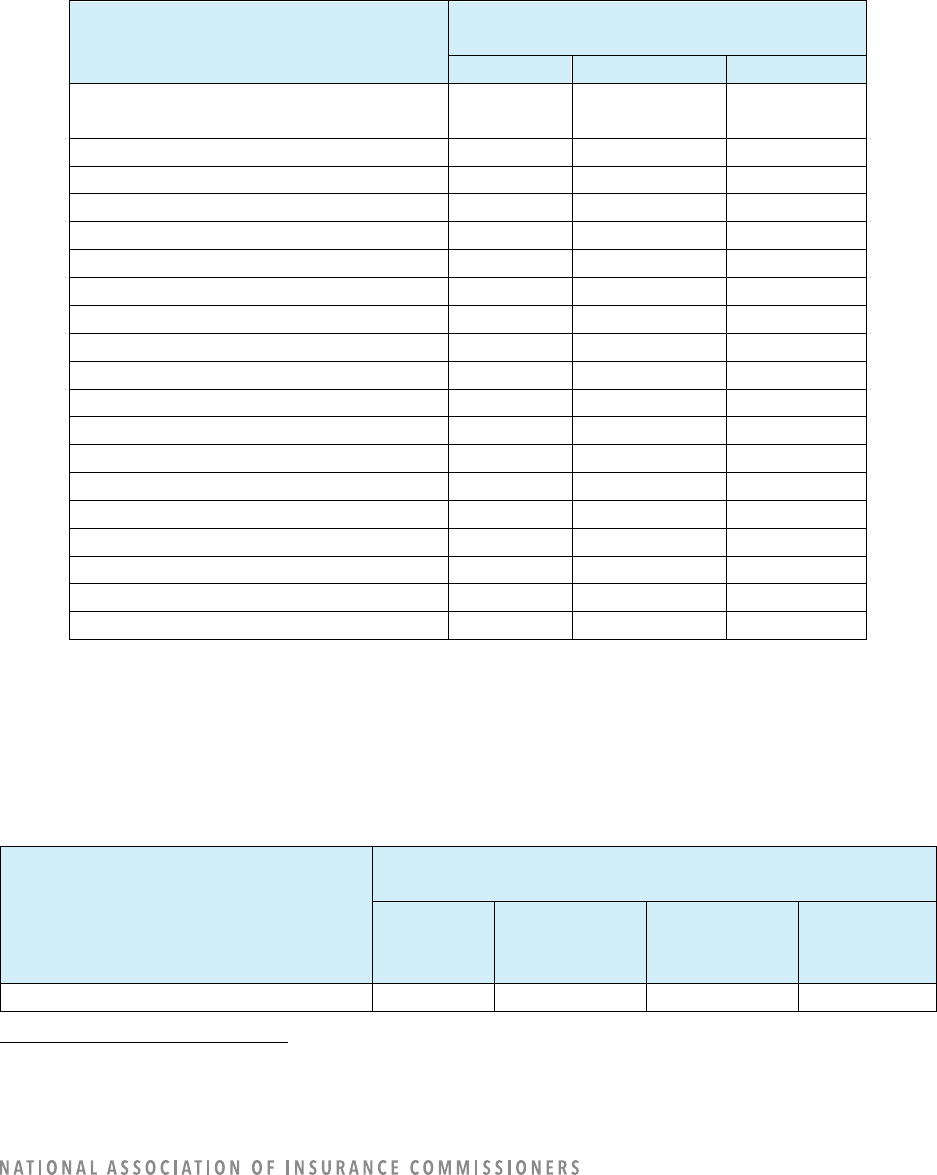

Table 1.)

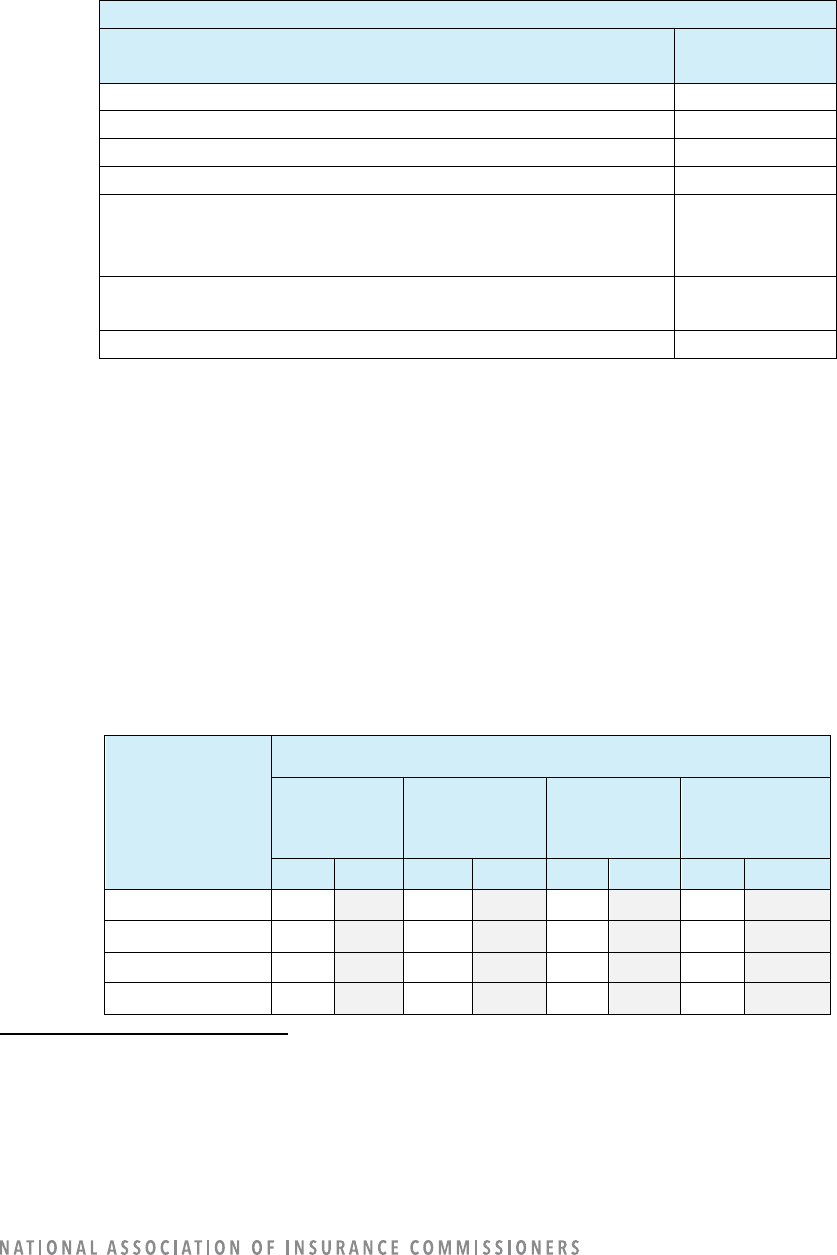

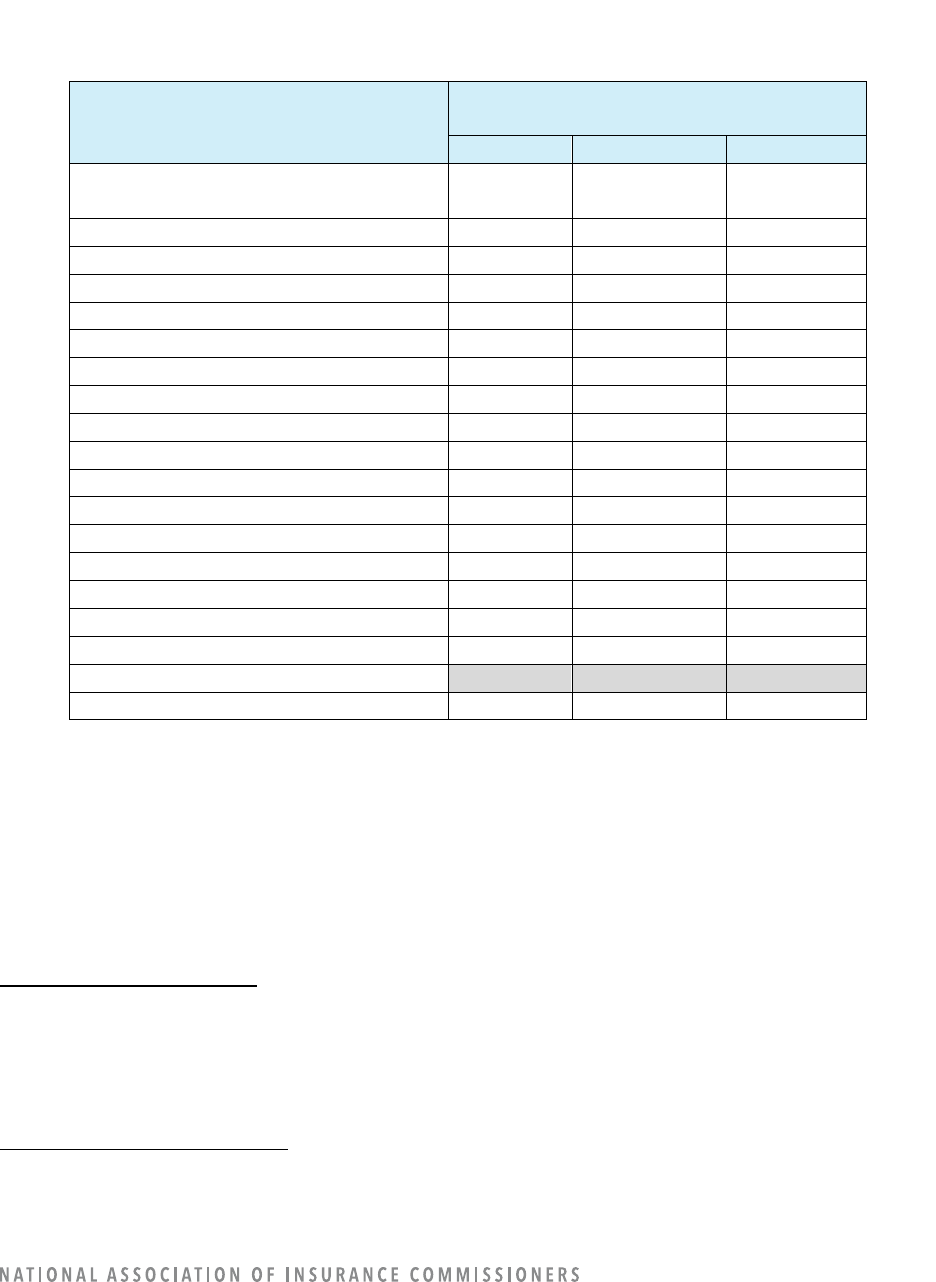

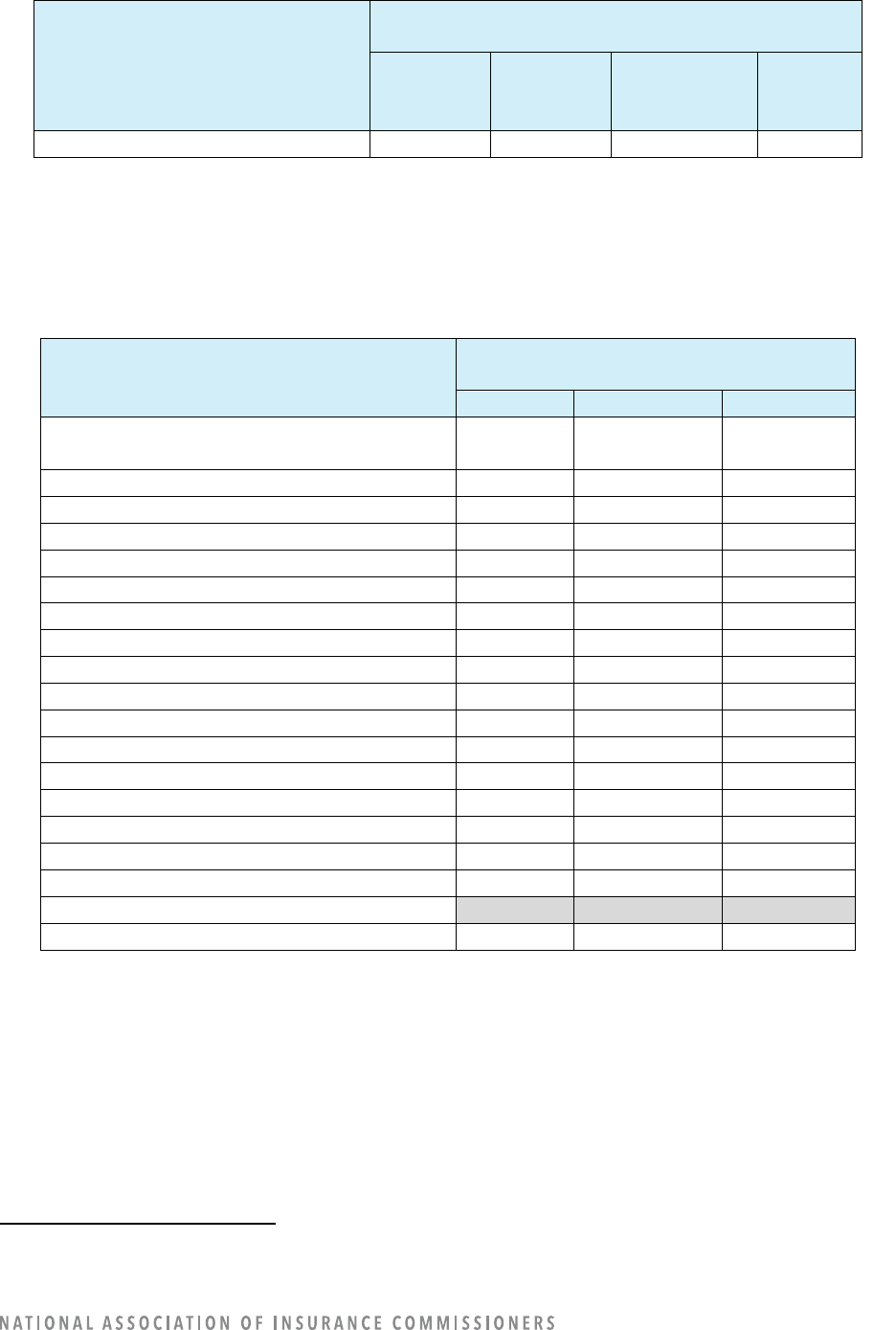

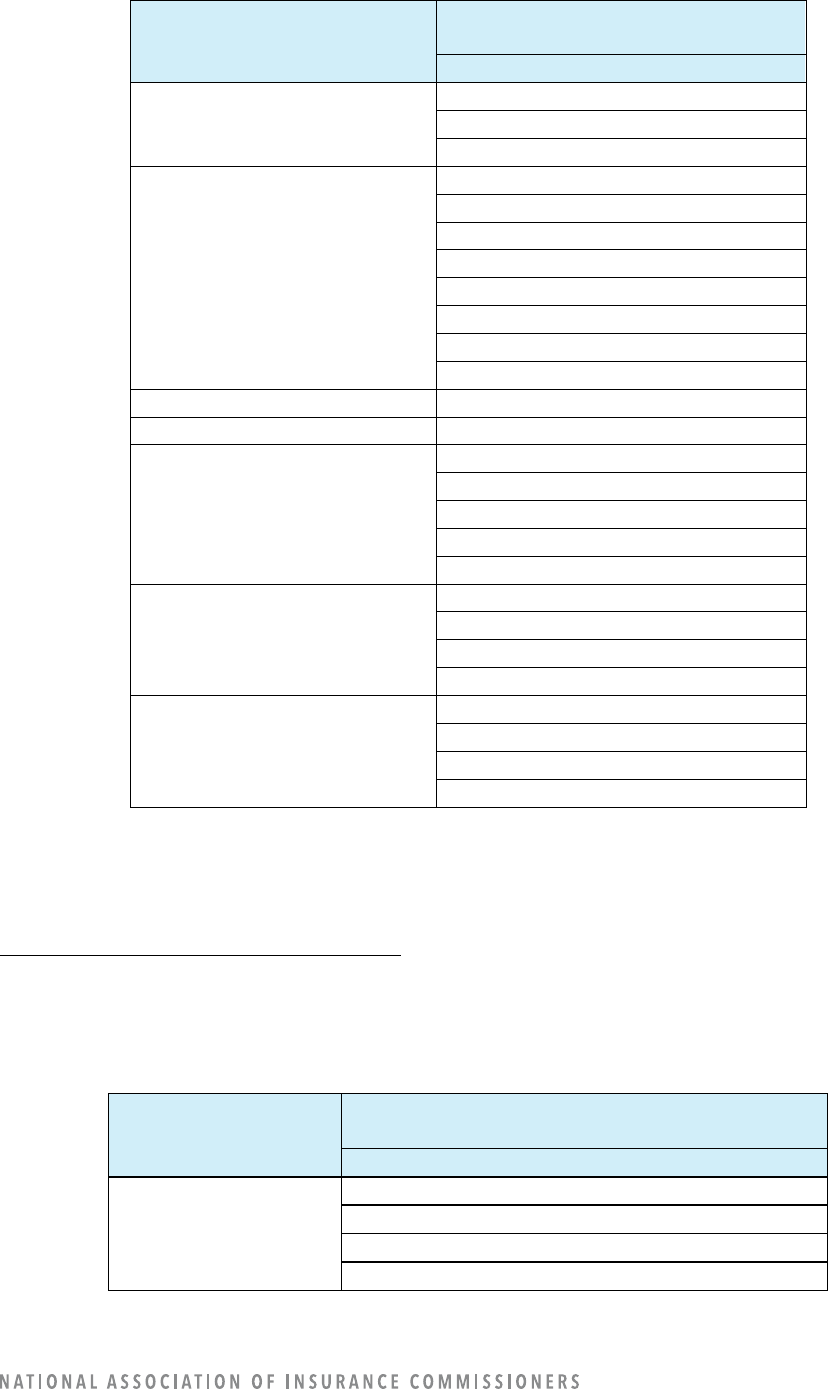

Table 1: Companies Using or Exploring the Use of AI/ML

| | Number of Companies Planning to Use or Explore Using AI/ML |
|---|---|
| Yes | 169 |
| No | 24 |
| Total | 193 |
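As a quick arithmetic check, the share of respondents using or exploring AI/ML can be recomputed from the Table 1 counts. This is a minimal sketch; the variable names are illustrative and not part of the survey.

```python
# Counts taken from Table 1; names are illustrative only.
using_or_exploring = 169
not_using = 24

total = using_or_exploring + not_using
share = 100 * using_or_exploring / total

print(f"{total} respondents, {share:.1f}% using or exploring AI/ML")
```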

The 24 companies that indicated they had no plan to use or explore use of AI/ML also provided their

reason(s) why, with the most often selected reasons being: 1) no compelling business reason; and 2) lack

of resources and expertise. In addition to the options listed in the survey and shown in Table 2, a few

companies wrote in additional reasons. One company said it was not convinced AI/ML would yield a better risk


selection and/or product pricing result. Three companies said they use preconfigured programming in

their business processes. One company said it does not currently have policies in the requesting states.

Table 2: Companies’ Reasons for Not Using AI/ML

| If not using AI/ML, why? (Options listed in the survey) | Number of Companies |
|---|---|
| No compelling business reason | 10 |
| Waiting for regulatory guidance | 6 |
| Lack of resources and expertise | 9 |
| Lack of reliable data and associated security risk | 6 |
| Reliance on legacy systems requiring IT (Information Technology), data, and technology system upgrade before starting AI/ML initiatives | 7 |
| Waiting on the availability of a third-party vendor product/service | 1 |
| Risk not commensurate with current strategy or appetite | 4 |

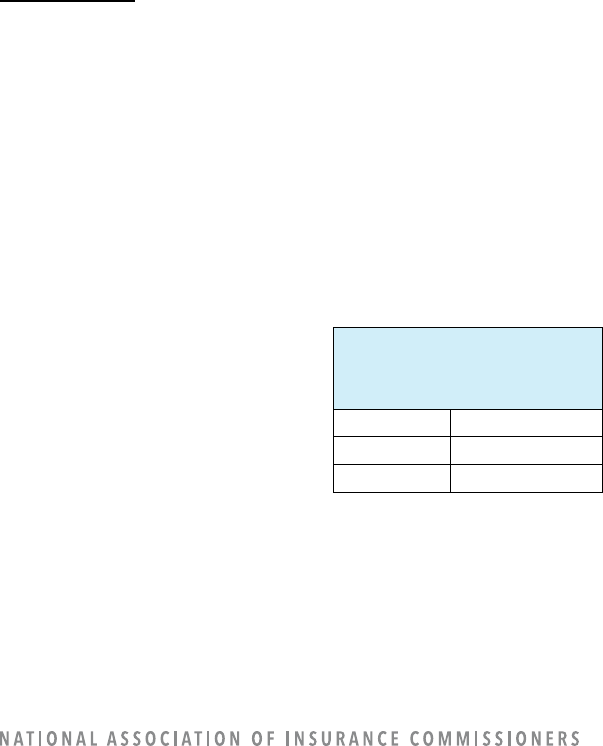

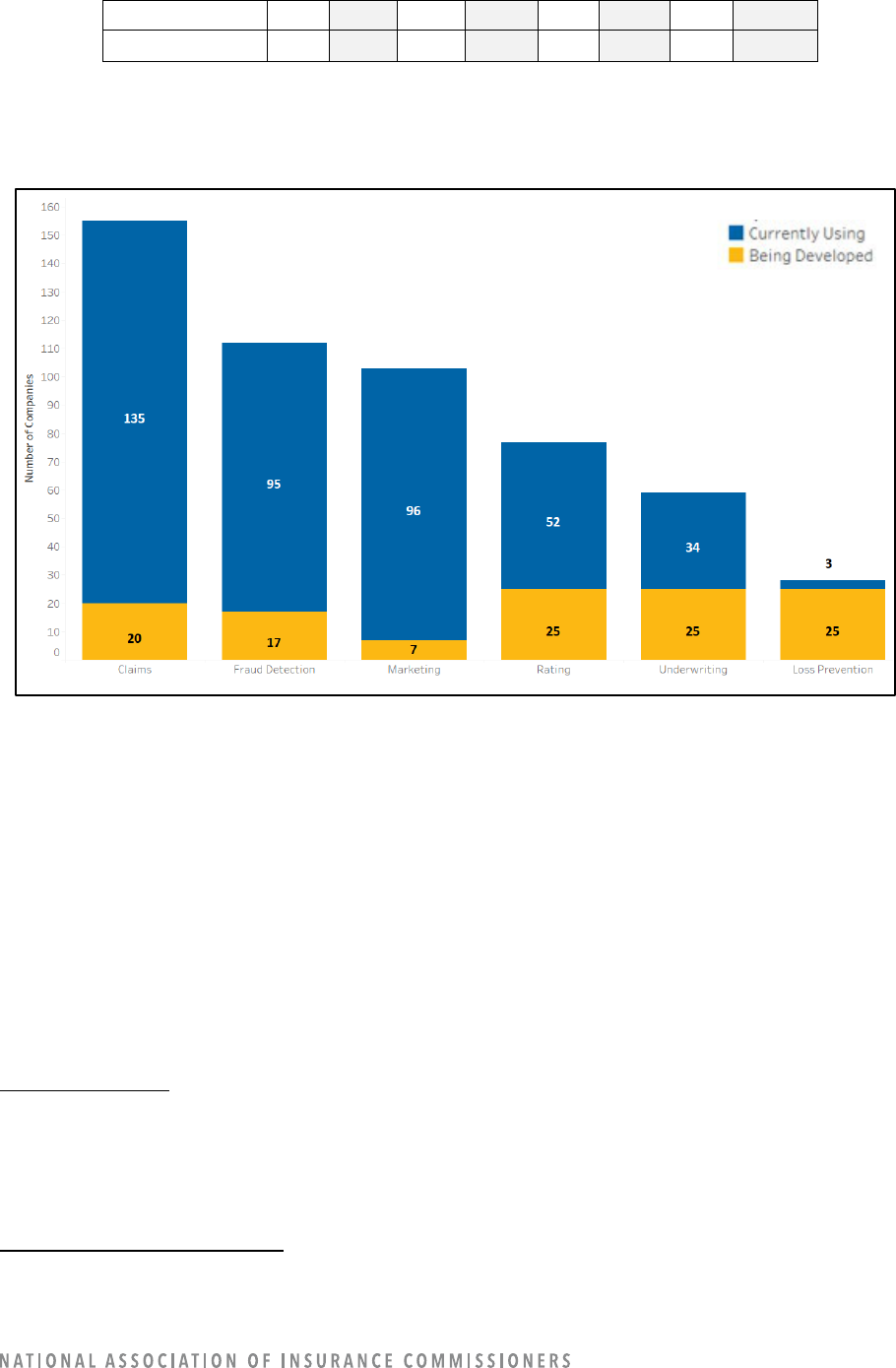

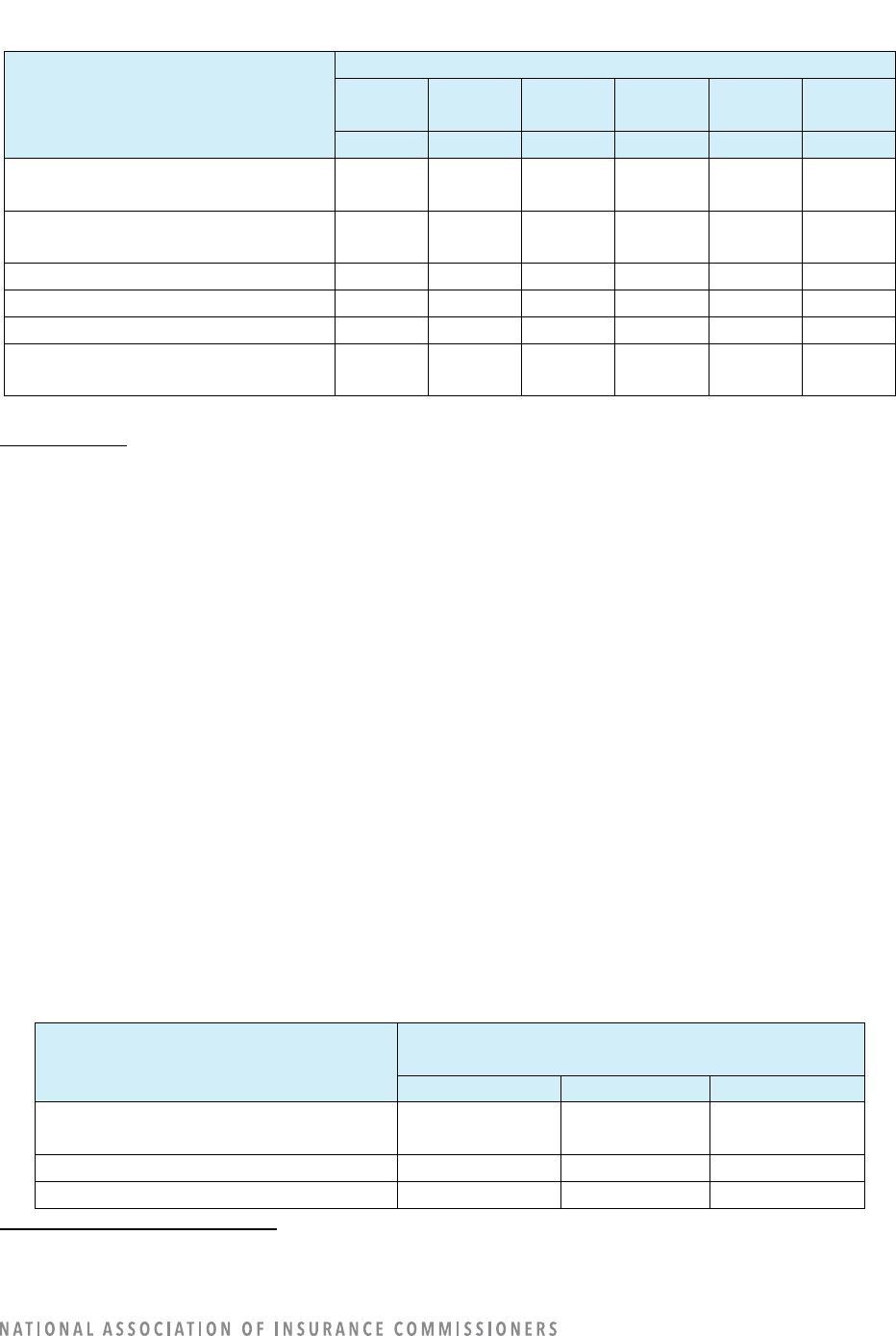

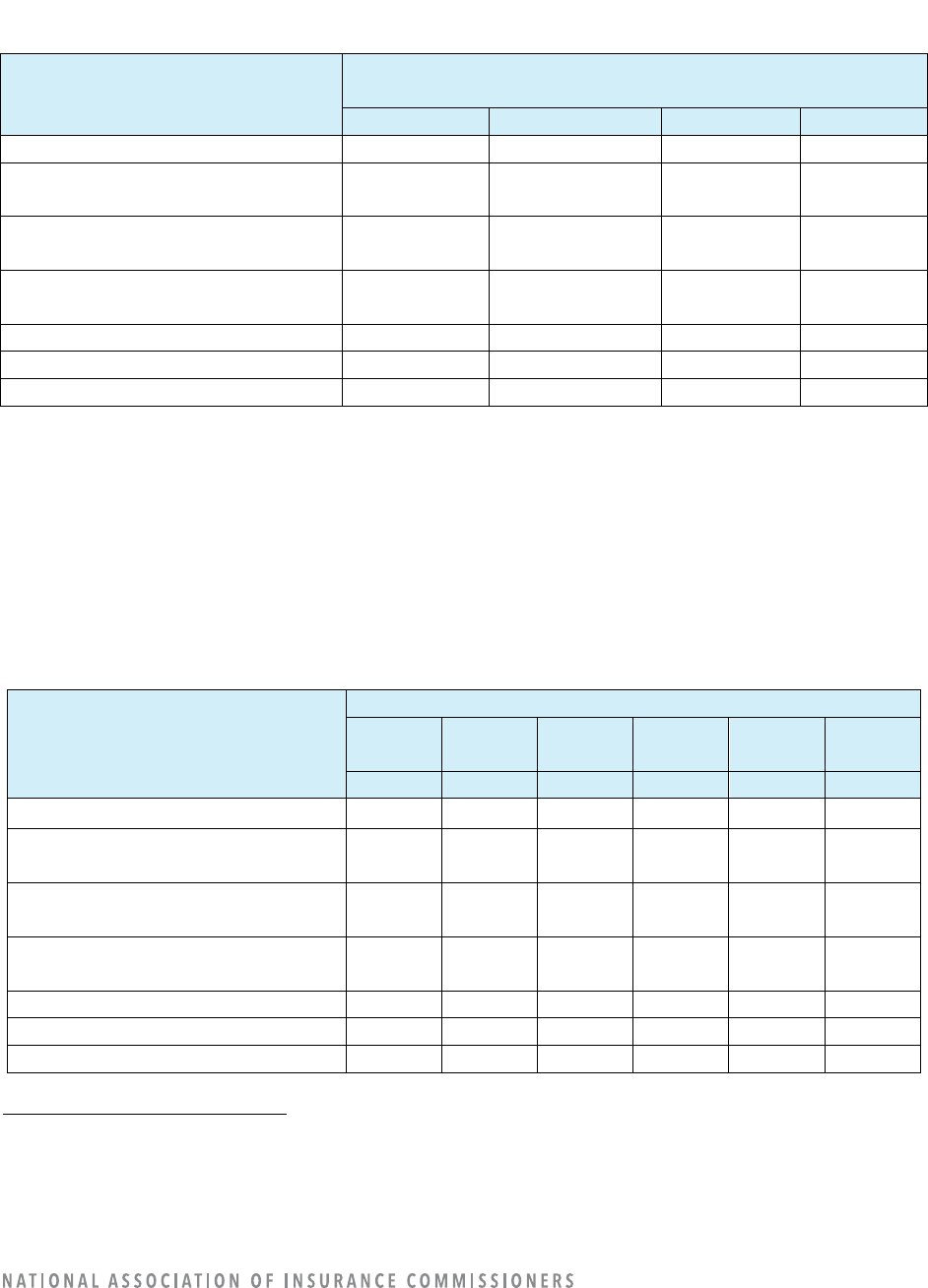

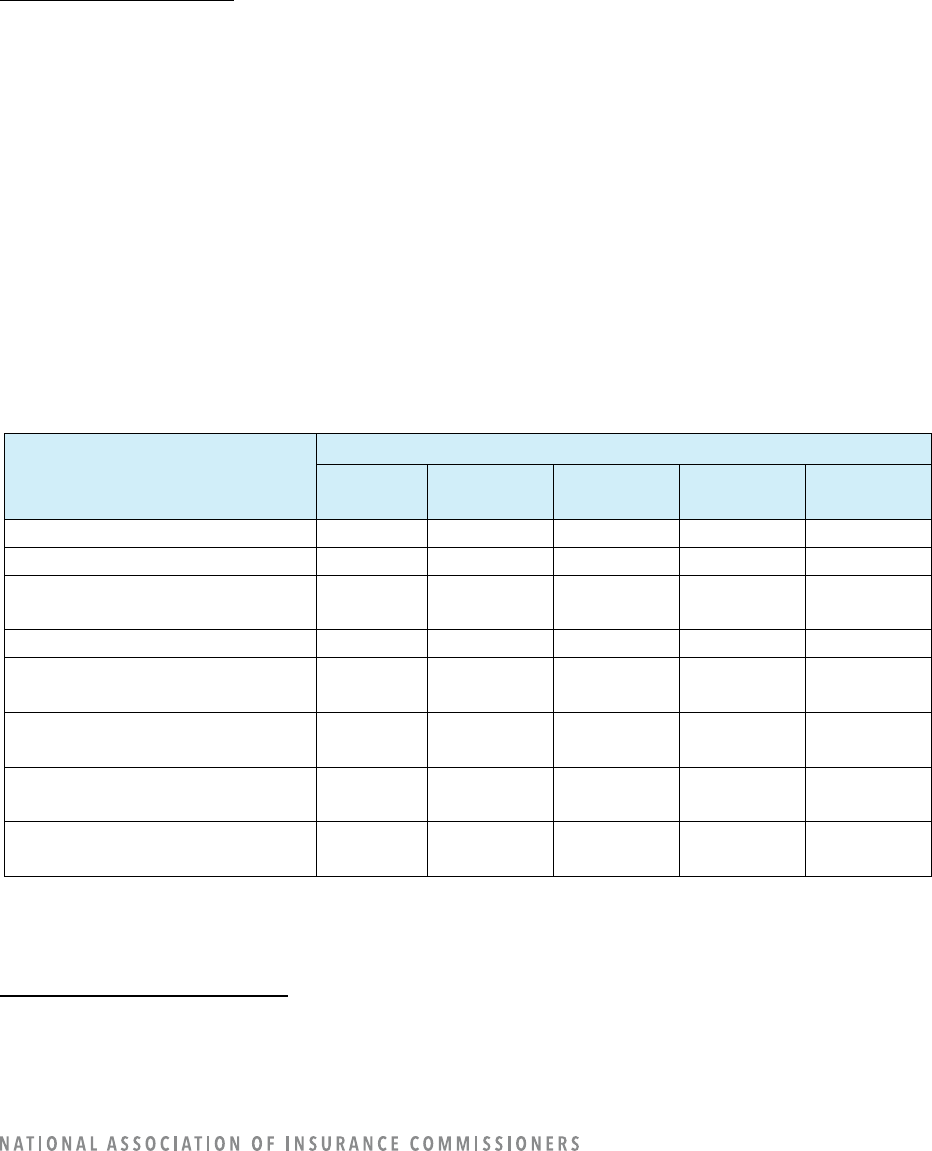

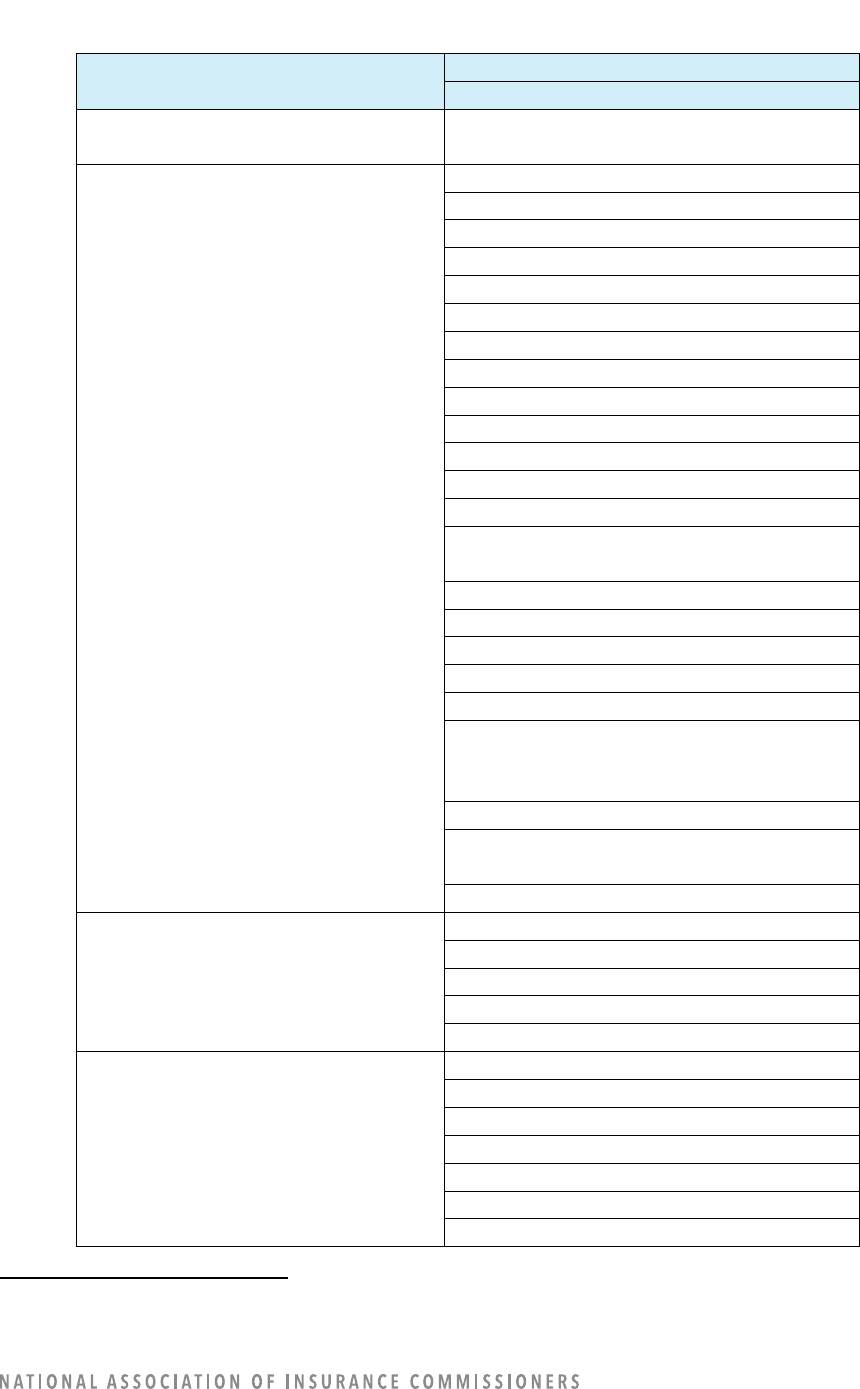

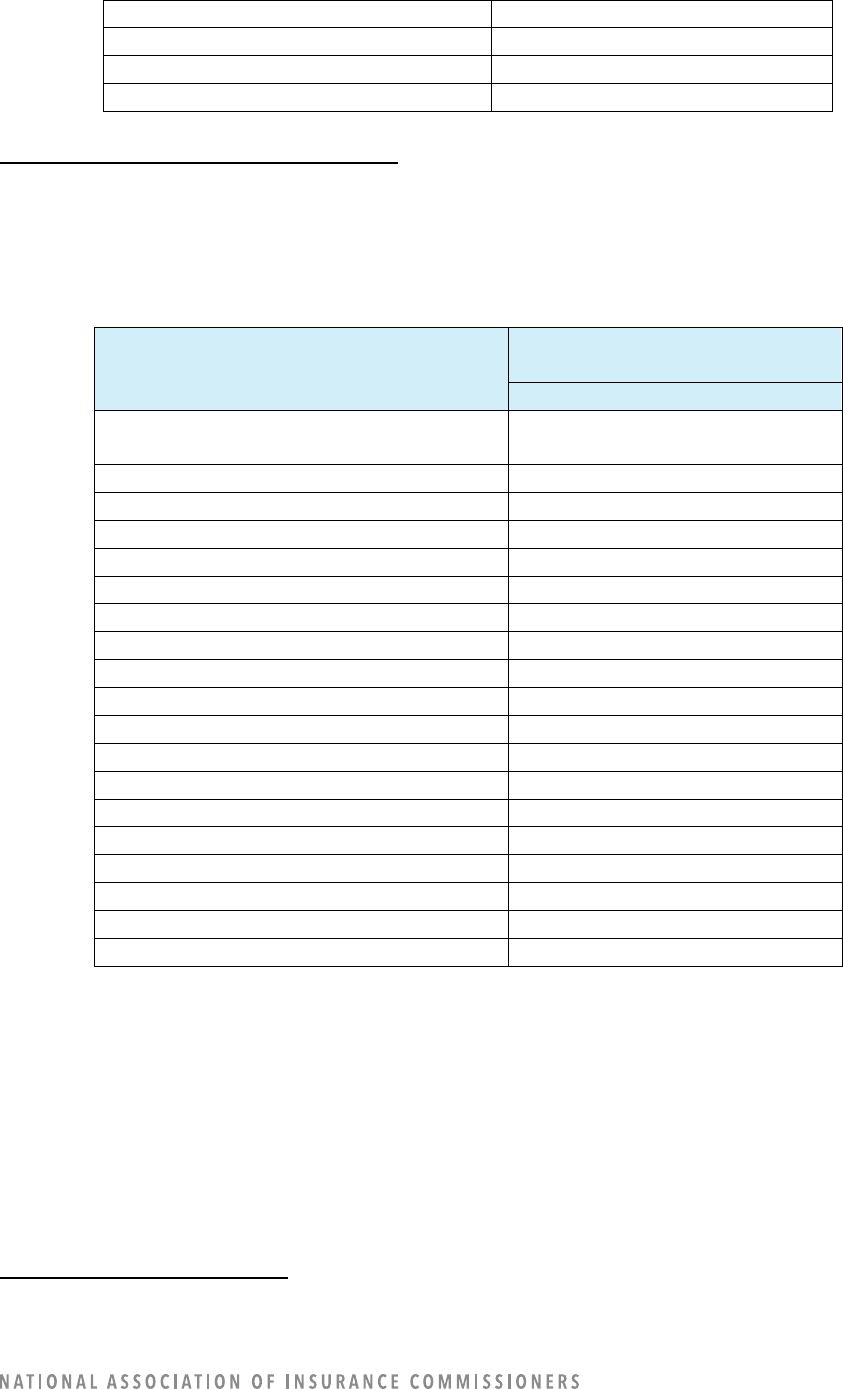

Among company operations areas, companies reported varying levels of AI/ML use, from only 2% in the

loss prevention area to 70% in claims operations. In order from maximum to minimum use, the percentages

of companies using AI/ML in each operation area were: claims, 70%; marketing, 50%; fraud

detection, 49%; rating, 27%; underwriting, 18%; and loss prevention, 2%. Adding in the companies with

models under construction, the percentages were: claims, 80%; fraud detection, 58%; marketing, 54%;

rating, 40%; underwriting, 31%; and loss prevention, 15%. (Refer to Table 3.)

Intuitively, one might expect to see rating and/or underwriting as the areas with the largest amount of

AI/ML use. However, these results reflect the survey’s definition of AI/ML, which purposely excludes the

most-often used types of rating and underwriting models to focus on the more advanced types of AI/ML.

Table 3: Companies with Models in Use or Under Construction by Company Operation Area

Number and Percentage of Companies

| Company Operation Area¹ | In Use (#) | In Use (%) | Under Construction² (#) | Under Construction² (%) | None (N/A) (#) | None (N/A) (%) | Total (#) | Total (%) |
|---|---|---|---|---|---|---|---|---|
| Rating | 52 | 27% | 25 | 13% | 116 | 60% | 193 | 100% |
| Underwriting | 34 | 18% | 25 | 13% | 134 | 69% | 193 | 100% |
| Claims | 135 | 70% | 20 | 10% | 38 | 20% | 193 | 100% |
| Fraud Detection | 95 | 49% | 17 | 9% | 81 | 42% | 193 | 100% |
| Marketing | 96 | 50% | 7 | 4% | 90 | 47% | 193 | 100% |
| Loss Prevention | 3 | 2% | 25 | 13% | 165 | 85% | 193 | 100% |

¹ For definitions, refer to Appendix A: Guidance for Questions in Each Operational Area: Rating, Underwriting, Claims, Fraud Detection, Marketing, Loss Prevention.

² The “under construction” category had options of number of years until expected implementation, but we question the results of the categorization because the choices in the survey were ambiguous. For example, there was not a consistent understanding of whether “< 1 year” meant that the company will be implementing AI/ML in the next year or if the company had been using AI/ML for less than a year.

The same information is shown pictorially in Figure 1.

Figure 1: Number of Companies Currently Using or Developing AI/ML Models
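The percentages discussed for Table 3 can be recomputed from the reported company counts. The sketch below is illustrative only: the dictionary layout and the helper `pct` are assumptions made for this example, and rounding to whole percentages is assumed to match the table. Computing the combined share from counts, rather than adding two already-rounded percentages, can differ by a point; for marketing the counts give 53%, while adding the rounded 50% and 4% gives 54%.

```python
TOTAL_COMPANIES = 193

# (in use, under construction) company counts from Table 3
table3 = {
    "Rating": (52, 25),
    "Underwriting": (34, 25),
    "Claims": (135, 20),
    "Fraud Detection": (95, 17),
    "Marketing": (96, 7),
    "Loss Prevention": (3, 25),
}


def pct(count, total=TOTAL_COMPANIES):
    """Whole-number percentage of all reporting companies."""
    return round(100 * count / total)


# Share of companies with models either in use or under construction
combined = {area: pct(in_use + building)
            for area, (in_use, building) in table3.items()}

for area, share in sorted(combined.items(), key=lambda kv: -kv[1]):
    print(f"{area}: {share}%")
```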

In addition to the company operations areas listed in the survey template, companies provided numerous

“other” AI/ML uses. The following are additional uses of AI/ML: agency models (portal effectiveness and

insights, agency and sales management, cross-selling); customer interactions (chatbot, customer care

operations, call center, customer experience, and customer service); information technology (IT)-related

models (performance monitoring, threat detection/protection); knowledge management; language

processing (speech-to-text, event insights); operational efficiency; social media sentiment analysis;

premium audits; video imaging to predict accidents; and workload forecasting.

COMPANY OPERATION: CLAIMS³

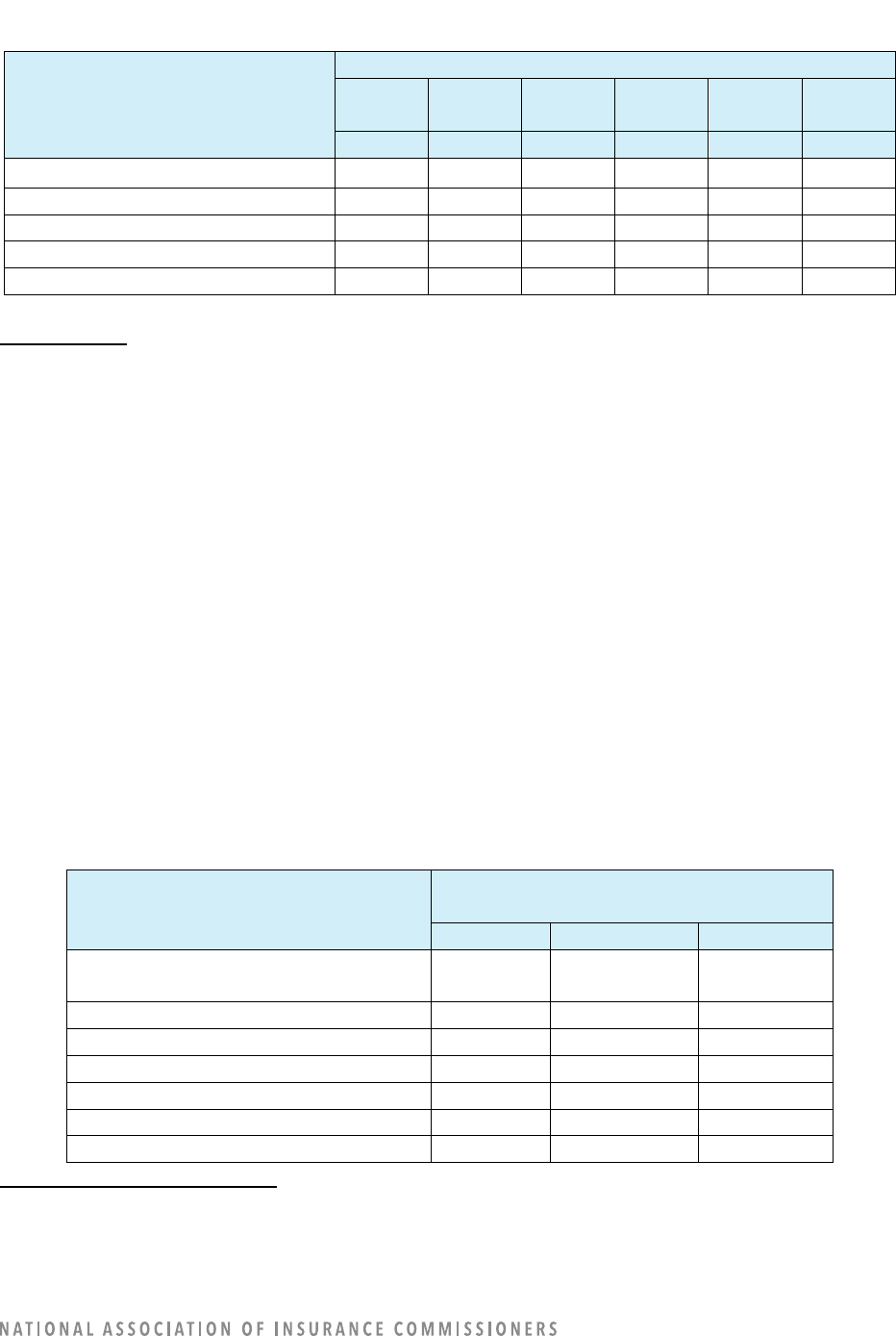

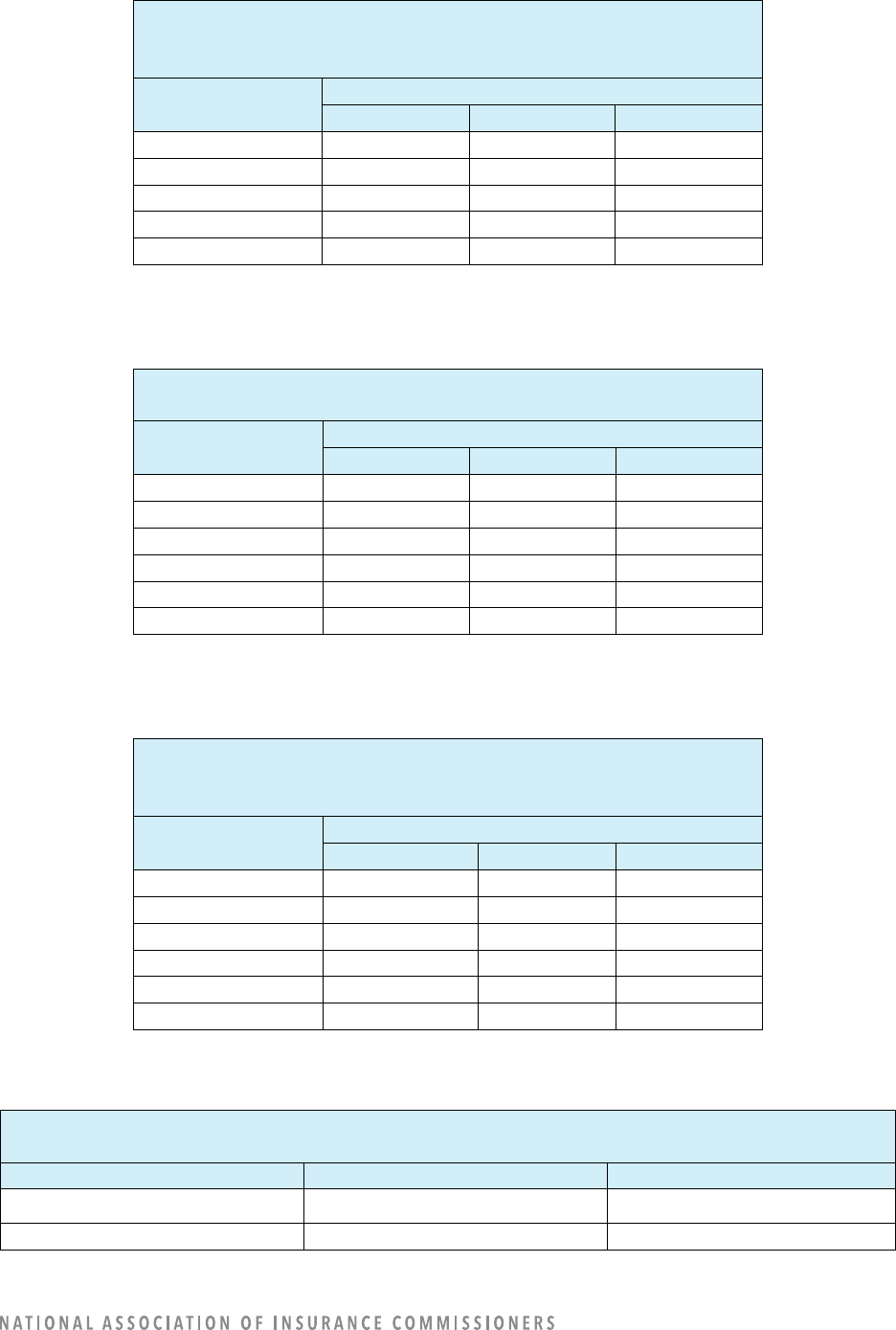

Out of 193 reporting companies, 135 reported using AI/ML for claims operations, and 20 reported having

models under construction.

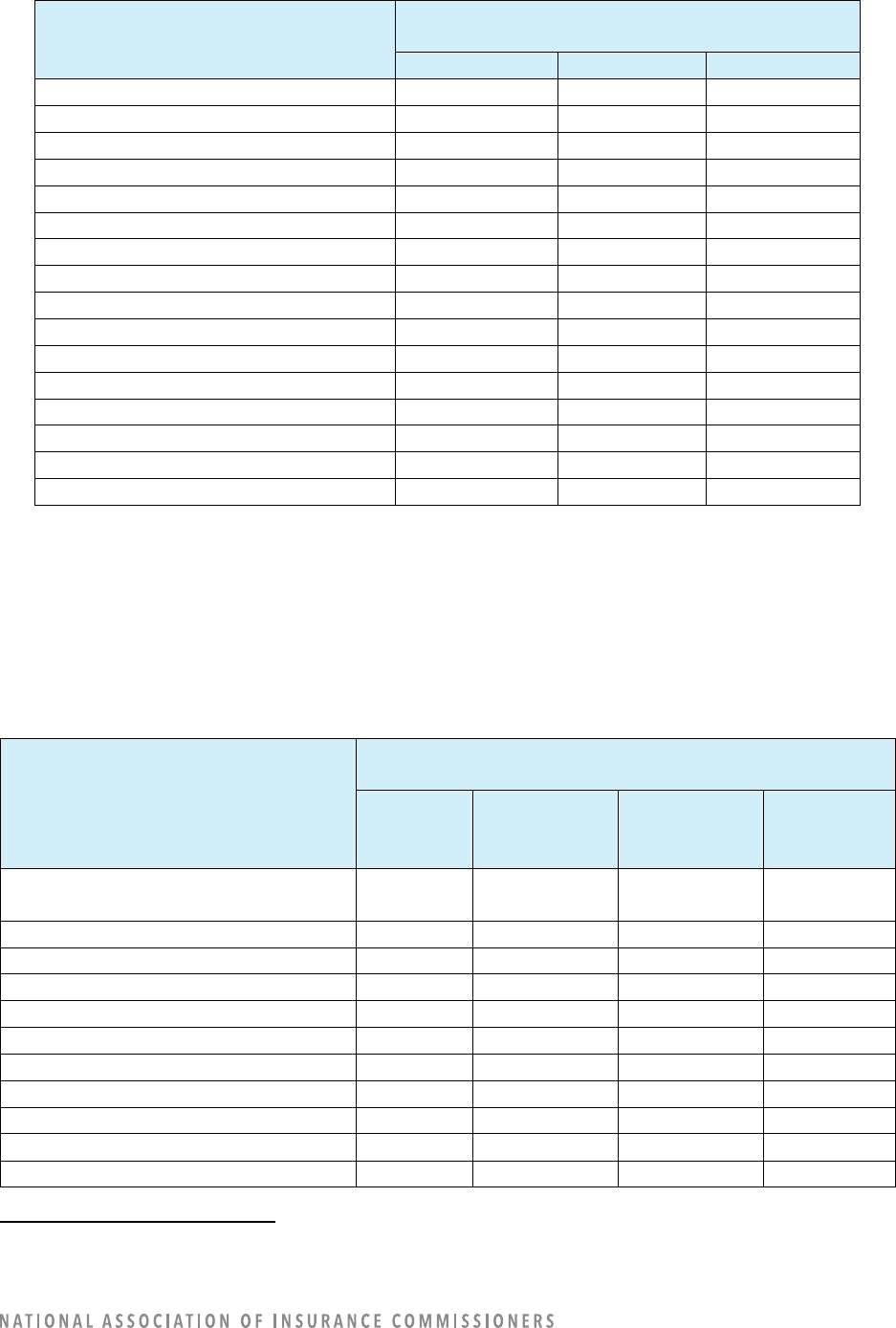

Claims Model Uses

In insurance claims operations, companies reported currently using AI/ML claims models mostly as an

informational resource for adjusters (96 companies). Few companies are using AI/ML claims models for

claims approvals (9) and none are using them for claims denials. Other AI/ML claims models are currently

used to determine claim settlement amounts (50), to make claim assignment decisions (58), to evaluate

images of loss (55), and for other claim-related functions (66). The uses of claims models identified in

Table 4 were options that could be selected in the survey template. Companies noted some additional

uses of claims models in their write-in comments: subrogation potential, claims triage, speech analysis,

loss recognition, litigation likelihood, selection of claims for a streamlined liability investigation process,

accident detection, listening to voice calls, claim classification, work prioritization, reserving, reserve

management, fast-track processing, volume forecasting, leadership quality reviews, call deflection, early

total loss recognition, uninsured motorist exposure, physical damage assessment, arbitration, “doc bot,”

and supplemental requests on claims. One company mentioned the use of AI/ML to recommend repair

shops.

³ For definitions, refer to Appendix B: Definitions Specific to Claims.

Once models under construction begin to be used, companies will most often be using AI/ML claims

models for evaluation of images of the loss (114 companies) and other claim-related functions (113).

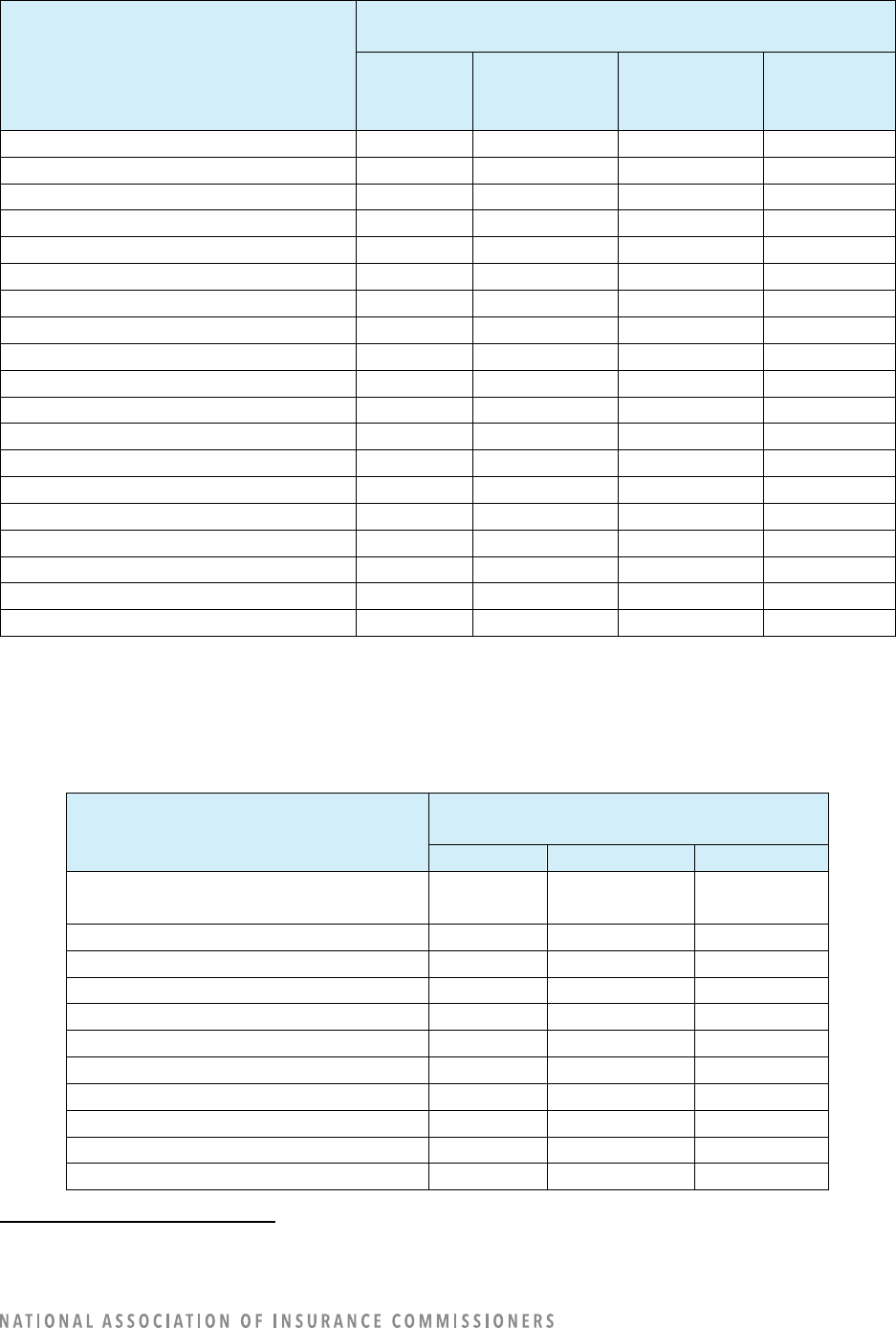

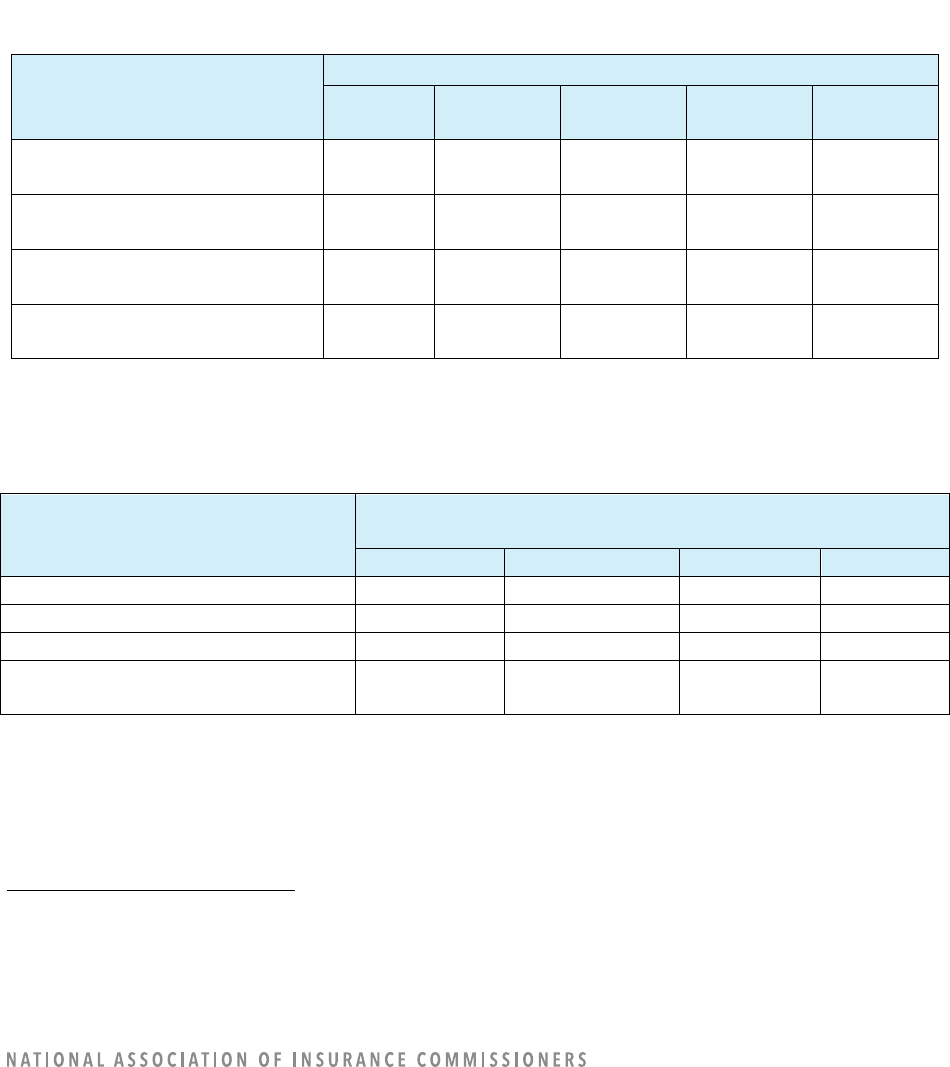

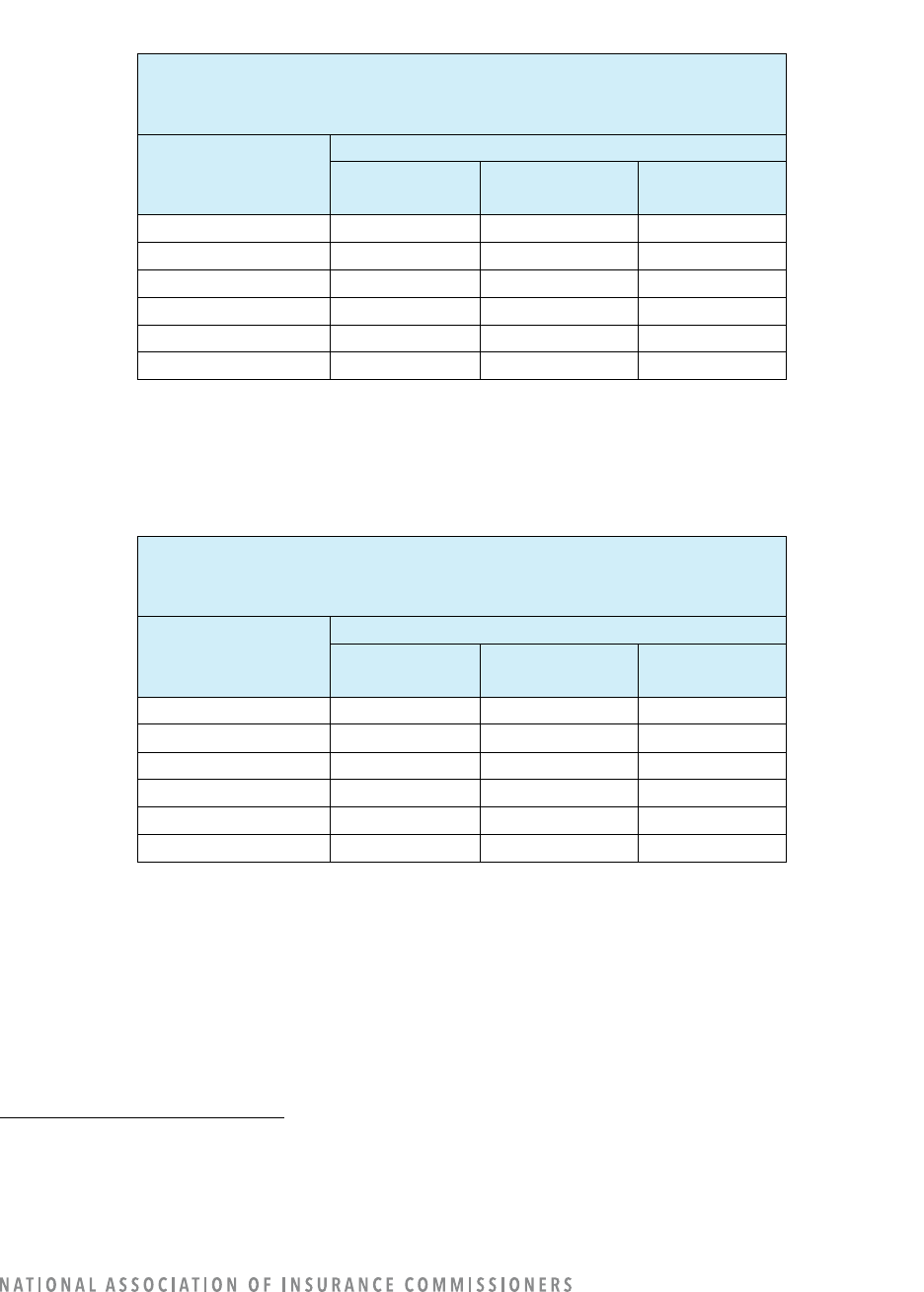

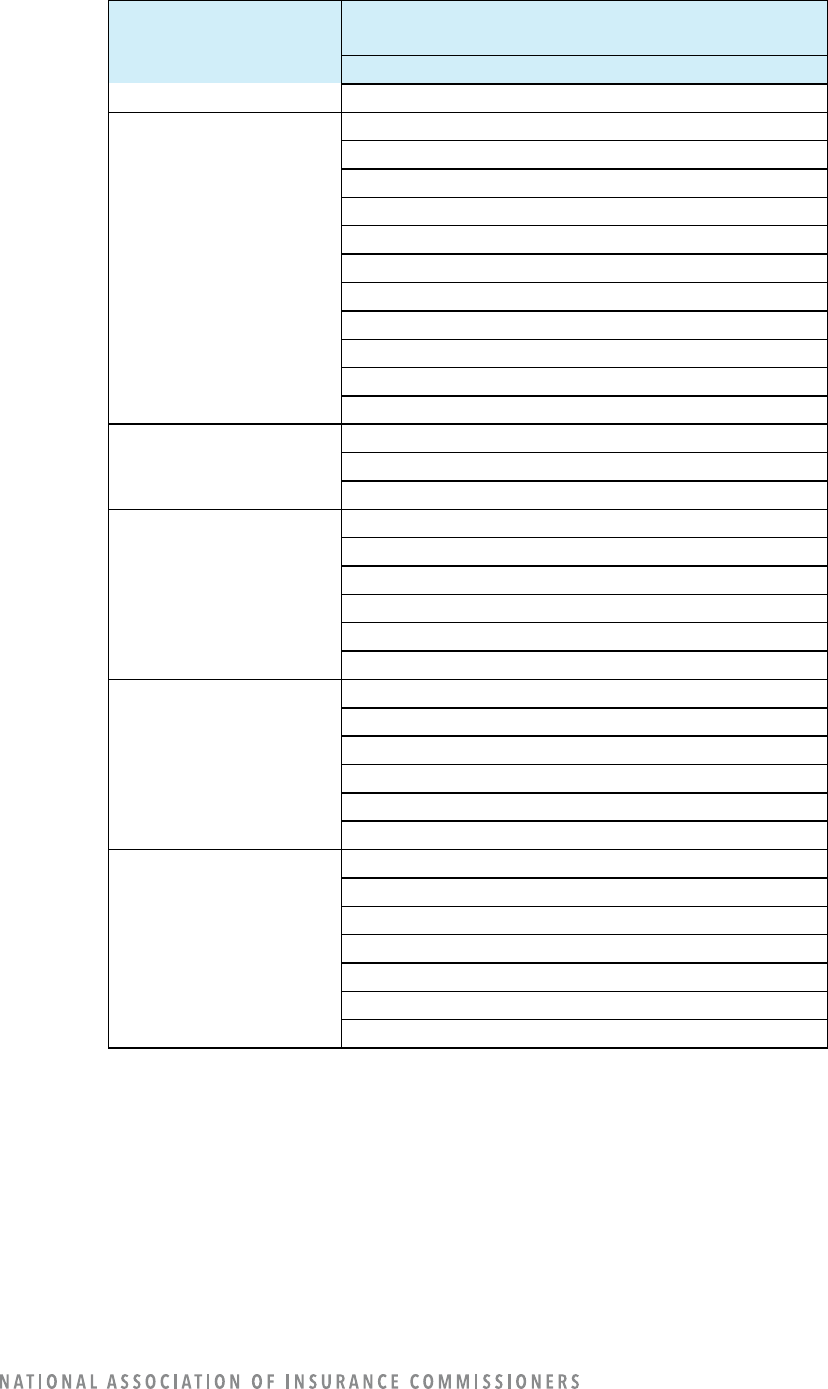

Table 4: Companies’ Use of Claims Models

Number of Companies

| Claims Model Uses⁴ | In Use | Research | Proof of Concept | Prototype | None |
|---|---|---|---|---|---|
| Claim Approval | 9 | 5 | 0 | 0 | 179 |
| Claim Denial | 0 | 0 | 0 | 0 | 193 |
| Determine Settlement Amount | 50 | 6 | 10 | 3 | 124 |
| Claim Assignment Decisions | 58 | 15 | 11 | 1 | 108 |
| Informational Resource for Adjusters | 96 | 0 | 3 | 0 | 94 |
| Evaluation of Images of the Loss | 55 | 24 | 27 | 8 | 79 |
| Other Claim-Related Functions | 66 | 21 | 11 | 15 | 80 |
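The company counts in Table 4 can be cross-checked against the 193 reporting companies, and the "in use or under construction" totals quoted in the text (114 for image evaluation, 113 for other claim-related functions) can be recovered by adding the first four columns. This is a minimal sketch; the dictionary layout and names are assumptions made for illustration.

```python
TOTAL_COMPANIES = 193

# (in use, research, proof of concept, prototype, none) from Table 4
table4 = {
    "Claim Approval": (9, 5, 0, 0, 179),
    "Claim Denial": (0, 0, 0, 0, 193),
    "Determine Settlement Amount": (50, 6, 10, 3, 124),
    "Claim Assignment Decisions": (58, 15, 11, 1, 108),
    "Informational Resource for Adjusters": (96, 0, 3, 0, 94),
    "Evaluation of Images of the Loss": (55, 24, 27, 8, 79),
    "Other Claim-Related Functions": (66, 21, 11, 15, 80),
}

# Every row should account for all reporting companies.
rows_balance = all(sum(counts) == TOTAL_COMPANIES for counts in table4.values())

# Companies with a model in use or at any stage of construction.
in_use_or_building = {use: sum(counts[:4]) for use, counts in table4.items()}

print(rows_balance)
print(in_use_or_building["Evaluation of Images of the Loss"])
print(in_use_or_building["Other Claim-Related Functions"])
```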

The level of insurance company employee decisions influenced by AI/ML varies by model use.

Determination of settlement amount tends to include augmentation, defined as suggesting an answer

and advising the human who is making the decision. Claim assignment decisions tend to be automated or

at least the models provide augmentation. (Refer to Table 5.) Note that Table 5 differs from the previous

tables because the data represents the number of models instead of the number of companies.

Table 5: Level of Decision-Making by Use of Claims Models

Number of Models (In Use or Under Construction) by Level of Decisions Influenced by AI/ML

| Claims Model Uses | Automation* | Augmentation* | Support* | Other |
|---|---|---|---|---|
| Claim Approval | 9 | 6 | 0 | 0 |
| Claim Denial | 0 | 0 | 0 | 0 |
| Determine Settlement Amount | 30 | 94 | 11 | 0 |
| Claim Assignment Decisions | 106 | 81 | 8 | 0 |
| Informational Resource for Adjusters | 6 | 82 | 164 | 0 |
| Evaluation of Images of the Loss | 16 | 201 | 35 | 0 |
| Other Claim-Related Functions | 34 | 95 | 92 | 2 |

For definitions, refer to Appendix A: Guidance for Questions in Each Operational Area: Rating, Underwriting, Claims, Fraud Detection, Marketing, Loss Prevention.

*“Automation” was defined as no human intervention on execution. “Augmentation” was defined as a model that suggests an answer and advises the human making a decision. “Support” was defined as a model that provides information but does not suggest a decision or action.
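As an illustration of how the "tends to" statements about decision levels can be read off Table 5, the following minimal sketch picks the level with the most models for each claims use. The names `TABLE_5`, `LEVELS`, and `predominant_level` are hypothetical helpers introduced here; the counts are copied from Table 5, and treating the largest count as the "predominant" level is an assumption made for the example, not a method stated in the report.

```python
# Model counts by decision level, copied from Table 5.
# Order of each tuple: Automation, Augmentation, Support, Other.
LEVELS = ("Automation", "Augmentation", "Support", "Other")

TABLE_5 = {
    "Claim Approval": (9, 6, 0, 0),
    "Claim Denial": (0, 0, 0, 0),
    "Determine Settlement Amount": (30, 94, 11, 0),
    "Claim Assignment Decisions": (106, 81, 8, 0),
    "Informational Resource for Adjusters": (6, 82, 164, 0),
    "Evaluation of Images of the Loss": (16, 201, 35, 0),
    "Other Claim-Related Functions": (34, 95, 92, 2),
}


def predominant_level(counts):
    """Return (level, share) for the largest count, or (None, 0.0) if no models."""
    total = sum(counts)
    if total == 0:
        return None, 0.0
    best = max(range(len(counts)), key=lambda i: counts[i])
    return LEVELS[best], counts[best] / total


# Assumption: "predominant" simply means the level with the most models.
summary = {use: predominant_level(counts) for use, counts in TABLE_5.items()}

for use, (level, share) in summary.items():
    label = level if level is not None else "no models reported"
    print(f"{use}: {label} ({share:.0%} of models)")
```

A largest-count rule hides close splits (for example, "Other Claim-Related Functions" is nearly even between augmentation and support), so the shares are worth reading alongside the label.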

Models being used by insurance companies are developed in-house (with or without third-party

assistance) or purchased from a third party. Models for claim approval, claim assignment decisions,

adjusters’ informational resource, and other claim-related functions tend to be developed in-house.

Models used to determine settlement amounts and evaluate images of the loss tend to be developed by

third parties. (Refer to Table 6.)

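Table 6: Claims Model Sources by Model Use

Model Source

| Claims Model Uses | In-House # | In-House % | Third-Party # | Third-Party % | Total # | Total % |
|---|---|---|---|---|---|---|
| Claim Approval | 11 | 73% | 4 | 27% | 15 | 100% |
| Claim Denial | 0 | 0% | 0 | 0% | 0 | 100% |
| Determine Settlement Amount | 27 | 20% | 108 | 80% | 135 | 100% |
| Claim Assignment Decisions | 155 | 79% | 40 | 21% | 195 | 100% |
| Informational Resource for Adjusters | 222 | 88% | 30 | 12% | 252 | 100% |
| Evaluation of Images of the Loss | 70 | 28% | 182 | 72% | 252 | 100% |
| Other Claim-Related Functions | 172 | 77% | 51 | 23% | 223 | 100% |

For definitions, refer to Appendix A: Guidance for Questions in Each Operational Area: Rating, Underwriting, Claims, Fraud Detection, Marketing, Loss Prevention.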
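The percentage columns in Table 6 follow from the model counts. A minimal sketch of that arithmetic is shown below; `TABLE_6` and `source_shares` are hypothetical names introduced for illustration, the counts are copied from the table, and rounding to the nearest whole percent is an assumption about how the published figures were produced.

```python
# (in-house count, third-party count) per claims model use, copied from Table 6.
TABLE_6 = {
    "Claim Approval": (11, 4),
    "Claim Denial": (0, 0),
    "Determine Settlement Amount": (27, 108),
    "Claim Assignment Decisions": (155, 40),
    "Informational Resource for Adjusters": (222, 30),
    "Evaluation of Images of the Loss": (70, 182),
    "Other Claim-Related Functions": (172, 51),
}


def source_shares(in_house, third_party):
    """Return (in-house %, third-party %) rounded to whole percents.

    Returns None when no models were reported, since a share is undefined.
    """
    total = in_house + third_party
    if total == 0:
        return None
    return round(100 * in_house / total), round(100 * third_party / total)


shares = {use: source_shares(*counts) for use, counts in TABLE_6.items()}

for use, pct in shares.items():
    if pct is None:
        print(f"{use}: no models reported")
    else:
        print(f"{use}: {pct[0]}% in-house, {pct[1]}% third-party")
```

Rounding each share independently can make a pair sum to 99 or 101 in other tables, so small discrepancies of one point are not necessarily data errors.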

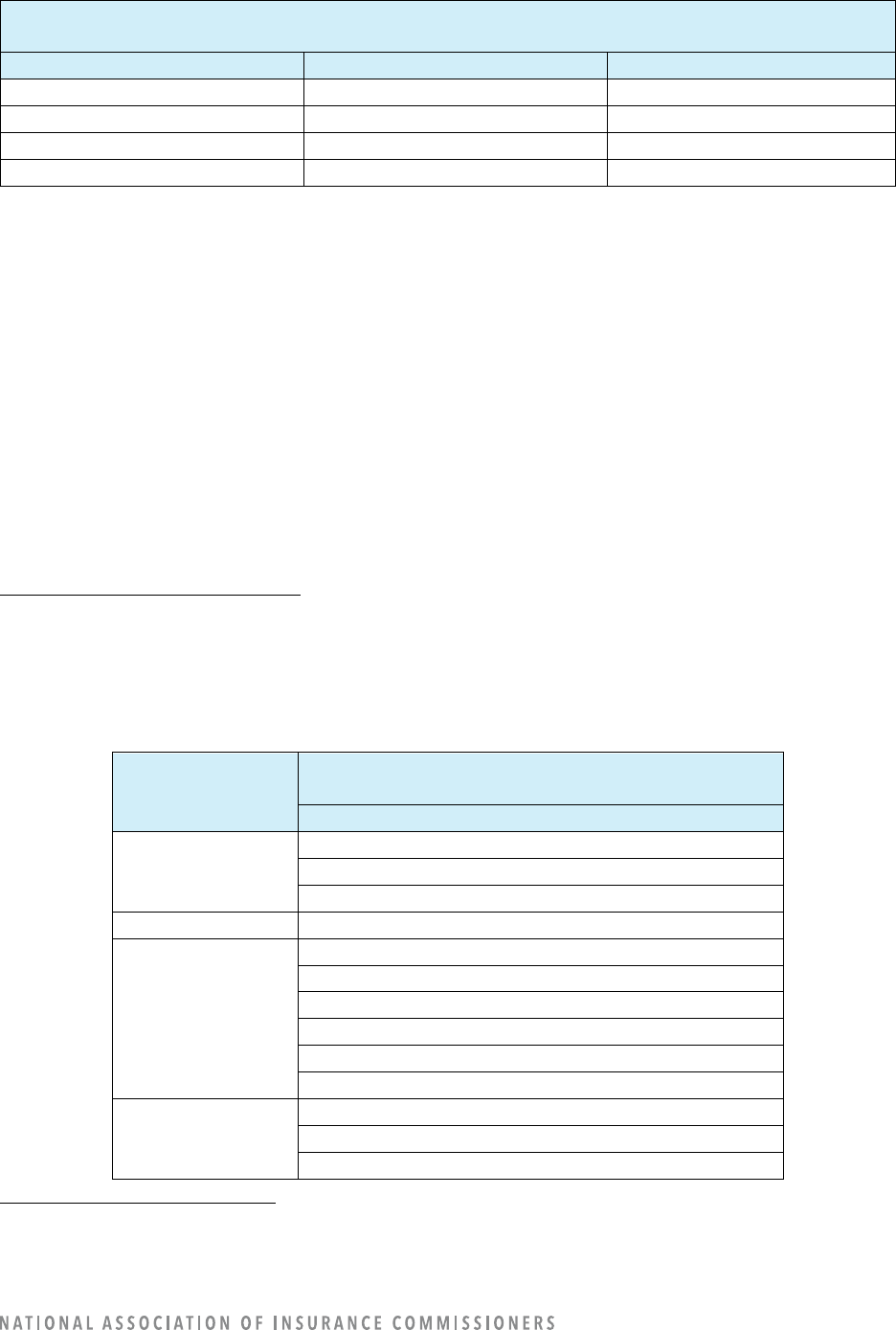

Data Elements

It is well known that insurers use big data for many purposes and models. Given this survey is focused on

the use of the “more advanced” AI/ML, the data element information here is similarly focused on the use

of data elements only when used in “more advanced” AI/ML models.

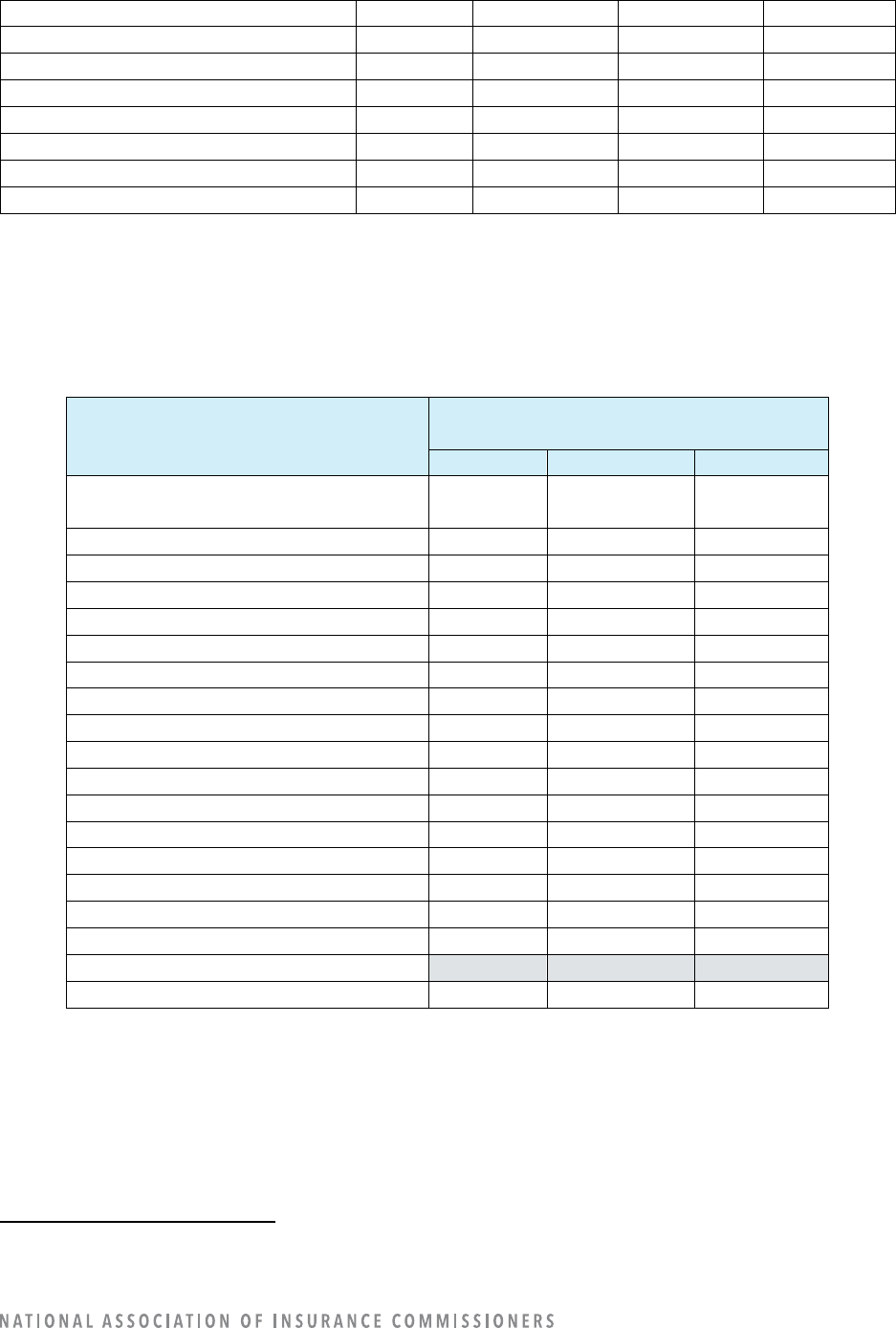

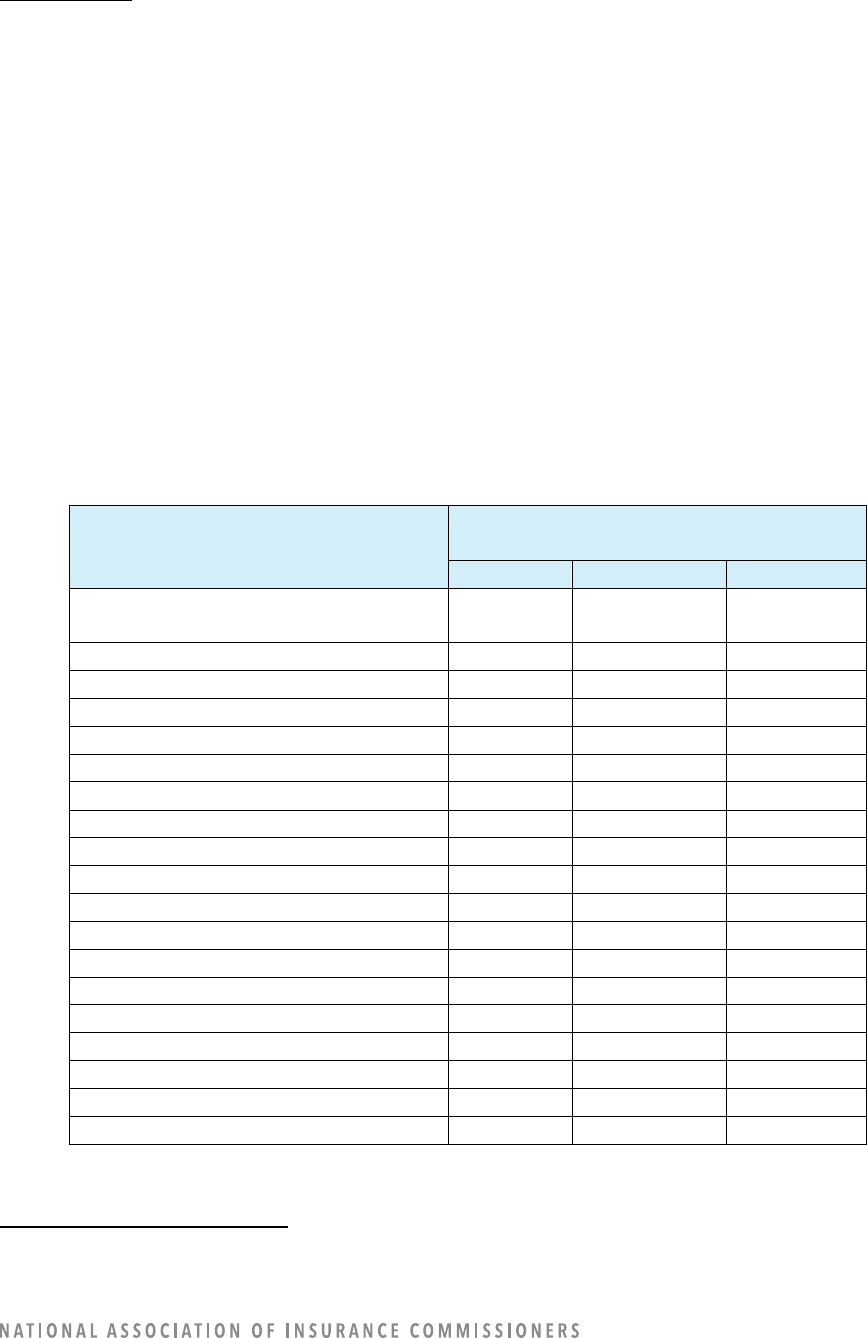

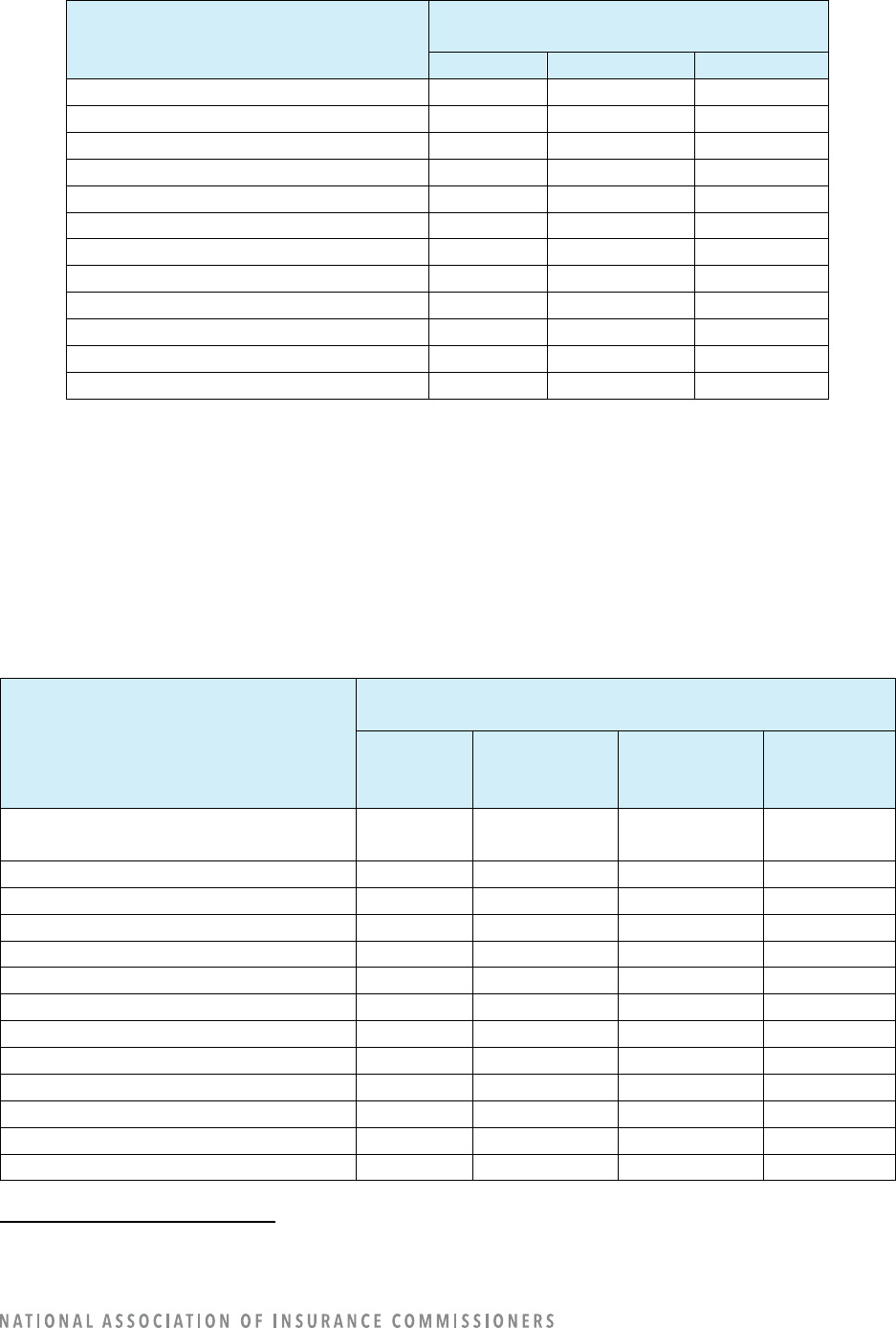

For claims, the following six data elements were the most frequently reported as being used for AI/ML:

• Vehicle-specific data (123 companies)

• Loss experience (74)

• Medical (63)

• Demographic (40)

• Geocoding (22)

• Telematics (21)


There are at least some companies using a consumer or other type of “score” (16), driving behavior (10),

criminal convictions (9), voice analysis (8), online media (7), education (2), and personal financial

information (2). Companies also reported using “other” nontraditional data elements (32). (Refer to Table

7.)

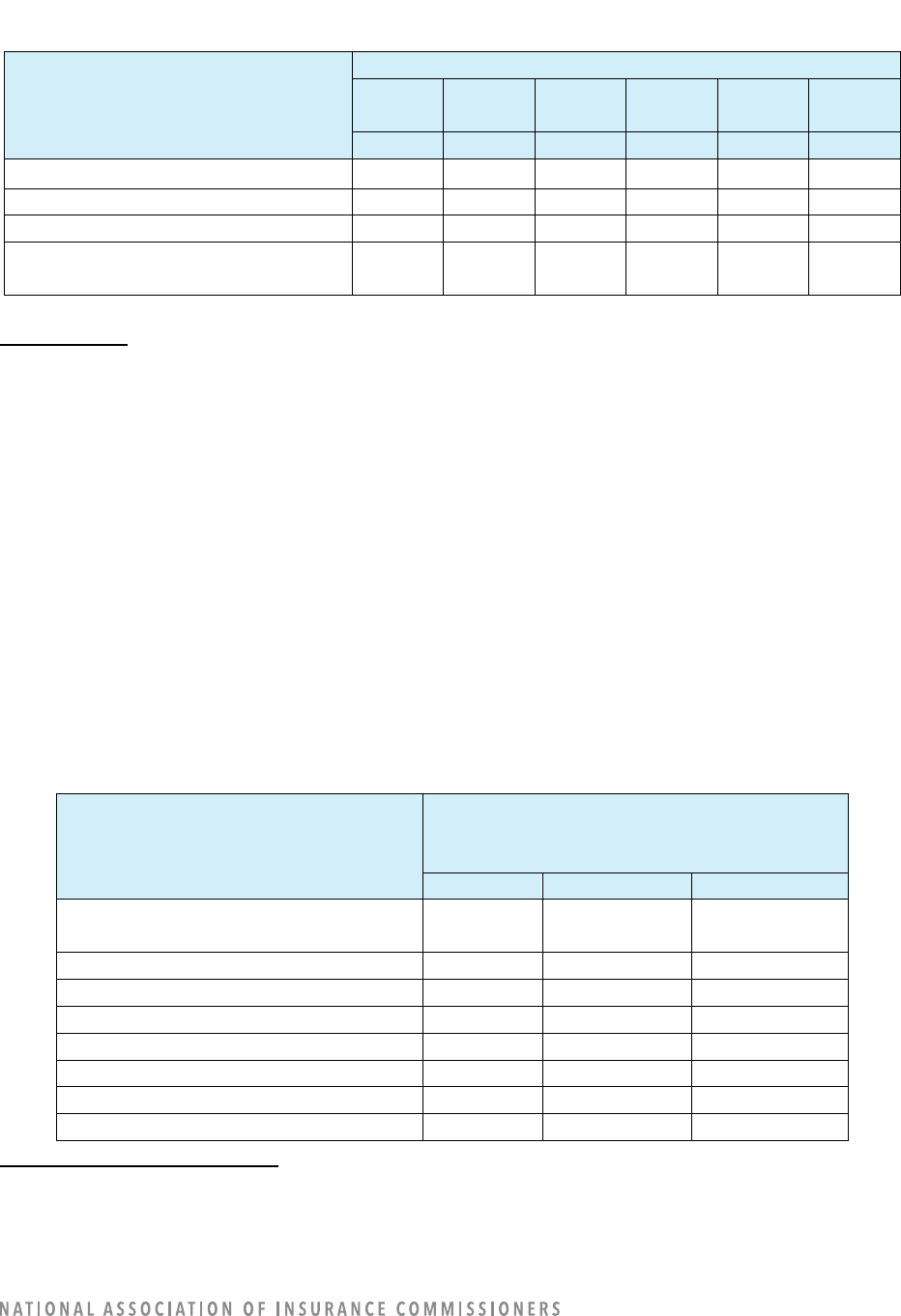

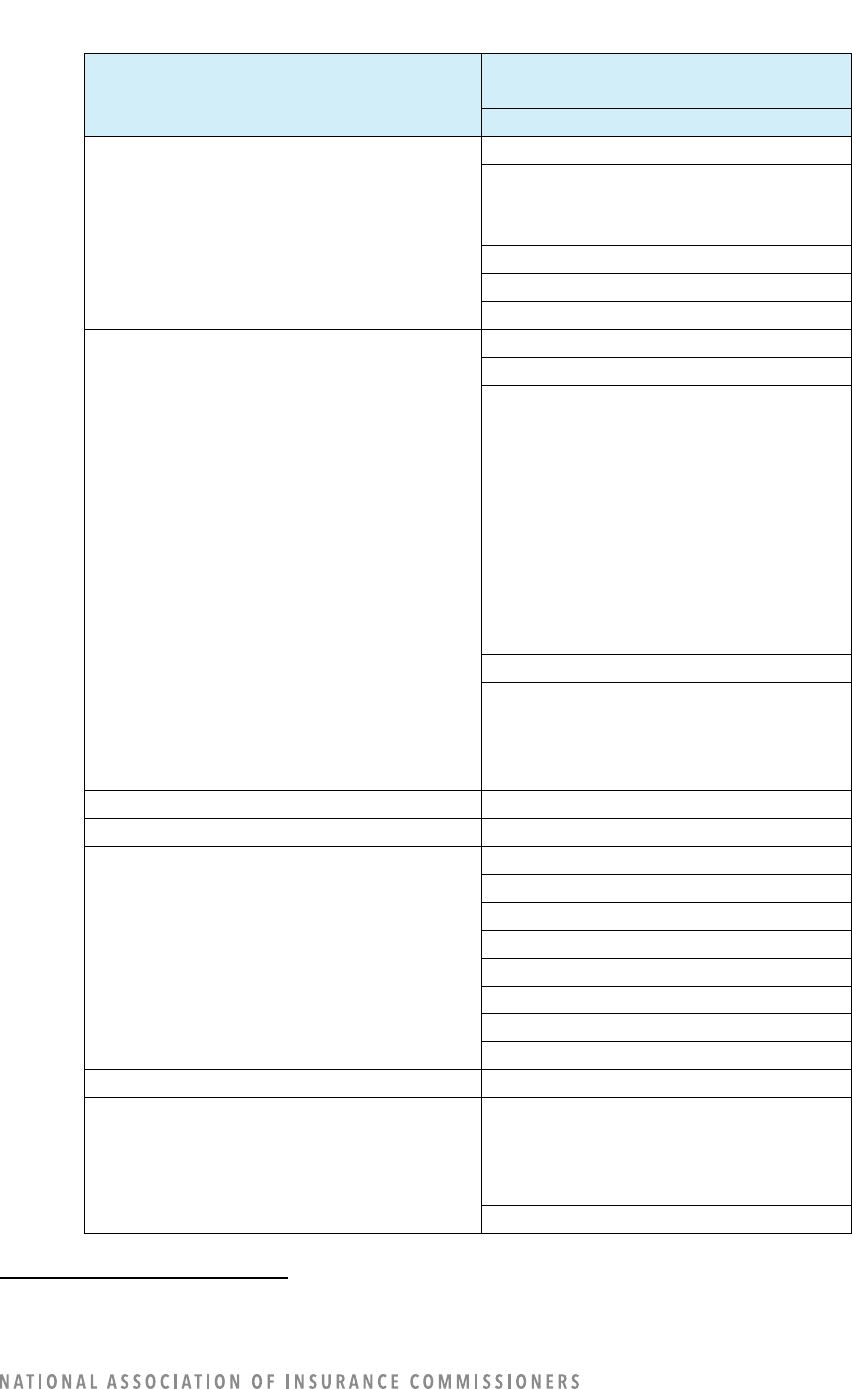

Table 7: Companies’ Use of Claims Data Elements

Number of Companies Using/Not Using the Data Element in a Claims AI/ML Model*

| Claims Data Elements | Yes | No | Blank |
|---|---|---|---|
| Criminal Conviction (Excluding Auto-Related Convictions) | 9 | 153 | 31 |
| Demographic | 40 | 122 | 31 |
| Driving Behavior | 10 | 152 | 31 |
| Education | 2 | 160 | 31 |
| Vehicle-Specific Data | 123 | 39 | 31 |
| Facial Detection/Recognition/Analysis | 0 | 162 | 31 |
| Geocoding | 22 | 140 | 31 |
| Natural Catastrophe | 0 | 162 | 31 |
| Job Stability | 0 | 162 | 31 |
| Income | 0 | 162 | 31 |
| Occupation | 0 | 162 | 31 |
| Personal Financial Information | 2 | 160 | 31 |
| Loss Experience | 74 | 88 | 31 |
| Medical | 63 | 99 | 31 |
| Online Media | 7 | 155 | 31 |
| Telematics | 21 | 141 | 31 |
| Voice Analysis | 8 | 153 | 32 |
| Consumer or Other Type of “Score” | 16 | 147 | 30 |
| Other Nontraditional Data Elements | 32 | 130 | 31 |

For definitions, refer to Appendix H: Data Use Table Definitions.

*The question is not whether the data element is used, but only whether the data element is used in an AI/ML model.
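Because every one of the 193 reporting companies falls into exactly one of the Yes, No, or Blank columns, each row of Table 7 should total 193. The sketch below checks that and ranks the data elements by their "Yes" count. `TABLE_7`, `bad_rows`, and `ranked` are hypothetical names used only for this illustration; the counts are copied from Table 7.

```python
RESPONDENTS = 193  # reporting companies in the survey

# (Yes, No, Blank) per claims data element, copied from Table 7.
TABLE_7 = {
    "Criminal Conviction": (9, 153, 31),
    "Demographic": (40, 122, 31),
    "Driving Behavior": (10, 152, 31),
    "Education": (2, 160, 31),
    "Vehicle-Specific Data": (123, 39, 31),
    "Facial Detection/Recognition/Analysis": (0, 162, 31),
    "Geocoding": (22, 140, 31),
    "Natural Catastrophe": (0, 162, 31),
    "Job Stability": (0, 162, 31),
    "Income": (0, 162, 31),
    "Occupation": (0, 162, 31),
    "Personal Financial Information": (2, 160, 31),
    "Loss Experience": (74, 88, 31),
    "Medical": (63, 99, 31),
    "Online Media": (7, 155, 31),
    "Telematics": (21, 141, 31),
    "Voice Analysis": (8, 153, 32),
    "Consumer or Other Type of Score": (16, 147, 30),
    "Other Nontraditional Data Elements": (32, 130, 31),
}

# Rows whose Yes + No + Blank does not equal the number of respondents.
bad_rows = [name for name, row in TABLE_7.items() if sum(row) != RESPONDENTS]

# Data elements ordered from most to fewest companies answering "Yes".
ranked = sorted(TABLE_7, key=lambda name: TABLE_7[name][0], reverse=True)

print("Rows not summing to 193:", bad_rows)
for name in ranked:
    yes = TABLE_7[name][0]
    print(f"{name}: {yes} companies ({yes / RESPONDENTS:.0%} of respondents)")
```

The same check can be applied to the other Yes/No/Blank tables in this report by swapping in their counts.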

The data elements used in claims models tend to be internal data sources or a mix of internal and

external sources. (Refer to Table 8.)

Table 8: Claims Model Sources (Internal vs. Third Party) by Data Elements

Number of Companies Using the Data Element in a Claims AI/ML Model*

| Claims Data Elements | Internal Data Source | External Data Source | Both Internal and External Data Sources | Blank |
|---|---|---|---|---|
| Criminal Conviction (Excluding Auto-Related Convictions) | 0 | 9 | 0 | 184 |
| Demographic | 20 | 2 | 18 | 153 |
| Driving Behavior | 7 | 0 | 3 | 183 |
| Education | 0 | 2 | 0 | 191 |
| Vehicle-Specific Data | 51 | 21 | 51 | 70 |
| Facial Detection/Recognition/Analysis | 0 | 0 | 0 | 193 |
| Geocoding | 13 | 7 | 2 | 171 |
| Natural Catastrophe | 0 | 0 | 0 | 193 |
| Job Stability | 0 | 0 | 0 | 193 |
| Income | 0 | 0 | 0 | 193 |
| Occupation | 0 | 0 | 0 | 193 |
| Personal Financial Information | 0 | 2 | 0 | 191 |
| Loss Experience | 50 | 16 | 8 | 119 |
| Medical | 45 | 4 | 14 | 130 |
| Online Media | 0 | 7 | 0 | 186 |
| Telematics | 1 | 7 | 13 | 172 |
| Voice Analysis | 6 | 0 | 2 | 185 |
| Consumer or Other Type of “Score” | 7 | 2 | 7 | 177 |
| Other Nontraditional Data Elements | 31 | 1 | 0 | 161 |

For definitions, refer to Appendix H: Data Use Table Definitions.

Very few companies reported using a consumer or other type of “score” as an input for claims models.

(Refer to Table 9.)

Table 9: Companies’ Use of Consumer or Other Type of “Score” as an Input for Claims Data Elements

Number of Companies Using a Consumer or Other Type of “Score” as an Input

| Claims Data Elements | Yes | No | Blank |
|---|---|---|---|
| Criminal Conviction (Excluding Auto-Related Convictions) | 0 | 61 | 132 |
| Demographic | 0 | 69 | 124 |
| Driving Behavior | 0 | 58 | 135 |
| Education | 0 | 58 | 135 |
| Vehicle-Specific Data | 3 | 110 | 80 |
| Facial Detection/Recognition/Analysis | 0 | 58 | 135 |
| Geocoding | 1 | 59 | 133 |
| Natural Catastrophe | 0 | 58 | 135 |
| Job Stability | 0 | 58 | 135 |
| Income | 0 | 58 | 135 |
| Occupation | 0 | 58 | 135 |
| Personal Financial Information | 0 | 58 | 135 |
| Loss Experience | 0 | 73 | 120 |
| Medical | 0 | 68 | 125 |
| Online Media | 0 | 58 | 135 |
| Telematics | 0 | 65 | 128 |
| Voice Analysis | 0 | 58 | 135 |
| Consumer or Other Type of “Score” | | | |
| Other Nontraditional Data Elements | 0 | 83 | 110 |

For definitions, refer to Appendix H: Data Use Table Definitions.

Refer to the “Customer Data Correction,” “Governance,” and “Third-Party” sections of this report for

additional data analysis regarding company operations areas.

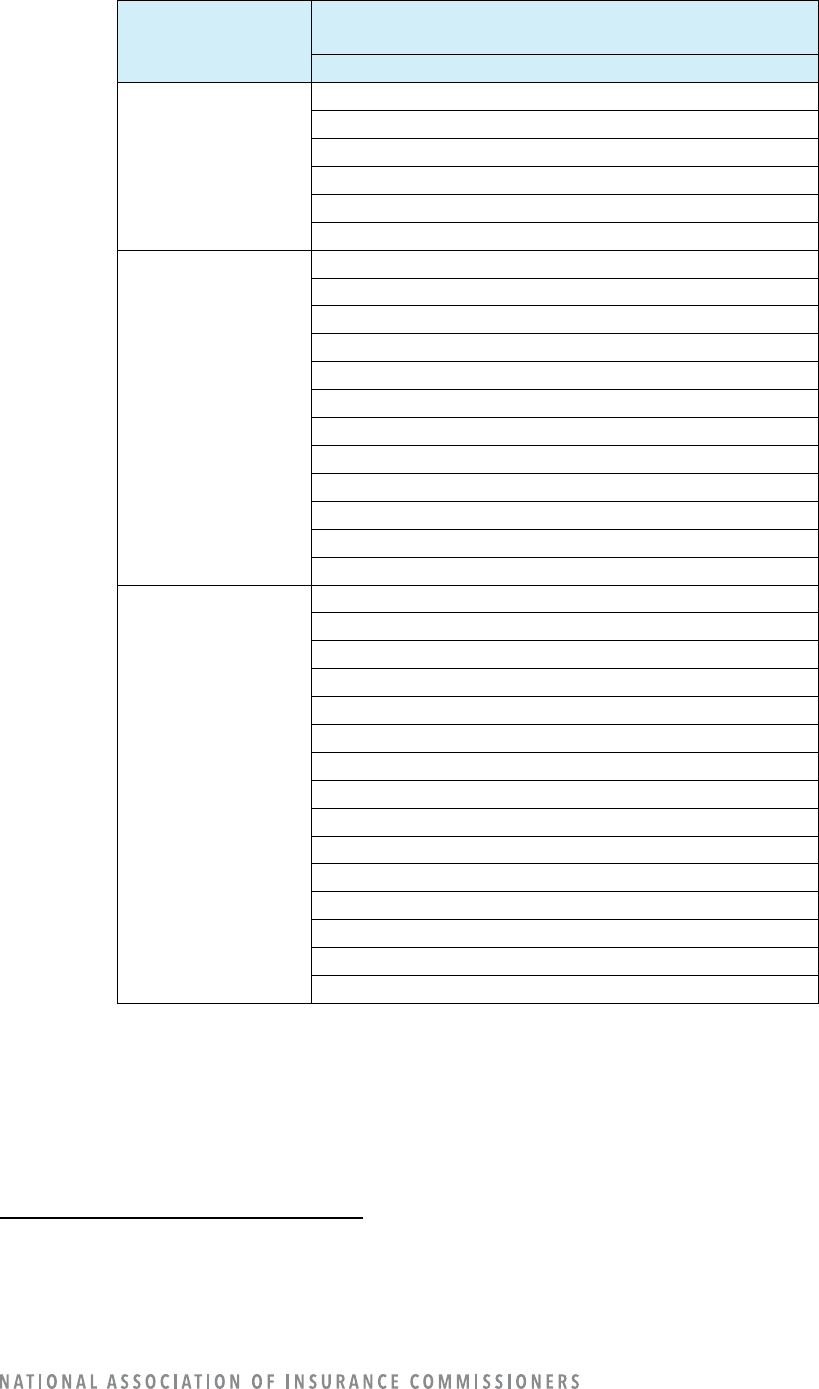

COMPANY OPERATION: FRAUD DETECTION


Out of 193 reporting companies, 95 companies reported using AI/ML for fraud-detection operations, and

17 reported having models under construction.

Fraud-Detection Model Uses

In insurance fraud detection, companies reported currently using AI/ML models mostly as a referral of

claims for further investigation (83 companies). Other AI/ML fraud-detection models are currently used

in the following areas: detect medical producer fraud (27), detect third-party liability (17), fast-tracking of

likely non-fraudulent claims (10), detect first-party liability (10), and “other” fraud detection-related

functions (four). The uses of fraud-detection models identified in Table 10 were options that could be

selected in the survey template. Companies noted some additional uses of fraud-detection models in their

write-in comments: fraudulent quote detection, organized crime rings identification, social network

analysis, facial recognition, behavior models, detect prefill information harvesters, device risk, and claims

watch list.

Some models are under construction for fraud detection, but there appears to be no significant

development planned in the near future.

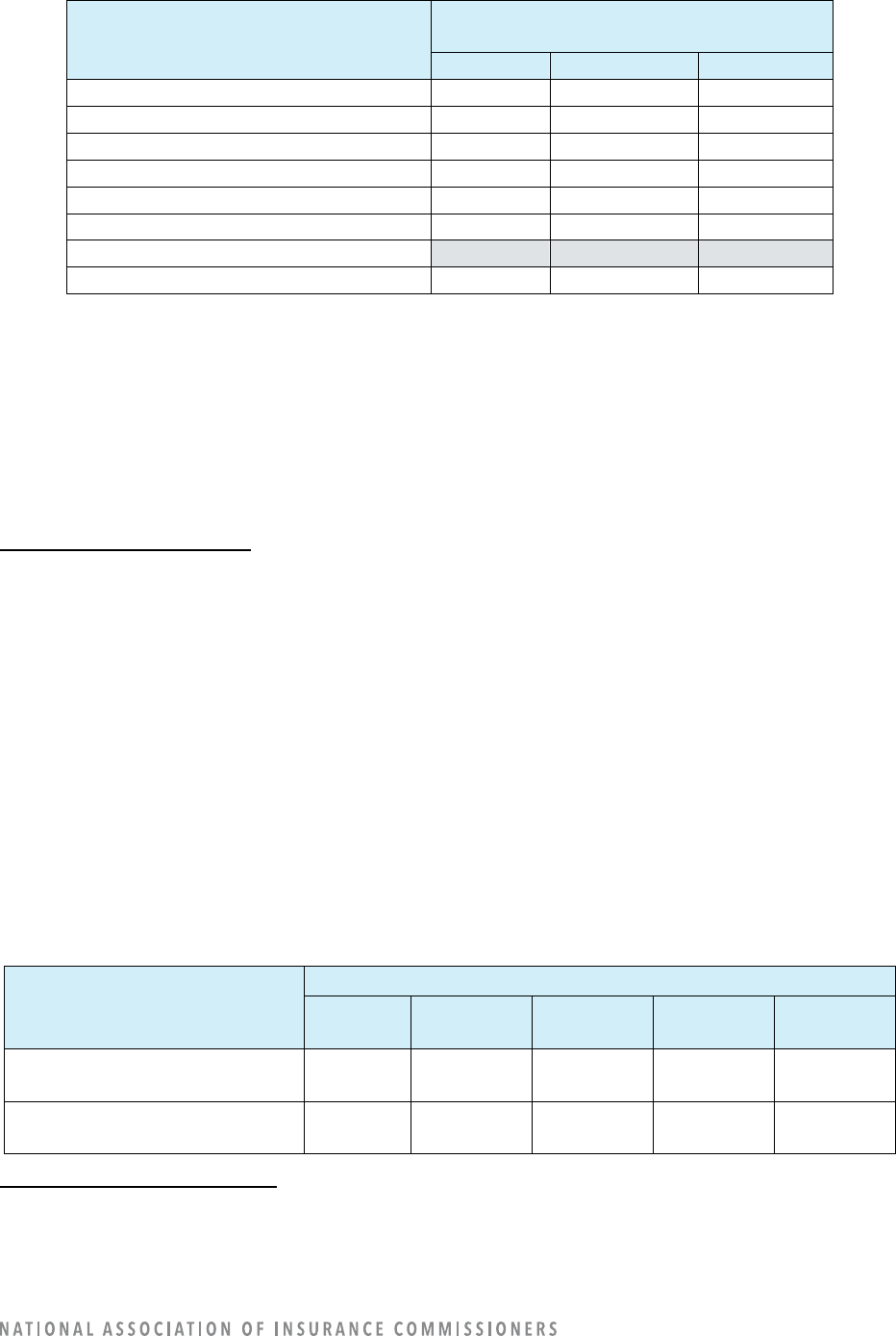

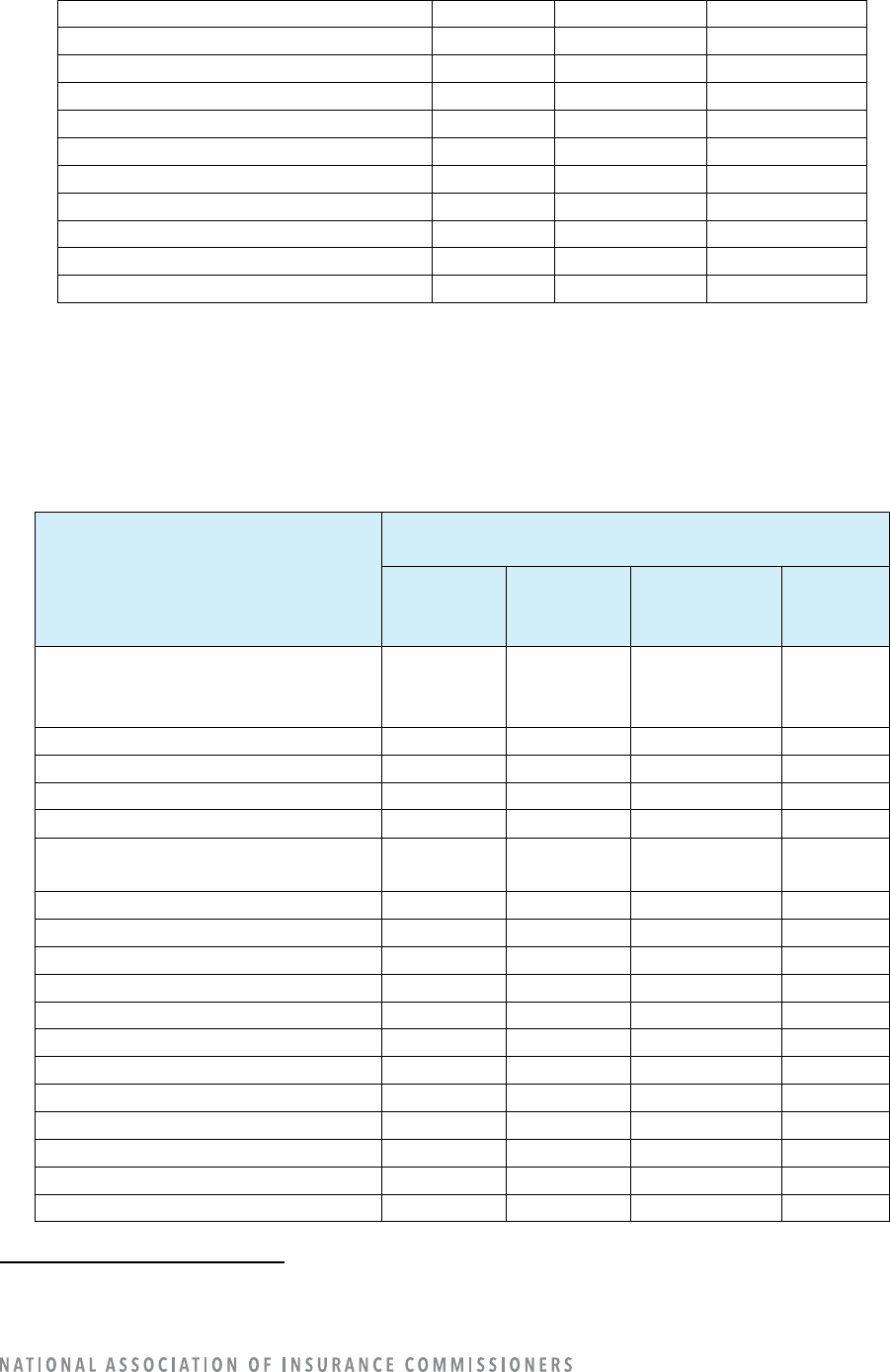

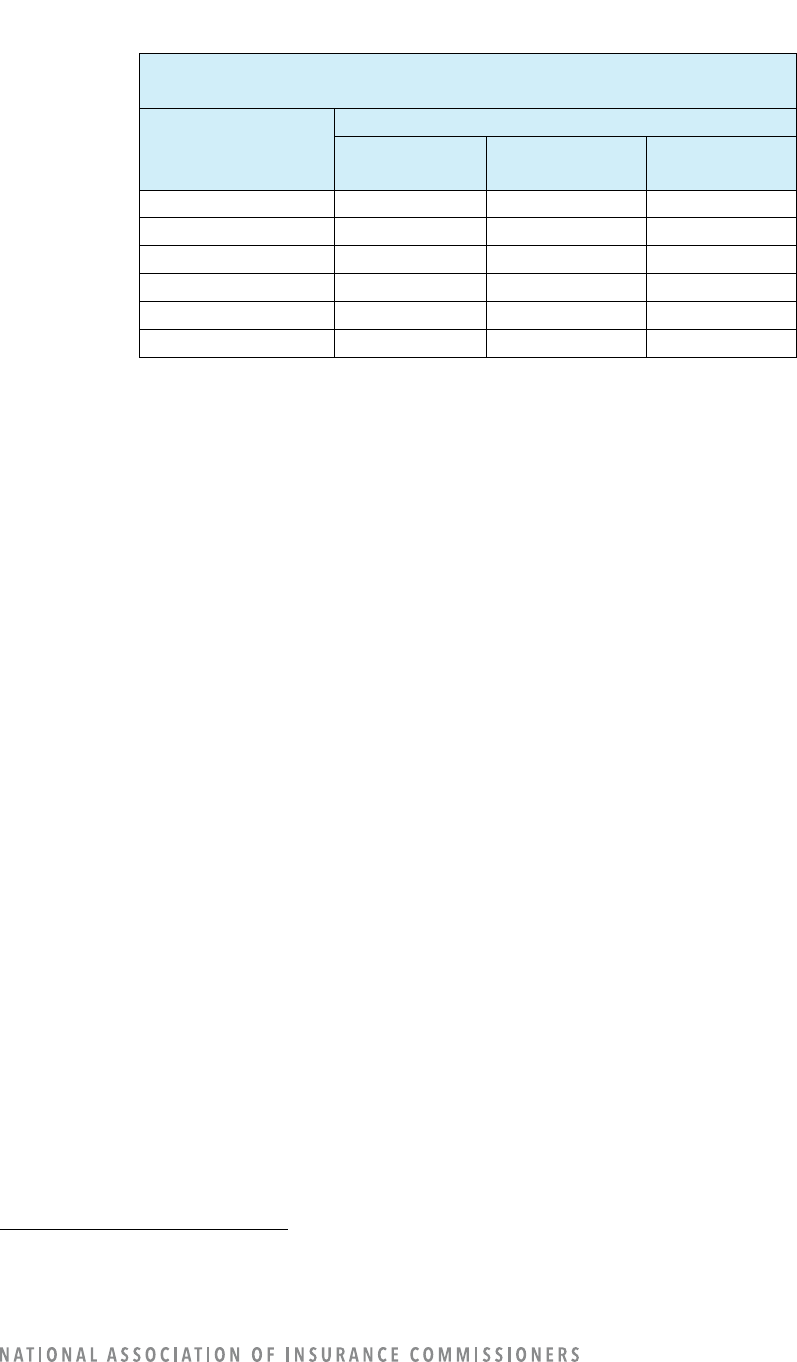

Table 10: Companies’ Use of Fraud-Detection Models

Number of Companies

| Fraud-Detection Model Uses | In Use | Research | Proof of Concept | Prototype | None (N/A) |
|---|---|---|---|---|---|
| Fast-Tracking of Likely Non-Fraudulent Claims | 10 | 15 | 3 | 1 | 164 |
| Referral of Claims for Further Investigation | 83 | 3 | 6 | 3 | 98 |
| Detect Medical Producer Fraud | 27 | 3 | 2 | 9 | 152 |
| Detect First-Party Liability | 10 | 2 | 2 | 1 | 178 |
| Detect Third-Party Liability | 17 | 2 | 2 | 1 | 171 |
| Other Fraud Detection-Related Functions | 4 | 2 | 12 | 4 | 171 |

For definitions, refer to Appendix C: Definitions Specific to Fraud Detection.

For definitions, refer to Appendix A: Guidance for Questions in Each Operational Area: Rating, Underwriting, Claims, Fraud Detection, Marketing, Loss Prevention.

The level of decisions influenced by AI/ML varies by model use. Most fraud detection model uses provide

support. For referral of claims for further investigation, there is an even split between augmentation and

support. (Refer to Table 11. Note that Table 11 differs from the previous tables because the data

represents the number of models instead of the number of companies.)

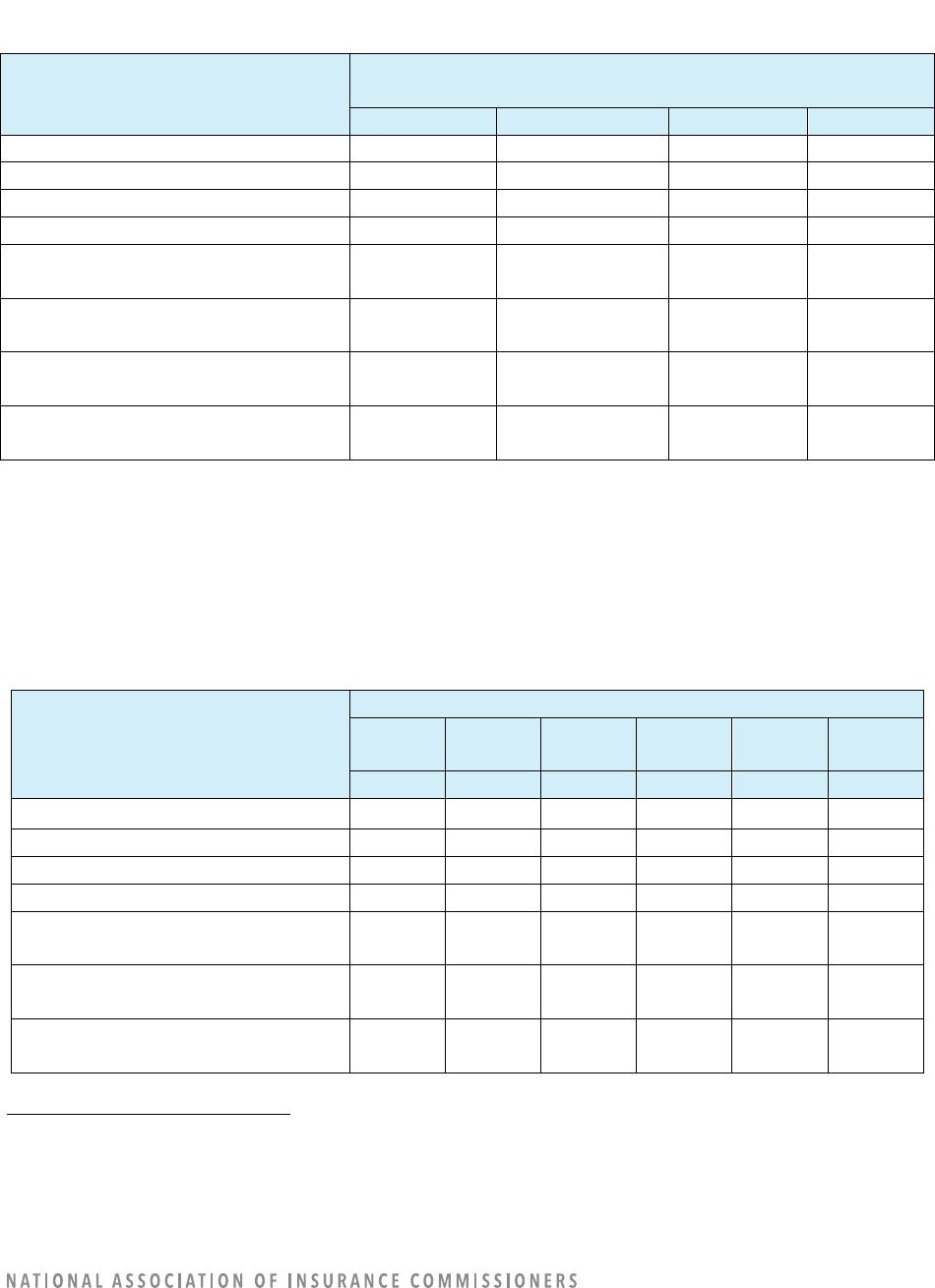

Table 11: Level of Decision-Making by Use of Fraud-Detection Models

Number of Models (In Use or Under Construction) by Level of Decisions Influenced by AI/ML

| Fraud-Detection Model Uses | Automation* | Augmentation* | Support* | Other |
|---|---|---|---|---|
| Fast-Tracking of Likely Non-Fraudulent Claims | 1 | 5 | 23 | 1 |
| Referral of Claims for Further Investigation | 0 | 89 | 93 | 2 |
| Detect Medical Producer Fraud | 0 | 17 | 44 | 0 |
| Detect First-Party Liability | 1 | 4 | 13 | 0 |
| Detect Third-Party Liability | 1 | 11 | 13 | 0 |
| Other Fraud Detection-Related Functions | 0 | 8 | 26 | 0 |

For definitions, refer to Appendix A: Guidance for Questions in Each Operational Area: Rating, Underwriting, Claims, Fraud Detection, Marketing, Loss Prevention.

*“Automation” was defined as no human intervention on execution. “Augmentation” was defined as a model that suggests an answer and advises the human making a decision. “Support” was defined as a model that provides information but does not suggest a decision or action.

Models to detect first-party and third-party liability tend to be developed by third parties, as do models for “Other Fraud Detection-Related Functions.” Models for all other fraud-detection uses are a mixture of in-house and third-party models. (Refer to Table 12.)


Table 12: Fraud-Detection Model Sources by Model Use

Model Source

| Fraud-Detection Model Uses | In-House # | In-House % | Third-Party # | Third-Party % | Total # | Total % |
|---|---|---|---|---|---|---|
| Fast-Tracking of Likely Non-Fraudulent Claims | 15 | 50% | 15 | 50% | 30 | 100% |
| Referral of Claims for Further Investigation | 120 | 65% | 64 | 34% | 184 | 100% |
| Detect Medical Producer Fraud | 39 | 64% | 22 | 36% | 61 | 100% |
| Detect First-Party Liability | 3 | 17% | 15 | 83% | 18 | 100% |
| Detect Third-Party Liability | 10 | 40% | 15 | 60% | 25 | 100% |
| Other Fraud Detection-Related Functions | 9 | 26% | 25 | 74% | 34 | 100% |

Data Elements

The survey was limited to the use of the “more advanced” AI/ML. Therefore, the data element information here does not represent the industry’s entire use of big data, which would require adding in the data element information from excluded models (e.g., regression-type models).

For fraud detection, the following five data elements were the most frequently reported as being used for

AI/ML:

• Loss experience (80 companies)

• Vehicle-specific data (68)

• Medical (67)

• Criminal conviction (43)

• Online media (29)

There are at least some companies using demographic (28 companies), geocoding (21), driving behavior

(6), personal financial information (3), consumer or other type of “score” (3), occupation (1), and

telematics (1) for fraud-detection purposes. Companies also reported using “other” nontraditional data

elements (12). Some of the other uses were: identification of fraudulent quotes and organized crime rings,

detection of prefill information, device risk, claims watch list, social network analysis, facial recognition,

and behavior models. (Refer to Table 13.)

Table 13: Companies’ Use of Fraud-Detection Data Elements

Number of Companies Using/Not Using the Data Element in a Fraud-Detection AI/ML Model*

| Fraud-Detection Data Elements | Yes | No | Blank |
|---|---|---|---|
| Criminal Conviction (Excluding Auto-Related Convictions) | 43 | 79 | 71 |
| Demographic | 28 | 94 | 71 |
| Driving Behavior | 6 | 116 | 71 |
| Education | 0 | 122 | 71 |
| Vehicle-Specific Data | 68 | 54 | 71 |
| Facial Detection/Recognition/Analysis | 0 | 122 | 71 |
| Geocoding | 21 | 101 | 71 |
| Natural Catastrophe | 0 | 122 | 71 |
| Job Stability | 0 | 120 | 73 |
| Income | 0 | 122 | 71 |
| Occupation | 1 | 121 | 71 |
| Personal Financial Information | 3 | 119 | 71 |
| Loss Experience | 80 | 42 | 71 |
| Medical | 67 | 55 | 71 |
| Online Media | 29 | 93 | 71 |
| Telematics | 1 | 121 | 71 |
| Voice Analysis | 0 | 122 | 71 |
| Consumer or Other Type of “Score” | 3 | 119 | 71 |
| Other Nontraditional Data Elements | 12 | 110 | 71 |

For definitions, refer to Appendix H: Data Use Table Definitions.

*The question is not whether the data element is used, but only whether the data element is used in an AI/ML model.

There are differences in data sources for the data elements. In fraud-detection models, criminal conviction, geocoding, and online media data are most often drawn from external data sources. Other data elements tend to come from internal data sources or a mix of internal and external sources. (Refer to Table 14.)

Table 14: Fraud-Detection Model Sources (Internal vs. Third Party) by Data Elements

Number of Companies Using the Data Element in a Fraud-Detection AI/ML Model*

| Fraud-Detection Data Elements | Internal Data Source | External Data Source | Both Internal and External Data Sources | Blank |
|---|---|---|---|---|
| Criminal Conviction (Excluding Auto-Related Convictions) | 0 | 36 | 7 | 150 |
| Demographic | 16 | 2 | 10 | 165 |
| Driving Behavior | 3 | 0 | 3 | 187 |
| Education | 0 | 0 | 0 | 193 |
| Vehicle-Specific Data | 35 | 2 | 31 | 125 |
| Facial Detection/Recognition/Analysis | 0 | 0 | 0 | 193 |
| Geocoding | 3 | 18 | 0 | 172 |
| Natural Catastrophe | 0 | 0 | 0 | 193 |
| Job Stability | 0 | 0 | 0 | 193 |
| Income | 0 | 0 | 0 | 193 |
| Occupation | 1 | 0 | 0 | 192 |
| Personal Financial Information | 0 | 3 | 0 | 190 |
| Loss Experience | 39 | 0 | 41 | 113 |
| Medical | 45 | 4 | 18 | 126 |
| Online Media | 0 | 18 | 11 | 164 |
| Telematics | 1 | 0 | 0 | 192 |
| Voice Analysis | 0 | 0 | 0 | 193 |
| Consumer or Other Type of “Score” | 1 | 2 | 0 | 190 |
| Other Nontraditional Data Elements | 12 | 0 | 0 | 181 |

For definitions, refer to Appendix H: Data Use Table Definitions.

Few companies reported using a consumer or other type of “score” as an input for fraud-detection

models. (Refer to Table 15.)

Table 15: Companies’ Use of Consumer or Other Type of “Score” as an Input for Fraud-Detection Data Elements

Number of Companies Using a Consumer or Other Type of “Score” as an Input

| Fraud-Detection Data Elements | Yes | No | Blank |
|---|---|---|---|
| Criminal Conviction (Excluding Auto-Related Convictions) | 0 | 64 | 129 |
| Demographic | 0 | 65 | 128 |
| Driving Behavior | 0 | 57 | 136 |
| Education | 0 | 57 | 136 |
| Vehicle-Specific Data | 1 | 75 | 117 |
| Facial Detection/Recognition/Analysis | 0 | 57 | 136 |
| Geocoding | 1 | 58 | 135 |
| Natural Catastrophe | 0 | 57 | 136 |
| Job Stability | 0 | 57 | 136 |
| Income | 0 | 57 | 136 |
| Occupation | 0 | 57 | 136 |
| Personal Financial Information | 0 | 57 | 136 |
| Loss Experience | 0 | 76 | 117 |
| Medical | 0 | 66 | 127 |
| Online Media | 0 | 57 | 136 |
| Telematics | 0 | 57 | 136 |
| Voice Analysis | 0 | 57 | 136 |
| Consumer or Other Type of “Score” | | | |
| Other Nontraditional Data Elements | 5 | 57 | 131 |

For definitions, refer to Appendix H: Data Use Table Definitions.

Refer to the “Customer Data Correction,” “Governance,” and “Third-Party” sections of this report for additional data analysis regarding company operations areas.

COMPANY OPERATION: MARKETING


Out of 193 reporting companies, 96 companies reported using AI/ML for marketing operations, and seven (7) reported having models under construction. So, approximately half of the companies are using AI/ML for marketing.
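The "approximately half" figure can be checked directly from the counts in the paragraph above. The following is a minimal sketch of that arithmetic; the variable and function names are hypothetical and chosen only for this illustration.

```python
REPORTING_COMPANIES = 193  # companies that responded to the survey

# Counts quoted in the paragraph above.
in_use = 96              # companies using AI/ML for marketing
under_construction = 7   # companies with marketing models under construction


def share(count, total=REPORTING_COMPANIES):
    """Fraction of reporting companies represented by `count`."""
    return count / total


in_use_share = share(in_use)
under_construction_share = share(under_construction)

print(f"In use: {in_use_share:.1%} of reporting companies")
print(f"Under construction: {under_construction_share:.1%} of reporting companies")
```

The two shares are reported separately here because the text does not say whether a company with models under construction may also already have models in use.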

Marketing Model Uses

Companies are using many marketing models for multiple uses. Companies use marketing models for

targeted online advertising (56 companies), identification of recipients of mail and phone advertising (42),

provision of offers to existing customers (42), and direct online sales (41). Only 19 companies are currently

using models for identification of potential customer groups, and only seven (7) companies are currently

using AI/ML for demand modeling. Companies are also using marketing models for other marketing-

related functions (46).

The uses of marketing models identified in Table 16 were options that could be selected in the survey

template. Companies noted some additional uses of marketing models in their write-in comments:

customer service, customer-related metrics, customer interactions using natural language processing

(NLP), mixed media modeling, marketing content variation, alternative quote recommendation, creative

optimization, budget and channel spend allocation, customer retention and acquisition (including lifetime

value), referrals, agency rank, and click analysis on third-party sites (web searching).

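Table 16: Companies’ Use of Marketing Models

Number of Companies

| Marketing Model Uses | In Use | Research | Proof of Concept | Prototype | None (N/A) |
|---|---|---|---|---|---|
| Targeted Online Advertising | 56 | 2 | 3 | 0 | 132 |
| Identification of Recipients of Mail or Phone Advertising | 42 | 2 | 0 | 0 | 149 |
| Provision of Offers to Existing Customers | 42 | 2 | 3 | 11 | 135 |
| Identification of Potential Customer Groups | 19 | 3 | 7 | 0 | 164 |
| Demand Modeling | 7 | 10 | 0 | 0 | 176 |
| Direct Online Sales | 41 | 5 | 0 | 0 | 147 |
| Other Marketing-Related Functions | 46 | 10 | 0 | 3 | 134 |

For definitions, refer to Appendix A: Guidance for Questions in Each Operational Area: Rating, Underwriting, Claims, Fraud Detection, Marketing, Loss Prevention.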

Many of the marketing models are automated with no human intervention on execution. Marketing models are mostly automated when used for targeted online advertising (136 models), direct online sales (88), provision of offers to existing customers (56), and other marketing-related functions (75). When identifying recipients of mail or phone advertising, there is most often augmentation (68), where a model provides an answer and advises the human who is making the decision. When identifying potential customer groups, the number of models is evenly split between all three levels of decision-making. Demand modeling is evenly split between augmentation and support to the human. (Refer to Table 17.)

For definitions, refer to Appendix D: Definitions Specific to Marketing.

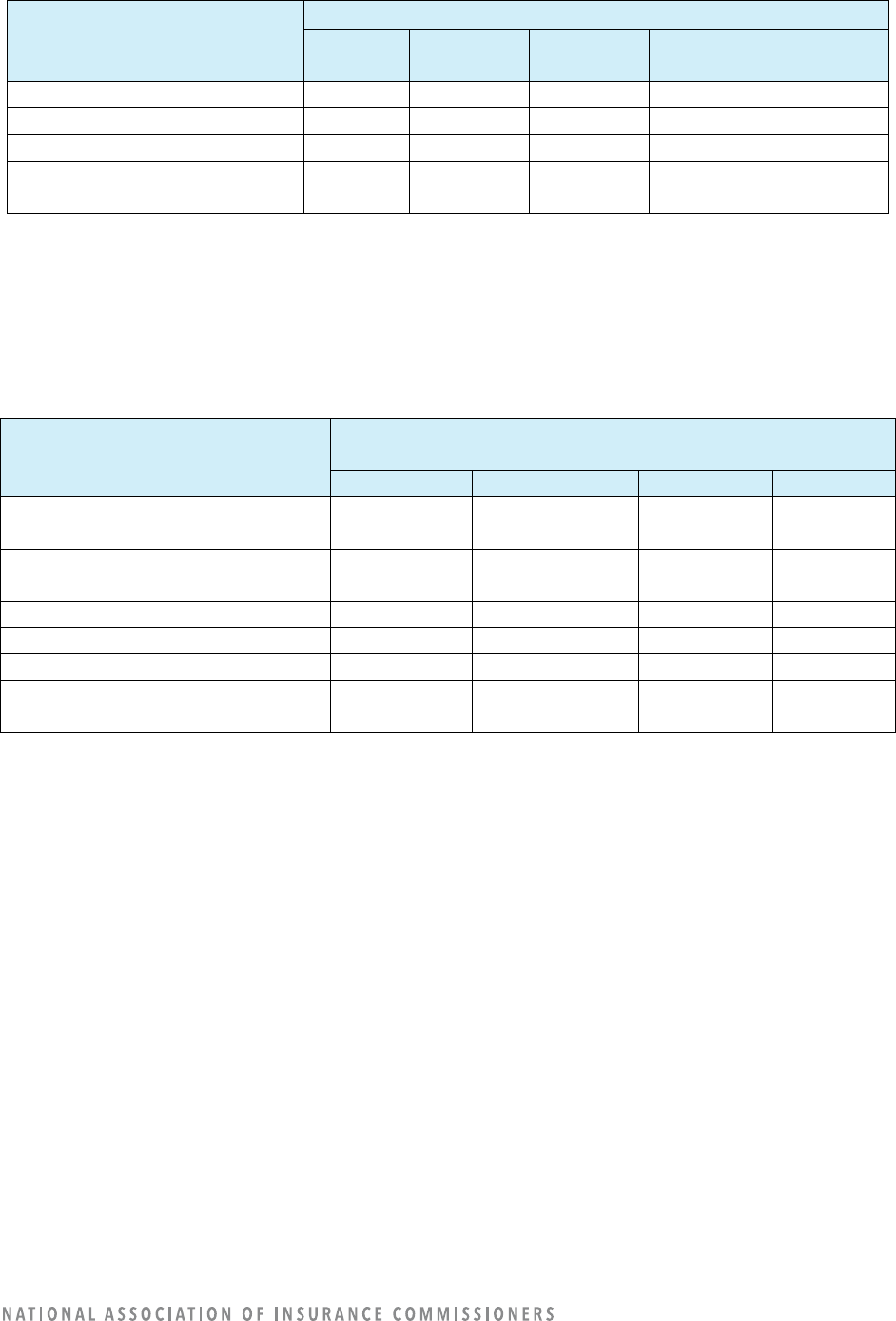

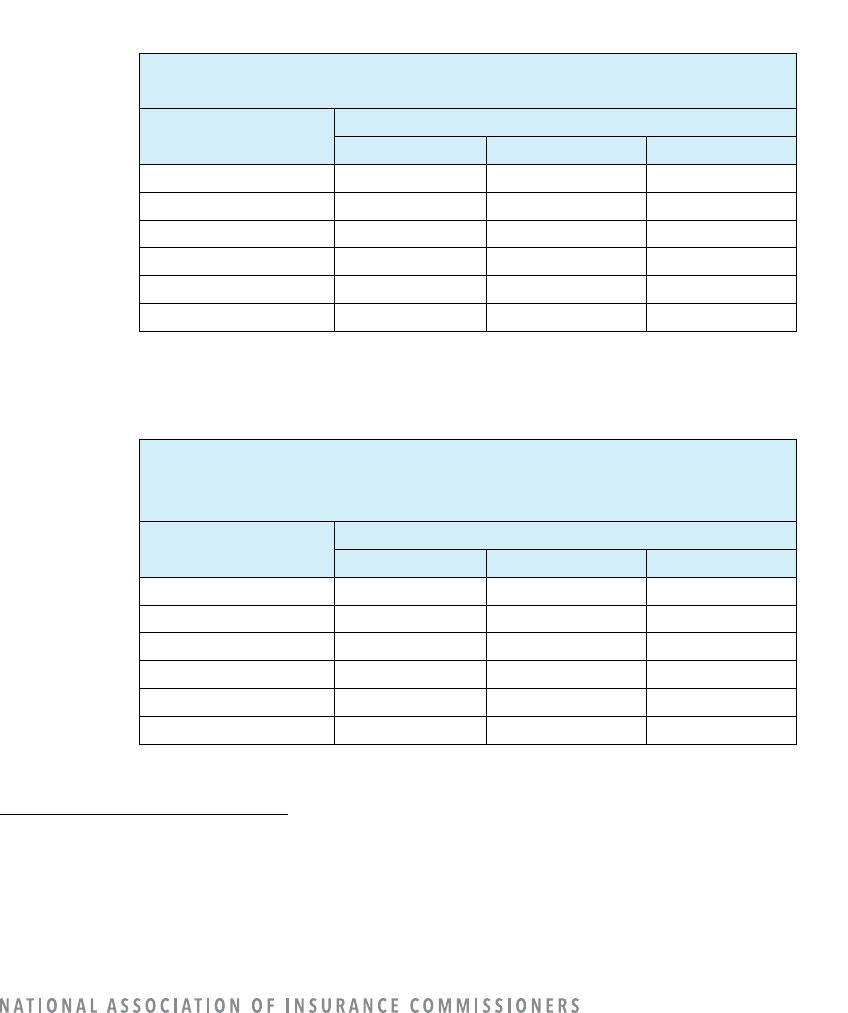

Table 17: Level of Decision-Making by Use of Marketing Models

Number of Models (In Use or Under Construction) by Level of Decisions Influenced by AI/ML

| Marketing Model Uses | Automation* | Augmentation* | Support* | Other |
|---|---|---|---|---|
| Targeted Online Advertising | 136 | 45 | 23 | 1 |
| Identification of Recipients of Mail or Phone Advertising | 28 | 68 | 23 | 1 |
| Provision of Offers to Existing Customers | 56 | 27 | 24 | 1 |
| Identification of Potential Customer Groups | 32 | 28 | 22 | 1 |
| Demand Modeling | 2 | 13 | 14 | 0 |
| Direct Online Sales | 88 | 40 | 12 | 5 |
| Other Marketing-Related Functions | 75 | 23 | 16 | 2 |

For definitions, refer to Appendix A: Guidance for Questions in Each Operational Area: Rating, Underwriting, Claims, Fraud Detection, Marketing, Loss Prevention.

*“Automation” was defined as no human intervention on execution. “Augmentation” was defined as a model that suggests an answer and advises the human making a decision. “Support” was defined as a model that provides information but does not suggest a decision or action.

Marketing models being used by insurance companies are developed in-house (with or without third-party assistance) and purchased from a third party in roughly equal measure. The two main exceptions are targeted online advertising, where third-party models predominate (91%), and the provision of offers to existing customers, where in-house models predominate (72%). (Refer to Table 18.)

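Table 18: Marketing Model Sources by Model Use

Model Source

| Marketing Model Uses | In-House # | In-House % | Third-Party # | Third-Party % | Total # | Total % |
|---|---|---|---|---|---|---|
| Targeted Online Advertising | 19 | 9% | 186 | 91% | 205 | 100% |
| Identification of Recipients of Mail or Phone Advertising | 46 | 38% | 74 | 62% | 120 | 100% |
| Provision of Offers to Existing Customers | 78 | 72% | 30 | 28% | 108 | 100% |
| Identification of Potential Customer Groups | 48 | 58% | 35 | 42% | 83 | 100% |
| Demand Modeling | 16 | 55% | 13 | 45% | 29 | 100% |
| Direct Online Sales | 76 | 52% | 69 | 48% | 145 | 100% |
| Other Marketing-Related Functions | 69 | 59% | 47 | 41% | 116 | 100% |

For definitions, refer to Appendix A: Guidance for Questions in Each Operational Area: Rating, Underwriting, Claims, Fraud Detection, Marketing, Loss Prevention.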


Data Elements

The survey was limited to the use of the “more advanced” AI/ML. Therefore, the data element information here does not represent the industry’s entire use of big data, which would require adding in the data element information from excluded models (e.g., regression-type models).

For marketing, the following five data elements were the most frequently reported as being used:

• Demographic (79 companies)

• Education (42)

• Consumer or other type of “score” (42)

• Geocoding (40)

• Vehicle-specific data (39)

There are at least some companies using driving behavior (33 companies), occupation (32), online media (29), loss experience (21), personal financial information (13), telematics (11), job stability (11), income (4), and natural catastrophe (1) for marketing purposes. Companies also reported using “other” nontraditional data elements (26). (Refer to Table 19.)

Table 19: Companies’ Use of Marketing Data Elements

Number of Companies Using/Not Using the Data Element in a Marketing AI/ML Model*

| Marketing Data Elements | Yes | No | Blank |
|---|---|---|---|
| Criminal Conviction (Excluding Auto-Related Convictions) | 0 | 128 | 65 |
| Demographic | 79 | 48 | 66 |
| Driving Behavior | 33 | 94 | 66 |
| Education | 42 | 85 | 66 |
| Vehicle-Specific Data | 39 | 88 | 66 |
| Facial Detection/Recognition/Analysis | 0 | 127 | 66 |
| Geocoding | 40 | 87 | 66 |
| Natural Catastrophe | 1 | 126 | 66 |
| Job Stability | 11 | 116 | 66 |
| Income | 4 | 123 | 66 |
| Occupation | 32 | 95 | 66 |
| Personal Financial Information | 13 | 114 | 66 |
| Loss Experience | 21 | 106 | 66 |
| Medical | 0 | 127 | 66 |
| Online Media | 29 | 98 | 66 |
| Telematics | 11 | 116 | 66 |
| Voice Analysis | 0 | 127 | 66 |
| Consumer or Other Type of “Score” | 42 | 99 | 52 |
| Other Nontraditional Data Elements | 26 | 101 | 66 |

For definitions, refer to Appendix H: Data Use Table Definitions.

*The question is not whether the data element is used, but only whether the data element is used in an AI/ML model.


There are differences in data sources for the data elements. For example, demographic, driving behavior, education, geocoding, job stability, occupation, loss experience, and telematics are most often sourced internally, while income, personal financial information, and consumer or other “score” were more frequently sourced externally. Other data elements, such as vehicle-specific data and online media, are sourced almost equally from both external and internal data. (Refer to Table 20.)

Table 20: Marketing Model Sources (Internal vs. Third Party) by Data Elements

Number of Companies Using the Data Element in a Marketing AI/ML Model*

| Marketing Data Elements | Internal Data Source | External Data Source | Both Internal and External Data Sources | Blank |
|---|---|---|---|---|
| Criminal Conviction (Excluding Auto-Related Convictions) | 0 | 0 | 0 | 193 |
| Demographic | 40 | 14 | 25 | 114 |
| Driving Behavior | 20 | 9 | 4 | 160 |
| Education | 21 | 6 | 15 | 151 |
| Vehicle-Specific Data | 20 | 14 | 5 | 154 |
| Facial Detection/Recognition/Analysis | 0 | 0 | 0 | 193 |
| Geocoding | 36 | 8 | 0 | 149 |
| Natural Catastrophe | 0 | 1 | 0 | 192 |
| Job Stability | 11 | 0 | 0 | 182 |
| Income | 0 | 4 | 0 | 189 |
| Occupation | 22 | 10 | 0 | 161 |
| Personal Financial Information | 0 | 6 | 7 | 180 |
| Loss Experience | 13 | 1 | 7 | 172 |
| Medical | 0 | 0 | 0 | 193 |
| Online Media | 14 | 15 | 0 | 164 |
| Telematics | 11 | 0 | 0 | 182 |
| Voice Analysis | 0 | 0 | 0 | 193 |
| Consumer or Other Type of “Score” | 11 | 31 | 0 | 151 |
| Other Nontraditional Data Elements | 24 | 2 | 0 | 167 |

For definitions, refer to Appendix H: Data Use Table Definitions.

A few companies use a consumer or other type of “score” as an input for the following marketing data elements: demographic (5 companies), occupation (4), and personal financial information (2). One company uses a consumer or other type of “score” as an input for each of the following marketing data elements: driving behavior, education, vehicle-specific data, income, and online media. (Refer to Table 21.)


Table 21: Companies’ Use of Consumer or Other Type of “Score” as an Input for Marketing Data Elements

Number of Companies Using a Consumer or Other Type of “Score” as an Input

| Marketing Data Elements | Yes | No | Blank |
|---|---|---|---|
| Criminal Conviction (Excluding Auto-Related Convictions) | 0 | 46 | 147 |
| Demographic | 5 | 61 | 127 |
| Driving Behavior | 1 | 45 | 147 |
| Education | 1 | 60 | 132 |
| Vehicle-Specific Data | 1 | 46 | 146 |
| Facial Detection/Recognition/Analysis | 0 | 46 | 147 |
| Geocoding | 0 | 59 | 134 |
| Natural Catastrophe | 0 | 46 | 147 |
| Job Stability | 0 | 46 | 147 |
| Income | 1 | 46 | 146 |
| Occupation | 4 | 40 | 149 |
| Personal Financial Information | 2 | 45 | 146 |
| Loss Experience | 0 | 46 | 147 |
| Medical | 0 | 46 | 147 |
| Online Media | 1 | 59 | 133 |
| Telematics | 0 | 47 | 146 |
| Voice Analysis | 0 | 46 | 147 |
| Consumer or Other Type of “Score” | | | |
| Other Nontraditional Data Elements | 0 | 60 | 133 |

For definitions, refer to Appendix H: Data Use Table Definitions.

Refer to the “Customer Data Correction,” “Governance,” and “Third-Party” sections of this report for

additional data analysis regarding company operations areas.

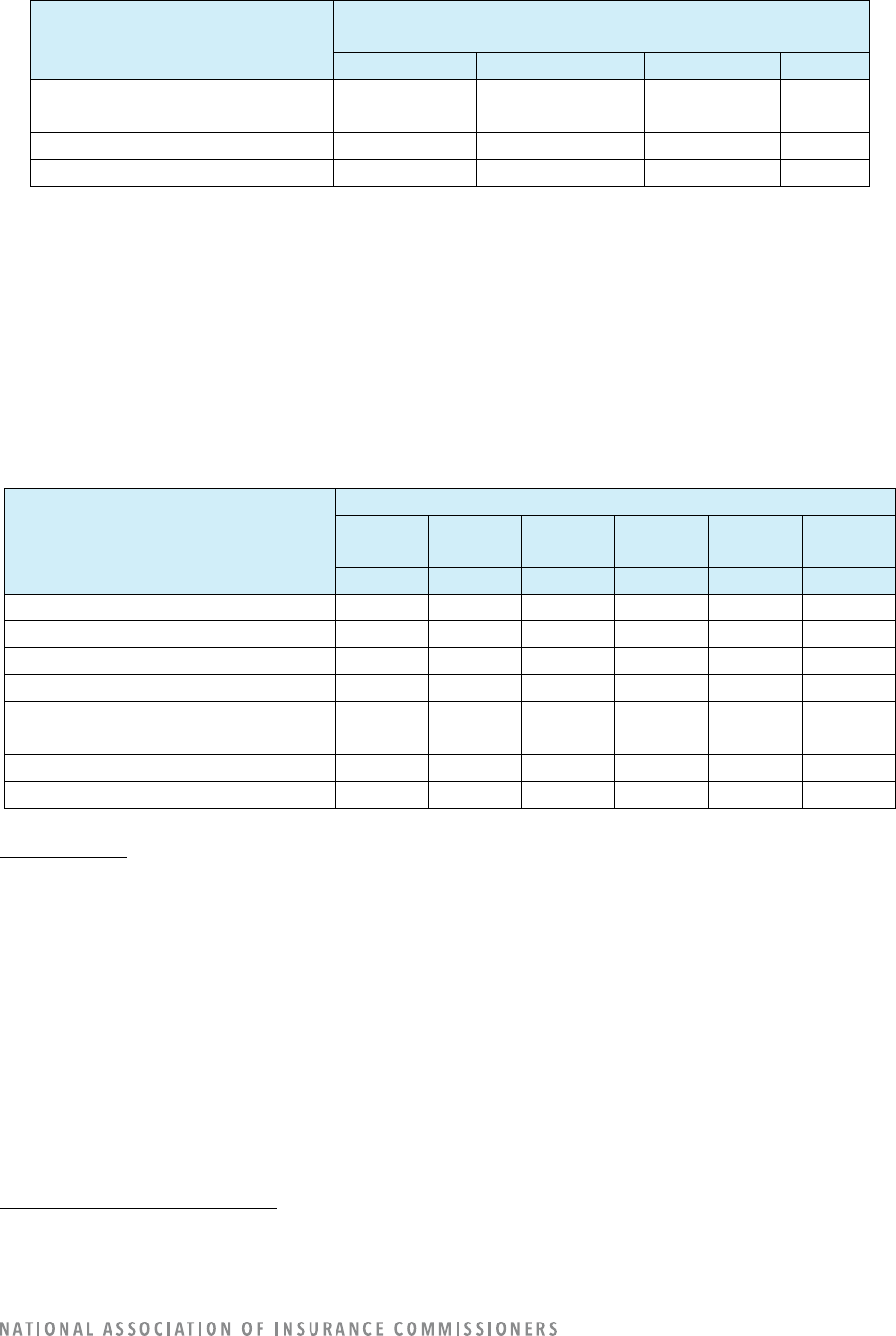

COMPANY OPERATION: RATING


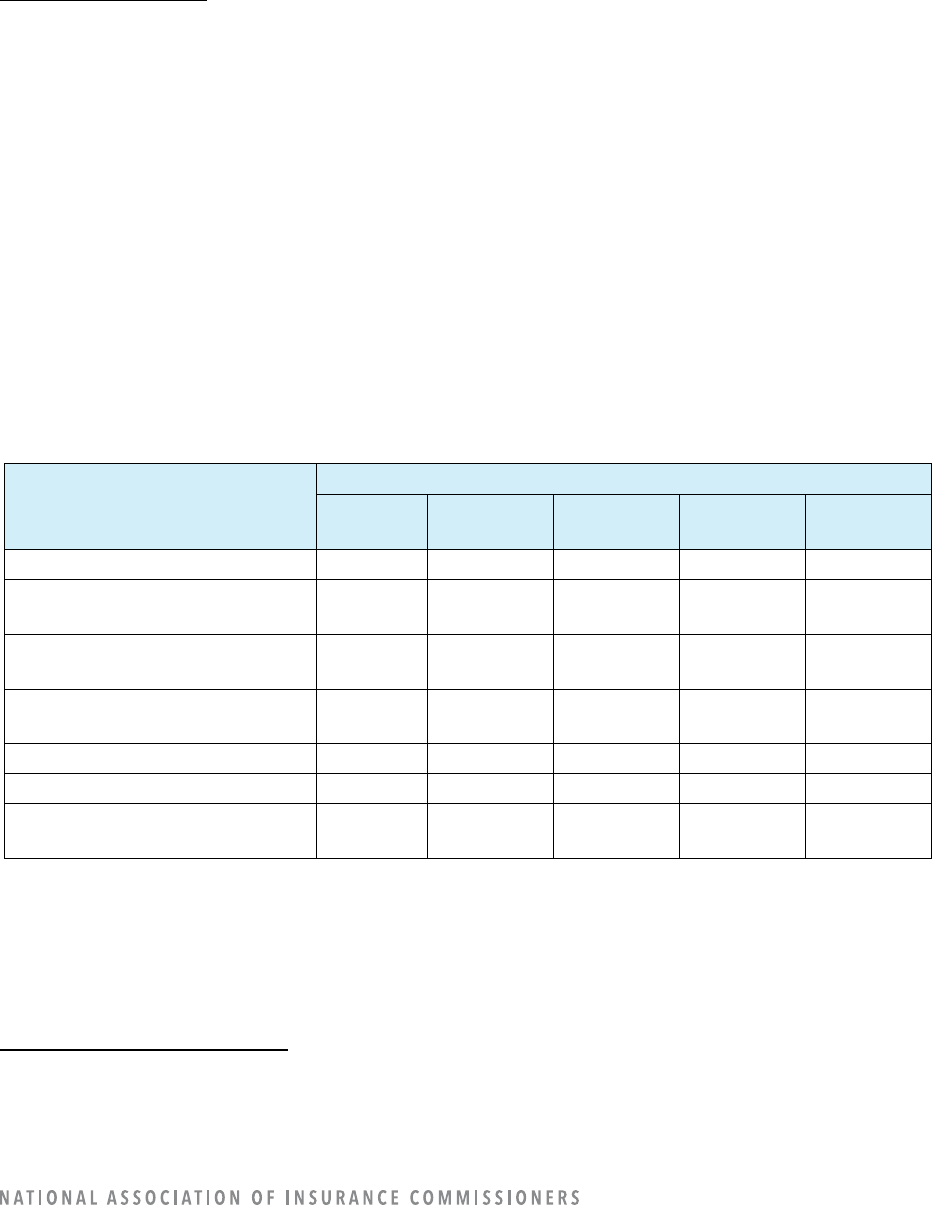

Out of 193 reporting companies, 52 companies reported using AI/ML for rating operations, and 25

reported having models under construction.

Rating Model Uses

While numbers are small, the most common use case within the rating area of operations is Rating Class

determination, with 37 companies indicating they have models either in use (23 companies) or under

construction (14). The second most common use case within the rating area of operations is numerical

relativity determination, with 27 companies indicating that they have models either in use (19) or under

construction (8). Only seven (7) companies reported using AI/ML models for retention modeling, with six

(6) companies reporting models under construction for the area. No companies reported using or having

plans to use AI/ML models for price optimization.

For definitions, refer to Appendix E: Definitions Specific to Rating.

The uses of rating models identified in Table 22 were options that could be selected in the survey

template. Companies noted some additional uses of rating models in their write-in comments: telematics,

close rate expectation, loss development expectation, loss performance monitoring, ground-up loss

prediction, and frequency trend forecasting. Additional write-ins were policy application pre-filling and

bad-debt mitigation.

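Table 22: Companies’ Use of Rating Models

Number of Companies

| Rating Model Uses | In Use | Research | Proof of Concept | Prototype | None (N/A) |
|---|---|---|---|---|---|
| Rating Class Determination | 23 | 9 | 0 | 5 | 156 |
| Price Optimization | 0 | 0 | 0 | 0 | 193 |
| Retention Modeling | 7 | 3 | 0 | 3 | 180 |
| Numerical Relativity Determination | 19 | 4 | 0 | 4 | 166 |
| Other Rate-Related Functions | 24 | 4 | 0 | 0 | 165 |

For definitions, refer to Appendix A: Guidance for Questions in Each Operational Area: Rating, Underwriting, Claims, Fraud Detection, Marketing, Loss Prevention.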
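The "in use" and "under construction" figures quoted for rating models can be reproduced from Table 22. The sketch below assumes that "under construction" means the sum of the Research, Proof of Concept, and Prototype columns, which matches the figures in the text (14 for rating class determination, 8 for numerical relativity determination, 6 for retention modeling). `TABLE_22` and `pipeline` are hypothetical names introduced for illustration.

```python
# (In Use, Research, Proof of Concept, Prototype) per rating model use,
# copied from Table 22.
TABLE_22 = {
    "Rating Class Determination": (23, 9, 0, 5),
    "Price Optimization": (0, 0, 0, 0),
    "Retention Modeling": (7, 3, 0, 3),
    "Numerical Relativity Determination": (19, 4, 0, 4),
    "Other Rate-Related Functions": (24, 4, 0, 0),
}


def pipeline(in_use, research, proof_of_concept, prototype):
    """Return (in use, under construction, combined) company counts.

    Assumption: "under construction" = research + proof of concept + prototype.
    """
    under_construction = research + proof_of_concept + prototype
    return in_use, under_construction, in_use + under_construction


totals = {use: pipeline(*row) for use, row in TABLE_22.items()}

for use, (used, building, combined) in totals.items():
    print(f"{use}: {used} in use, {building} under construction, {combined} combined")
```

Adding the two counts is safe here because each company appears in exactly one status column per row (every row of Table 22 sums to 193).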

Rating models are most often automated, requiring no human intervention for execution. The types of models most often automated are retention models and other rate-related functions. Rating Class determinations and numerical relativity determinations tend to be augmented, where the model suggests an answer and advises a human who is making a decision. (Refer to Table 23.)

Table 23: Level of Decision-Making by Use of Rating Models

Number of Models (In Use or Under Construction) by Level of Decisions Influenced by AI/ML

| Rating Model Uses | Automation* | Augmentation* | Support* | Other |
|---|---|---|---|---|
| Rating Class Determination | 9 | 33 | 8 | 3 |
| Price Optimization | 0 | 0 | 0 | 0 |
| Retention Modeling | 22 | 0 | 5 | 2 |
| Numerical Relativity Determination | 10 | 21 | 9 | 2 |
| Other Rate-Related Functions | 29 | 2 | 27 | 0 |

For definitions, refer to Appendix A: Guidance for Questions in Each Operational Area: Rating, Underwriting, Claims, Fraud Detection, Marketing, Loss Prevention.

*“Automation” was defined as no human intervention on execution. “Augmentation” was defined as a model that suggests an answer and advises the human making a decision. “Support” was defined as a model that provides information but does not suggest a decision or action.

Rating models tend to be developed by companies and not third parties. About 75%–90% of the rating

models are developed by companies “in-house.” (Refer to Table 24.)


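Table 24: Rating Model Sources by Model Use

Model Source

| Rating Model Uses | In-House # | In-House % | Third-Party # | Third-Party % | Total # | Total % |
|---|---|---|---|---|---|---|
| Rating Class Determination | 47 | 89% | 6 | 11% | 53 | 100% |
| Price Optimization | 0 | 0% | 0 | 0% | 0 | 100% |
| Retention Modeling | 25 | 86% | 4 | 14% | 29 | 100% |
| Numerical Relativity Determination | 33 | 79% | 9 | 21% | 42 | 100% |
| Other Rate-Related Functions | 44 | 76% | 14 | 24% | 58 | 100% |

For definitions, refer to Appendix A: Guidance for Questions in Each Operational Area: Rating, Underwriting, Claims, Fraud Detection, Marketing, Loss Prevention.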

Data Elements

The survey was limited to the use of the “more advanced” AI/ML. Therefore, the data element information

here does not represent the industry’s entire use of big data (which would require adding in the data

element information from excluded models; e.g., regression-type models, etc.).

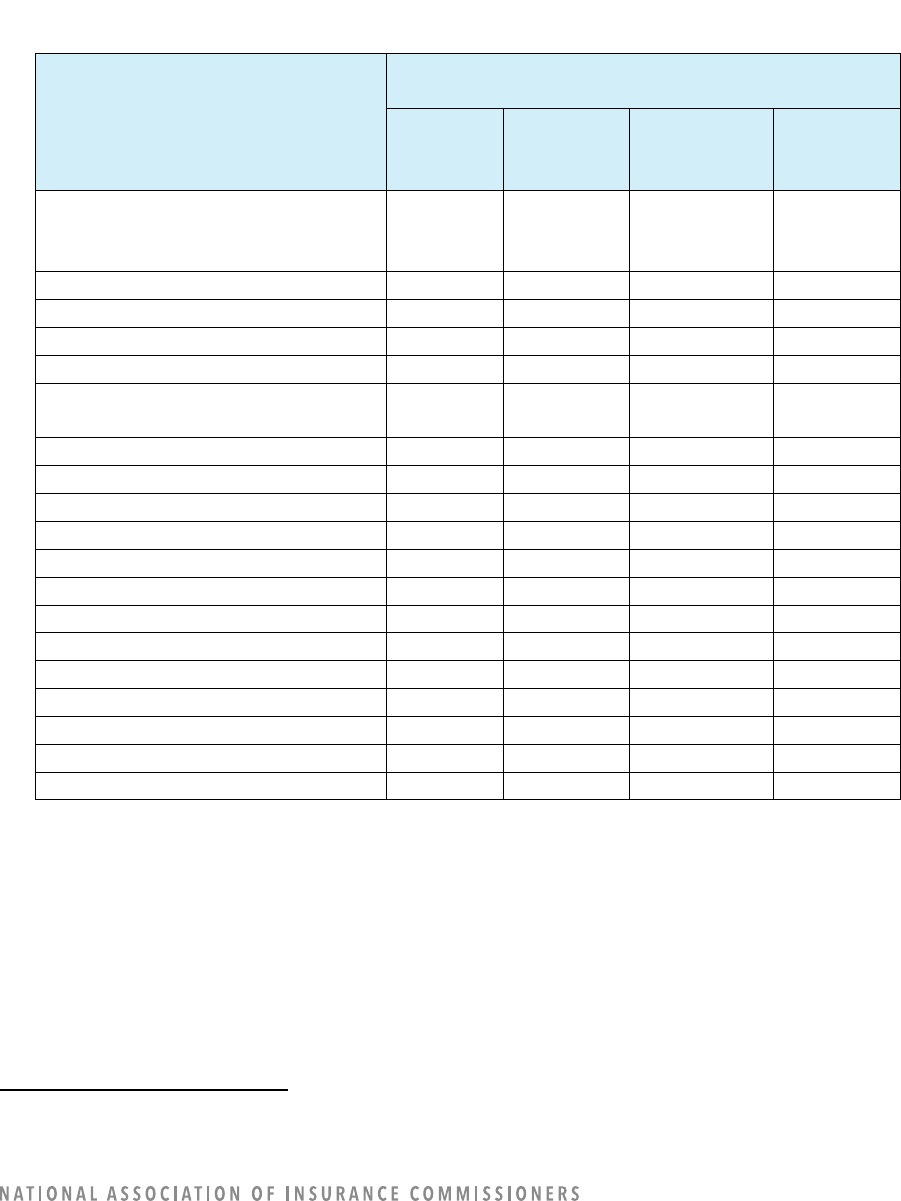

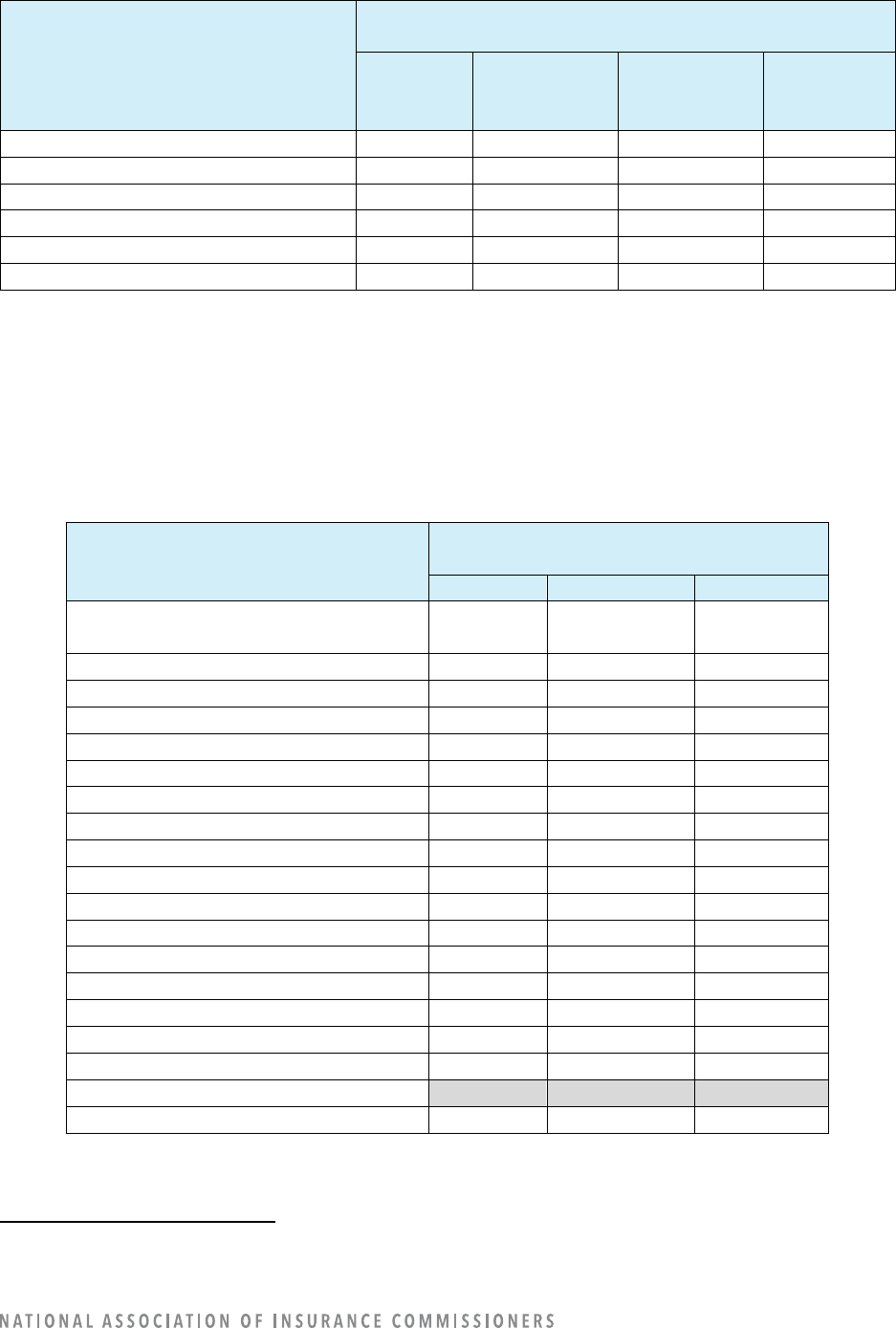

For rating, the following five data elements were the most frequently reported as being used for AI/ML:

• Vehicle-specific data (40 companies)

• Loss experience (35)

• Driving behavior (33)

• Demographic (30)

• Telematics (27)

There are at least some companies using a consumer or other type of “score” (21 companies), personal financial information (14), geocoding (11), education (7), natural catastrophe (6), and occupation (6) for rating purposes. Companies also reported using “other” nontraditional data elements (6). (Refer to Table 25.)

Table 25: Companies’ Use of Rating Data Elements

Number of Companies Using/Not Using the Data Element in a Rating AI/ML Model*

| Rating Data Elements | Yes | No | Blank |
|---|---|---|---|
| Criminal Conviction (Excluding Auto-Related Convictions) | 0 | 113 | 80 |
| Demographic | 30 | 83 | 80 |
| Driving Behavior | 33 | 80 | 80 |
| Education | 7 | 106 | 80 |
| Vehicle-Specific Data | 40 | 73 | 80 |
| Facial Detection/Recognition/Analysis | 0 | 113 | 80 |
| Geocoding | 11 | 102 | 80 |
| Natural Catastrophe | 6 | 107 | 80 |
| Job Stability | 0 | 113 | 80 |
| Income | 0 | 113 | 80 |
| Occupation | 6 | 107 | 80 |
| Personal Financial Information | 14 | 99 | 80 |
| Loss Experience | 35 | 78 | 80 |
| Medical | 0 | 113 | 80 |
| Online Media | 0 | 113 | 80 |
| Telematics | 27 | 86 | 80 |
| Voice Analysis | 0 | 113 | 80 |
| Consumer or Other Type of “Score” | 21 | 94 | 78 |
| Other Nontraditional Data Elements | 6 | 107 | 80 |

For definitions, refer to Appendix H: Data Use Table Definitions.

*The question is not whether the data element is used, but only whether the data element is used in an AI/ML model.

There are differences in data sources for the data elements. For example, driving behavior, telematics, natural catastrophe, and a consumer or other “score” tend to be externally sourced, while vehicle-specific data, loss experience, and occupation are more frequently sourced internally. Other data elements, such as geocoding, personal financial information, and demographic information, are sourced from both external and internal data. (Refer to Table 26.)

Table 26: Rating Model Sources (Internal vs. Third Party) by Data Elements

Number of Companies Using the Data Element in a Rating AI/ML Model*

| Rating Data Elements²⁸ | Internal Data Source | External Data Source | Both Internal and External Data Sources | Blank |
| --- | --- | --- | --- | --- |
| Criminal Conviction (Excluding Auto-Related Convictions) | 0 | 0 | 0 | 193 |
| Demographic | 11 | 6 | 13 | 163 |
| Driving Behavior | 0 | 27 | 6 | 160 |
| Education | 7 | 0 | 0 | 186 |
| Vehicle-Specific Data | 20 | 6 | 14 | 153 |
| Facial Detection/Recognition/Analysis | 0 | 0 | 0 | 193 |
| Geocoding | 5 | 6 | 0 | 182 |
| Natural Catastrophe | 0 | 6 | 0 | 187 |
| Job Stability | 0 | 0 | 0 | 193 |
| Income | 0 | 0 | 0 | 193 |
| Occupation | 6 | 0 | 0 | 187 |
| Personal Financial Information | 7 | 7 | 0 | 179 |
| Loss Experience | 26 | 0 | 9 | 158 |
| Medical | 0 | 0 | 0 | 193 |
| Online Media | 0 | 0 | 0 | 193 |
| Telematics | 1 | 9 | 17 | 166 |
| Voice Analysis | 0 | 0 | 0 | 193 |
| Consumer or Other Type of “Score” | 4 | 17 | 0 | 172 |
| Other Nontraditional Data Elements | 0 | 6 | 0 | 187 |

²⁸ For definitions, refer to Appendix H: Data Use Table Definitions.
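One way to summarize Table 26 is to label each data element by the source category reported by the most companies. The sketch below is illustrative only and is not how the report derived its narrative: the rows are transcribed from Table 26 (blank counts omitted), and `TABLE_26`, `LABELS`, and `dominant_source` are hypothetical names. A plurality label is a coarse summary, so it may group an element differently than the narrative does.

```python
# Counts transcribed from Table 26: (internal, external, both) per element.
# Blank counts are omitted; only elements with at least one user are listed.
TABLE_26 = {
    "Demographic": (11, 6, 13),
    "Driving Behavior": (0, 27, 6),
    "Education": (7, 0, 0),
    "Vehicle-Specific Data": (20, 6, 14),
    "Geocoding": (5, 6, 0),
    "Natural Catastrophe": (0, 6, 0),
    "Occupation": (6, 0, 0),
    "Personal Financial Information": (7, 7, 0),
    "Loss Experience": (26, 0, 9),
    "Telematics": (1, 9, 17),
    "Consumer or Other Type of Score": (4, 17, 0),
    "Other Nontraditional Data Elements": (0, 6, 0),
}

LABELS = ("internal", "external", "both")


def dominant_source(counts):
    """Return the source label with the highest count, or 'tie' if shared."""
    top = max(counts)
    winners = [label for label, count in zip(LABELS, counts) if count == top]
    return winners[0] if len(winners) == 1 else "tie"


if __name__ == "__main__":
    for element, counts in TABLE_26.items():
        print(f"{element}: {dominant_source(counts)}")
```

Small counts make these labels fragile: for geocoding, a single company separates "internal" from "external".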

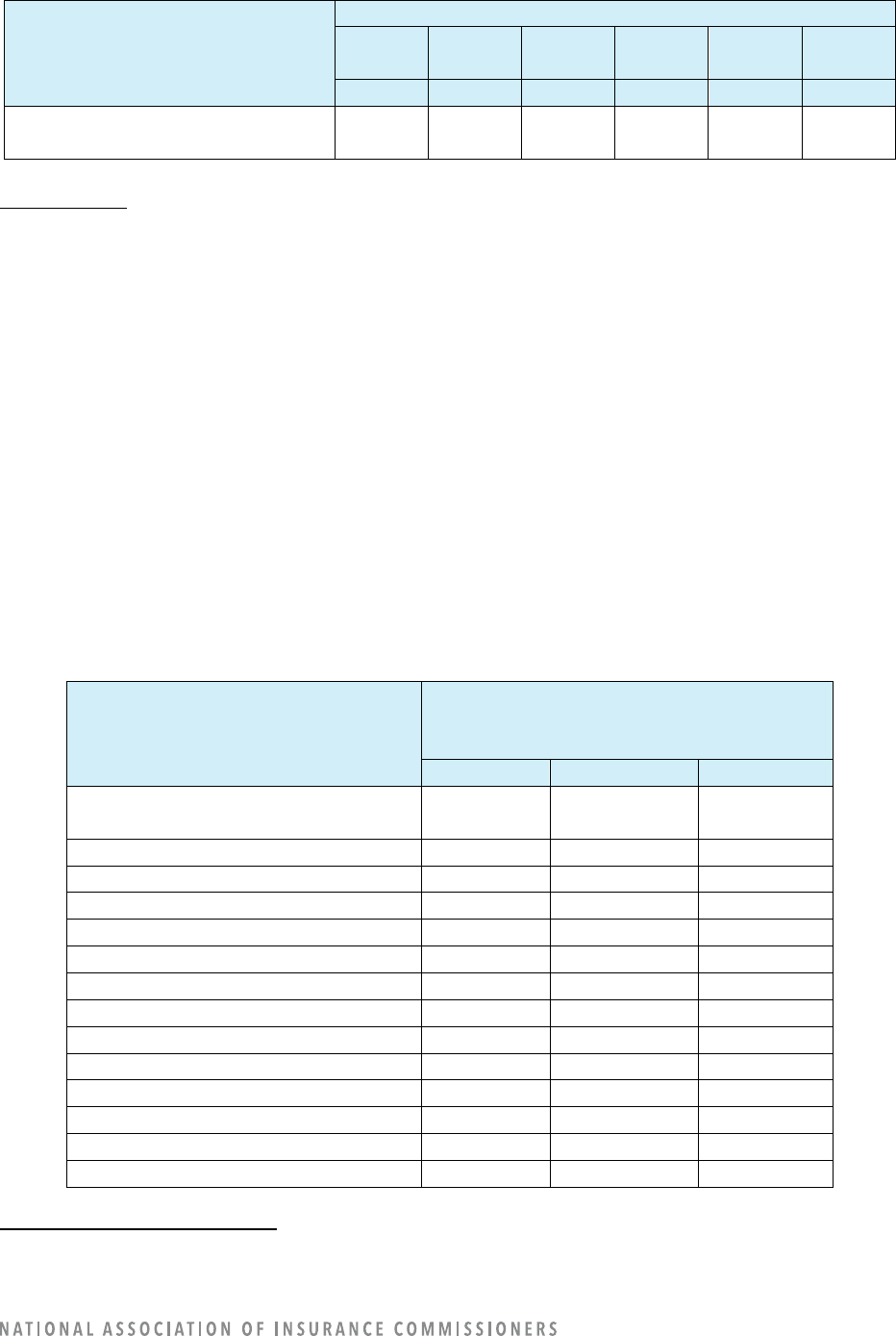

Most companies do not use a consumer or other type of “score” as a data element. Table 27 illustrates that the only rating data elements for which a consumer or other type of “score” was listed as an input are as follows: demographic (4 companies), driving behavior (4), vehicle-specific data (1), and personal financial information (4). The numbers are low; recall that the AI/ML definition excludes the most often used rating models.

Table 27: Companies’ Use of Consumer or Other Type of “Score” as an Input for Rating Data Elements

Number of Companies Using a Consumer or Other Type of “Score” as an Input

| Rating Data Elements²⁹ | Yes | No | Blank |
| --- | --- | --- | --- |
| Criminal Conviction (Excluding Auto-Related Convictions) | 0 | 31 | 162 |
| Demographic | 4 | 36 | 153 |
| Driving Behavior | 4 | 29 | 160 |
| Education | 0 | 31 | 162 |
| Vehicle-Specific Data | 1 | 32 | 160 |
| Facial Detection/Recognition/Analysis | 0 | 31 | 162 |
| Geocoding | 0 | 31 | 162 |
| Natural Catastrophe | 0 | 33 | 160 |
| Job Stability | 0 | 31 | 162 |
| Income | 0 | 31 | 162 |
| Occupation | 0 | 31 | 162 |
| Personal Financial Information | 4 | 33 | 156 |
| Loss Experience | 0 | 37 | 156 |
| Medical | 0 | 31 | 162 |
| Online Media | 0 | 31 | 162 |
| Telematics | 0 | 47 | 146 |
| Voice Analysis | 0 | 31 | 162 |
| Consumer or Other Type of “Score” | | | |
| Other Nontraditional Data Elements | 0 | 36 | 157 |

²⁹ For definitions, refer to Appendix H: Data Use Table Definitions.

Refer to the “Customer Data Correction,” “Governance,” and “Third-Party” sections of this report for additional data analysis regarding company operations areas.

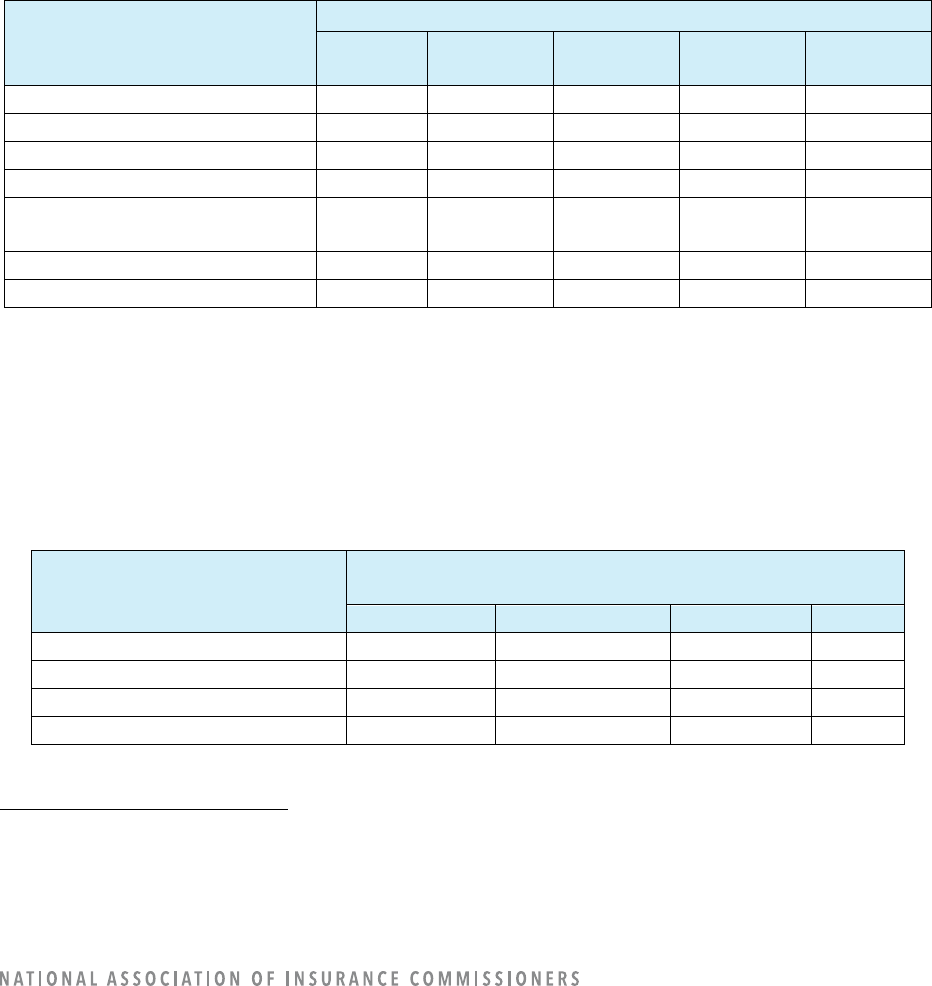

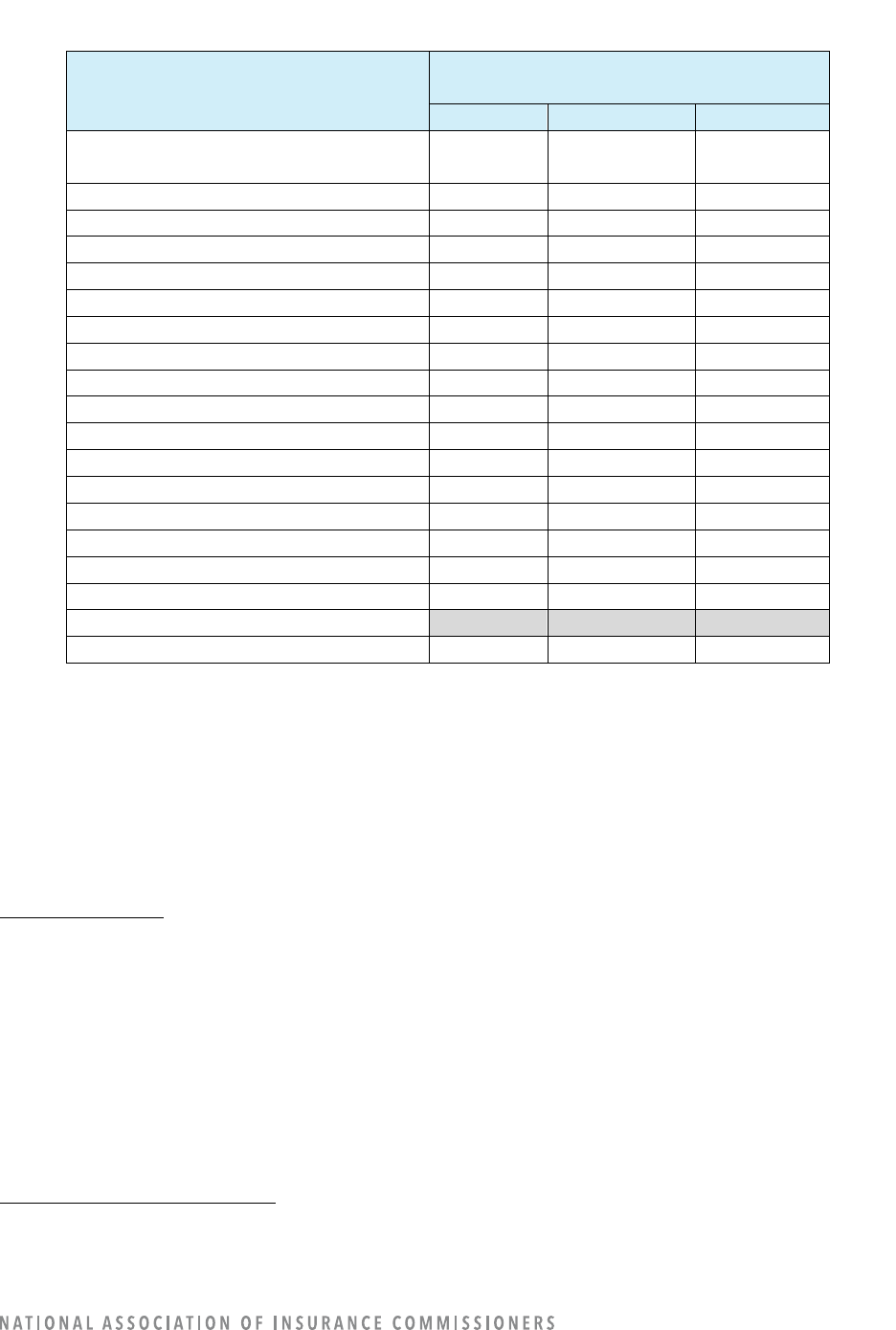

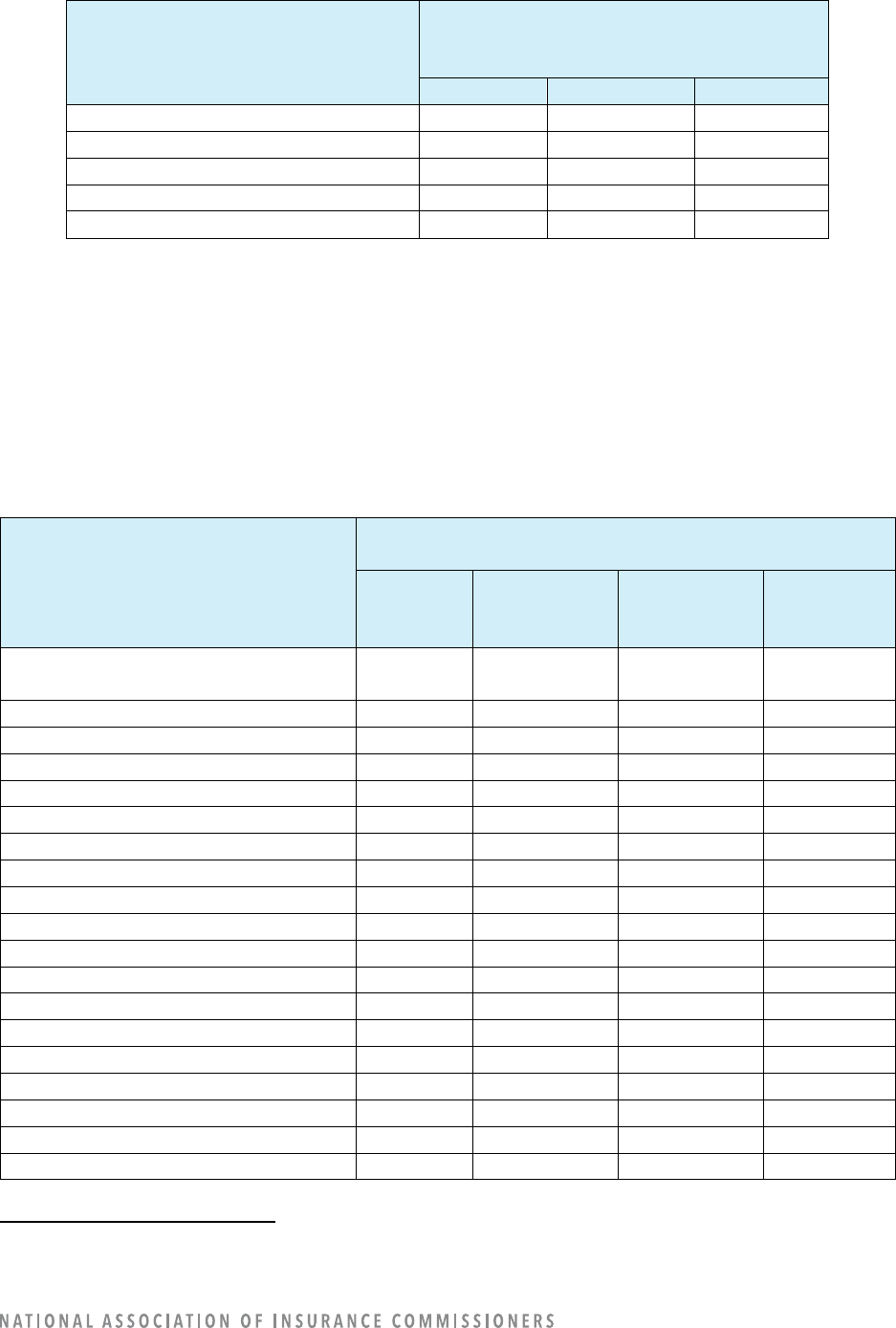

COMPANY OPERATION: UNDERWRITING

30

Out of 193 reporting companies, 34 companies reported using AI/ML for underwriting operations, and 25 reported having models under construction.

Underwriting Model Uses

Most underwriting models in use are reported in the “all other” use category of other underwriting-